//DEVIL//

Member

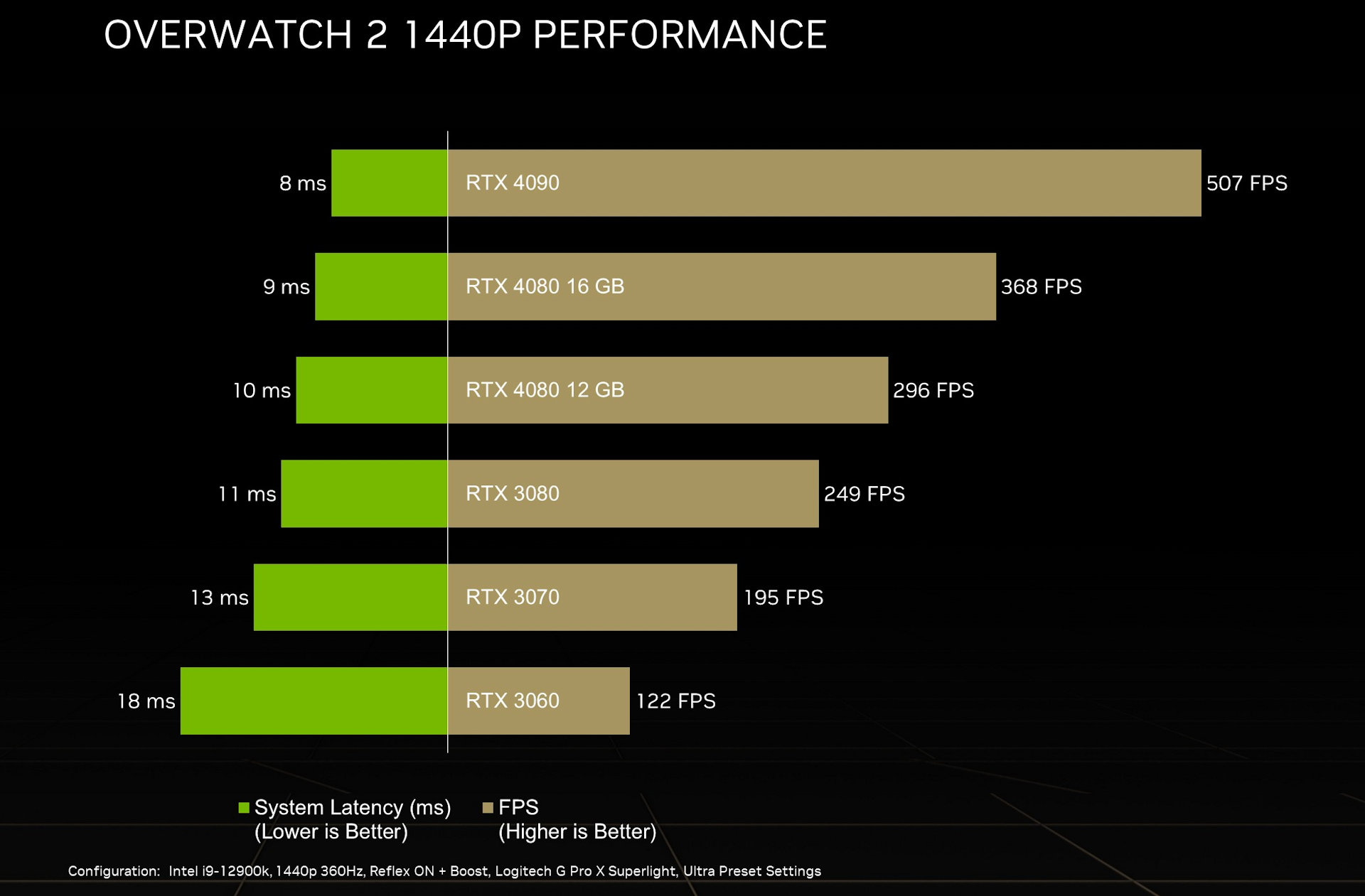

Of a 3080 at 2k. There is a difference of 30 to 40 frames between the 3090 and the 3080 for this game at 2k. Looks like it does provide nearly double the fps.

If this game is anything to go by, the 3090 is on par with the 4080 12 GB.

Which is in line with previous gens and what I said before. The 4080 12 GB (aka 4070) has about the same performance as the 3090 / 3090 Ti (with a 5 to 10 frame difference, which won't mean anything).

The same goes for the difference between the 3070 and the 2080 Ti.

With that being said, I still want to buy the 4090 (I think). I am motivated, but not much, because I still think my 3090 FE is not only more than enough for me even at 4K, it also looks sexy and is well built (and, well, I got it for $800 Canadian lol).