cinnamonandgravy

Member

was expecting more.

will be interesting to see real world thermals.

TDP dont mean a thing.

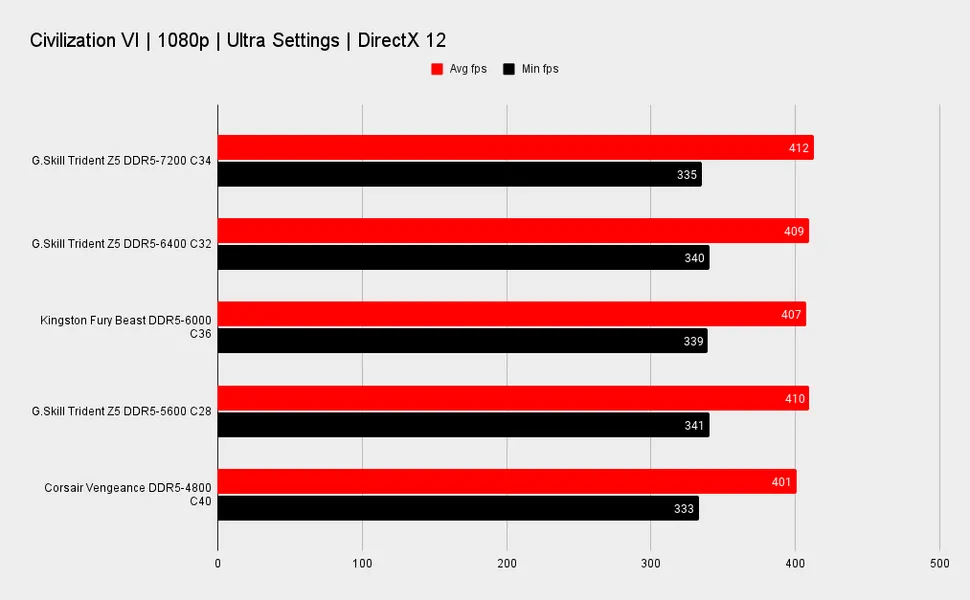

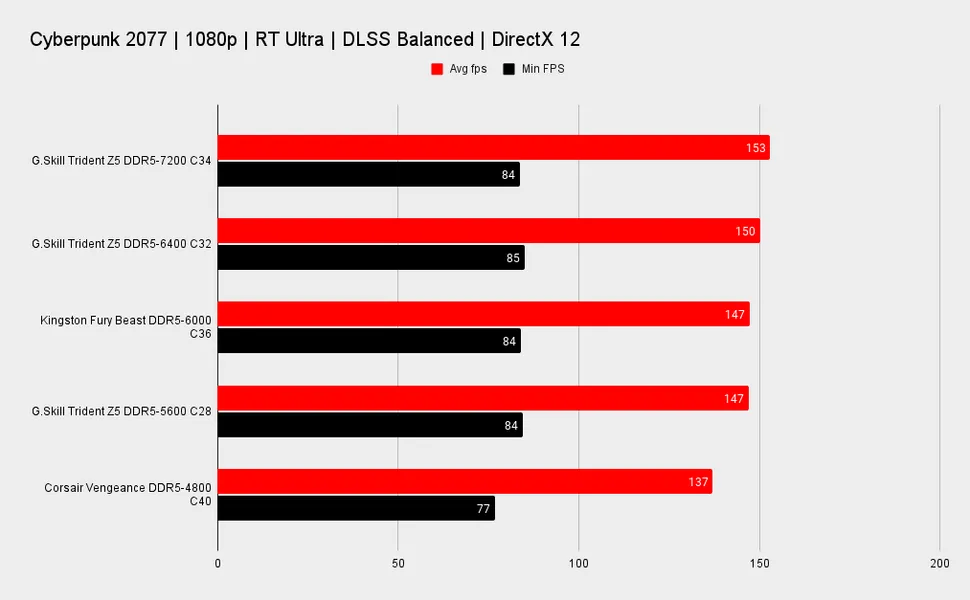

There's always exceptions. And I'm not saying DDR5 6000 is terrible, it's good.

I have an i9-13900k paired with 64GB (2x32GB) of DDR5-6000-CL30 RAM. This RAM has lower latency, which is great, and it keeps me within a few FPS of the highest speed RAM for far less money.

Your broad, sweeping claims are ridiculous. I don't know if you're just ignorant to the technology, or if you're an elitist pushing an elitist ideology. Either way, comments like this show that you're out of touch with the subject matter and your audience.

It seems slow in the world of the i9 13900K (a $570-$720 (KS) CPU). Today you can get DDR5 6800 32 GB for $200. If you're buying an i9 why wouldn't you just pay the extra $50 or whatever it is to get the faster RAM? That's all I'm saying.

And I get what you're trying to say in the original post but in what world is 6000MHz DDR5 slow?

I get 6400 with a simple button click to turn on XMP.

Only hardcore overclockers are going to push memory much higher than that.

The common gamer doesn't know how to tweak impedances and voltages.

Most people in the PC space don't even buy the 13900K. The point I'm seeing the other poster make is that there is room left on the table for Intel's CPU with regard to RAM where there is not for AMD's chip. If neither had bottlenecks, it is reasonable to assume the average gap would be less than the 6% that the AMD review leak curated.

Enabling XMP is not just about the speed the memory can achieve.

It's a mixture of the memory, the memory controller on the CPU and the motherboard.

Most people who buy a CPU, even if it's a 13900K, don't care about overclocking. We are a very small minority.

For the vast majority of people, 6000MT/s is pretty good already. And it's already an overclock over the Intel spec.

Definitely correct. I wouldn't gimp myself if I went that route. It's crazy how the prices have come down on some of these kits; ones that were ~400 are under 200 now. Every review I've watched is from somewhere around the release of DDR5, and none of the prices are close to what they said at the time last year.

It seems slow in the world of the i9 13900K (a $570-$720 (KS) CPU). Today you can get DDR5 6800 32 GB for $200. If you're buying an i9 why wouldn't you just pay the extra $50 or whatever it is to get the faster RAM? That's all I'm saying.

I got 6400 for like 160. Was surprised it was so cheap.

Definitely correct. I wouldn't gimp myself if I went that route. It's crazy how the prices have come down on some of these kits; ones that were ~400 are under 200 now. Every review I've watched is from somewhere around the release of DDR5, and none of the prices are close to what they said at the time last year.

I got my GSKILL 6000 CL32 for 150, worth it. I only went for 32GB just due to the dual channel support and I'm not sure I need 64GB yet.

I got 6400 for like 160. Was surprised it was so cheap.

I mean the two are related. More power, more heat.

was expecting more.

will be interesting to see real world thermals.

TDP dont mean a thing.

There's always exceptions. And I'm not saying DDR5 6000 is terrible, it's good.

But I am saying it holds Intel back in AMD's numbers and allows AMD to claim 6%, where that number would have otherwise been lower...

I see that you do have higher capacity, where faster speeds aren't really available (that I saw when I just searched now) so my statement doesn't apply to you, but if you had the choice, wouldn't you have paid $40 more if you could have gotten 64 GB 2x32 DDR5 6400 C32 instead?

It seems slow in the world of the i9 13900K (a $570-$720 (KS) CPU). Today you can get DDR5 6800 32 GB for $200. If you're buying an i9 why wouldn't you just pay the extra $50 or whatever it is to get the faster RAM? That's all I'm saying.

144Hz and only really newer games like Cyberpunk with RT on, and certain emulators (RPCS3). Honestly the need for faster CPUs is totally overrated today. We've had around 50-75% more IPC in ~7 years, whereas in that same timespan back in the 90s you were getting 200-300% more IPC. When a CPU goes from 200MHz to 1GHz in 7 years, that's absurd linear clock speed gains, never mind the raw IPC where clock for clock that 1GHz chip is substantially faster than the old CPU. People today have no idea how hard we've stagnated. Yeah, more cores is nice for doing multitasking and compiling shaders, but games are still largely single thread bound and perf hasn't gone up enough in the last decade.

What is your monitor refresh rate, and what games are you struggling to run today?
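For anyone who wants to put the stagnation claim on a per-year footing, here is a rough sketch that converts a total gain over a span of years into a compound yearly rate. The inputs are just the figures quoted in the post above (5x clock in 7 years, 50-75% IPC in 7 years); the function name is made up for illustration, and none of this is measured data.

```python
def annualized_growth_pct(total_multiplier: float, years: float) -> float:
    """Compound yearly growth (in percent) that yields total_multiplier after `years`."""
    return (total_multiplier ** (1.0 / years) - 1.0) * 100.0


if __name__ == "__main__":
    # 200MHz -> 1GHz is a 5x clock increase over 7 years (figure from the post).
    print(f"90s clock speed: {annualized_growth_pct(5.0, 7):.1f}% per year")
    # +50% and +75% IPC over ~7 years (figures from the post).
    print(f"recent IPC, low end: {annualized_growth_pct(1.50, 7):.1f}% per year")
    print(f"recent IPC, high end: {annualized_growth_pct(1.75, 7):.1f}% per year")
```

This only compares the numbers as stated in the post; it says nothing about whether those numbers are right.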

That's fair, I just wanted insight into an actual 13900K owner like yourself.

I dunno man. It seems like you're assuming that people have an unlimited budget. These components aren't cheap. If someone is intelligent then they're going to make a budget for what they can spend, and they will stay within that budget even if it means not having the latest and greatest.

If you can get the best CPU alongside really good RAM then it makes more sense to do that and just upgrade the RAM down the line IF you feel like the upgrade will be worth it.

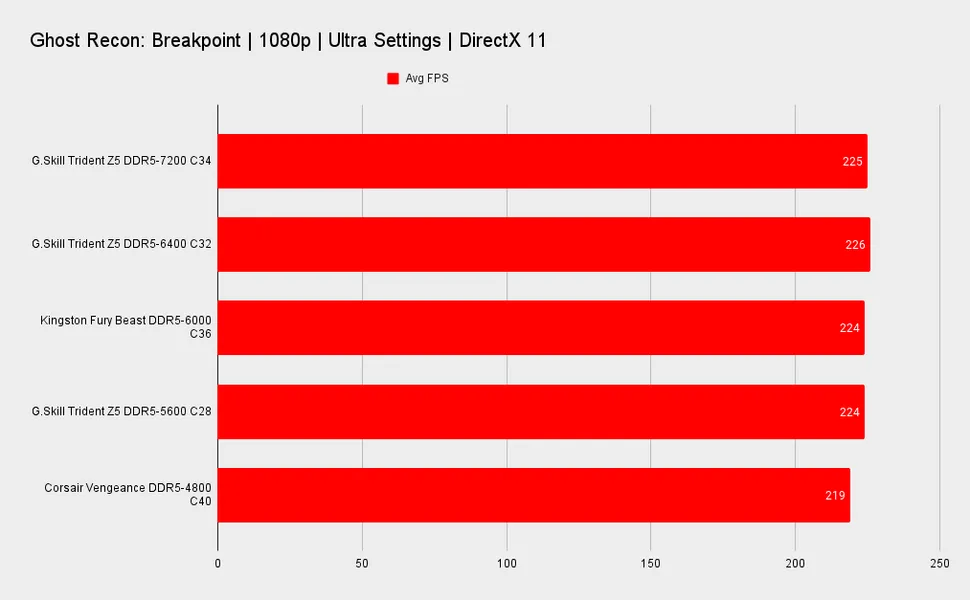

My statement only applies to you if you saw the above prices when you were looking for RAM and still went with the middle option.

But for real, in no universe should an FPS gain of 3% (when the FPS is already over 200 regardless and the difference is 7 whole FPS) be the reason that you drop 30% more on RAM. As has already been stated, you're just throwing your money away at that point. If you have unlimited funds, that's awesome. Go for it. But most people on this forum aren't millionaires, and I think it is silly for you to proclaim that 6000MHz RAM with an i9-13900k is nonsensical.
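The trade-off being argued here can be written down as plain arithmetic. This is only a sketch: the $150 and $200 prices are the 2x16 figures mentioned elsewhere in this thread, while the 233 and 240 FPS values are hypothetical numbers picked to match "7 FPS, about 3%, already over 200". The helper names are made up for illustration.

```python
def percent_change(old: float, new: float) -> float:
    """Relative change from old to new, in percent."""
    return (new - old) / old * 100.0


def dollars_per_extra_fps(price_old: float, price_new: float,
                          fps_old: float, fps_new: float) -> float:
    """Extra money spent for each additional frame per second."""
    return (price_new - price_old) / (fps_new - fps_old)


if __name__ == "__main__":
    # Hypothetical FPS values; prices are the 2x16 kit prices quoted in the thread.
    fps_slow_kit, fps_fast_kit = 233.0, 240.0
    price_slow_kit, price_fast_kit = 150.0, 200.0

    print(f"FPS gain: {percent_change(fps_slow_kit, fps_fast_kit):.1f}%")
    print(f"Price increase: {percent_change(price_slow_kit, price_fast_kit):.1f}%")
    print(f"Cost per extra FPS: "
          f"${dollars_per_extra_fps(price_slow_kit, price_fast_kit, fps_slow_kit, fps_fast_kit):.2f}")
```

Whether that cost per frame is worth it is exactly the budget question the two posters disagree on; the code only makes the ratio explicit.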

yeah because we buy a 700$ cpu to run full hd...

Is there something bad about 1080p benchmarks? It makes the most sense for a CPU test. Otherwise, there are other non-CPU related factors that influence the test.

Intel must be spooked if they have people doing damage control 3 days before the reviews of these new AMD 3D chips.

That's fair, I just wanted insight into an actual 13900K owner like yourself.

I'm not talking about infinite money.

RAM prices of your capacity are as follows. If you had to pick today which would you choose?

2x32 Pricing

DDR5 5600C28 $260

DDR5 6000C30 $310 (what you have today)

DDR5 6400C32 $360

If you sell and buy a faster kit you'll likely lose another $50-$100. May as well have spent an extra $50 up front.

My statement only applies to you if you saw the above prices when you were looking for RAM and still went with the middle option.

Wouldn't you pay $50 for the faster RAM? If not wouldn't you save $50 for the slightly slower RAM? If not why would you still go with the middle option?

DDR5 6800C34 2x16 is $200. $50 more than DDR5 6000C30. I'm not talking the expensive stuff... just with that extra $50 Intel gains a few percent vs. AMD's benchmark.

It's $50. If $50 breaks the back of an i9 build something is wrong...

Bruh. Read my post. People have budgets. If the $50 extra puts you over budget then you sacrifice something. It would be more idiotic of someone to go over their budget for RAM than to sell and re-purchase the RAM later on when they have the budget for it.

It's $50. If $50 breaks the back of an i9 build something is wrong...

If $50 of a $1500 upgrade is that big of a deal I'm still trying to figure out why you wouldn't have gone for the $50 cheaper 5600C28 if it's only slightly slower.

If I budget $1,500 to upgrade my PC build, nothing is wrong when I choose not to go over that budget, because I'm not an irresponsible child. Apparently you'll say anything to avoid admitting that you're wrong, and that there are legitimate scenarios in which someone would be justified in not spending extra on the RAM.

If the price is that big of a deal I'm still trying to figure out why you didn't go for the $50 cheaper if it's only around 1% slower...

You don't have to justify yourself, I'm only asking because I'm trying to understand.

Why do you think I need to justify my purchase to some random person on the internet who asserts his ill-thought opinions as if they're somehow fact? I bought the RAM that I bought because that's the RAM that I wanted.

You don't have to justify yourself, I'm only asking because I'm trying to understand.

I've spent $50 more on faster RAM in the past and I'm in the midrange...

I thought that an i9 buyer wouldn't think twice about spending $50 for better RAM.

So what I'm getting is that the extra $50 for the 6400 would have broken the back of the budget for the i9 build. That is fascinating, this is the first time I heard this.

Why would I buy slower RAM if I can afford the faster RAM? Conversely, why would someone buy the faster RAM if they can't afford it? I am not trying to be mean here, but the things you're trying to understand are basic common sense. Why would someone buy the RTX 4090 instead of the RTX 4080? Why would someone only buy an RTX 4080 instead of an RTX 4090? The answer to these questions always revolves around money, and whether or not the purchase is worth it to that individual. There's nothing more for you to figure out.

If I budget $1,500 to upgrade my PC build, nothing is wrong when I choose not to go over that budget, because I'm not an irresponsible child. Apparently you'll say anything to avoid admitting that you're wrong, and that there are legitimate scenarios in which someone would be justified in not spending extra on the RAM.

These are obviously not real world scenarios. They are the most flexible benchmarks that can be extrapolated to get a relative comparison between products. Testing at higher resolutions makes competing products harder to tell apart.

yeah because we buy a 700$ cpu to run full hd...

I get it. This is how you really test in gaming. But lol, that is also stupid, because no matter what, the GPU will be a bottleneck (especially when you have something like a 4090).

The 5600C28, as it has the lowest latency, which benefits games more than benchmark programs.

If you had to pick today which would you choose?

2x32 Pricing

DDR5 5600C28 $260

DDR5 6000C30 $310 (what you have today)

DDR5 6400C32 $360
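Since latency keeps coming up, here is a quick sketch of the usual back-of-the-envelope numbers for the kits in that list. It assumes the common rule of thumb that first-word CAS latency in nanoseconds is CL x 2000 / (MT/s), and that peak bandwidth for one 64-bit module is MT/s x 8 bytes. It ignores every other timing, the memory controller, and the motherboard, so treat it as a rough comparison and not a benchmark. The function names are made up for illustration.

```python
def cas_latency_ns(mt_per_s: int, cl: int) -> float:
    """First-word CAS latency in nanoseconds: CL cycles at a clock of (MT/s) / 2 MHz."""
    return cl * 2000.0 / mt_per_s


def peak_bandwidth_gb_s(mt_per_s: int) -> float:
    """Theoretical peak bandwidth of one 64-bit (8-byte) module, in GB/s."""
    return mt_per_s * 8 / 1000.0


if __name__ == "__main__":
    # (transfer rate in MT/s, CAS latency) for the kits named in the thread.
    kits = [(5600, 28), (6000, 30), (6400, 32), (6800, 34)]
    for mt_per_s, cl in kits:
        print(f"DDR5 {mt_per_s}C{cl}: "
              f"{cas_latency_ns(mt_per_s, cl):.1f} ns CAS, "
              f"{peak_bandwidth_gb_s(mt_per_s):.1f} GB/s per module")
    # By this simple measure each kit in the list works out to 10.0 ns;
    # only the bandwidth figure differs between them.
```

By this one metric the kits are tied on CAS latency and differ in bandwidth, so any real-world latency difference would have to come from secondary timings or tuning that this sketch does not model.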

CL30 6000 easy work.

That's fair, I just wanted insight into an actual 13900K owner like yourself.

I'm not talking about infinite money.

RAM prices of your capacity are as follows. If you had to pick today which would you choose?

2x32 Pricing

DDR5 5600C28 $260

DDR5 6000C30 $310 (what you have today)

DDR5 6400C32 $360

The 7800X3D is probably gonna be the chip that benefits the most from 3D-V versus the 7700X.

If true I'm curious why the V-cache benefits aren't as dramatic here as they were with the 5800X3D.

The 7950X3D only has V-cache on one complex, not both complexes, so likely there's some weirdness going on at a software level.

Seems like a logical solution.

Seems the way AMD is dealing with this is by having an option in the UEFI to select which CCX has priority.

My guess is that if we select the CCX with cache, game threads will be allocated first to that CCX. But then, if using a program with many threads, it will spill over to the next CCX.
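To make that guess concrete, here is a toy model of "fill the preferred complex first, then spill over". This is not AMD's scheduler or any real driver logic; it only illustrates the behaviour guessed at above. The 8 cores per complex matches the 7950X3D's two 8-core dies, while the labels "cache" and "frequency" and the function name are made up for illustration.

```python
def assign_threads(n_threads: int, preferred: str = "cache",
                   cores_per_complex: int = 8) -> dict:
    """Toy model: place one thread per core, preferred complex first, then spill over."""
    complexes = ("cache", "frequency")  # hypothetical labels for the two complexes
    if preferred not in complexes:
        raise ValueError(f"preferred must be one of {complexes}")

    order = [preferred] + [c for c in complexes if c != preferred]
    placement = {c: 0 for c in complexes}
    remaining = n_threads
    for name in order:
        take = min(remaining, cores_per_complex)
        placement[name] = take
        remaining -= take
    # Threads beyond one per core; a real system would share cores via SMT.
    placement["oversubscribed"] = remaining
    return placement


if __name__ == "__main__":
    print(assign_threads(6))    # a game with few threads stays on the cache complex
    print(assign_threads(12))   # a heavier workload spills onto the second complex
    print(assign_threads(12, preferred="frequency"))
```

In this model a 6-thread game never leaves the preferred complex, which is the outcome the priority option is presumably meant to encourage.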

It's getting physically impossible to cool Intel's CPUs even if you use liquid nitrogen. There are limits to the laws of physics and Intel trying to pile on 8 P-cores and 14 E-cores and make the CPU draw 300W at load is absolutely near the limit, if not already well past it.

Intel's gonna win the race in the end though like a true champion entering the champion's hall in a crowbar-like watts-empowering manner!

In case of doubt, add even more watts and declare their CPU as the winner again..

I upgraded from a 3900X to a 5800X3D and got a very noticeable improvement in 1% lows even in 4K with my 3090. Games felt better, smoother, more responsive, and stuttered less.

4090 1080p.

I could probably use my 3700x forever if I play at 4k. I will always be gpu limited lol

Is there something bad about 1080p benchmarks? It makes the most sense for a CPU test. Otherwise, there are other non-CPU related factors that influence the test.

Really disappointing results. With 13900KF's being $519 it seems really stupid to delay what is their only desirable part (the 7800X3D). They're going to lose a lot of sales to discounted Intel parts.

Just as we expect the Ryzen 7000X3D series to launch next week, AMD has just announced a new promotion for existing Ryzen 7000 CPUs. The company and their partners are to offer $75 up to $125 saving on select AM5 builds.

Yeah, because few people run that resolution when gaming, so what's the point? To test some nonsense you'll never experience? To feel better about your purchase while gaming at 1440P and 4k? "Well, even though I'm not gaming at 1080P, I'm sure the performance there is helping me out at this resolution."

Think of it this way:

- How many basement-dwelling fanboy chodes actually go buy a CPU based on some ridiculous set of 1080P benchmarks, then proceed to NEVER actually game at that resolution, but then fill threads like this across the internet with their awful purchasing advice and commentary?

My guess is: a lot.

I said it before in another post and I'll say it again: buying a CPU based on 1080P gaming performance is stupid. But let's be honest: a lot of PC gamers epitomize stupidity...even more so than their plastic box warrior brothers & sisters in many ways.

AMD and Intel performance at higher resolutions are virtually indistinguishable from each other, so my recommendation is to start looking at other variables:

- Cost

- Platform longevity

- TDP & cooling

- Performance in other applications

It's bad because AMD compared against a cheaper Intel system.

How is having a most current CPU 'barely faster' than the opposition's current CPU a bad thing, OP?

Without the 3DVCache AMD themselves were saying they were ~10% slower than the 13900K. Unless AMD starts putting out 3DVCache from the start they will have to do a lot of work to outperform them next time (unless Intel stagnates again...).

I mean, it's not great in that the opposition doesn't have to do much to surpass it with their next product, but it also means that you probably don't have to do much to match or outperform them next time either.

Pretty cool for that individual use case I guess, but unless you are literally running the highest end GPU and playing at a lower resolution, it's virtually moot when stuff like the 13700K exists for cheap. A 13700K with a slight undervolt is a cooler running, more energy efficient and all around better 12900K. Got one open box for $300 at Microcenter and couldn't be happier with it. Can still use a DDR4 board and I'm good with that because I have 32GB of fancy looking Trident Royal 3200 RAM that I didn't want to replace. You're paying a lot to upgrade all of the other components for super diminishing returns.

Some games do have a big difference though - FF14 Shadowbringers (a very popular game that I play myself) shows a 15% difference (though the game isn't hard to run in the first place). Also, that 13900K is a furnace.

I'm looking forward to seeing the reviews next week.

And that's why you do 1080p benchmarks to test CPU performance. If CPU performances at higher resolutions are virtually indistinguishable from each other, then looking at a chart in that context is meaningless because there's no way to meaningfully compare one CPU against another since the graphs look too similar to each other. When you test at 1080p, you can actually start to see the differences between each CPU. That way, you can determine which one is "better" at the games you are interested in. If you as a gamer determine that the CPU is a bottleneck in your system for the given resolution you play at, you want to know which CPU upgrade will give you a meaningful performance uplift and the 1080p chart is the one that's going to give you that information.

Yeah, because few people run that resolution when gaming, so what's the point? To test some nonsense you'll never experience? To feel better about your purchase while gaming at 1440P and 4k? "Well, even though I'm not gaming at 1080P, I'm sure the performance there is helping me out at this resolution."

Think of it this way:

- How many basement-dwelling fanboy chodes actually go buy a CPU based on some ridiculous set of 1080P benchmarks, then proceed to NEVER actually game at that resolution, but then fill threads like this across the internet with their awful purchasing advice and commentary?

My guess is: a lot.

I said it before in another post and I'll say it again: buying a CPU based on 1080P gaming performance is stupid. But let's be honest: a lot of PC gamers epitomize stupidity...even more so than their plastic box warrior brothers & sisters in many ways.

AMD and Intel performance at higher resolutions are virtually indistinguishable from each other, so my recommendation is to start looking at other variables:

- Cost

- Platform longevity

- TDP & cooling

- Performance in other applications

3090 is not a fast enough GPU to see the true difference in CPU gaming performance. The results would have been different with a 4090.

Intel setups have been the majority for most of gaming history, so you seriously claim that most (+80%?) have been overclocking gurus or know them?

Buying a 13900K and pairing it with DDR5 6000 makes no sense, unless you're AMD and doing an "apples to apples" comparison because that is the sweet spot for Ryzen 7000.

On Intel platforms most gamers do go above spec, and it's always been that way.

No one in their right mind bought a 10900K and paired it with 2933... even 3200 (which was faster than the 10900K spec) was slow back then... in the same way no one should buy a 13900K/KS and pair it with slow DDR5 6000.

I'm not talking about being an OC guru, I'm talking about buying RAM rated above Intel spec.

Intel setups have been the majority for most of gaming history, so you seriously claim that most (+80%?) have been overclocking gurus or know them?

Sounds like facts pulled from ass.

3090 is not a fast enough GPU to see the true difference in CPU gaming performance. The results would have been different with a 4090.

AMD is throwing in free RAM though with at least their 7000x series right now and probably the new ones as well. That changes the cost structure.

If you have a Microcenter near you, you could get quite a good price on the overall bundle once these are released.

OP is so defensive over this. It's hilarious.

I can't believe we've got a full scale battle going over a 4 or 5 fps difference in situations over 200fps.

Does Techspot not count? They tested more games and found scenarios where RAM does make a difference...

This is basically a known quantity at this point. I'm not sure why you are arguing it so hard yet have no sources; I've posted mine, yet you keep moving the goalposts.

Why is "best" in quotes? Best value? Or actual best?

The "best" DDR5 kits currently will be low latency 6000 - 6400 kits.