There's a reason why the 3090 exists and we are going to find out what it is on October 28.

It exists because some people will pay anything for the best and NV are taking advantage of that fact.

If people are buying the 3090 for the VRAM, why not just wait for the next 3080 iteration with more VRAM then? I get the feeling that this whole thing is a bait-and-switch.

Thelastword shitting on nvidia? That’s new!

With proper drivers and once the release is complete, with more cards available, the performance gap will surely increase.

More cores, more compute units, and more RT and tensor cores will surely translate to big gains in DLSS and such.

But yeah, we’re not getting double the perf of the 3080.

> If people are buying the 3090 for the VRAM, why not just wait for the next 3080 iteration with more VRAM then? I get the feeling that this whole thing is a bait-and-switch.

It's worth it for me due to the VRAM. The Titan RTX was just slightly faster than the 2080 Ti, so I was fully expecting this...

> This all the way. I really wish people would stop parting with their money so easily, even if they have it. Do not fall for the 3090 trick. Details about cards with more VRAM have already leaked and they are coming. If you really want to spend your money, get a 3080 and a fast Zen 3 processor with the leftover money you were going to spend on a 3090 later this year.
>
> Stop being brainless.
>
> Come on man, you know that amount of VRAM is excessive.

Nah, it isn't for me. I am doing machine learning stuff, so I need a lot of memory :) Also crypto mining, where more VRAM is always welcome :)

Few things:

1. 3080s have very little OC headroom, close to zero appreciable gains.

2. RT performance increase is tiny. HU pegged it at just 10% over Turing.

3. The 3090 being just 10% faster than the 3080 is absolutely shocking. This makes the claim 'RDNA2 will probably compete with the 3080 but not the 3090!' seem odd now. If it gets close to the former, it won't be far off the latter, of course.

4. Ampere has been a disappointment, and I laugh at all the idiots who were scrambling to get a '$700' (reality: closer to $800) card with just 10GB of memory that draws 330-360W, after being suckered in by Nvidia's marketing show earlier this month like complete PC noobs.

> It's worth it for me due to the VRAM. The Titan RTX was just slightly faster than the 2080 Ti, so I was fully expecting this...

You're comparing the wrong cards. The Titan was massively faster than the 2080.

The 3090 (Ampere's Titan) is slightly faster than the 3080.

Was it? I honestly did not play any games on that card, so I don't know. I bought it for machine learning, and there it absolutely destroyed the 2080 Ti, mainly due to VRAM, although compute was faster as well...

The 3080 at $700 is a steal, plain and simple.

Brilliant deductive reasoning skills and explanation. Now I'm even more confident in my decision to get an RTX 3090.

> Meh, that rumored 20-25% performance uplift was very promising, but for a mere 10% (if not less) it's absolutely not worth double the price; it's better to just OC the 3080 and call it a day. The only way NV could make it gaming-worthy would be to allow that Ultra Performance DLSS mode to be used not just for 8K but also to upscale games to 4K from 1080p instead of from 1440p, giving it quite an edge compared to the 3080/3070.

You say 20-25%; I read elsewhere that some people were expecting a 30-35% uplift. I could see a slight possibility of that in a very obscure, VRAM-fiendish game at 8K, so outliers more or less, but I don't see any way a massive uptick can be sustained over the 3080 apart from these edge cases.

People are saying OC your 3080, but the 3080 only did 2300MHz on LN2. On air and water the OC headroom isn't much, and the power draw will climb even further from its already astronomical heights. Curiously, the power draw on the 3090 should be much higher than on the 3080 too, so OC'ing that means you need more than 750 watts. Emtek's 3-fan 3090 already has a TDP rating of over 410 watts.

https://www.dsogaming.com/news/emte...t-gpu-has-a-max-tdp-rating-of-over-410-watts/

> What resolution are these test results at?
>
> Wouldn't the benefit mainly be expected at 4K?

4K resolution; average fps is shown in the table.

I want some Crysis benches. TBT, Nvidia's cores don't carry the same prowess as before. They boosted the core count for marketing reasons, but performance is not 20% higher just because the 3090 has 20% more CUDA cores than the 3080.

> Nah, it isn't for me. I am doing machine learning stuff, so I need a lot of memory :) Also crypto mining, where more VRAM is always welcome :)

Radeon 7's memory and bandwidth did help in many work-related scenarios. Though the rumors have died down a bit, I still believe AMD may have a card with high VRAM and bandwidth using HBM2E. Putting lots of VRAM onboard is something AMD has always done. I would not be surprised if they have a 32GB card cooking. Maybe CDNA, maybe RDNA 2.

I'm still trying to wrap my head around why Nvidia is releasing such low-VRAM cards now when, in a month's time, they will release 12/16/20GB variants across their GPU stack. That's really believing your customers will buy anything you put out, and they do. Some got burnt buying 2080 Tis for over $1200 mere days and months ago, and now there is much more performance in a 3080 for only $700. Some want to go and spend $1500 on a 3090 when they've already heard rumors of a 3080 Super with 20GB of VRAM, which will no doubt be priced lower than a 24GB 3090. Yet there is a reason why Nvidia has high-VRAM cards ready, and it's not out of the kindness of their hearts. It's because AMD has high VRAM counts in their RDNA 2 cards. Nvidia's first set of cards is just to get the Jensen-tattooed guys to pay for yet another Ferrari to add to his garage. Look at how angry some of them are because they can't get a 3080. They will probably buy the 3080 now, buy the 3080 Super in a month, and sing Nvidia's praises for blessing them with double the RAM for maybe $1000.

What I think is happening is that the 10GB 3080s are just for the "will buy anything Nvidia" guys. They will make bank on these guys, but during that small space of time they are focusing on manufacturing 20GB 3080S GPUs in their factories as we speak, to go up against AMD in October. That's why you can't get 3080 stock: they made very few of these. Soon Nvidia will cut off 3080 production and will have only the 12GB 3060S, the 16GB 3070S and the 20GB 3080S on the market. Could be as soon as late October or early November.

Honestly, CUDA is the reason to go with Nvidia; they have an amazing and mature SDK for the type of thing I want to do. AMD has some vague OpenCL documentation and that's it. Nvidia is light years ahead in this regard.

Is this for real? I want a 3090 just for the VRAM, but as seen with the 3080s, I'm afraid the 3090 will be an absolute waste of money. Where did you get the info on new 3080s in October/November? Commonly it has been like 6-9 months after the initial release before a new iteration is revealed. 2 months is really soon.

> Imagine the people who bought that, convinced that they are gonna play 4K60 ultra+ RTX for an entire gen.

Just the other day in one of the Nvidia threads someone was saying that 4K 60fps cards are finally here. People always say these things when the games being shown are last-gen games, and yet not even all of these last-gen games run at 4K 60fps. With only a 10% uptick in RT performance over Turing, when the articles I read beforehand said Ampere's RT gains over Turing would be massive, it only shows that the Nvidia hype machine is crazy, and too blatant for generally smart PC tinkerers not to put two and two together. So it must be the Kool-Aid.

> Honestly, CUDA is the reason to go with Nvidia; they have an amazing and mature SDK for the type of thing I want to do. AMD has some vague OpenCL documentation and that's it. Nvidia is light years ahead in this regard.

Well, this isn't a thread to prevent you from buying what you want or to chastise you for it. It's your money; I'm just pointing out some real stats and facts and how everything is shaking out. Do with the information what's best for you. People have a right to purchase anything for whatever reason they see fit, tbh. It could be as simple as "I love Jensen Huang's black leather jacket". In truth, I'm not being sarcastic here: people buy things for whatever suits them. An item is pink, they love the design, they have the disposable cash; it happens. I'm just saying that, since your justification is work-related, looking at some rumors and waiting a little to see how everything shakes out is never a bad thing. There will be benches on the PRO software you use, I'm sure.

That made me laugh, thanks :D

> Is this for real? I want a 3090 just for the VRAM, but as seen with the 3080s, I'm afraid the 3090 will be an absolute waste of money. Where did you get the info on new 3080s in October/November? Commonly it has been like 6-9 months after the initial release before a new iteration is revealed. 2 months is really soon.

Well, the Gigabyte leak is sound, and the @_rogame leaks are sound. With that, and looking at NV's history, where they released Supers and Tis to compete with the 5700 XT, 5700, 5600 XT and 5500, I can say affirmatively that they have cards ready for when AMD launches RDNA 2, which is said to compete from the top end to the low end, unlike RDNA 1, whose highest SKU started at high-mid.

3070 - 5888 shading units.

3080 - 8704 shading units.

3090 - 10496 shading units.

> Big Navi will disappoint.

Indeed... It will disappoint the ones that bought a 3000 series card or were losing their shit because they weren't able to get one.

> I think you guys are looking at it all wrong.
>
> It's not that the 3090 is so bad but that the 3080 is so good.
>
> We are getting within 10% of the performance of a $1500 card for only $700. Amazing.

It's the same chip. The pricing of the 3090 is atrocious.
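For anyone who wants to put numbers on that, here is a rough sketch of the value comparison. It only uses the figures quoted in this thread ($700, $1500, and a gap of about 10%), so the performance numbers are assumptions rather than measurements, and the function names are made up for illustration.

```python
# Figures quoted in the thread, not measured here.
PRICE_3080 = 700.0    # USD
PRICE_3090 = 1500.0   # USD
PERF_3080 = 1.00      # baseline relative performance
PERF_3090 = 1.10      # assumption: 3090 is about 10% faster


def perf_per_dollar(perf, price):
    """Relative performance bought per dollar spent."""
    return perf / price


def value_ratio():
    """How many times more performance per dollar the 3080 gives vs. the 3090."""
    return perf_per_dollar(PERF_3080, PRICE_3080) / perf_per_dollar(PERF_3090, PRICE_3090)


def dollars_per_extra_percent():
    """Extra money paid for each extra percent of performance on the 3090."""
    extra_cost = PRICE_3090 - PRICE_3080
    extra_percent = (PERF_3090 - PERF_3080) / PERF_3080 * 100
    return extra_cost / extra_percent


if __name__ == "__main__":
    print(f"3080 perf per dollar advantage: {value_ratio():.2f}x")
    print(f"Cost per extra 1% on the 3090: ${dollars_per_extra_percent():.0f}")
```

Under those assumptions the 3080 comes out at a bit under twice the performance per dollar, and each extra percent on the 3090 costs about $80. None of this accounts for the extra VRAM, which is the whole point for some buyers.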

> Why does it matter what someone does with their money? There are plenty of performance cars out there, and some will spend double the money just to gain a fraction of a second on a circuit.

Because it unnecessarily hikes the prices for the ones that actually buy what they need rather than what they feel they need.

> If people are buying the 3090 for the VRAM, why not just wait for the next 3080 iteration with more VRAM then? I get the feeling that this whole thing is a bait-and-switch.

This. A 20GB 3080 has pretty much been confirmed.

4K is here, guys, because the 2080/2080S-class consoles are targeting it as their base resolution.

Although the fps they'll target is likely 30.

It's been "here" for the last 2 years.

No, not really.

The real number of shading units is half of what is claimed.

It's just that Huang thought that, since the new units can do either 1 INT + 1 FP or 2 FP ops per clock, he could claim double the number.

So, in reality, the number of hardware/ physical shading units in the RTX 3090 is 5,248? Is each unit larger and/ or faster than a unit in the RTX Titan?

Yes. Notably, it supports 2 FP ops instead of INT + FP, sort of "doubling" the TFLOPS figure. It is a far cry from having twice as many units, though.

> It's been "here" for the last 2 years.

This time it's here the way 1080p has been.

It depends on how many INT calculations there are. NV figured out that typically only about 30-35% of the work is INT while gaming, so this was a better design choice than a fixed 1:1 ratio between INT and FP cores. So usually there are around 7-7.5k CUDA cores available for games in the 3090, and about 5.5-6k in the 3080.
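The estimate above can be sketched in a few lines. This is only the simplified model from that post (advertised cores scaled by the share of work that is not INT), not anything from Nvidia's documentation; the function names and the 30-35% defaults are assumptions taken from the post.

```python
# Advertised CUDA core counts as listed in this thread.
ADVERTISED_CORES = {"RTX 3080": 8704, "RTX 3090": 10496}


def effective_fp_cores(advertised_cores, int_fraction):
    """Simplified model: cores left for FP work when int_fraction of the work is INT."""
    if not 0.0 <= int_fraction <= 1.0:
        raise ValueError("int_fraction must be between 0 and 1")
    return advertised_cores * (1.0 - int_fraction)


def estimate_range(card, low_int=0.30, high_int=0.35):
    """Return (min, max) effective cores for the assumed INT share range."""
    cores = ADVERTISED_CORES[card]
    return (effective_fp_cores(cores, high_int), effective_fp_cores(cores, low_int))


if __name__ == "__main__":
    for card in ADVERTISED_CORES:
        lo, hi = estimate_range(card)
        print(f"{card}: roughly {lo:.0f} to {hi:.0f} effective cores")
```

With a 30-35% INT share this lands at roughly 6.8k to 7.3k for the 3090 and 5.7k to 6.1k for the 3080, which is in the same ballpark as the figures in the post. Real games vary, so treat it as a back-of-the-envelope number.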

> This time it's here the way 1080p has been.

I agree with this 100%. 4K is about to become mainstream.

So, does the following demonstrate what you mean?

RTX 3090's "10,496" CUDA cores / 2 = 5,248 CUDA cores

5,248 CUDA cores * 0.30 = 1,574.4 CUDA cores for INT operations

5,248 CUDA cores * 0.35 = 1,836.8 CUDA cores for INT operations

Average number of CUDA cores available per clock = 5,248 CUDA cores for FP operations + 1,574.4 or 1,836.8 CUDA cores for INT operations = 6,822.4 to 7,084.8 total CUDA cores

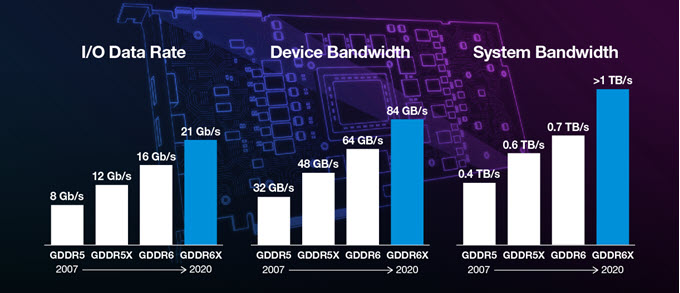

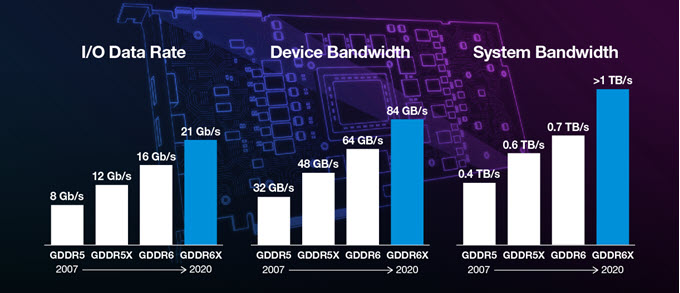

I don't know much about how RAM works in video cards, but the 3080/3090 use GDDR6X as opposed to the plain GDDR6 that the 2080 Ti used. I don't think you can compare 10GB in a 1080 Ti to 10GB in a 3080/3090.

GDDR6X memory is a big deal for Nvidia's RTX 3090 and 3080 graphics cards

Micron gets official with GDDR6X memory after some details were leaked last week. (www.pcgamer.com)

No. Cores are generic programmable units. There are no "cores for int operations".

Think of them as mini CPUs.

> Nvidia is going to release a 20 GB version of the 3080 to wreck AMD's Big Navi rollout, and the 3080 is a lot cheaper than the 3090.

I don't want to wait.