The Touryst has almost no textures to speak of that could eat up memory, so I guess memory size is really not a problem.

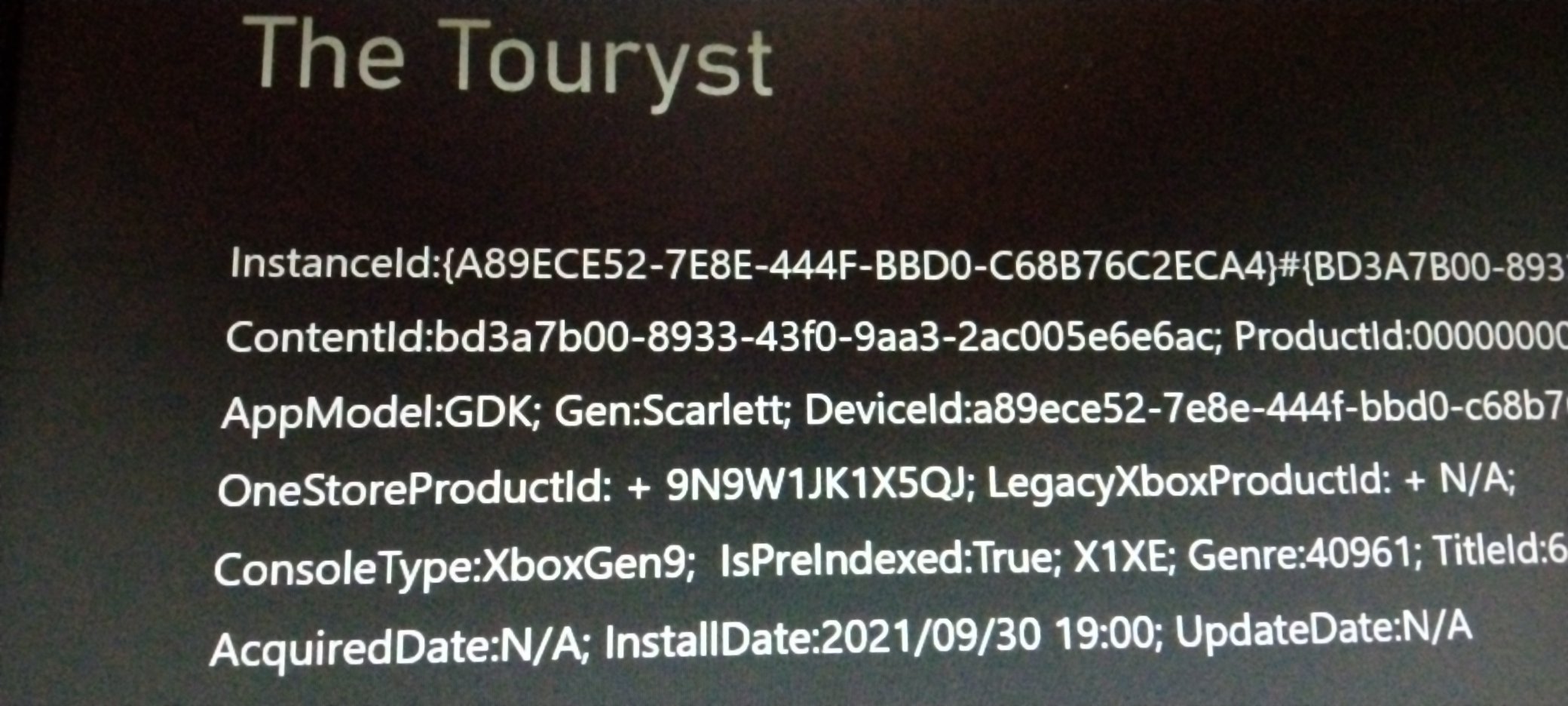

The game on Xbox Series is just a quick port of the Xbox One version, which was itself ported from the Switch version to run OK on Xbox One and Xbox One X (already 4K). Then it got a Series X patch that just increases the resolution to 6K. A complete engine rewrite was necessary for a native PS5 app.

It really is a good game, but from a technical perspective it is not a demanding one. Why did they stop at 6K on Series X? Well, I guess it was just to get it released without further improvements over the Xbox One X version (other than the res bump and 120Hz mode). Now, almost a year and a lot more work later, they have rewritten the engine to work with the PS5 API. Much more current knowledge etc. went into the new code.

We can speculate about this game for a long time, but what we are trying to compare here is more or less one game in two very different engine states, even with small differences in visual features.

I guarantee the issue is memory.

Weaker PC GPUs, ones with less RAM and even less pixel fillrate run it at 8K. But on PCs VRAM isn't the only major source of RAM in the system. With Series X developers need to control more specifically where in RAM data is being accessed to guarantee the maximum performance out of the GPU. This is why the 10GB is labeled by Microsoft as GPU optimal and the rest is labeled standard memory.
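To put rough numbers on the resolutions being thrown around, here is a minimal sketch of how big a single render target is at each one. The 4 bytes per pixel (a plain 32-bit color target) and the 5760x3240 figure for "6K" are my assumptions, not anything the developers have stated, and a real renderer keeps several targets in different formats, so treat this as a per-target figure only.

```python
# Hypothetical illustration: size of ONE render target, not measured data.
RESOLUTIONS = {
    "4K": (3840, 2160),
    "6K": (5760, 3240),  # assumed: 1.5x the 4K resolution on each axis
    "8K": (7680, 4320),
}


def render_target_bytes(width, height, bytes_per_pixel=4):
    """Bytes needed for one render target (assumed 32-bit format by default)."""
    return width * height * bytes_per_pixel


for name, (w, h) in RESOLUTIONS.items():
    size_mib = render_target_bytes(w, h) / (1024 * 1024)
    print(f"{name}: {w}x{h} -> {size_mib:.1f} MiB per 32-bit target")
```

How many such targets a given engine keeps around, and in which pool of RAM they land, is exactly the part we can't see from the outside.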

Let's use Doom Eternal as an example. Xbox Series S in that game has zero ray tracing. Why? Is it because it can't do ray tracing? Not powerful enough to ray trace? Of course not. It has ray tracing in plenty of other games, including Metro Exodus. But Doom Eternal on Series S has no ray tracing, likely because, with their current engine design and optimizations, ray tracing simply didn't fit within the memory constraints of Series S. This could change in the future, for example with Sampler Feedback Streaming, as textures would then take up much less space in memory, freeing up much-needed RAM to ray trace on Series S. Or they may just optimize for it in other ways.

Series X, however, did get ray tracing. So what does this basic example tell us? A piece of hardware not doing something isn't always evidence that it can't do it. It could also be a sign that it can't, gotta be fair there, but more often than not it is a matter of optimization.

How did they achieve 6K in Ori, for example? It was due in large part to all the optimizations and changes they made to get the game working on Switch, which they then applied intelligently to Series X. The developer makes clear that had they just taken the Xbox version of the game and put it on Series X, they wouldn't have achieved the performance targets they aimed for.

"If we were to just take the version that we shipped for the original Xbox, and we would have tried to hit 120Hz that would have been quite a bigger leap than taking the Switch version that we knew would have been that much more optimized." They had to break the game apart and put it back together again in order to achieve what they did on Series X.

They had to reinvent the rendering pipeline, reinvent the simulation, reinvent the streaming, the memory management, all of those things.

Optimizing for the lowest-performing platform, the Switch, allowed them to push things even further on Series X and even enhance the quality. They created a bunch of sliders that they used to optimize different parts of the game for Switch, and they were able to use those same sliders, though not all of them, to adjust things like depth of field and the resolution of specific things in the background without anybody being able to tell the difference on Series X. But even after those Switch optimizations it still wasn't enough in some cases, and they had to go and do more on Series X. Some optimizations couldn't be brought over; he talks about making Ori very specific to the Series X. Things they did specific to the engine path on Switch "you couldn't just get for free" on Series X. They needed to make engine-specific changes for Series X.

When going from 4K to 6K with their motion blur, they keep the other slices the same, because they are high enough precision to still look great once brought up to 6K, and most people can't tell with the motion blur in use. When they go to 6K they really only go to 6K with one specific center slice and the rest are secretly lower. That's how they supersample the character and the artwork in the center, and that's how they get the crisp look of native 6K.
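A rough sketch of why rendering only the center slice at 6K helps so much. The number of slices (5) is a made-up figure purely for illustration, and I'm assuming "6K" means 5760x3240 and that the remaining slices stay at 4K; the developer gives none of these numbers.

```python
PIXELS_4K = 3840 * 2160
PIXELS_6K = 5760 * 3240  # assumed 6K resolution, 1.5x 4K per axis


def shaded_pixels(num_layers, layers_at_6k):
    """Total pixels rendered when some layers are 6K and the rest stay at 4K."""
    layers_at_4k = num_layers - layers_at_6k
    return layers_at_6k * PIXELS_6K + layers_at_4k * PIXELS_4K


NUM_LAYERS = 5  # hypothetical slice count, not from the interview

everything_6k = shaded_pixels(NUM_LAYERS, NUM_LAYERS)
center_only_6k = shaded_pixels(NUM_LAYERS, 1)

print(f"6K vs 4K pixels per layer: {PIXELS_6K / PIXELS_4K:.2f}x")
print(f"center-only 6K renders {center_only_6k / everything_6k:.0%} "
      f"of the pixels of an all-6K frame")
```

Whatever the real slice count is, the point stands: a 6K layer costs 2.25x the pixels of a 4K one under these assumptions, so paying that only where the eye is looking saves a lot.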

The developer explains much of this to DF from the point where this video starts to about the 25 minute mark. It's an excellent video where they go into a whole lot of detail on the kind of work it took to get a game like Ori working on Series X at 6K. At 27:57 John asks him what the biggest bottlenecks were on the way to 120fps. He said a huge challenge was giving themselves enough room performance-wise on the GPU to ensure they always nailed their 8.3ms window. He said another challenge was preventing spikes in GPU performance (hello, that sounds like the kind of spikes that might occur if you run into that split memory situation), and again he refers to all the tweaks and optimizations made to animation systems, particle systems and the like. They didn't want to rely on VRR, he said.

The Switch-based optimizations ultimately weren't enough, so it'd be hovering above 100fps on Series X, and in some cases hitting 120fps, but it wasn't perfect; they had to balance work across cores and that kind of stuff. So Ori at 4K 120fps and 6K 60fps was a challenge. He stresses it wasn't the hardest thing in the world, but it took some work.
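For anyone wondering where the 8.3ms window comes from, it's just the frame time at 120fps. A quick sketch of the arithmetic (the list of frame rates is my own pick for comparison):

```python
def frame_budget_ms(fps):
    """Time available to produce one frame, in milliseconds."""
    return 1000.0 / fps


for fps in (30, 60, 100, 120):
    print(f"{fps:>3} fps -> {frame_budget_ms(fps):.2f} ms per frame")

# How far a frame rendered at ~100 fps overshoots the 120 fps budget.
overshoot_ms = frame_budget_ms(100) - frame_budget_ms(120)
print(f"100 fps frame misses the 120 fps window by {overshoot_ms:.2f} ms")
```

So "hovering above 100fps" means they were within a couple of milliseconds per frame of the target, which fits with him saying it took work but wasn't the hardest thing in the world.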