Interesting choice to keep glossing over the fact that the Xbox Series X version came at launch, when it was well documented by DF that lots of aspects of Series X development were quite a ways behind where PS5 development was. And about 3 months before the Series X version launched, the game's own developer acknowledged to DF that the PC and Xbox conversions of Touryst were about "getting to grips with DX12". In other words, they could have been just scratching the surface of utilizing DX12.

Back in December 2019, Shin'en Multimedia wowed us with a unique Switch exclusive: The Touryst. Defined by a stunning v…

www.eurogamer.net

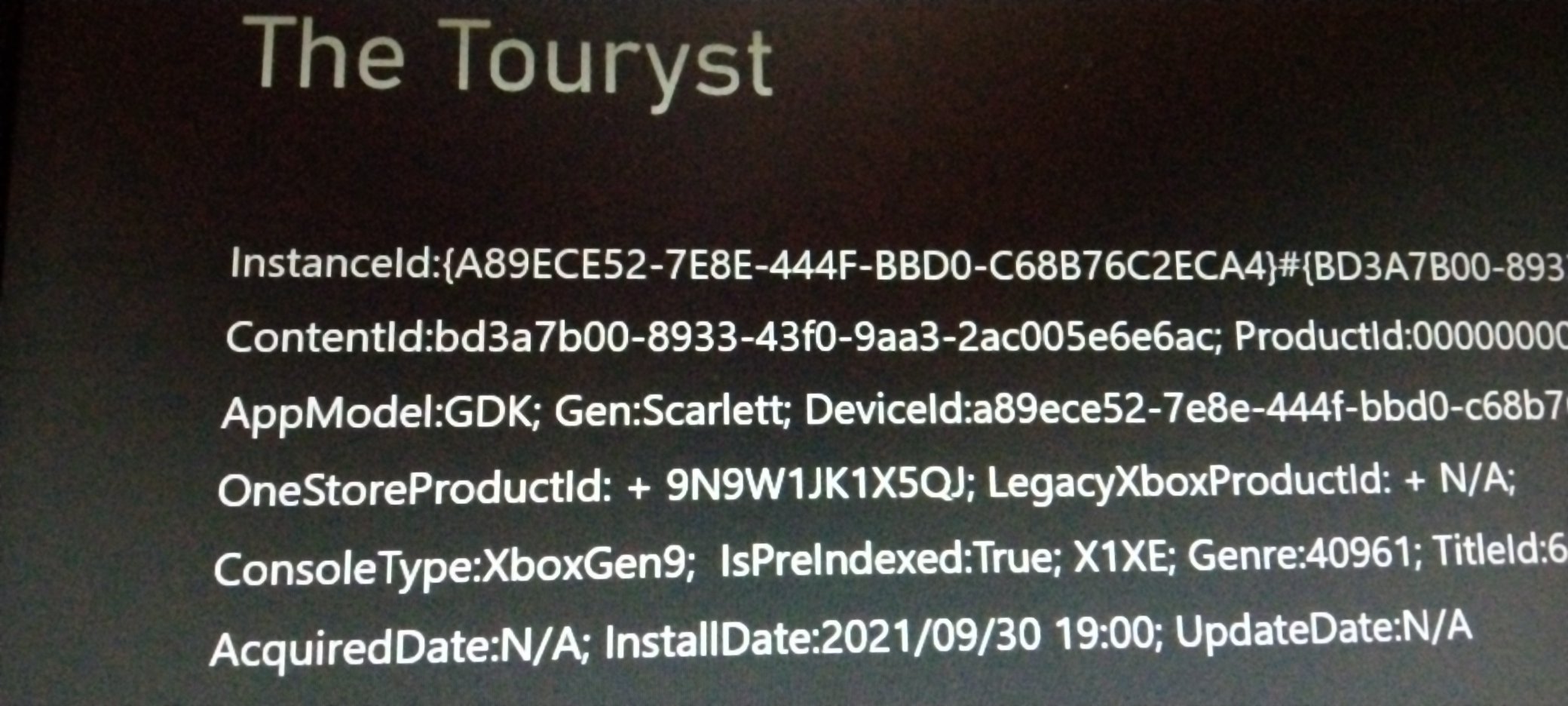

So what we essentially have here is confirmation that the Xbox One versions of Touryst are Switch ports, and that about 3 months later they took that Xbox One X work and ported it to the Series X GDK. In the case of PS5, though, we have actual developer confirmation to Digital Foundry that they didn't just port the PS4 version of the game to PS5. They instead rewrote the game's engine for the PS5's low-level APIs, something the Series X version, released nearly a whole year earlier, clearly did not benefit from.

As to why the Switch port hit 8K on PC but not on Series X, the answer is pretty obvious: more RAM. PCs have more available RAM between GPU VRAM and system RAM, which is how PCs with weaker GPUs than what's inside Series X (with slower GPU clock speeds, too) can still achieve 8K resolution in this game. Consoles don't have their memory designed the way PCs do, and this is even more true for a system like Xbox Series X with its unique asymmetric memory setup. That makes a proper engine rewrite all the more important for that console, but that's not what Series X received. It got an Xbox One port of a Switch port.