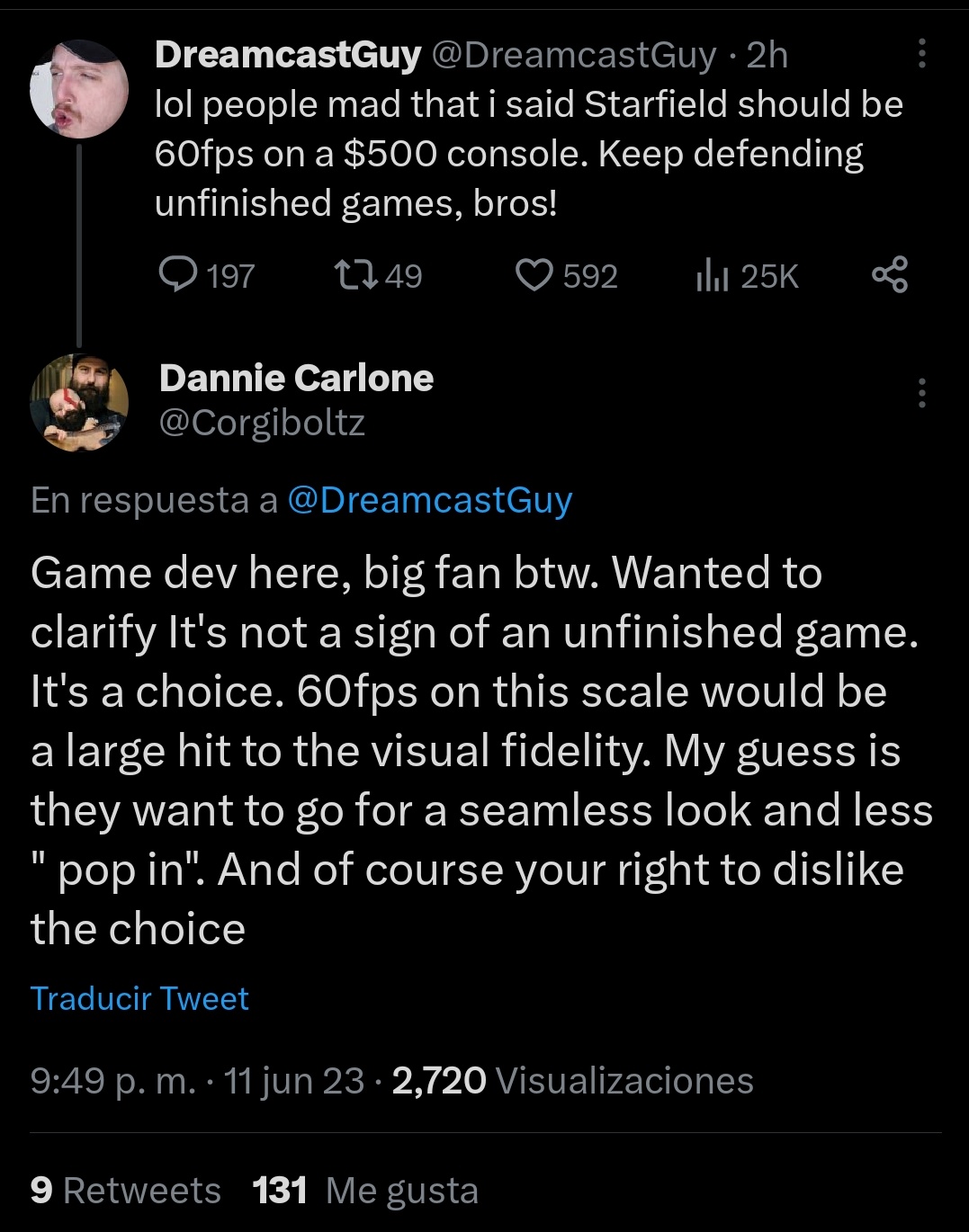

All the arguing about which is better feels like a distraction. Some people are fine with 30fps, others aren't -- it's all a matter of personal preference.

But where I think most of us are (rightfully) pissed -- and I know this is at least the case for me -- is in how both of these consoles were sold to us on the promise of major generational leaps. Higher frame rates, VRR, native 4K, up to 120fps, possible 8K output. Now, yes, some of that we knew was marketing bullshit (especially the 8K stuff), but it wasn't unrealistic to expect that we'd get more options than we had in the past. Options that allowed us to prioritize frame rate over graphics if we chose. This was also part of the marketing campaign for both the XSX and PS5 -- pointing to the options we got in last generation's mid-gen refresh consoles and saying "this is the future".

Many of us bought new equipment to take advantage of these features. But now we're sliding back to locked 30fps games where most of these features are meaningless. VRR, for example, is functionally useless at a locked 30fps, since a locked frame rate has no fluctuations for it to smooth out. If we're just sliding back to the same standards as last gen with zero user preferences available to us, then yeah... I think being pissed off is justified. Especially when we all know they're probably going to try to sell us new mid-gen consoles with the same "improved performance" promises they gave us for these consoles.