VFXVeteran

Welcome to the Graphics Tech thread!

Let's begin by expressing what this thread is NOT:

1) A screenshot war thread. If you want to post screenshots of a game to show off some graphical prowess, they must be accompanied by factual data and information about the screenshot. Don't just make a blanket statement about how awesome a game looks and then post a screenshot as some sort of proof.

2) Subjective comments about a game. If your views or opinions are subjective, then either state that is the case and move on or continue the artistic comparison in some other thread.

3) Console/PC war thread. While it is OK to highlight a company that implements an incredible tech feature, this thread is not meant to showcase how superior the hardware is. Talk about the game itself and (for PC owners) whatever options are enabled to make said game shine.

4) Gameplay comparison thread. This thread only discusses the graphics aspects of a game. Animation, FX, and the like are welcome, but arguments about how one game is better than another are not. Compare games in their appropriate threads.

What this thread can do for you:

1) Learn about all the ways developers use graphical techniques and compare those techniques with other games.

2) Become familiar with graphical terms and learn their meaning.

3) Share knowledge on different algorithms used throughout the industry (games, movies, or anything else).

4) Discuss comparison videos such as Digital Foundry's graphics analysis.

5) Discuss hardware across all platforms (without console warring).

6) Share the love of seeing your favorite graphical game shine when compared to other games.

Here are some recent vids that have been floating around the net (11/30/2019):

Nvidia boosts RTX Quake

Halo Reach Enhancements on PC

Common Graphics Terms:

Anisotropic Filtering

Anti-Aliasing

PBR - Physically Based Rendering/Shading

RT - Ray Tracing (RTX is Nvidia's brand name for its ray tracing hardware and software)

SSS - Subsurface Scattering

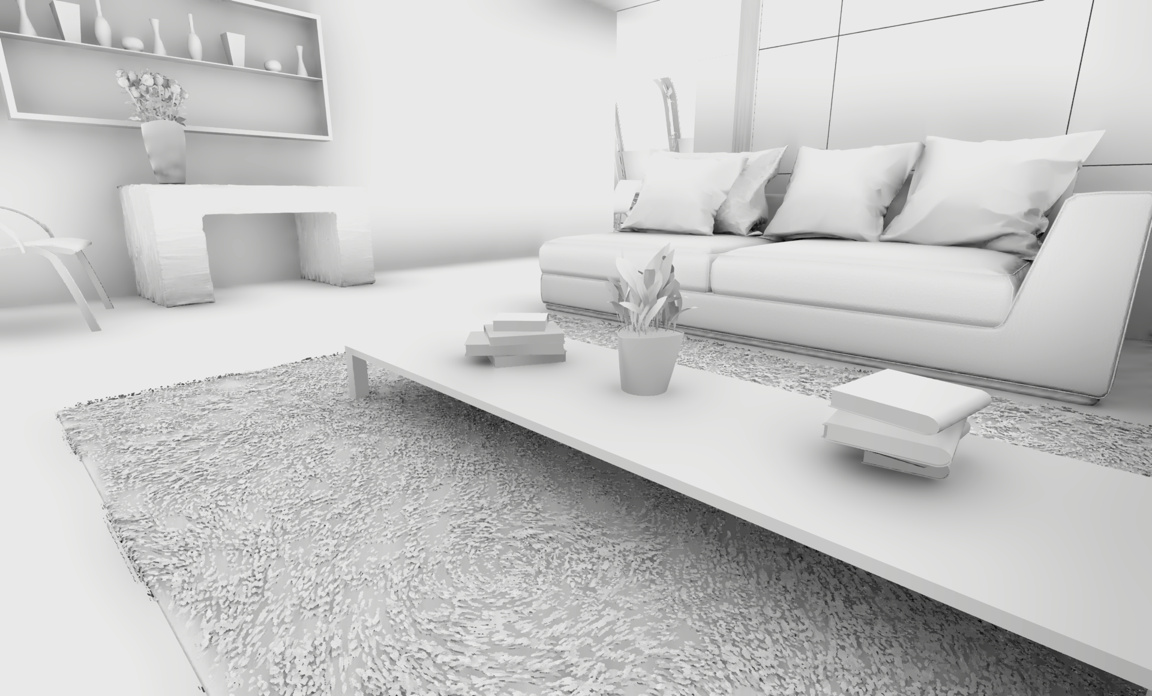

AO - Ambient Occlusion

WELCOME!
