Panajev2001a

GAF's Pleasant Genius

Looks like this one was not too far off either:

Leaked classified top secret video of Intel R&D team working on next-generation advanced TDP for their future CPUs:

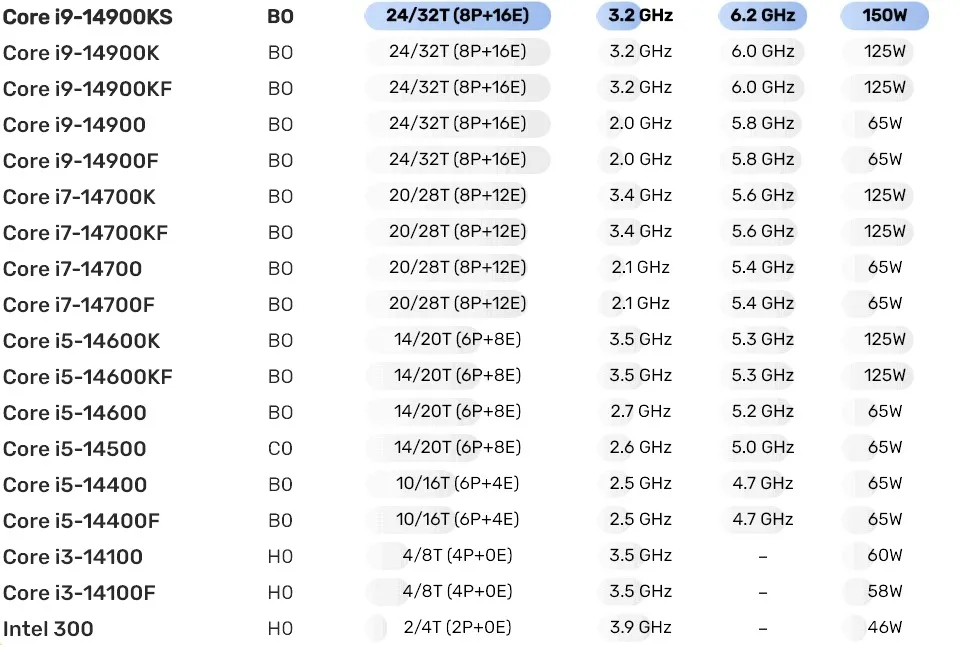

Always been Intel but stopped on the 12700K - it's clear the technology has stalled - they are just feeding in 50% more watts and running them hot to eke out 20% gains. Will wait to see what AMD does next.

X86 is done.

This is exclusive to Intel's ridiculous high-end chips. Mid-range or AMD products are not this ridiculous in power draw and heat (though the recent Ryzens do get silly with it).

Death to X86.

It's not my fault you can't see this is reaching the breaking point. And it's not just CPUs, GPUs are also getting ridiculous in terms of size, power draw and heat generation. We need a paradigm shift ASAP.

I never blamed you for anything bro, calm down. I'm simply stating that the vast majority of X86 CPUs are never this ridiculous when it comes to heat or power draw.

Life is good on Comet Lake aka Comfy Lake. The day I need to upgrade from my 65W i7 will be a sad one.

It's coming in the next 2-3 years. It will be Nvidia who ushers in the new reality for PC. I'm not saying that's a good thing, given their penchant for market dominance and pricing shenanigans, but I look at all the players right now and I see them as being the ones to really strike the much-needed deathblow to X86.

Why do we need a deathblow on the X86?

Because it's old and outdated, and there's a ton of stuff included in X86 that is completely irrelevant to today's computing. Even Intel understands this, hence this whitepaper they released last year, which attempts to soften the blow.

Envisioning a Simplified Intel Architecture for the Future

PC computing rests heavily on Intel Architecture processors with an enormous installed base, and cloud computing is synonymous with Intel Architecture.

www.intel.com

The problem is, Intel is a mess nowadays, and so the chances of them being the ones to usher in the change (the change that's coming whether you like it or not) are slim.

ARM is the future.

There is nothing in the market that signals that PCs are going to switch to ARM anytime soon. Almost every company has an ARM license, including AMD and Intel. But that doesn't mean they will push it to replace X86.

Qualcomm’s exclusivity agreement with Microsoft to provide CPUs for Windows on Arm PCs has been rumored to expire in 2024. Still, Arm CEO Rene Haas is the first person to confirm it officially in an interview with Stratechery. The end of the exclusivity agreement means Windows on Arm PCs can start to use non-Qualcomm Arm chips in the coming years.

You seem to be overlooking the fact that Windows ARM licensing exclusivity ends this year:

Windows on Arm exclusivity may be a thing of the past soon — Arm CEO confirms Qualcomm's agreement with Microsoft expires this year

Opening the doors for other manufacturers, such as AMD or Nvidia

www.tomshardware.com

That's why AMD and Nvidia are rushing in.

I'm not sure how you look at this information objectively, along with Apple's ARM success, and think "yes, it appears to be smooth sailing for X86."

Edit:

To help paint the picture, imagine an all-Nvidia machine marketed to gamers and creators. I think that should illustrate the point sufficiently.

A PC with Nvidia using an ARM CPU would essentially discard several decades of backwards compatibility. Not only with games, but also game engines, tools, middleware, etc.

There is a reason that X86 has been the standard for gaming consoles for the last few generations. And it will probably continue to be in the future with the PS6 and the next Xbox.

This is a great advantage, because porting between PCs, PS5 and Series consoles becomes simpler and quicker.

That could presumably be solved by an Apple Rosetta-type or other emulation solution, it wouldn't be insurmountable. I'm not saying it will happen, but the need for backwards compatibility wouldn't prevent it happening.

+400W

consumption is higher than a PS5

*Twice as high as a PS5. On max load. In JUST the CPU.

1. Rosetta is exclusive to Apple PCs.

2. It's slower than running natively.

3. Translation solutions will never reach 100% compatibility, and if there's even the most obscure software out there that cannot run on ARM through X86 emulation or anything of the sort, the change won't go through.

4. The fastest ARM chips are still nowhere near what your average Ryzen 9/i9 is capable of, and so long as there is a need for that horsepower in the consumer market there is a need for X86.

I said Rosetta-type. That means something like it, not literally Rosetta.

Emulation being slower than native is a given, it doesn't mean it isn't viable.

If x86 is deemed a dead-end then this or something like it will happen, and the people who want to use legacy software will either have to deal with emulation or continue to use older hardware. There would be an overlap of years while x86 Windows was still supported. It's already happened with DOS software, people who used to rely on native DOS programs had to deal with it, and they did.

The difference between DOS and now is that DOS was around for like... a decade? couple of years? X86 has been the standard for twice that amount of time, not only that but during the most popular period for home computing.

I'm upgrading my CPU today. MicroCenter combos dictate the choices are as follows:

- 7700X
- 7800X3D
- 12600KF
- 12900K

To be frank, I'm not thrilled with any. Mostly because of thermals. 7700X was the preliminary winner, but that 95°C operating temp doesn't sit well with me. Maybe that's based on old thinking. Now I see this.

7800X3D is literally the best gaming CPU you can get right now.

To come out on top of benchmarks they're pushing +100% TDP just for 10% more performance.

The fking stage of CPU benchmarks is a no man's land atm, no one follows the rules anymore.

So futile. The pointless struggles of an animal being slaughtered.

Someone tell Intel bigger numbers are not always better.

Microsoft has been fighting for decades not to be dependent on Intel.

MS missed out big time when ARM became the standard for mobile phones, while Windows never caught on and Android became dominant.

Ever since, MS has been trying to break into that part of the market.

Nvidia has no option other than ARM. Nvidia doesn't have an X86 license.

AMD has had an ARM license for decades. And they already tried to make some CPUs but never got far with it.

So they are not rushing in. Nvidia is using what it can use. And AMD is still in the X86 camp.

And X86 isn't a dead end. Intel just fell off. It happens to every company at some point. Intel's incompetence doesn't mean that the entire architecture of X86 CPUs is some disgusting, archaic relic that needs to be shelved. It simply means Intel's having a bit of a moment.

I acknowledge there will be challenges with a transition to ARM, but I don't believe any of them will be big enough to stop the eventuality of it.

Why is it somehow an eventuality when there's nothing wrong with X86 CPUs currently?

What advantage does ARM somehow bring to desktop computing that warrants this switch? It's less powerful than most X86 CPUs, and while the reduced heat and power usage is nice for laptops and computers, it's not anything useful for a desktop computer.

Well, IMO, it goes without saying that if productivity is the main use your PC is used for, you should be using AMD.

Hell no. 3rd time I've used AMD and every time I have issues. OK, my Athlon XP back in the day didn't, but my 3600 gets BSODs every day when idling. Memtests, Prime95, and gaming it runs fine, but in a Word doc or browser just sitting there... IRQL_NOT_LESS_OR_EQUAL, ntoskrnl, or some other cryptic code. Downclocking memory does shit.

There are two things you need to understand before we go any further, and I say that not to be snobby or condescending, but to just set the stage. If we can't agree on the following two items, any further discussion will just be pointless.

So here they are:

- The market is shifting away from desktop PCs...and has been for years.
  - Just read through this if you don't believe me.
  - It doesn't matter if you like big gaming desktops...overall, most people do not.
  - Again, read the article I linked to...the data is unimpeachable.
- Many companies in the PC sector have watched Apple's foray into ARM with interest and envy...most notably Nvidia, AMD, and Microsoft.
  - All three of them sense that the Windows market is ripe for disruption.
  - Intel is also watching...but with fear, and would rather become a chip fabricator because deep down inside, Pat Gelsinger, a smart man, knows his company's goose is cooked if they can't suck on the government's tit.
  - This is what happens when you sit around with your thumb up your ass for ten years...and do NOTHING to innovate.

Also, please remember that Nvidia tried to outright buy ARM back in 2020. These are not stupid people. They're licking their chops at the thought of dominating the PC market like Intel used to. Same goes for AMD. As for Microsoft, they want to be like Apple.

After reading through all of that, or this, and genuinely processing the info, any reasonable person would come to the conclusion that *SOMETHING* is afoot. The challenge, which winjer referenced in his post, would be the X86 to ARM Windows transition. There's no denying that it could be more difficult than Apple's transition to Apple silicon.

But it's not impossible. Not with AMD, Nvidia, and Microsoft all plowing ahead. Which they are.

That's a fantastic question, and I'm more than happy to answer, while semi-plagiarizing from a handful of articles. I'd recommend reading this one, though...if you're truly interested.

- Simplified manufacturing and reduced production/materials cost.
- Lower transistor count...another reason why X86 sucks: a bunch of transistors spent on things very few people care about.
- Generally speaking, improved performance per watt (provided the design is competent and the fabbing process is up to snuff and not a generation behind). This usually means:
  - Lower electric bills for consumers.
  - And less heat.
  - Smaller form factors, like the Mac Studio design.
  - For laptops, the advantage should be obvious.

Also, from my vantage point, the Apple M3 laptops smoke most Intel or AMD ones. I also think that Nvidia has some of the best designers and engineers in the world, and that their stab at a modern ARM Windows chip could be a Sputnik moment.

Incorrect - if you bought a 7950X3D and disabled the non-X3D CCX in the BIOS then it would be faster.

ARM or x86? ISA Doesn’t Matter

For the past decade, ARM CPU makers have made repeated attempts to break into the high performance CPU market so it’s no surprise that we’ve seen plenty of articles, videos and discussi…

chipsandcheese.com

I mean yeah, the 7950X3D is a higher bin, but lol, why would you do that when the 7800X3D performs at the top of benchmarks for like 210-250 less than the 7950X3D or 14900K? I guess if you are going for the top perf without worrying about price, that would make sense.

Also, the 7800X3D doesn't beat the 14900K in all games.

It's the best bang for the buck though and wins a lot of scenarios.

The K is for Katon no jutsu the S is probably the rank.

Choosing anything on the list that isn't the 7800X3D makes you a sucker.