M1chl

Currently Gif and Meme Champion

Absolutely agree with the second part. It sucks how much of a hassle it is to get these services (well, at least PS Now for me). Thankfully we are in the same IP pool as Germany, so with some trickery in the router it can be done; I have had a month of PS Now a few times. Thankfully PS+ is kicking ass and I am still paying for it so I can snag those games, even though my PS5 is probably years away. I am sharing the account with my brother, so it's just pennies for me : )

You can get GP with some fuckery and hassle, but PS Now is so far IMPOSSIBLE, as you need a US credit card or a card from a supported country.

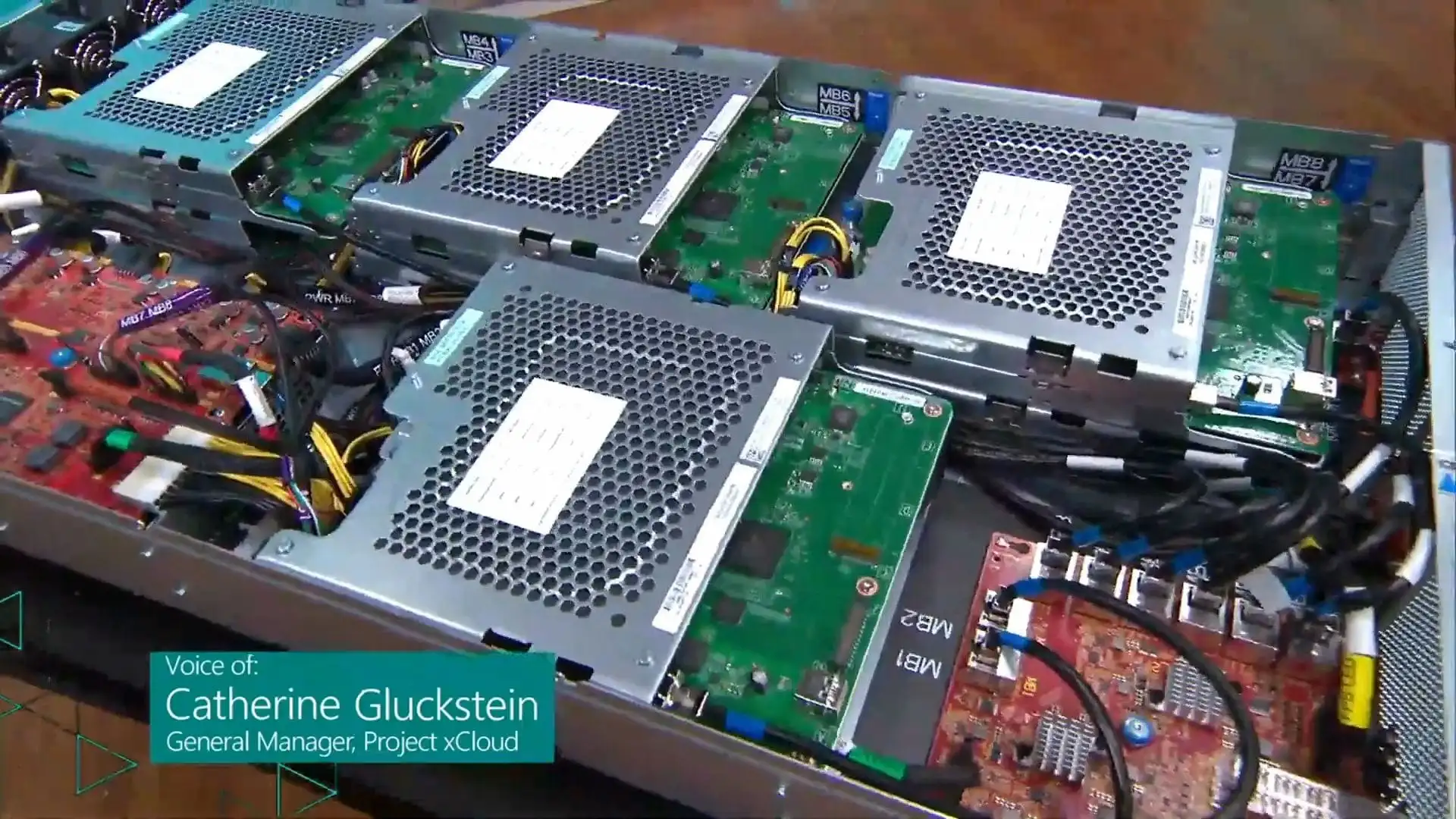

They should keep that streaming bullshit to those countries and offer download-only to other countries if they seriously want more exposure. Streaming is an expensive feature that is useless to most gamers, so cutting that expense and moving to a fully downloadable rental model would leave both companies with more money to spend on bringing better-quality games to their services, instead of dedicating $400-500+ of hardware to each streamer.

Hopefully everything is going to be available soon™