MarkMe2525

Member

uhhh didn't mean to reply to this.. my bad!!

What?

They're doing the exact same job and getting paid exactly the same. One can only do it with a little less strength.

No one can be sure unless they are a programmer working on the consoles. But the Hole in My Head blog probably has the best next-gen tech articles, I think, including the one on how he thinks the Xbox RAM will work with its wider and narrower bus setup. Here is his part 11:

http://hole-in-my-head.blogspot.com/2020/08/analyse-this-next-gen-consoles-part-11.html

I appreciate the warning. Much like you, I've been on these forums since well before the split, so I am well aware of the biases that GAF holds. The thing some forum users don't keep in mind is the biases that reside with developers as well. If a Sucker Punch developer says that a PlayStation product is the best, you have to weigh the obvious implied PR, same if it came from Ninja Theory and the Xbox. You either look at them all with skepticism or take them all at their word.

You have to be very careful. People with a committed point of view tend to cherry-pick from the info that they think supports their case, leaving out what doesn't. The fact that they spend time doing so doesn't say anything about how true the information they present is.

Well, this is what he wrote......

Seems pretty clear to me what he's saying.......

So, yeah.... you misspoke.

Wait for DF analysis, holiday line-up and actually see games running on XsX..that shit will make you humble, facts!!

Tired of this bs, but at the end of the day, we're at SonyGAF, after all.

We are waiting. For a whole year now. Nothing so far. Not a good look. LMAO.

The main reason I would assume AVX2 is the decompressor on the XsX, is that (AFAIK) you get one unit per core, and if they are using a 10th of a CPU core, it is the most capable feature to do the job (IMO).

Your GPUdirectstorage point is interesting, but how does that work in regards to the very big indication that the XsX is really using lots of decompression tricks with BCpack - that uses zlib with RDO? The CPU would either have to decompress on the CPU core as we were told (IIRC) and write back the decompressed BCpack data to a temporary disk store, prior to the GPU requesting it uncompressed, directly - which will cost bandwidth/s on each read/write, or they'd need to have CUs in the GPU do the zlib decompression to make the BC files ready to use.

I know it was suggested that BCpack may include a random access ability to partially decompress BCpack to BC blocks. After researching, I worked out that current random access for zlib has an overhead of 64KB per access, which is expensive for a potentially 6:1 compressed 4x4 pixel BC1 block that is 8 bytes compressed, or 48 bytes uncompressed, on access.
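As a rough sanity check on those sizes, here is a small sketch. It assumes uncompressed RGB at 3 bytes per pixel and takes the 64KB per-access figure from the post at face value; the constant names are made up for illustration.

```python
# Rough arithmetic for one BC1 block versus a 64KB random-access overhead.
# Assumptions: uncompressed RGB at 3 bytes per pixel; the 64KB figure is the
# per-access overhead quoted in the post, not a measured value.

BLOCK_W, BLOCK_H = 4, 4            # BC1 encodes 4x4 pixel blocks
BYTES_PER_PIXEL_RGB = 3            # assumed uncompressed format
BC1_BLOCK_BYTES = 8                # one BC1 block is 8 bytes
ACCESS_OVERHEAD_BYTES = 64 * 1024  # per-access overhead from the post

uncompressed_bytes = BLOCK_W * BLOCK_H * BYTES_PER_PIXEL_RGB
compression_ratio = uncompressed_bytes / BC1_BLOCK_BYTES
overhead_vs_bc1 = ACCESS_OVERHEAD_BYTES / BC1_BLOCK_BYTES

print(uncompressed_bytes)  # 48
print(compression_ratio)   # 6.0
print(overhead_vs_bc1)     # 8192.0
```

Under those assumptions, a single-block access would pull in several thousand times more data than the block itself, which is the cost the post is pointing at.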

Right now you have either theory or PR. The people who can speak with authority, meaning they have dev kits, are all under NDAs. So believe what you will. Anyways... I'm moving on...

So, it's not a blind read. A Crytek engineer, for example, said Series X could push more pixels on screen because it has more CUs. He mentioned other Series X advantages, like hyperthreading in the CPU. Of course he could be saying wrong things, but he's clearly not a Sony lawyer.

I read these "researcher users" posts, and there are many interesting points. But many of these words do not come from practical experience. We could theorize many things, but only software developers can really speak to the real point. I read tweets and interviews from Matt Hargett, Jason Ronald, Mark Cerny, James Stanard and many others to find some answers.

It's about discovering the truth, not trusting someone who says what you want to hear.

Well thats just stupid.

Games that weren't designed with the Series X as the primary focus.

If I play PS1 Ridge Racer on PS4, I guess thats PS4 gameplay........ you got to be kidding me.

This is incorrect, Mesh shaders run on the Video Card (software is a reference to the programmability of the mesh shaders).

Yeah, I just watched a good portion of the video, very interesting stuff. However the method he uses to achieve the culling is entirely different from how the PS5 is handling it. In the demo, he achieves the culling through use of "meshlets" or "mesh shaders" and this is done at a software level not hardware and it's impressive. However there are costs to this method, which he details in the video. I'm not going to begin typing them out since it's stuff I don't know too much about and there's way too much technical jargon lol

The PS5 method of handling the different types of culling is done through allowing full control of the "traditional" graphics pipeline and this is achieved on a hardware level through the GE. The engineer in the technical video states that it's "tough" beating hardware accelerated methods (which PS5 has btw) and that you should expect the hardware (Series X hardware he is referring to, I think) to handle back-face, zero area and frustum culling.
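For anyone unfamiliar with the terms, back-face and zero-area culling boil down to a sign test on a triangle's screen-space area. Below is a minimal, purely illustrative sketch. It says nothing about how either console's hardware or any mesh shader actually implements it, the function names are hypothetical, and it assumes counter-clockwise winding means front-facing with the y axis pointing up. Frustum culling is left out.

```python
def signed_area_2d(a, b, c):
    """Twice the signed area of screen-space triangle abc (2D cross product)."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1])


def cull_reason(a, b, c):
    """Return why a triangle would be culled, or None if it is kept.

    Assumption: counter-clockwise vertices are front-facing (y axis up).
    """
    area = signed_area_2d(a, b, c)
    if area == 0:
        return "zero-area"   # degenerate triangle, covers no pixels
    if area < 0:
        return "back-face"   # clockwise winding, facing away from the camera
    return None              # front-facing, passed on to the rest of the pipeline


print(cull_reason((0, 0), (1, 0), (0, 1)))  # None
print(cull_reason((0, 0), (0, 1), (1, 0)))  # back-face
print(cull_reason((0, 0), (1, 1), (2, 2)))  # zero-area
```

The earlier a triangle is rejected by a test like this, the less work the later pipeline stages have to do on it.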

So what's the difference? Judging from the information in the video, achieving culling through use of meshlets can be tough depending on what type of culling you want to implement. The hardware-based method is easier, with fewer costs. So the question is how effective is the Series X hardware at things like culling, and does it allow developers full control of the rendering pipeline? I don't know, as Microsoft hasn't revealed anything about it yet. Thic mentioned that the Series X GPU does have a multi-core command processor unit, so maybe that will allow it. As of now PS5's method seems more straightforward and easier, but I could be wrong. Maybe someone with more knowledge like @geordiemp could elaborate?

Btw you can watch the video here.

Yes, desperately trying to drive the narrative to fit your personal agenda is stupid. The Series X-only titles are still in the making.

No... just no.

This is what he REPLIED to, get it right:

Read the whole book, not just the cover. He replied directly to the comment about DF multiplatform comparisons coming this holiday when both XSX and PS5 are on store shelves and both have retail games running that can be compared.

The time machine comment is funny as hell and totally appropriate.

Not sure if serious lmao

For PS5 Pro?

That's all that needed to be said, the rest was fluff.

So you guys are arguing about tech you haven't seen in action and trying to tell people they will look amazing?

Then when people ask you to provide proof of this amazing tech outclassing everything, you say they should wait for DF?

Yeah sure..... Didn't know Phil Spencer had so many clones.

Tells me to read the whole book........ proceeds to post only the first page.

Uh huh.....

Right. Best to only take those heavily invested in Xbox seriously.

Imo NXGamer is heavily invested in Playstation, so I would take his statements with a grain of salt.

Yes the culling happens early in the pipeline but the mesh shaders do have trade offs, he literally talks about it in the video, one example being high attribute shading cost. He also goes on to state it's hard to beat fixed function hardware blocks which handle the vertices/triangles (which are present in the PS5 and Series X).

This is incorrect, Mesh shaders run on the Video Card (software is a reference to the programmability of the mesh shaders).

Furthermore, this culling happens at the beginning of the pipeline, making it more efficient, since the rest of the graphics pipeline never has to spend time processing culled geometry.

Sony has not indicated that the PS5 is using Mesh shaders,

if culling is done on the primitive shaders it's less efficient than mesh shaders, as it is further down in the graphics pipeline and would have taken up more processing time along the pipeline.

Newsflash - consoles are now closed box PCs, built from PC parts. The times of exotic unknown alien tech are long gone, and it's not the first time that's happening: we had no less than 4 consoles in the past 7 years that were designed like that, where we could easily compare all 4 as well as with various PC configs. Not only that, we already saw quite a bunch of upcoming next-gen titles, which are basically PC High-Ultra settings plus RT effects here and there, and those titles are even starting to post their requirements, which again clearly indicate what to expect.

We're talking about a specific comment, aka the book, not the entire series lol.

Focus Daniel San!

No no no no no.

It's very simple.

You lot are speaking as if you worked on the consoles and are there designing each and every game.

Enough of that. You guys don't really know jack about how these games will look and perform until someone tells you what you are actually looking at and what to look for. Instead of running around this forum (and this is to BOTH sides) telling everybody how unreal and how amazing this is going to look and what this console can do and what this console can't, how about you just sit and wait?

It wasn't even over a month ago where this forum was told every hour about how amazing Halo would look............ and then we got that.

You don't know. You keep telling everybody, but you don't know and have no proof.

Just wait.

They're closer to the new 4700/4800 CPU portion of the APU's for Laptops, than the desktop 3700/3800 CPU's.

I don't know.. seems pretty much like common sense that a faster more capable machine is going to facilitate making better looking, better running games. Companies like Naughty Dog are going to have some amazing looking games. I know this because they already have amazing looking games on significantly lesser hardware.

NXGamer definitely knows his stuff (I think his stuff is a bit too in-depth which is why his channel has struggled. DF is much more mass market in its terminology and presentation etc). I definitely appreciate him though.

He definitely has a preference for Sony esp their first party games though and he fucking hates Nintendo lol (some of the lengths he goes to in a video to downplay their role in helping to save the industry in the 80’s was laughable). He was definitely a SEGA kid

It does him well to praise Sony as they are the market leader, provide him with free code and now and again their developers shout him out on Twitter. Their games are definitely impressive too, so he’s not telling any lies, but he does go a bit overboard sometimes while not giving the same credit or plaudits to the big Xbox and even Nintendo games. Why would you upset that kind of relationship?

some insight courtesy of DF on the audio components found in both systems.

When compared to the RX 5700 XT, XSX's on-chip L0/L1 cache and instruction queue storage are larger because of the higher CU count, and they are backed by a 5 MB L2 cache. The RX 5700 XT has a 4 MB L2 cache.

The same goes for 3D audio, which has made me think a lot about marketing and presentation. Sony made a remarkable pitch for a revolution in 3D audio with PlayStation 5 with its Tempest Engine, talking about hundreds of audio sources accurately positioned in 3D space - yet Microsoft has essentially made the same pitch with its own hardware, which also has the HRTF support that the Tempest Engine has. Microsoft hasn't made any specific promises about mapping 3D audio to the individual's specific HRTF, but then again, Sony hasn't really told us how it plans to get that data for each player.

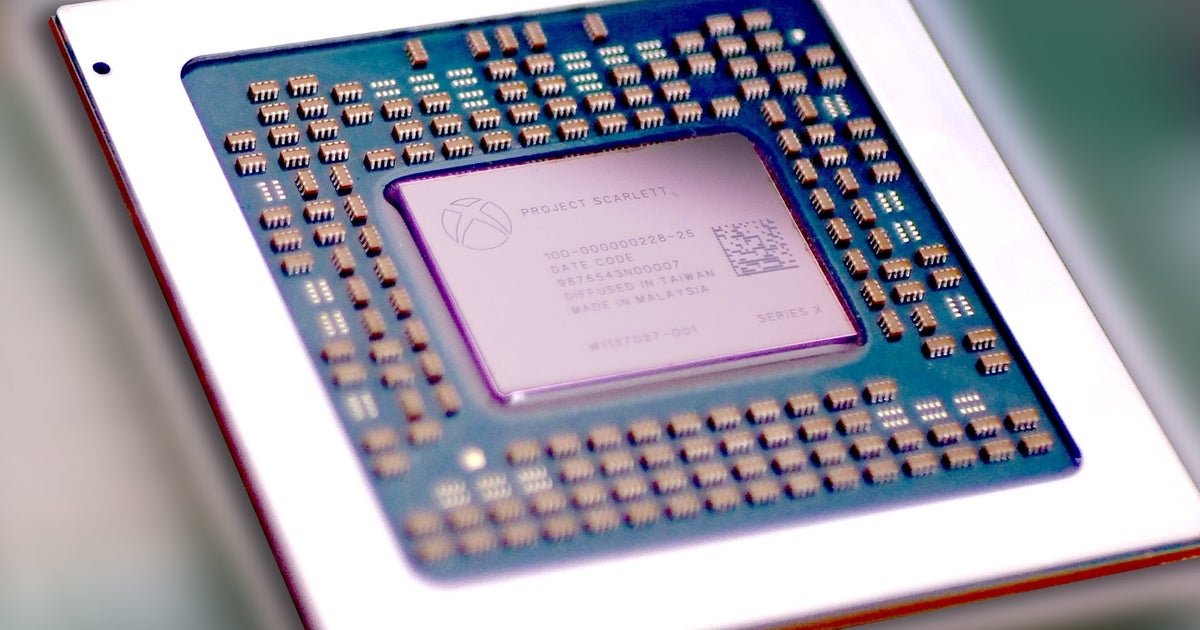

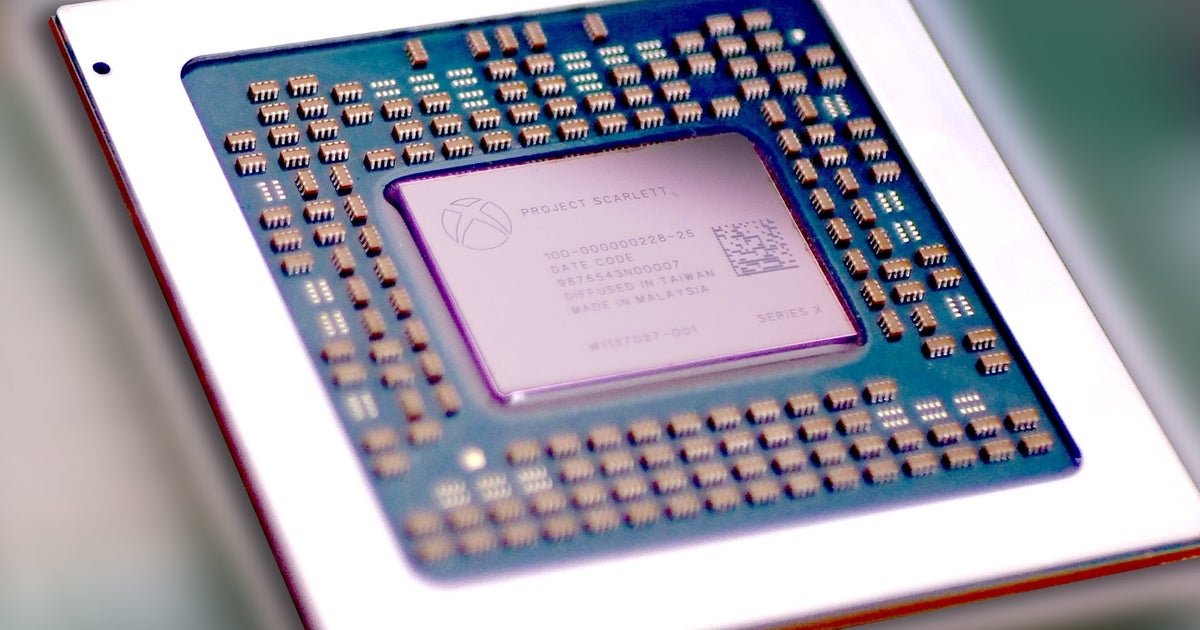

Xbox Series X silicon: the Hot Chips 2020 breakdown (www.eurogamer.net)

Keep in mind that that includes an equal amount of read and write, and I am willing to bet that the SSDs on both consoles will be of the type that is significantly faster at reading than writing.

If you break down the transfer speed of the Quick Resume feature for Forza Horizon 3, 18 GB over the course of 6.3 seconds, that actually breaks the per second raw bandwidth for the drive down to 2.857 GB/s.

So it does look like MS's numbers were conservative on the raw and compressed speeds of the drive after all. But like also said in the video, games have to be developed with XvA in mind (so probably a slight learning curve there, probably more so than with Sony's solution but I'd probably say not by a huge amount).
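The division behind that figure is easy to check. This is only a sketch of the arithmetic using the two numbers quoted above (18 GB, 6.3 seconds); the function name is made up, and it does not account for how much of the transfer was reads versus writes.

```python
def raw_bandwidth_gb_per_s(total_gb: float, seconds: float) -> float:
    """Average throughput for a transfer of total_gb taking the given seconds."""
    return total_gb / seconds


# Figures quoted in the post for the Quick Resume swap.
quick_resume_bandwidth = raw_bandwidth_gb_per_s(18, 6.3)
print(round(quick_resume_bandwidth, 3))  # 2.857
```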

Seems Series X audio system is comparable in power to PlayStation 5's

BCPack isn't a GPU friendly format, because as the jokey Xbox engineer tweet said (paraphrasing, of course), it is block compression (zlib (any flavour) guided by RDO stats) of block compression textures BCn, but we didn't want to call it that.

AFAIK, zlib and RDO are used alongside BCPack (or can be), not that BCPack is necessarily those things? It's similar to PS5 utilizing Oodle Texture alongside RDO. BCPack is being designed specifically for GPU-bound textures, so I think it would be logical to assume DirectStorage/GPUDirectStorage take this into consideration with the points you raise. FWIW MS have only said that 1/10th of a CPU core is used for XvA, this is probably 1/10th of the OS core, and they never said decompression work is done on this core. After all, it has decompression hardware in the APU itself where the decompression actually takes place, similar to PS5.

If the GPU is pulling in the texture files in a GPU-friendly format (BCPack) directly from storage, then the GPU is likely also handling the decompression for those files. That is if it even needs to decompress the files for use. We don't necessarily know where the decompression block is in the Series X, but we can assume it's within the I/O block, and if you look here:

.....

It is according to DF.

Seems Series X audio system is comparable in power to PlayStation 5's

Comparing the Series X audio to Tempest itself it's actually the more "powerful" of the two in terms of raw performance and it supports virtually all of the same features Tempest does.

Thanks for the reply. So what percent of performance of a 3700x? Like 80%?

I’m bemused at the likes of AC Valhalla and WD Legion being 30fps with those CPU’s... Like wtf are they doing with all that 4x CPU compute performance gain. I can understand 30fps next gen only games but I really expected the cross gen games to be 60fps.

I think you are comparing apples and oranges here and by that same token PS3 was 2 TFLOPS with nVIDIA counting fixed function HW.

The numbers are there, 20-24 FP ops/cycle on the programmable DSP XSX has and 64 FP ops/cycle on Tempest running at GPU clocks (fully programmable + very likely PS4 like less flexible Sound DSP’s).

I am not sure we would be keen to people twisting PS5 numbers to somehow make it appear to have higher FLOPS rating for its GPU than it does, so not sure why like with the SSD’s and the XVA+SFS somehow the numbers kind of magically tell a suddenly different and inverted story.

Also, bus contention issues would be similar across designs... not sure why it is such a hot topic, as if we did not have essentially multi channel ring busses or a more mesh like interconnect fabric allowing multiple peripherals to share bandwidth without having to own and release the bus and local storage/buffers cover a lot of that latency too (and even then you had various ways to trade off speed with impact on the shared single bus).

On PC the application performance between renoir (4000 APUs) and Matisse (3000 CPUs) is about the same unless it is a cache heavy app.

In gaming renoir sits between the 2000 series and the 3000 series when all are running the same memory config.

Low latency memory really helps both do better so the performance in the consoles will depend what speed the Infinity Fabric is running at and the memory timings.

If Sony were to tune the memory timings and MS don't, it would more than offset the 100 MHz difference in CPU clockspeed.

On the

I'm going by the raw performance comparisons both Sony and MS have provided: Sony compared Tempest raw performance to a PS4 CPU, MS compared theirs to One X CPU. PS4 CPU is around 102.4 GFLOPs, One X's CPU is around 137.2 GFLOPs. These are chips Sony and MS decided to compare their audio solutions to, so that is with

The DSP they quoted as programmable is the one I quoted the FP ops/cycle for and the one that compares to Tempest (in the sense that PS2’s GS was flexible but not freely/fully programmable in any modern sense of the term, or think about Flipper’s TEV, and NV2A shaders and their predecessors the register combiners before were programmable).

You can take that slide that way you are doing and I can state PS3 was a 2 TFLOPS machine because that is what Sony and nVIDIA stated in a slide too... no circling around that point.

You are also under-quoting Tempest’s number with the lower simplified estimated bound Cerny gave: 64 FP ops/cycle * 2.23 GHz = 142.72 GFLOPS, and again you are comparing fixed function HW in the mix vs the Tempest compute unit, which MS did deliberately to get a figure that compared more favourably to Tempest. That is not unlike how they were selling or trying to sell ESRAM bandwidth + DDR bandwidth as something that added up together to dwarf PS4’s bandwidth. I am sure there are possibly even slides with that written on them.
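The peak figure above is just ops per cycle times clock. A quick sketch of that arithmetic follows, with a hypothetical helper name; it assumes the unit really runs at the 2.23 GHz GPU clock, and the 1.0 GHz line is a made-up value that only shows how the result scales if the clock were different.

```python
def peak_gflops(fp_ops_per_cycle: float, clock_ghz: float) -> float:
    """Theoretical peak: FP ops per cycle multiplied by clock in GHz gives GFLOPS."""
    return fp_ops_per_cycle * clock_ghz


# Figures quoted in the post: 64 FP ops/cycle at 2.23 GHz.
tempest_peak = peak_gflops(64, 2.23)
print(round(tempest_peak, 2))  # 142.72

# Hypothetical clock, not a real spec: the peak scales linearly with frequency.
print(peak_gflops(64, 1.0))  # 64.0
```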

Your Tempest number assumes the engine is running at the same clock as the GPU, but Sony have already said it's its own separate chip and not part of the GPU. Therefore there's no guarantee it runs at the GPU's clock speed and they actually have never clarified that.

The Tempest engine itself is, as Cerny explained in his presentation, a revamped AMD compute unit, which runs at the GPU's frequency and delivers 64 flops per cycle.

....

Is there a link to this?

From DF’s satellite interview with Cerny:

PlayStation 5 uncovered: the Mark Cerny tech deep dive (www.google.co.uk)

This was a few minutes search, not like I scoured the web for this by the way. I think and always stated that Cerny never boasts even when he could twist the numbers to make them seem even more impressive than they are, but that is beside the point.

PaintTinJr Appreciate the briefing. Mind parlaying this roundabout into your general point regarding GPU/CPU decompression and BCPack you were talking about earlier?

We have had audio hardware acceleration in every console for years and it is not really used the way that was promised. E.g. Shape had truly remarkable power for that time. Something similar was in PS4 and now this is just used for some stereo headphones virtual 3D effects.

Seems Series X audio system is comparable in power to PlayStation 5's

Yeah, the point is that for BCpack to be anything more than just BCn (in comparison to a competitor solution), the decompression needs to happen in a place that the GPU can use the uncompressed BCn data instantly.

I did read/skim through some of the guy's other tweets, and the 64KB block size is mentioned in regards to mipmap pages (for SFS), so it does look like they are intending to use random access zlib decompression, which removes the need to unpack BCn files fully. That sounded like a hardware solution in one of the tweets, however he said he's continuing to work on the DXTC in another, and there were tweets I remember from the next-gen thread where he claimed they could get more compression out of BCpack than the stated figures as they improve the algorithm, so that instantly says it is software based, but probably on the GPU side. He also mentioned multiple code paths, with AVX2 getting a mention, but I'm not sure if that's more DX on PC.

To be honest, I can't really tell anymore if their strategy is zlib decompression to the 6GB through the CPU, or random access zlib decompression from the 10GB using SFS on the GPU. There was a tweet saying they can't publicly disclose more, but are sharing the info with Xbox developers. My hunch now is that they are using CUs for the BCpack decompression, and using AVX2 for standard zlib decompression into the 6GB for non-texture data.

So we're here now? We can't bring ourselves to say that Series X is more powerful so we must redefine our definitions so that PS5 is both 'exactly the same' while simultaneously being 'a little less' powerful?

What?

They're doing the exact same job and getting paid exactly the same. One can only do it with a little less strength.

I don't think I want any of that, but thanks.

PS5 and XSX both use GDDR6, which has a lot of bandwidth but more latency compared to DDR4 on PC.
