-

Hey, guest user. Hope you're enjoying NeoGAF! Have you considered registering for an account? Come join us and add your take to the daily discourse.

You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

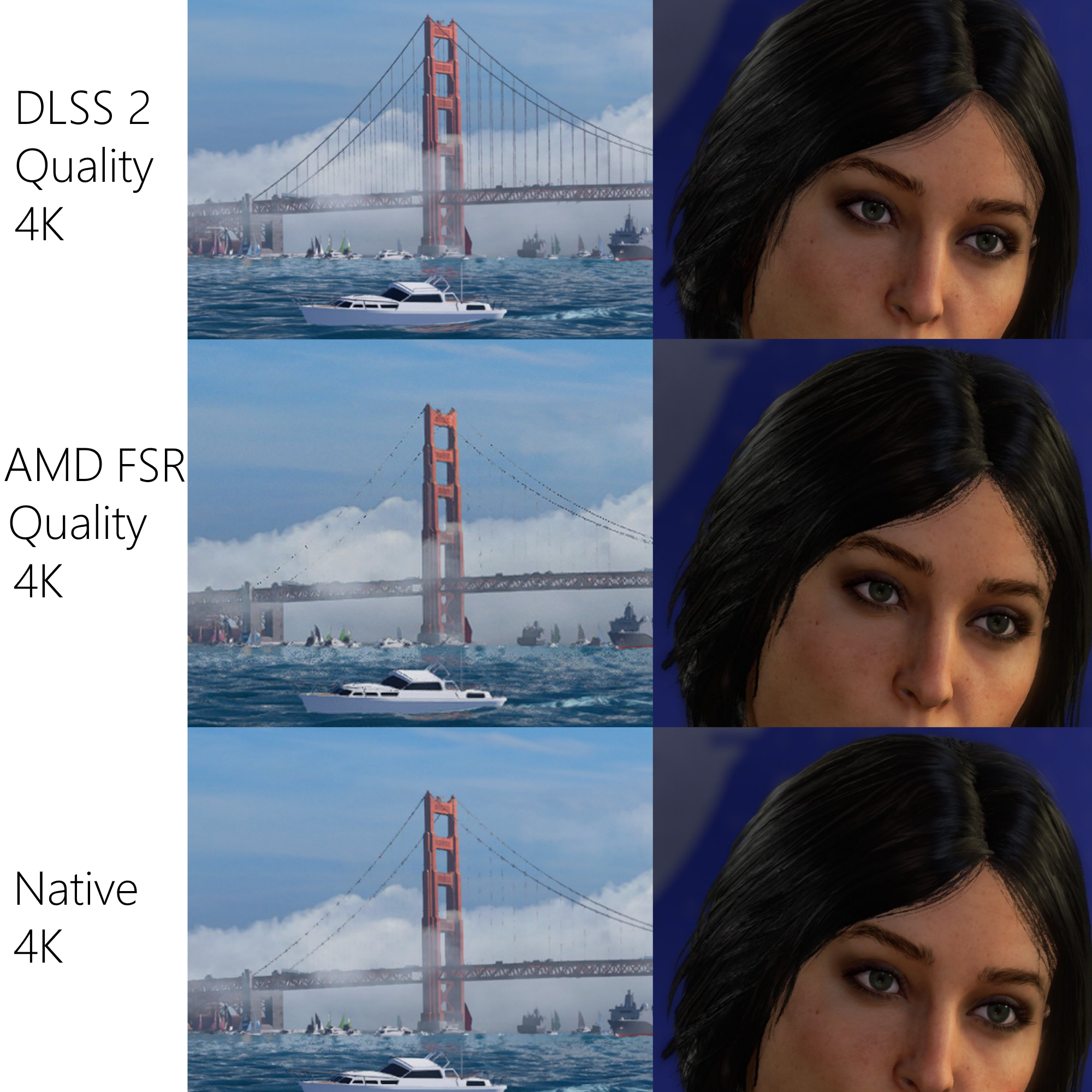

DLSS vs. FSR Comparisons (Marvel's Avengers, Necromunda)

- Thread starter Md Ray


Md Ray

Member

> Wow DLSS is just bitch slapping FSR!

It's even bitch slapping native rendering.

Pagusas

Elden Member

> Have they fixed all artifacts/bugs yet?

I'd say 95% of artifacts and 99% of bugs are fixed. On rare occasions you can get ghosting/trails, but even those got fixed with the latest DLL. I've only encountered one bug in the last 3 months playing DLSS games.

Md Ray

Member

> DLSS being better than native is crazy to me.

> Have they fixed all artifacts/bugs yet?

Version 2.2.6 of DLSS in Rainbow Six was, IMO, virtually perfect in every single way. The ghosting and trails were completely gone in this version.


Bernd Lauert

Banned

DLSS is already incredible. A couple years down the line it's gonna be basically perfect. AMD needs to do something similar; FSR can't get much better without motion vectors.

TyphoonStruggle

Member

> DLSS is already incredible. A couple years down the line it's gonna be basically perfect. AMD needs to do something similar, FSR can't get much better without motion vectors.

Once AMD implements motion vectors in FSR, they're gonna have ghosting problems. Then the AMD fanboys will love ghosting.

Insane Metal

Member

Deep learning looks better than a sharpening filter. Shocking.

FSR is a lazy effort by AMD


Bonfires Down

Member

I can't see any difference in the Necromunda video, so I'd just put it on FSR to get those extra few frames. Impressive work by AMD, and I'm not sure why there was so much negativity around it.

The bridge pics, sure DLSS has the advantage, but not all games use vistas like these. And FSR still compares admirably to native res.


Bernd Lauert

Banned

> I can't see any difference in the Necromunda video so I'd just put it on FSR to get those extra few frames. Impressive work by AMD and I'm not sure why there was so much negativity around it.
>
> The bridge pics, sure DLSS has the advantage, but not all games use vistas like these. And FSR still compares admirably to native res.

The negativity comes mainly from DLSS being much better. If DLSS didn't exist, FSR would be a nice feature, but in comparison it's just very lackluster, unfortunately.


Md Ray

Member

> I can't see any difference in the Necromunda video so I'd just put it on FSR to get those extra few frames. Impressive work by AMD and I'm not sure why there was so much negativity around it.
>
> The bridge pics, sure DLSS has the advantage, but not all games use vistas like these. And FSR still compares admirably to native res.

Because of the dark, low-contrast images in Necromunda. Also, YT compression butchers detail even further. The Avengers screenshots are direct captures tho.

Iamborghini

Member

> Once AMD implements motion vectors into FSR they're gonna have ghosting problems. Then the AMD fanboys will love ghosting.

FSR already has ghosting because it uses the game's TAA.

Fredrik

Member

This is crazy. Somebody needs to explain how DLSS works. How can it look better than native res?

JimboJones

Member

> I can't see any difference in the Necromunda video so I'd just put it on FSR to get those extra few frames. Impressive work by AMD and I'm not sure why there was so much negativity around it.
>
> The bridge pics, sure DLSS has the advantage, but not all games use vistas like these. And FSR still compares admirably to native res.

I'm guessing Necromunda has decent TAA?

FSR will simply scale the image; it's not an AA solution itself. It depends on whatever the engine or developer has already implemented.
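The point that FSR 1.0 "simply scales the image" can be illustrated with a minimal sketch. FSR 1.0's real upscaling pass (EASU, Edge-Adaptive Spatial Upsampling) is far more sophisticated; plain bilinear interpolation is swapped in here as a stand-in, and the function name `bilinear_upscale` is hypothetical. What both share is that every output pixel is computed only from the current frame's already-rendered (and already anti-aliased) pixels, so whatever aliasing or TAA blur is in that frame carries straight through.

```python
import math


def bilinear_upscale(img, out_w, out_h):
    """Upscale a grayscale image (list of rows) using only its own pixels."""
    in_h, in_w = len(img), len(img[0])
    out = []
    for oy in range(out_h):
        # Map the output pixel center back into input pixel coordinates.
        sy = (oy + 0.5) * in_h / out_h - 0.5
        y0 = min(max(int(math.floor(sy)), 0), in_h - 1)
        y1 = min(y0 + 1, in_h - 1)
        fy = min(max(sy - y0, 0.0), 1.0)
        row = []
        for ox in range(out_w):
            sx = (ox + 0.5) * in_w / out_w - 0.5
            x0 = min(max(int(math.floor(sx)), 0), in_w - 1)
            x1 = min(x0 + 1, in_w - 1)
            fx = min(max(sx - x0, 0.0), 1.0)
            # Weighted average of the four nearest input pixels.
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


if __name__ == "__main__":
    # A hard edge at low resolution becomes a soft ramp; nothing from
    # earlier frames is available to refine it.
    low = [[0.0, 1.0], [0.0, 1.0]]
    for row in bilinear_upscale(low, 4, 4):
        print(row)  # each row: [0.0, 0.25, 0.75, 1.0]
```

A temporal technique (TAAU, DLSS) differs precisely in that it also feeds samples from previous frames into each output pixel.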

Sean Mirrsen

Banned

> This is crazy. Somebody needs to explain how DLSS works, how can it look better than native res?

DLSS is an AI reconstruction technique. It's machine "deep learning" with some really complex math involved, which is why it works best on hardware dedicated to AI tasks, i.e. Tensor cores. It's basically the equivalent of pummeling a self-adjusting image-manipulation algorithm with sequences of high- and low-resolution images with motion data until it can reliably convert one into the other.

The short explanation for why the quality is so high is that the DLSS sparse network uses 16K-resolution images for the learning process. Basically, at any detail level, it tries to recreate an image as if it were a 16K image scaled down to whatever the render resolution is. It doesn't actually do that, but that's what the output works out to. It's taught to make images at 16K, but if it's only asked to do 1080p, it'll stop at 1080p, still with as much detail as it can reconstruct from the data it has, and as close to that 16K target as possible.

Armorian

Banned

> It's even bitch slapping native rendering.

More like a shit implementation of TAA; native shouldn't look like that. FSR looks shittier than it should because of that.

But yeah, amazing work from DLSS in Avengers.


DonJuanSchlong

Banned

> It's even bitch slapping native rendering.

> Version 2.2.6 DLSS of Rainbow Six, IMO, was virtually perfect in every single way. Those ghosting and trails were completely gone from this version.

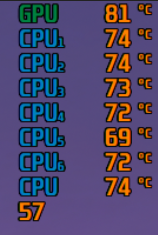

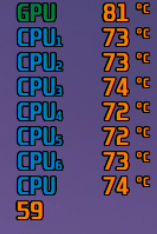

Around a 3% loss in performance with DLSS, for vastly superior image quality over FSR, only makes DLSS look even more appealing. God damn.

> What AMD needs is an AI/ML-based upscaling/reconstruction technique, and they need it soon if they want to be on par with NVIDIA's DLSS.

Finally we can agree on something.

Kuranghi

Member

It's awesome how DLSS makes the hair look better than native. I wonder how native with TAA + sharpening would compare. Does the Avengers engine have TAA/TAAU?

edit -

> More like shit implementation of TAA, native shouldn't look like that. FSR looks shittier than it should because of that.
>
> But yeah, amazing work from DLSS in avengers.

So is that native shot definitely with TAA on? If yes, then I'd like to see the native + TAA image with sharpening. I would rarely use TAA without sharpening to reclaim the lost detail.


Armorian

Banned

> It's awesome how DLSS makes the hair look better than native. I wonder how native with TAA + sharpening would compare. Does the Avengers engine have TAA/TAAU?
>
> edit -
>
> So is that native shot definitely with TAA on? If yes, then I'd like to see the native + TAA image with sharpening. I would rarely use TAA without sharpening to reclaim the lost detail.

Those details can't be recovered; something in their TAA implementation kills them. DLSS works without TAA, so it has all that stuff:

Native + TAA

DLSS Q

Even the fence looks much better

Kuranghi

Member

> Those details can't be recovered; something in their TAA implementation kills them. DLSS works without TAA, so it has all that stuff:
>
> Native + TAA
>
> DLSS Q
>
> Even the fence looks much better

Yeah, it has awful handling of transparencies, which makes it borderline pointless, since that's where so much of the aliasing is going to be.

I think when they do sharpening + TAA properly, it's usually pre-sharpening to avoid sharpening the artifacts. I think that's how UE4 works, but I'm not really sure.
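The sharpening argument above can be illustrated with a generic 3-tap unsharp mask on a single row of pixels. This is an assumed, simplified filter, not FSR's RCAS pass or any particular engine's TAA sharpening, and `unsharp_mask` is a hypothetical name. It shows both sides of the exchange: sharpening steepens an edge that TAA has softened, but a detail that has been blended into a flat region (say, a thin wire averaged into gray) has no local contrast left to amplify, so it cannot be brought back.

```python
def unsharp_mask(row, amount=0.5):
    """Sharpen one row of pixel values in [0, 1] with a 3-tap unsharp mask."""
    n = len(row)
    out = []
    for i in range(n):
        # Border pixels reuse themselves as the missing neighbor.
        left = row[max(i - 1, 0)]
        right = row[min(i + 1, n - 1)]
        blurred = (left + row[i] + right) / 3.0
        # Add back a scaled copy of the detail that the blur removes.
        value = row[i] + amount * (row[i] - blurred)
        out.append(min(max(value, 0.0), 1.0))
    return out


if __name__ == "__main__":
    soft_edge = [0.0, 0.0, 0.25, 0.75, 1.0, 1.0]  # an edge softened by TAA
    lost_wire = [0.5] * 6                         # a wire averaged into flat gray
    print(unsharp_mask(soft_edge))  # the edge gets steeper
    print(unsharp_mask(lost_wire))  # unchanged: nothing left to recover
```

Because sharpening only amplifies differences that survive in the frame, its result depends entirely on what the TAA pass left behind.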

bender

What time is it?

DLSS being better than native is crazy to me.

bender

What time is it?

> OK.. I can see the difference... but it's really small, and I have to see them side by side..
>
> Is that what we've come to? I'm getting old, back in the day any improvement was a huge difference.
>
> This is tepid..

Just look at the bridge guidewires.

//DEVIL//

Member

I have a question (I didn't watch the video yet):

why are they showing the Quality FSR setting rather than the Ultra Quality one? AMD has one mode above Quality.

Edit: just watched the first 3 minutes. He is using Ultra Quality, and I honestly can't tell the difference on my 2K monitor. Maybe when zoomed in?

Anyway, I have no doubt that DLSS is the better option for now, but honestly not by much. And seeing how the community is modding games like GTA 5 to support FSR, I would say that if AMD can push to get FSR into the upcoming big games and improve it a little, they have a winner.


TyphoonStruggle

Member

> OK.. I can see the difference... but it's really small, and I have to see them side by side..
>
> Is that what we've come to? I'm getting old, back in the day any improvement was a huge difference.
>
> This is tepid..

That's okay. Just game at 1080p. Wish I was as blind as you; then I could be happy with a 3050 Ti.

LiquidMetal14

hide your water-based mammals

Absolutely sons of the bitches stunning results

Fredrik

Member

> The short explanation for why the quality is so high, is that the DLSS sparse network uses 16K resolution images for the learning process. Basically, at any detail level, it tries to recreate an image as if it were a 16K image, scaled down to whatever the render resolution is.

Thank you!

martino

Member

> I have a question (I didn't watch the video yet)
>
> but why they are showing the quality FSR setting and the ultra quality one? AMD has 1 higher than just Quality.
>
> Edit. just watched the first 3 minutes. he is using ultra quality. and I honestly can't tell the difference on my 2k monitor. maybe when zoomed in?
>
> Anyway I do not have doubt that DLSS is for now a better option. but honestly not by much. and seeing how community are modding their games to support FSR like GTA 5 and others, I would say if AMD can push to have FSR in the upcoming big games and improve it a little, they have a winner.

If I'm correct, DLSS Quality's base resolution is equivalent to FSR's Quality setting (66% of the target resolution on each axis).
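The resolution comparison above can be checked with simple arithmetic. The per-axis factors below are AMD's published FSR 1.0 presets (Ultra Quality 1.3x, Quality 1.5x, Balanced 1.7x, Performance 2.0x) alongside the commonly cited DLSS 2.x ratios (Quality about 66.7% per axis, Performance 50%); the 3840x2160 target, the function names, and rounding to the nearest pixel are assumptions for illustration. Because the factor applies to both axes, "66% of the target resolution" works out to only about 44% of the native pixel count.

```python
# Per-axis scale factors (target size / render size).
# FSR 1.0 values are AMD's published presets; DLSS values are the commonly
# cited DLSS 2.x ratios (Quality ~66.7%, Performance 50% per axis).
SCALE_FACTORS = {
    "FSR Ultra Quality": 1.3,
    "FSR Quality": 1.5,
    "FSR Balanced": 1.7,
    "FSR Performance": 2.0,
    "DLSS Quality": 1.5,
    "DLSS Performance": 2.0,
}


def render_resolution(target_w, target_h, mode):
    """Internal render size for a given output size and upscaling mode."""
    factor = SCALE_FACTORS[mode]
    # Rounding to the nearest pixel is an assumption; engines may round differently.
    return round(target_w / factor), round(target_h / factor)


def pixel_fraction(mode):
    """Share of native pixels actually rendered: the factor applies to both axes."""
    return 1.0 / SCALE_FACTORS[mode] ** 2


if __name__ == "__main__":
    for mode in SCALE_FACTORS:
        w, h = render_resolution(3840, 2160, mode)
        print(f"{mode:<18} {w}x{h} ({pixel_fraction(mode):.0%} of native pixels)")
```

At a 4K target, FSR Quality and DLSS Quality both render internally at 2560x1440, so any image-quality gap between them comes from the reconstruction, not from the input resolution.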

Mister Wolf

Gold Member

The good thing about FSR is that it can be implemented on consoles.

As long as I can play games running at 60fps with FSR Quality, DLSS doesn't matter.

FSR isn't even better than TAAU. Any game engine worth a shit should already be using TAA and have some form of temporal upscaling based off of it.

//DEVIL//

Member

> FSR isn't even better than TAAU. Any game engine worth a shit should already be using TAA and have some form of temporal upscaling based off of it.

Wrong

Azelover

Titanic was called the Ship of Dreams, and it was. It really was.

> Just look at the bridge guidewires.

I did... but I shouldn't need to do that, to be quite honest.

I've witnessed performance jumps way more significant than this... I guess I'm spoiled.

Rikkori

Member

The reason DLSS resolves some details better than native is simply that it's a different type of TAA, with pluses and minuses. On the plus side, you resolve details like wires better; on the minus side, you get more obvious ghosting. It's not "better" than native, it's just different, and therefore it will deliver better results in some games and for some elements (Avengers) than in others (RDR2). Since FSR simply tacks onto the already existing AA, it will inherit the artefacts of the native image.

For people mentioning TAAU instead of FSR: joke's on you, you can have both, as they have done for Arcadegeddon on PS5.

Remember also, it's called FSR 1.0 for a reason. Expect to see significant improvements before the year's end.

ML upscaling has a future, but not with Nvidia; you can consider DLSS a zombie tech at this point.

If you know you know.
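The ghosting trade-off described above can be sketched with the exponential history blend that temporal AA is built on: each frame, the stored history is mixed with the new frame as `history = (1 - alpha) * history + alpha * current`. The 1D scene, `alpha = 0.1`, and the function names are assumptions. To isolate the failure case, the history here is deliberately not reprojected, which mimics content whose motion vectors are missing or wrong (transparencies and particles are the usual suspects); real TAA and DLSS do reproject history using motion vectors.

```python
def render_frame(width, pos):
    """Hypothetical 1D scene: one bright pixel at `pos` on a black background."""
    frame = [0.0] * width
    frame[pos] = 1.0
    return frame


def taa_accumulate(frames, alpha=0.1):
    """Blend each new frame into the history without reprojecting it,
    mimicking content whose motion vectors are missing or incorrect."""
    history = list(frames[0])
    for current in frames[1:]:
        history = [(1 - alpha) * h + alpha * c for h, c in zip(history, current)]
    return history


if __name__ == "__main__":
    # The bright pixel moves one step right per frame, from x=0 to x=4.
    frames = [render_frame(8, pos) for pos in range(5)]
    result = taa_accumulate(frames)
    print([round(v, 3) for v in result])
    # → [0.656, 0.073, 0.081, 0.09, 0.1, 0.0, 0.0, 0.0]
```

The old position (x=0) ends up brighter than where the object actually is (x=4): a ghost trail. The same slow blend is what lets a static scene accumulate jittered sub-pixel detail like thin wires, which is the "plus" side of the trade.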


Thesuffering79

Banned

> The reason DLSS resolves some details better than native is simply down to it being a different type of TAA, with + & -. On the + you resolve details like wires better, on the - you get more obvious ghosting. It's not "better" than native, it's just different and therefore it will deliver better results in some games, and for some elements, (Avengers) than others (RDR2). Since FSR simply tacks onto the already existing AA it will inherit the artefacts of the native image.
>
> For people mentioning TAAU instead of FSR: joke's on you, you can have both, as they have done for Arcadegeddon on PS5.
>
> Remember also, it's called FSR 1.0 for a reason. Expect to see significant improvements before the year's end.
>
> ML upscaling has a future, but not with Nvidia, you can consider DLSS a zombie tech at this point.
>
> If you know you know.

Ghosting has been solved in DLSS. It was only there because the devs thought it looked cool, for some weird reason.

You are completely wrong about why DLSS looks better than native. DLSS does machine learning from 16K output, and that data is sent to retail versions. As time goes on, DLSS will look better and better as it learns more and more.

Rikkori

Member

> Ghosting has been solved on DLSS. It was only on there because the devs thought it was cool looking for some weird reason.
>
> You are completely wrong why DLSS looks better than native. DLSS does machine learning from 16k output and that data is sent to retail versions. As time goes DLSS will look better and better as it learns more and more over time.

Ghosting can't be solved; it's the nature of the technology (and of all temporal AA solutions that will ever exist). If you don't understand that, then you don't understand what the parameters of DLSS are. Go watch this a couple of times so you know:

Moreover, even if that weren't the case, since DLSS 2.0 the ML model is a generalised one rather than trained for each game individually, which means there's even less chance you'd hit that magic combination of getting all the weights & heuristics right.
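One common hand-tuned mitigation for TAA ghosting is neighborhood clamping: before blending, the history value is clamped to the minimum/maximum of the current frame's nearby pixels, so stale history that no longer matches anything on screen gets discarded. The sketch below is a generic TAA heuristic, not a description of DLSS's learned model; the 1D scene, the 3-pixel window, `alpha = 0.1`, and the function names are all assumptions, and the history is not reprojected, to mimic content with missing motion vectors.

```python
def render_frame(width, pos):
    """Hypothetical 1D scene: one bright pixel at `pos` on a black background."""
    frame = [0.0] * width
    frame[pos] = 1.0
    return frame


def taa_step_clamped(history, current, alpha=0.1):
    """One temporal blend step with 3-pixel neighborhood clamping."""
    n = len(current)
    out = []
    for i in range(n):
        window = current[max(i - 1, 0):min(i + 2, n)]
        # Stale history outside the local range of the current frame is clamped away.
        h = min(max(history[i], min(window)), max(window))
        out.append((1 - alpha) * h + alpha * current[i])
    return out


if __name__ == "__main__":
    # The bright pixel moves one step right per frame, from x=0 to x=4.
    frames = [render_frame(8, pos) for pos in range(5)]
    history = list(frames[0])
    for current in frames[1:]:
        history = taa_step_clamped(history, current)
    print([round(v, 3) for v in history])
    # → [0.0, 0.0, 0.0, 0.09, 0.1, 0.0, 0.0, 0.0]
```

Without the clamp, the old position at x=0 would still hold about 0.66 after these five frames; with it, the trail shrinks to a single neighboring pixel. The catch, in line with the point about weights and heuristics, is that the same rule also discards legitimately accumulated sub-pixel detail (a thin wire, for instance) whenever it falls outside the local range, so every such heuristic trades ghosting against detail.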

Thesuffering79

Banned

> Ghosting can't be solved, it's the nature of the technology (and of all temporal AA solutions that will ever exist). If you don't understand that then you don't understand what the parameters of DLSS are. Go watch this a couple of times so you know:
>
> Moreover, even if that weren't the case, because since DLSS 2.0 the ML model is a generalised one rather than trained for each game individually that means there's even less of a chance you'd have that magic combination of getting all the weights & heuristics right.

That is 2.0. Ghosting has been solved with 2.6 and upwards.

EDIT: Sorry, 2.2 and upwards.

DLSS 2.2 Reported to Reduce Ghosting in Games (www.player.one): The new version addresses DLSS-related graphical anomalies in popular games like Cyberpunk 2077, Death Stranding, and Metro Exodus.


Sean Mirrsen

Banned

> Moreover, even if that weren't the case, because since DLSS 2.0 the ML model is a generalised one rather than trained for each game individually that means there's even less of a chance you'd have that magic combination of getting all the weights & heuristics right.

The model being generalized is why it can solve things like ghosting. Ghosting is a repeating pattern that the AI can learn to recognize and eliminate during reconstruction, by recognizing particularly spaced clusters of pixels matching specific criteria for opacity and underlying motion data. Since it no longer tries to learn on each individual game, this kind of general problem, which arises in very particular circumstances regardless of what game it's in, becomes eminently solvable.

Silent Viper

Banned

> Wow DLSS is just bitch slapping FSR!

AMD's tech is new and still not complete. It took Nvidia years to make DLSS good; it was not this good at launch.

AMD's solution just launched.