
DLSS vs. FSR Comparisons (Marvel's Avengers, Necromunda)

- Thread starter Md Ray

- Start date

Kenpachii

Member

While DLSS looks better, my opinion on DLSS vs FSR stays the same.

FSR is making DLSS far less interesting for the simple fact that everybody can use it, even on budget cards, and get the same performance jump. While the bridge wires etc. look better, you won't notice this unless you are comparing side by side. It's a minor difference at the end of the day. And the guy in the video says the same thing while he's comparing it.

I tried FSR in Anno and frankly I couldn't see a difference even at 1080p besides maybe minor things. Performance is what most people probably care about with features like these.

Nvidia also needs to figure out a system that pushes the latest DLL file into games for their DLSS solution, instead of you having to google it and swap it manually to get the best result. Maybe DLSS 3.0 will solve this. But they gotta release this sooner rather than later.
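For anyone who would rather script the manual swap than do it by hand, here is a minimal sketch. It assumes the game ships its DLSS library as `nvngx_dlss.dll` somewhere under the install folder and that you have already downloaded a newer DLL yourself. The function name and the `.bak` backup naming are hypothetical choices for illustration, not anything official from Nvidia.

```python
import shutil
from pathlib import Path

# File name DLSS 2.x games are assumed to ship with.
DLSS_DLL_NAME = "nvngx_dlss.dll"

def swap_dlss_dll(game_dir: Path, new_dll: Path) -> Path:
    """Replace the game's DLSS DLL with new_dll, keeping a one-time backup.

    Returns the path of the backup file.
    """
    matches = sorted(game_dir.rglob(DLSS_DLL_NAME))
    if not matches:
        raise FileNotFoundError(f"{DLSS_DLL_NAME} not found under {game_dir}")
    target = matches[0]
    backup = target.with_name(target.name + ".bak")
    if not backup.exists():
        # Only back up once, so the shipped DLL is never overwritten.
        shutil.copy2(target, backup)
    shutil.copy2(new_dll, target)
    return backup
```

Keeping the first backup untouched means you can always go back to the version the game shipped with if a newer DLL looks worse.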


Armorian

Banned

Ghosting can't be solved, it's the nature of the technology (and of all temporal AA solutions that will ever exist). If you don't understand that then you don't understand what the parameters of DLSS are. Go watch this a couple of times so you know:

Moreover, even if that weren't the case, because since DLSS 2.0 the ML model is a generalised one rather than trained for each game individually that means there's even less of a chance you'd have that magic combination of getting all the weights & heuristics right.

Really?

AMD tech is new and still not complete. It took Nvidia years to make DLSS good. It was not this good at launch.

AMD solution just launched.

FSR 1.0, the way it works right now, will never be close to DLSS, and it's only easy to implement in games right now. Any version with motion vectors will be as hard to implement as DLSS.

Rikkori

Member

No, it hasn't. It reduced ghosting in some games while exacerbating other artefacts, and then in other games it didn't do even that. And if we look at the latest version of it in RDR 2 it's a complete mess. So don't be so eagerly premature in your celebration of "solved".

That is 2.0. Ghosting has been solved with 2.6 and upwards.

EDIT: Sorry 2.2 and upwards.

DLSS 2.2 Reported to Reduce Ghosting in Games

The new version addresses DLSS-related graphical anomalies in popular games like Cyberpunk 2077, Death Stranding, and Metro Exodus.www.player.one

Yes, given infinite computing resources (and likely time) that's theoretically possible to at least a visually subjective lossless degree. Just like if we had infinite resources for curing cancer, fusion energy, intergalactic travel and world peace. Meanwhile, in the real world...

The model being generalized is why it can solve things like ghosting. Because ghosting is a repeating pattern that the AI can learn to recognize and eliminate during reconstruction, by recognizing particularly spaced clusters of pixels matching specific criteria for opacity and underlying motion data. Since it no longer tries to learn on each individual game, this kind of general problem that arises in very particular circumstances regardless of what game it's in, becomes eminently solvable.
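For readers wondering where ghosting comes from in the first place, here is a toy sketch of temporal accumulation on a 1-D "frame". It is an assumption-laden illustration: a plain exponential blend with no motion vectors and no history rejection, which is not how DLSS or any shipping TAA actually works. The function names and the blend factor `alpha` are made up for the example.

```python
def accumulate(history, current, alpha=0.1):
    """Blend the new frame into the history buffer (exponential moving average)."""
    return [(1 - alpha) * h + alpha * c for h, c in zip(history, current)]

def frame_with_dot(width, pos):
    """A 1-D frame: black background with one bright pixel at pos."""
    return [1.0 if x == pos else 0.0 for x in range(width)]

width = 8
history = frame_with_dot(width, 0)
for pos in range(1, 5):
    # The dot moves one pixel per frame; history is never reprojected.
    history = accumulate(history, frame_with_dot(width, pos))

# Pixels the dot has already left still carry brightness: that is the trail.
trail = [round(v, 3) for v in history]
```

After four frames the pixel at index 0 still holds about 0.66 of its original brightness even though the dot left it long ago. Real temporal methods reproject history with motion vectors and throw away stale samples, and ghosting shows up wherever that step fails, which is the part the two sides here disagree on being "solvable".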

As a side note, it might not be a great idea to base your idea of a technology solely on an Nvidia-sponsored outlet like DF.

Really?

Thesuffering79

Banned

No, it hasn't. It reduced ghosting in some games while exacerbating other artefacts, and then in other games it didn't do even that. And if we look at the latest version of it in RDR 2 it's a complete mess. So don't be so eagerly premature in your celebration of "solved".

Yes, given infinite computing resources (and likely time) that's theoretically possible to at least a visually subjective lossless degree. Just like if we had infinite resources for curing cancer, fusion energy, intergalactic travel and world peace. Meanwhile, in the real world...

As a side note, it might not be a great idea to base your idea of a technology solely on an Nvidia-sponsored outlet like DF.

That's a video of it upscaled to 1080p. DLSS 2.10 was a mess of an update. DLSS 2.6 manually used in RDR2 is a lot better.


Armorian

Banned

As a side note, it might not be a great idea to base your idea of a technology solely on an Nvidia-sponsored outlet like DF.

Hahahahaha

Skifi28

Member

If you're playing at a lower resolution, you're upscaling anyway. FSR gives better results than just letting your monitor upscale the image. It's getting silly at this point.

I'd rather just play with a lower native resolution than try and upscale, if those are the results. DLSS 2.2 is just other-worldly good at this point.

Darius87

Member

This is crazy. Somebody needs to explain how DLSS works, how can it look better than native res?

M1chl

Currently Gif and Meme Champion

People linking Hardware Unboxed. Can't wait for the time, this source is going to be banned here. Massive Radeon fanboy, no objectivity.

Also, a good thing is that FSR does not need to be used at all, because it produces quality which you can achieve cheaply by dropping res.

The good thing about FSR is that it can be implemented on consoles.

As long as I can play games running at 60fps with FSR quality, DLSS doesn't matter.

It's really amazing AMD Radeon engineering, truly groundbreaking.

Armorian

Banned

No, it hasn't. It reduced ghosting in some games while exacerbating other artefacts, and then in other games it didn't do even that. And if we look at the latest version of it in RDR 2 it's a complete mess. So don't be so eagerly premature in your celebration of "solved".

There is this one version (2.2.6?) that Nvidia put in R6 that solves almost all issues with DLSS. Why there are "newer" versions in games that work like previous DLSS builds is beyond me, but WE HAVE this one near-perfect version that can be implemented in all DLSS 2.0 games, you just need to CTRL+C.

They look the same.

—

Sent from my Nokia 3310

LOL, this is quite a common pattern for many "tech experts" here: watching comparisons/screens on lower-resolution displays and saying:


General public won’t see it ..


Werewolfgrandma

Banned

Dlss isn't nearly as good in rdr2. I think it's more the engine though.

There is this one version (2.2.6?) that Nvidia put in R6 that solves almost all issues with DLSS. Why there are "newer" versions in games that work like previous DLSS builds is beyond me, but WE HAVE this one near-perfect version that can be implemented in all DLSS 2.0 games, you just need to CTRL+C.

LOL, this is quite a common pattern for many "tech experts" here: watching comparisons/screens on lower-resolution displays and saying:

Brofist

Member

Better to go with unbiased Youtubers like Hardware Unboxed and Morons Law is Dead

Hahahahaha

Armorian

Banned

General public won’t see it ..

The general public doesn't go to threads like this; they play Nintendo Switch/Minecraft.

Dlss isn't nearly as good in rdr2. I think it's more the engine though.

It's oversharpened as fuck from what I tested in RDR2.

Thesuffering79

Banned

General public won’t see it ..

Which is the same group of people that think they can see 4k. SMH.

Armorian

Banned

It's just the first release. Avengers looks bad, but Necromunda does not, so it has potential, I guess. Hopefully being open source will make FSR improve quickly.

It depends on how well TAA is implemented in the game. In games where it's not so great, like Control, Death Stranding and Avengers, DLSS can produce better results than TAA even with a lower internal resolution. FSR works on an image that already has TAA applied, so in games where TAA is already not so good at native resolution, FSR will just make everything look worse.

Necromunda uses UE4, which has a quite good TAA implementation.

MastaKiiLA

Member

DLSS is seriously some impressive-ass shit. No 2 ways about it. The future of gaming is going to be insane because you're not going to need to render at native resolutions to get stellar image quality. More horsepower to put towards putting assets on screen, and just let DLSS sort the bodies out for you. If only either the XSX or PS5 had launched with NVidia chips in them, this generation would be crazy.

AMD will definitely want to put some work into FSR to replicate the results DLSS is delivering. I assume it will only evolve with the next iteration of AMD GPU cores. My apologies if I'm talking out of my ass. I don't follow the hardware side of things anymore, so just my opinion as an outsider.


omegasc

Member

I believe it does not need to be exactly as good or better than DLSS. If it starts producing great results and can be used more easily, better TAA will be developed with FSR in mind. New engines might factor this during development. If it gets to, say, 80% the IQ of DLSS at the same framerates, I'd say it's good enough, if that means any game can launch with FSR support on any platform without much tinkering, unlike DLSS, unless I got it wrong. (btw, I love what nvidia is doing with DLSS! Black magic stuff. I just want both to succeed!)

It depends on how well TAA is implemented in the game. In games where it's not so great, like Control, Death Stranding and Avengers, DLSS can produce better results than TAA even with a lower internal resolution. FSR works on an image that already has TAA applied, so in games where TAA is already not so good at native resolution, FSR will just make everything look worse.

Necromunda uses UE4, which has a quite good TAA implementation.

AnotherOne

Member

Yeah except FSR is software based vs DLSS which is hardware based so don't expect FSR to catch up.

The DLSS is mature, the result is spectacular after several versions.

The FSR is only at its first iteration, AMD will improve it for sure so wait and see.

michaelius

Banned

This is why you don't take AMD promises that they will deliver competing solution to something Nvidia does until it's actually delivered and can be judged on quality.

Armorian

Banned

I believe it does not need to be exactly as good or better than DLSS. If it starts producing great results and can be used more easily, better TAA will be developed with FSR in mind. New engines might factor this during development. If it gets to, say, 80% the IQ of DLSS at the same framerates, I'd say it's good enough, if that means any game can launch with FSR support on any platform without much tinkering, unlike DLSS, unless I got it wrong. (btw, I love what nvidia is doing with DLSS! Black magic stuff. I just want both to succeed!)

Yes, it's good that FSR is there: low/mid-range GPU users and people chasing the highest framerates have a much better option than just lowering the game resolution. I love using DLSS/FSR together with DSR, so the more games that offer these options, the better.

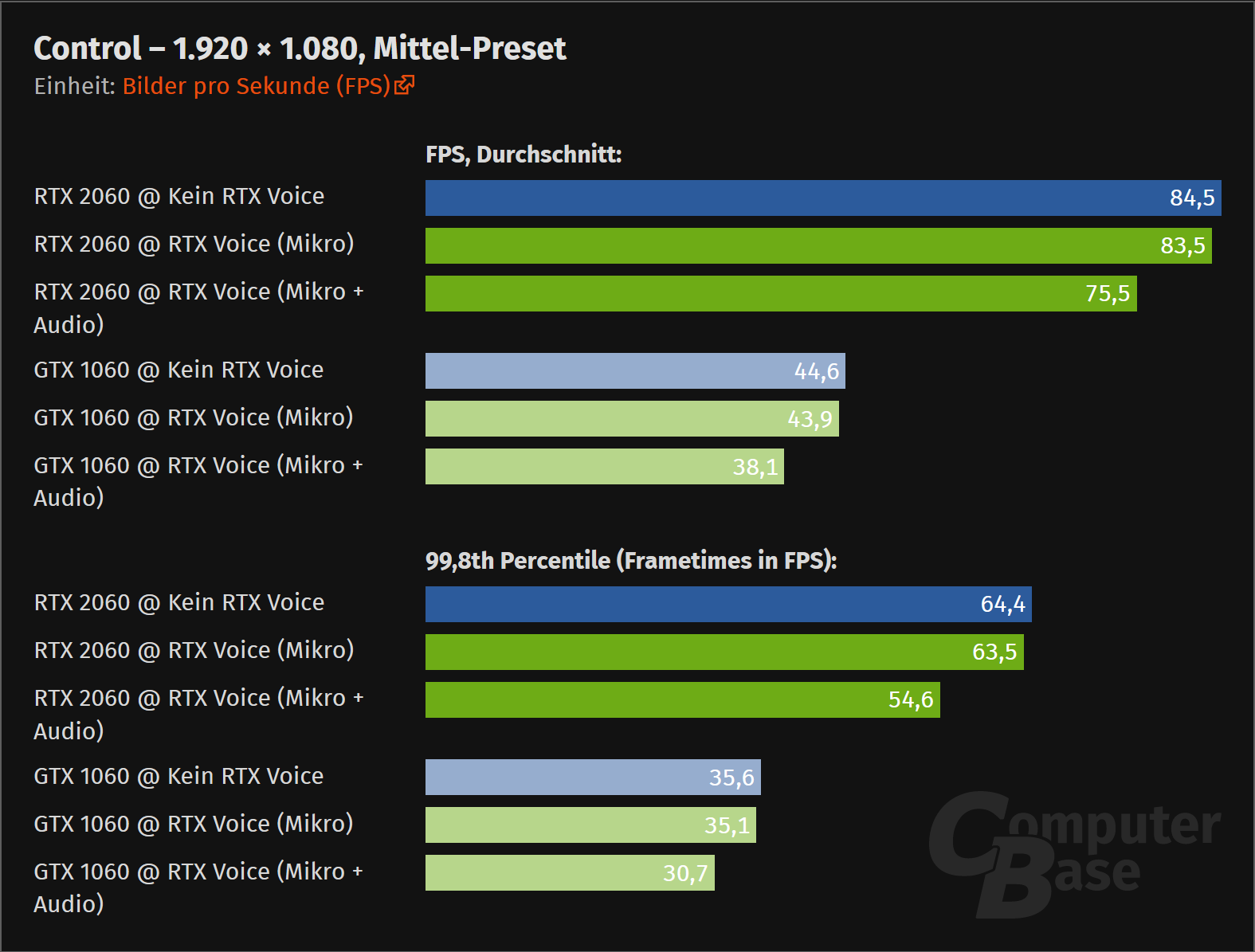

Yeah except FSR is software based vs DLSS which is hardware based so don't expect FSR to catch up.

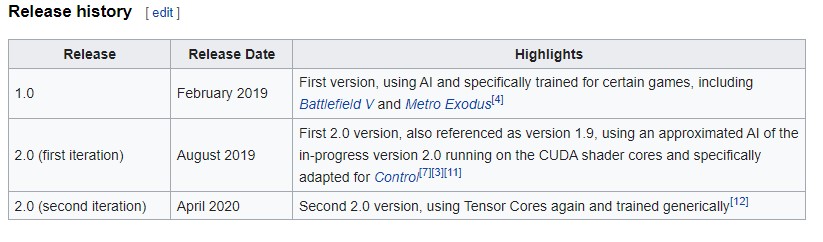

There is this weird case of DLSS 1.9

It was running on shader cores, so maybe Tensors are not exactly needed for this. Knowing nvidia this could be the case...

RTX Voice doesn't require RTX at all

Source: https://forums.guru3d.com/threads/nvidia-rtx-voice-works-without-rtx-gpu-heres-how.431781/ Quote The new nVIDIA [sic] RTX Voice works just fine without an RTX GPU and on windows 7 too, tested and working on a GTX 1080 and a Titan V, here's how to make it install... It turns out that the i...

linustechtips.com


RoadHazard

Gold Member

FSR seems no better than the reconstruction methods already used by a lot of console games, in fact it looks worse than some of them. Maybe it uses less resources, I dunno.

FukuDaruma

Member

Red Dead Redemption 2 DLSS 2.2.10.0 Benchmarks

Yesterday, Rockstar released the highly anticipated DLSS Patch for Red Dead Redemption 2 so we've decided to benchmark it.

www.dsogaming.com


"All in all, we are really disappointed by the DLSS implementation in Red Dead Redemption 2. Contrary to other games, DLSS does not bring a big performance boost in Red Dead Redemption 2. And even though it uses the latest 2.2.10.0 version, it brings a lot of aliasing at both 1080p and 1440p. Therefore, we strongly recommend avoiding it at these low resolutions. As for 4K, we can only recommend DLSS Quality (and certainly not the other modes) to those that have performance issues but do not want to lower their in-game settings. However, and if you can hit 60fps at all times, you should simply avoid using DLSS!"

Is RDR2's DLSS really that bad??


Deleted member 17706

Unconfirmed Member

Red Dead Redemption 2 DLSS 2.2.10.0 Benchmarks

Yesterday, Rockstar released the highly anticipated DLSS Patch for Red Dead Redemption 2 so we've decided to benchmark it.

www.dsogaming.com

"All in all, we are really disappointed by the DLSS implementation in Red Dead Redemption 2. Contrary to other games, DLSS does not bring a big performance boost in Red Dead Redemption 2. And even though it uses the latest 2.2.10.0 version, it brings a lot of aliasing at both 1080p and 1440p. Therefore, we strongly recommend avoiding it at these low resolutions. As for 4K, we can only recommend DLSS Quality (and certainly not the other modes) to those that have performance issues but do not want to lower their in-game settings. However, and if you can hit 60fps at all times, you should simply avoid using DLSS!"

Is RDR2's DLSS really that bad??

It's less impressive than other games, but I've been enjoying playing it at 4K in Performance mode. Looks and runs better than what I was doing earlier (AA + reduced internal rendering resolution).
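To put "4K in Performance mode" into numbers, here is a small sketch of the internal render resolution behind each preset. The per-axis scale factors are the commonly published ones for DLSS 2.x and FSR 1.0 as far as I know, so treat the table as an assumption rather than an official spec; the function and dictionary names are made up for the example.

```python
# Per-axis scale factors (output size / internal size), assumed from the
# publicly documented DLSS 2.x and FSR 1.0 quality presets.
SCALE = {
    "dlss": {"quality": 1.5, "balanced": 1.72, "performance": 2.0,
             "ultra_performance": 3.0},
    "fsr1": {"ultra_quality": 1.3, "quality": 1.5, "balanced": 1.7,
             "performance": 2.0},
}

def internal_resolution(out_w, out_h, upscaler, mode):
    """Return the (width, height) the game renders at before upscaling."""
    s = SCALE[upscaler][mode]
    return round(out_w / s), round(out_h / s)

def pixel_share(upscaler, mode):
    """Fraction of output pixels that are actually rendered."""
    return 1.0 / SCALE[upscaler][mode] ** 2

perf_4k = internal_resolution(3840, 2160, "dlss", "performance")
```

Under those assumptions, 4K Performance renders at 1920x1080, a quarter of the output pixels, which is why the frame rate gain can be large even when the upscaling pass itself is not free.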

Werewolfgrandma

Banned

I've read it's a RAGE engine issue with transparency, so it can't be "fixed" by DLSS.

Red Dead Redemption 2 DLSS 2.2.10.0 Benchmarks

Yesterday, Rockstar released the highly anticipated DLSS Patch for Red Dead Redemption 2 so we've decided to benchmark it.

www.dsogaming.com

"All in all, we are really disappointed by the DLSS implementation in Red Dead Redemption 2. Contrary to other games, DLSS does not bring a big performance boost in Red Dead Redemption 2. And even though it uses the latest 2.2.10.0 version, it brings a lot of aliasing at both 1080p and 1440p. Therefore, we strongly recommend avoiding it at these low resolutions. As for 4K, we can only recommend DLSS Quality (and certainly not the other modes) to those that have performance issues but do not want to lower their in-game settings. However, and if you can hit 60fps at all times, you should simply avoid using DLSS!"

Is RDR2's DLSS really that bad??

FukuDaruma

Member

It's less impressive than other games, but I've been enjoying playing it at 4K in Performance mode. Looks and runs better than what I was doing earlier (AA + reduced internal rendering resolution).

The hair is what shocked me the most. It's literally the total opposite example of the Avengers quality:

Left: TAA Right: DLSS


Deleted member 17706

Unconfirmed Member

The hair is what shocked me the most. It's literally the total opposite example of the Avengers quality:

Left: TAA Right: DLSS

I haven't noticed that, but I am playing with Performance level DLSS. It's almost as if Rockstar counted on the TAA muddiness to sort of blend the hair strands together.

Rikkori

Member

DLSS destroys a lot more details than FSR & Native, but the fanboy brigade is never eager to point those out, instead only showing wires. Don't forget that FSR is not a form of AA unlike DLSS, which means you can pick and choose your poison. Like I said, there's pros & cons to all these methods.

Or a cheap method that doesn’t require specialized hardware.

Deep Learning looks better than sharpening filter, shocking.

FSR is a lazy effort by AMD

StreetsofBeige

Gold Member

Based on those pics, the only difference I can notice is the bridge cables.

DLSS being better than native is crazy to me.

Have they fixed all artifacts/bugs yet?

But yeah, sounds crazy that DLSS is better than native, when you'd think native should be the best.

That makes no sense. Lowering resolution is not going to improve picture quality. The whole point is to increase resolution and still maintain high FPS.

People linking Hardware Unboxed. Can't wait for the time, this source is going to be banned here. Massive Radeon fanboy, no objectivity.

Also, a good thing is that FSR does not need to be used at all, because it produces quality which you can achieve cheaply by dropping res.

It's really amazing AMD Radeon engineering, truly groundbreaking.

StreetsofBeige

Gold Member

DLSS destroys a lot more details than FSR & Native, but the fanboy brigade is never eager to point those out, instead only showing wires. Don't forget that FSR is not a form of AA unlike DLSS, which means you can pick and choose your poison. Like I said, there's pros & cons to all these methods.

Good post.

Whereas the bridge images show DLSS being better, your pics show DLSS looking like shit. What are those black boxes? Speakers? DLSS is a flat surface! lol

M1chl

Currently Gif and Meme Champion

...and since when does FSR improve picture quality?

That makes no sense. Lowering resolution is not going to improve picture quality. The whole point is to increase resolution and still maintain high FPS.

PaintTinJr

Member

But that is scientifically worse - because the reference image is what they are supposed to exactly look like.

It's even bitch slapping native rendering.

From the female face picture set, FSR 1.0 is a much better quality reproduction of the native image. I prefer the DLSS 2.2 image, but if you changed the context of the things it is altering compared to native, then important detail would be getting lost, by comparison to FSR 1.0.

Fahdis

Member

You guys can keep your image reconstruction. I've never had a great experience with it in any form and will continue to use native even if I have to knock down some settings.

Purist. Time to take you down in a witchhunt! How dare you not gag new tech down your throat heathen?!!!

Werewolfgrandma

Banned

I find it kind of silly comparisons use stills when games usually are played with lots of motion

Sean Mirrsen

Banned

Yes, they're speakers.

Good post.

Whereas the bridge images show DLSS being better, your pics show DLSS looking like shit. What are those black boxes? Speakers? DLSS is a flat surface! lol

The native render and the FSR are actually the result of artifacting. Real speakers with mesh or cloth grilles usually look like the DLSS render, unless the grille is really sparse, really thin, or has light shining directly into it.

I understand if you've never seen such, the 'open' style of speakers are pretty popular nowadays, but they exist, and they do look like that.

edit: actually looking closer, the mesh on those speakers is indeed pretty sparse, but my overall point still stands. To me the image presented by DLSS looks neater and more realistic than the native-render one. However, it's possible that that result is unintended, so I'll admit it could be an issue that should be fixed.


Sean Mirrsen

Banned

Actually, no. The "reference image" in this case is not the native render. The "reference" in this case does not exist, it's a theoretical image rendered at infinite quality from the 3D scene presented by the game. DLSS works towards that theoretical "reference" using machine-learned 'guesses' to plug gaps and build in the native render, while FSR just works to not lose details from the native render.

But that is scientifically worse - because the reference image is what they are supposed to exactly look like.

From the female face picture set, FSR 1.0 is a much better quality reproduction of the native image. I prefer the DLSS 2.2 image, but if you changed the context of the things it is altering compared to native, then important detail would be getting lost, by comparison to FSR 1.0.


Werewolfgrandma

Banned

DLSS destroys a lot more details than FSR & Native, but the fanboy brigade is never eager to point those out, instead only showing wires. Don't forget that FSR is not a form of AA unlike DLSS, which means you can pick and choose your poison. Like I said, there's pros & cons to all these methods.

Those speakers look like hot trash in native or FSR and actually look like a speaker grille in DLSS. DLSS is way, way, way ahead in that speaker pic, like it's no contest at all. In native and FSR the grille is just sparse and hot trash.

Good post.

Whereas the bridge images show DLSS being better, your pics show DLSS looking like shit. What are those black boxes? Speakers? DLSS is a flat surface! lol

The words by the chips look better in dlss as well but the chips look bad in all pics. The Crystal pic with the ring around it, the ring looks much better with dlss but I don't notice much difference elsewhere.

Fsr is doing a fine job though, but it doesn't seem useful if dlss is also an option.


Hypno285

Banned

You know what? 4k and ray tracing are just a meme. Let it go. DLSS and FSR are just figments of your imagination.

DLSS looks really good, better than native. Hope FSR gets there, but I heard both look bad in motion.

Those DLSS pics remind me of anti-aliasing methods like SGSSAA and OGSSAA with the way it improves the hair.

PaintTinJr

Member

I just checked nvidia's blurb, and it does seem like they are doing more than their original DLSS - not least by no longer training the algorithm per game, and now using the same domain problem neural network to do all the inference for all games.

Actually, no. The "reference image" in this case is not the native render. The "reference" in this case does not exist, it's a theoretical image rendered at infinite quality from the 3D scene presented by the game. DLSS works towards that theoretical "reference" using machine-learned 'guesses' to plug gaps and build in the native render, while FSR just works to not lose details from the native render.

However, their marketing wording still doesn't "scientifically" meet the requirement for "better than native 4K" IMHO, even if the results are more comparable to, say, 8K supersampled or higher, with the additional sub-scene fx carrying less noise. The main reason is that the term "better" requires a point of reference. Going by the image comparison on their own DLSS webpage (native with DLSS off, then with it on), the DLSS-on image fails to pixel-match the outlines of the assets in the scene, with the gun and hand showing the displacement when you flip between the two renders.

While that would be a non-issue if this were DLSS-ing non-interactive entertainment, an incoherent representation of outline geometry compared to native res, even by a pixel's distance, could be the difference between success and failure in a footy game, a 3D fighting game, a driving game, or a first-person shooter (as examples), because choices get made from incoherent visual feedback of the game state/problem.

DLSS looks to do the job, and looks great, but making marketing claims to be better than native - because the higher frequency fx are superior, yet the low frequency scene composition is off by pixels - just feels like marketing to me. If there is a whitepaper on the latest DLSS to be read, I would certainly love to read more about their technique to assess the claim of "better".
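To make the "pixel match against native" idea concrete, here is a minimal sketch of one way to score it, using mean squared error and PSNR on tiny grayscale images stored as lists of rows. This is only one possible metric picked for illustration; the two sample images are invented, and nothing here reflects how Nvidia or any reviewer actually evaluates DLSS.

```python
import math

def mse(a, b):
    """Mean squared error between two equally sized grayscale images."""
    diffs = [(pa - pb) ** 2 for ra, rb in zip(a, b) for pa, pb in zip(ra, rb)]
    return sum(diffs) / len(diffs)

def psnr(a, b, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite when the images match exactly."""
    err = mse(a, b)
    return math.inf if err == 0 else 10 * math.log10(peak ** 2 / err)

# A hard vertical edge, and the same edge displaced one pixel to the left.
native = [[0, 0, 255, 255],
          [0, 0, 255, 255]]
shifted = [[0, 255, 255, 255],
           [0, 255, 255, 255]]

score = psnr(native, shifted)
```

Both toy images contain an equally clean edge, yet the one-pixel displacement alone drags the score down to roughly 6 dB. A metric like this punishes outline shifts heavily and says nothing about noise or fine detail, so which render counts as "better" depends a lot on the metric you pick.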

PaintTinJr

Member

Where previously they were doing per-game DLSS, the one-neural-net-fits-all situation seems to push DLSS into more extreme results, at best and at worst, going by the speaker and RDR2 DLSS advice in another comment.

Yes they're speakers.

The native render and the FSR are actually the result of artifacting. Real speakers with mesh or cloth grilles usually look like the DLSS render, unless the grille is really sparse, really thin, or has light shining directly into it.

I understand if you've never seen such, the 'open' style of speakers are pretty popular nowadays, but they exist, and they do look like that.

edit: actually looking closer, the mesh on those speakers is indeed pretty sparse, but my overall point still stands. To me the image presented by DLSS looks neater and more realistic than the native-render one. However, it's possible that that result is unintended, so I'll admit it could be an issue that should be fixed.

Guessing (inference) with one model might be harder to tune for specific problems, because as you compensate for one scenario - biasing the algorithm - that overfit might then work against other newer games that the model hasn't seen, yet. I would have thought that the per game DLSS training would be superior in the long-run, mostly because you want it to bias to each game's data AFAIK - because that will yield the more accurate result to a super-res native output.

Sean Mirrsen

Banned

It also requires a measurement metric. Compared to the native render as a point of reference, and using the things you listed - higher fidelity resembling supersampled rendering and reduced noise in details - as the metric, the DLSS render is better.

the term "better" requires a point of reference

You've chosen something else as the metric, and while I'd agree that for some cases it's important that the result pixel-match the native render, I also struggle to imagine the exact cases where it would be relevant considering the supposed deviation is in terms of pixels, on a 4K image.

And I also can't quite find what you're referring to in regards to examples, can you point me to the specific comparison? Seeing as a lot of comparisons are from normal gameplay, and recording both DLSS on and DLSS off footage is functionally impossible, it's not out of the question for the comparison shots to be slightly different in timing and scene composition.

Polygonal_Sprite

Gold Member

I'm so glad I'm at the point where I can barely tell between 1440p and 4k never mind this sort of stuff lol.