PS5 uses boost clocks, which are variable. You can't just compare them this way.

Are you still spreading the MS FUD?

There’s no better explanation than the one Mark Cerny already gave in his Road to PS5 talk and later clarified in his Digital Foundry interview.

It’s tied to power usage, not temperature. It’s designed so that for the most part the clocks stay at their highest frequencies, regardless of whether the console is in a TV cabinet or somewhere cold.

It’s designed to be deterministic: the same workload produces the same clocks on every console. The purpose is to lower clocks when they don’t need to be so high, which cuts power usage and keeps the fans quiet. If a GPU is expected to deliver a frame every 16.7 ms (60 FPS) and it has already finished its work in 8 ms, there’s no point in it sitting idle at 2.23 GHz, drawing power and generating heat. If it can intelligently drop the clocks so that it finishes the frame just before the 16.7 ms deadline, you get the same 60 FPS game with the same graphical detail, but with much less fan noise.
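To put numbers on that frame-budget argument, here's a minimal Python sketch. It assumes frame time scales inversely with GPU clock (purely GPU-bound work, no memory or CPU bottleneck), which real games don't do cleanly, and the function names are hypothetical. It models the idealized argument above, not Sony's actual algorithm.

```python
# Hypothetical illustration of the frame-budget argument.
# Assumption: GPU frame time is inversely proportional to clock
# (purely GPU-bound work). Real GPUs don't scale this cleanly.

TARGET_FPS = 60
MAX_CLOCK_GHZ = 2.23  # PS5 GPU peak clock quoted in the post


def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds."""
    return 1000.0 / fps


def min_clock_for_budget(work_ms_at_max: float, fps: float = TARGET_FPS,
                         max_clock_ghz: float = MAX_CLOCK_GHZ) -> float:
    """Lowest clock (GHz) that still finishes the frame within budget,
    given how long the work takes at the peak clock."""
    budget = frame_budget_ms(fps)
    if work_ms_at_max >= budget:
        return max_clock_ghz  # already at or over budget: stay at the peak clock
    # Stretch the work to fill the budget by lowering the clock proportionally.
    return max_clock_ghz * work_ms_at_max / budget


if __name__ == "__main__":
    print(f"budget: {frame_budget_ms(TARGET_FPS):.2f} ms")
    print(f"clock needed for an 8 ms frame: {min_clock_for_budget(8.0):.2f} GHz")
```

With the post's numbers it reports a 16.67 ms budget and roughly 1.07 GHz for an 8 ms frame, so in this idealized model the GPU could run at under half its peak clock and still hit 60 FPS.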

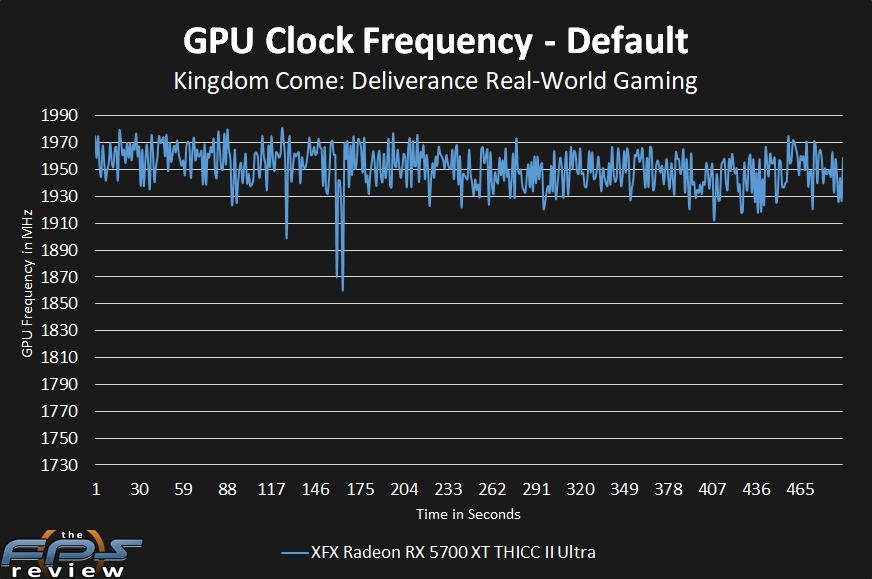

Anyone with a gaming PC will know that GPU utilisation is rarely at 100%.

It typically takes burn tests and crazy benchmark software to get that.

Cerny seemed to suggest that you’d need quite a synthetic test to load up both the CPU and GPU enough to make them downclock for power reasons, and that this wouldn’t show up in any normal game.

He said that same synthetic test would simply cause a PS4 to overheat and shut down.

And even then, dropping power consumption by 10% only drops core clocks by a “few” percent. That makes sense if you’re used to overclocking modern GPUs: you need to crank up the power to get even a tiny amount of extra clock, and raising an already jacked-up GPU clock by a “few” percent barely makes a difference to performance anyway.
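The “few percent” figure lines up with a common rule of thumb: dynamic power is roughly proportional to V²·f, and near the top of the curve voltage has to rise along with frequency, so power grows roughly with the cube of the clock. Here's a quick Python sketch under that assumption. The cube exponent is an approximation, not a published PS5 figure.

```python
# Rule-of-thumb model, not Sony's actual power curve.
# Dynamic power is roughly P ~ C * V^2 * f. Near the top of the voltage/frequency
# curve, voltage must rise roughly in step with frequency, so P grows ~ f^3.

EXPONENT = 3.0  # assumed P ~ f^EXPONENT; real silicon varies


def clock_scale_for_power(power_scale: float, exponent: float = EXPONENT) -> float:
    """Relative clock when power is multiplied by power_scale."""
    return power_scale ** (1.0 / exponent)


def power_scale_for_clock(clock_scale: float, exponent: float = EXPONENT) -> float:
    """Relative power needed when clock is multiplied by clock_scale."""
    return clock_scale ** exponent


if __name__ == "__main__":
    drop = 1.0 - clock_scale_for_power(0.90)
    print(f"10% less power -> about {drop:.1%} lower clock")
    extra = power_scale_for_clock(1.05) - 1.0
    print(f"5% more clock -> about {extra:.1%} more power")
```

Under this model, 10% less power costs only about 3.5% of clock, while a 5% overclock needs about 15.8% more power, which matches the overclocking experience described above.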

The PS5 can change power draw multiple times per frame.
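Here's a toy sketch of a governor that picks the clock from a fixed power budget and a workload activity estimate rather than from temperature, and re-decides several times within one frame. All constants, the activity metric and the cube-law model are hypothetical and purely illustrative; this is not Sony's implementation.

```python
# Toy power-budget governor. Everything here is hypothetical: the constants,
# the activity metric and the cube-law power model are illustrative only.
# Clock depends only on workload activity and a fixed budget (no temperature
# input), so identical workloads give identical clocks on every unit.

MAX_CLOCK_GHZ = 2.23
POWER_BUDGET_W = 100.0     # hypothetical fixed budget
FULL_LOAD_POWER_W = 120.0  # hypothetical draw at max clock and 100% activity


def modeled_power(activity: float, clock_ghz: float) -> float:
    """Estimated power for an activity level (0..1) at a clock, assuming P ~ f^3."""
    return activity * FULL_LOAD_POWER_W * (clock_ghz / MAX_CLOCK_GHZ) ** 3


def choose_clock(activity: float) -> float:
    """Highest clock whose modeled power fits within the budget."""
    power_at_max = modeled_power(activity, MAX_CLOCK_GHZ)
    if power_at_max <= POWER_BUDGET_W:
        return MAX_CLOCK_GHZ
    # Invert the cube law: scale the clock so modeled power equals the budget.
    return MAX_CLOCK_GHZ * (POWER_BUDGET_W / power_at_max) ** (1.0 / 3.0)


def clocks_for_frame(activity_per_slice: list[float]) -> list[float]:
    """Pick a clock for each slice of a frame (several decisions per frame)."""
    return [choose_clock(a) for a in activity_per_slice]


if __name__ == "__main__":
    # Four slices of one frame: typical load plus one synthetic-style spike.
    frame = [0.6, 0.7, 1.0, 0.5]
    for activity, clock in zip(frame, clocks_for_frame(frame)):
        print(f"activity {activity:.0%} -> {clock:.2f} GHz")
```

With these made-up numbers only the 100% activity slice gets pulled down (to about 2.10 GHz); the others stay at 2.23 GHz. Because there's no temperature input, the same activity trace yields the same clocks whether the console is in a TV cabinet or somewhere cold.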