I'm guessing the Titan II might have 10 GB with 1 SMM disabled, and the cheaper GTX 1080 would be an 8 GB card with weaker double-precision (DP) performance and 2 SMMs disabled.

Then a refresh in early 2016, on either the same 28nm process (lol) or 20nm: a Titan II Black with no SMMs disabled and a GTX 1080 Ti with only one SMM disabled.

In late 2016 come the first cards in the Pascal family (a mid-sized GPx04?) on 16nm FinFET. In 2017 we get 'Big Pascal', while the professional/server/supercomputer markets get the Volta architecture (for the Tesla and maybe Quadro lines), which we won't see on the consumer/gaming side until 2018.

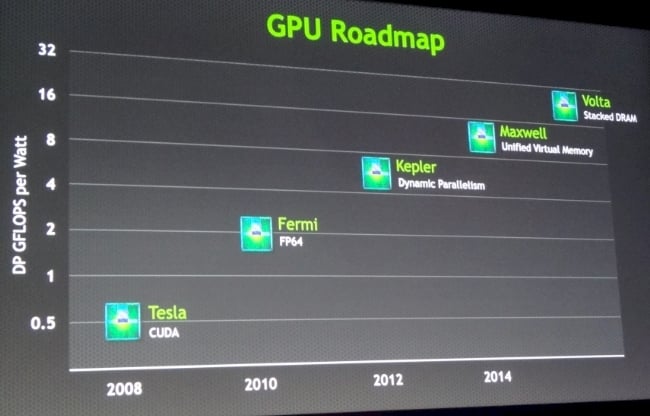

Remember, Volta was originally meant to follow Maxwell, but last year Nvidia announced Pascal in between.

It seems Volta was too ambitious for 2016, with both 1 TB/sec 3D-stacked DRAM and NVLink. So they moved NVLink (and perhaps other features) to a later date, and since the first generation of cards with 3D-stacked RAM (HBM) will have high bandwidth, but not 1 TB/sec high, we get Pascal.

2013 roadmap

2014 roadmap

Volta might be to Pascal what Maxwell is to Kepler.

I know someone will come along and either pick apart my theory or at least correct or expand on it, since I know absolutely nothing.