Agnostic2020

Member

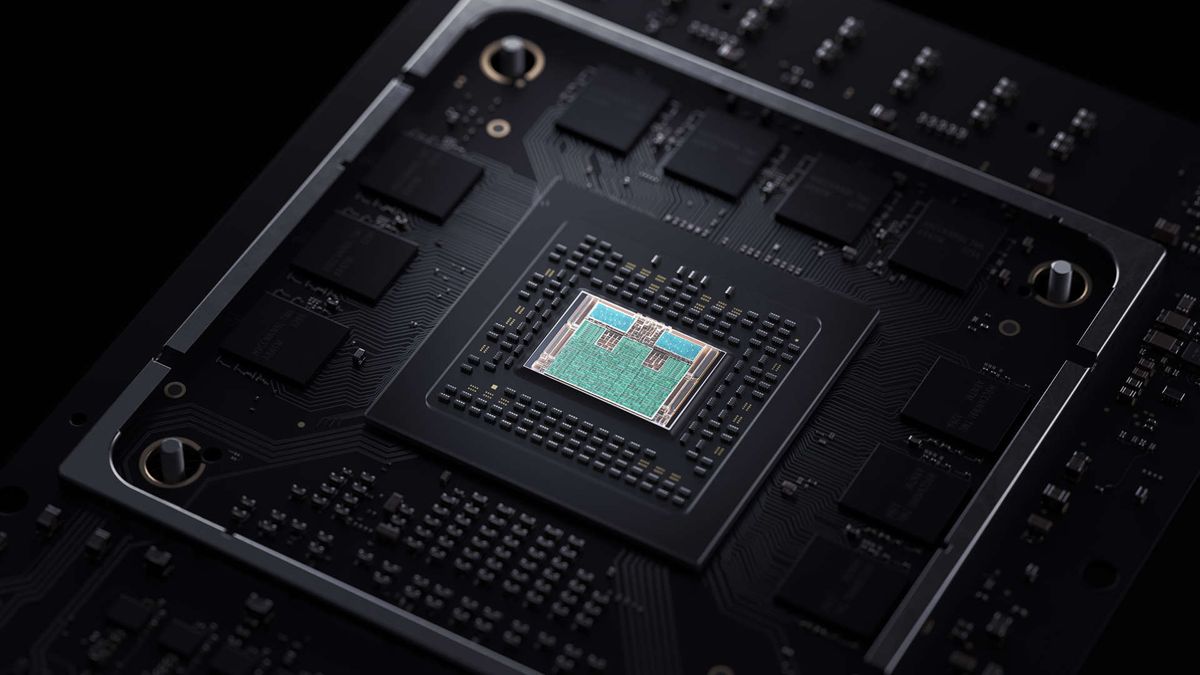

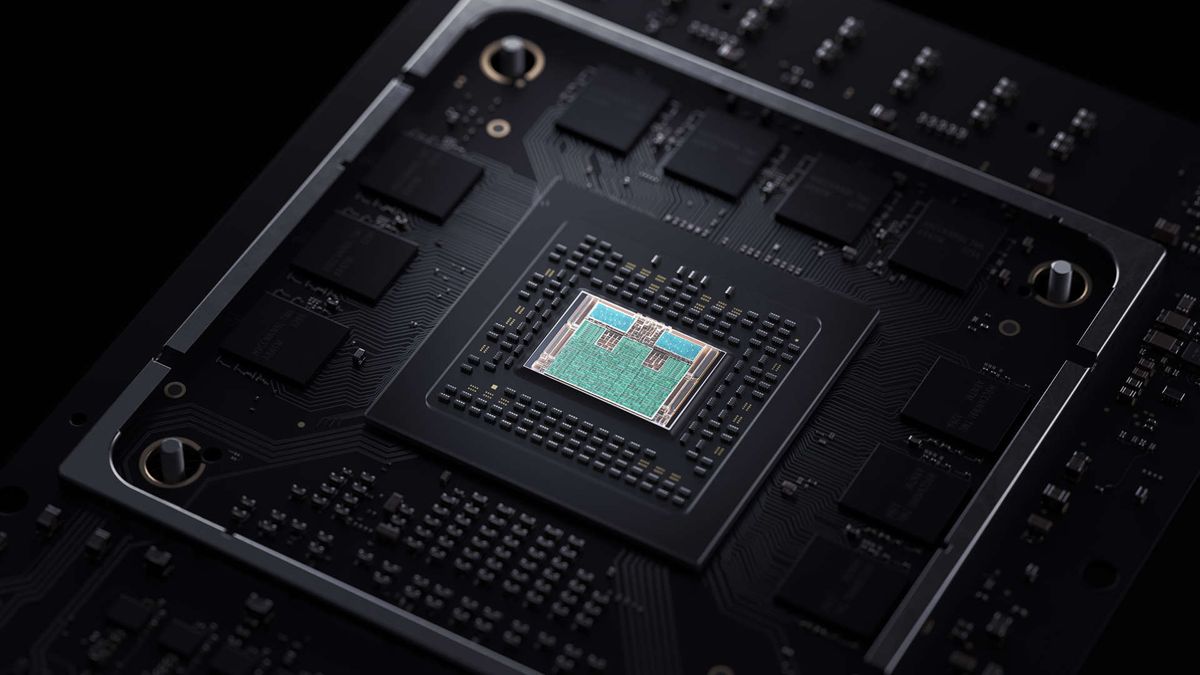

Mark Grossman, principal architect at Microsoft, has detailed the graphics silicon inside the Series X and just what role ray tracing has in it.

The short answer seems to be: not much.

While Microsoft has indeed worked with AMD to ensure there is some level of ray tracing support inside the Xbox Series X GPU, Grossman doesn't seem to be that enthused about how readily it will be utilised.

"We do support DirectX Raytracing acceleration, for the ultimate in realism™, but in this generation developers still want to use traditional rendering techniques, developed over decades, without a performance penalty," says Grossman sadly. "They can apply ray tracing selectively, where materials and environments demand, so we wanted a good balance of die resources dedicated to the two techniques."

www.pcgamer.com


I mean, it was kind of a given that ray tracing would be limited in these machines.

As long as we get some RT reflections and global illumination, we should be good.

Xbox architect on ray tracing: 'developers still want to use traditional rendering techniques without a performance penalty'

The Xbox Series X still has dedicated ray tracing hardware anyways...
