Anyway, nice video, NXGamer, enjoyed it.

People are still pushing this 52 CU bottleneck idiocy?

There is no indication that the I/O in the PS5 does better than the I/O in the Xbox Series X in the Matrix demo. That's just tinfoil-hat nonsense. You can't diagnose the hardware on those boxes, and without information from Tim himself, or a demonstration that goes into deeper detail from Cerny or the Xbox guy, we don't know what is going on. It's funny how they are always silent when real data is asked for.

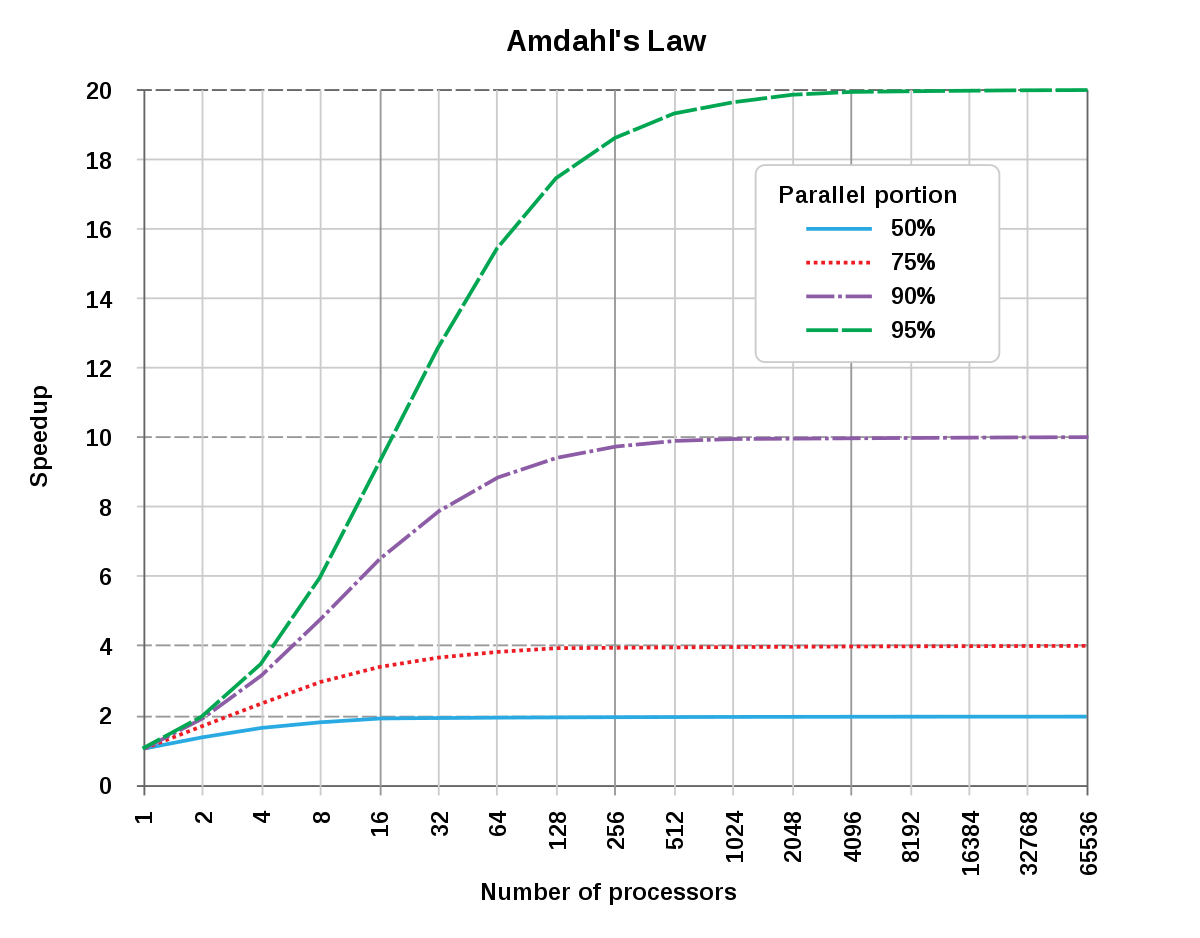

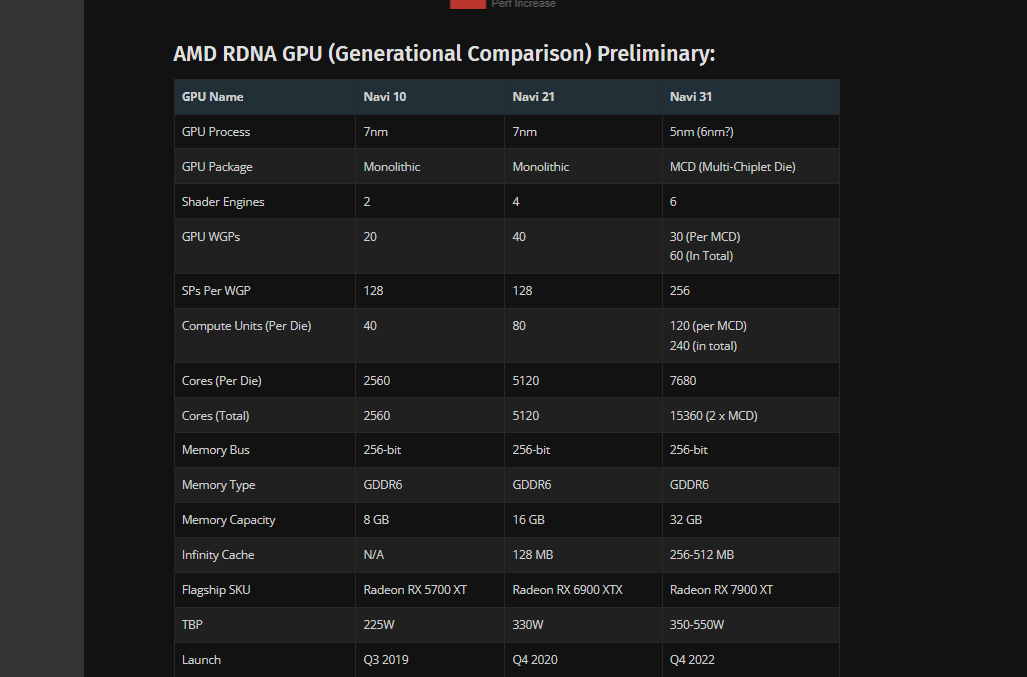

You keep saying RDNA2 likes high clocks and that CUs are bottlenecked otherwise, but AMD doesn't agree with you.

Here are their PC GPUs.

By your logic the 6900 XT should pack 36 CUs at best. Clearly AMD doesn't agree with you there, because they go as high as 80.

6600 XT has 32 CUs at 2600 MHz

6700 XT has 40 CUs at 2600 MHz

6800 has 60 CUs at 2100 MHz

6800 XT has 72 CUs at 2250 MHz

6900 XT has 80 CUs at 2250 MHz

Xbox sits at 52 CUs at 1825 MHz

PS5 sits at 36 CUs at 2230 MHz
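To put rough numbers on that list, here is a quick sketch of theoretical FP32 throughput. It assumes the usual RDNA2 figures of 64 shader lanes per CU and 2 FLOPs per lane per clock (one fused multiply-add), and it uses the rounded clocks from the list above, so treat the output as ballpark paper numbers, not measured performance. The function name `tflops` is just made up for this example.

```python
# Theoretical FP32 throughput, paper numbers only.
# Assumptions: 64 shader lanes per CU, 2 FLOPs per lane per clock (one FMA).
SHADERS_PER_CU = 64
FLOPS_PER_SHADER_PER_CLOCK = 2


def tflops(cus: int, clock_mhz: float) -> float:
    """Return theoretical TFLOPS for a given CU count and clock in MHz."""
    flops = cus * SHADERS_PER_CU * FLOPS_PER_SHADER_PER_CLOCK * clock_mhz * 1e6
    return flops / 1e12


# CU counts and (rounded) clocks as listed in the post.
gpus = {
    "6600 XT": (32, 2600),
    "6700 XT": (40, 2600),
    "6800": (60, 2100),
    "6800 XT": (72, 2250),
    "6900 XT": (80, 2250),
    "Xbox Series X": (52, 1825),
    "PS5": (36, 2230),
}

for name, (cus, mhz) in gpus.items():
    print(f"{name:14s} {cus:3d} CUs @ {mhz} MHz -> {tflops(cus, mhz):5.2f} TFLOPS")

# Paper advantage of the Xbox Series X over the PS5.
ratio = tflops(52, 1825) / tflops(36, 2230)
print(f"Xbox Series X vs PS5: {ratio:.2f}x")
```

On paper that comes out to roughly 12.15 vs 10.28 TFLOPS, which is where the ~18% figure comes from.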

It gets even more fun

Let's not pretend 52 CUs are hard to feed at 1825 MHz.

The 6700 XT not using 52 CUs doesn't mean anything. AMD makes cards to compete with Nvidia and picks targets for that. The 6700 XT is mostly going to be used as a 1080p/1440p card without RT, so it's probably better to push higher clocks and fewer CUs, which favors the card in benchmarks against Nvidia (Hardware Unboxed). Both PS5 and Xbox are built for high resolutions and some form of RT, which will favor higher CU counts over fewer, faster ones, unless your software is still built around last-gen solutions.

This is why you see barely a difference between a 3080 and a 6800 XT in some titles, but in other titles like Control / Cyberpunk the Radeon falls off pretty hard.

Now at the end of the day, what does the 18% or whatever advantage of the Xbox Series X GPU bring? Minor improvements, if devs even bother with it, because there is no unlocked framerate on consoles, so anything not 60 will be locked to 30. The only difference you will see is when performance tanks below 30. But devs should never allow that to happen anyway, because then they failed at optimizing for those boxes.

Epic, the maker of the engine and the demo, built it for the PS5; the port was done by a dev group from Microsoft. It's safe to assume it plays to the strengths of the PS5 over the Xbox Series X because it's straight up designed for it. And optimizing a tech demo, on an engine with features that are not done yet, with limited time, is probably not going to yield great results.

To make it easier to understand.

A good example:

A 6800 XT can perform like a 3080 (Borderlands 3), it can outperform a 3080 (AC Valhalla), and it can be far worse than a 3080 (Control). So what is better, a 6800 XT or a 3080?

Depends on what the game is made for, what optimization took place, what solutions it pushes, and what targets were set.

Matrix demo:

Low resolution (low CU usage), built for PS5 (performance targets, which means staying within a 36 CU solution).

What happens when you only allocate 36 CUs in a game and one box has higher clocks? You get more performance on the higher-clocked box.
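A toy model of that point, assuming throughput simply scales with "CUs actually kept busy × clock". Real GPUs don't scale this cleanly, and `relative_throughput` plus the idea of a fixed "CUs used by the workload" number are made up for illustration only.

```python
def relative_throughput(total_cus: int, clock_mhz: int, cus_used_by_workload: int) -> int:
    """Toy metric: busy CUs times clock. Idle CUs contribute nothing."""
    busy_cus = min(total_cus, cus_used_by_workload)
    return busy_cus * clock_mhz


# Workload tuned around a 36 CU target: the extra Xbox CUs sit idle.
ps5_36 = relative_throughput(36, 2230, cus_used_by_workload=36)
xsx_36 = relative_throughput(52, 1825, cus_used_by_workload=36)
print(f"36 CU workload: PS5/XSX = {ps5_36 / xsx_36:.2f}")

# Workload that scales out to 52 CUs: the wider GPU pulls ahead.
ps5_52 = relative_throughput(36, 2230, cus_used_by_workload=52)
xsx_52 = relative_throughput(52, 1825, cus_used_by_workload=52)
print(f"52 CU workload: XSX/PS5 = {xsx_52 / ps5_52:.2f}")
```

In this simplified model the higher-clocked box wins whenever the workload stays within 36 CUs, and the wider box wins once the workload can fill all 52.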

But what happens when you get more performance, but the 30 fps lock is there anyway so you can't really see it? The performance differences disappear. But luckily the demo didn't stay at 30 fps, did it? It dropped way below 30. Isn't that interesting, when games in general always show a steady 30 fps lock? I wonder why that is. How could a company like Epic not hold a stable 30 fps? Why such a low resolution? Was it not built specifically for the PS5? So why not just choose 4K as the resolution and a rock solid 30 fps, with framerates going wild at ~45?

What happens if you focus the demo on 4K resolution and a 52 CU solution at 30 fps on an Xbox Series X? Would the PS5 perform worse? No, because the fps lock would be a stable 30 on both, and the 50 vs 35 fps (random numbers) won't be showcased. See how that works?
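The frame cap argument in a few lines, using the same random numbers as above. `displayed_fps` is a hypothetical helper and the model ignores frame pacing and vsync details; it only shows that a cap hides any headroom above it and exposes only the drops below it.

```python
def displayed_fps(uncapped_fps: float, cap: float = 30) -> float:
    """What you actually see on screen with a hard frame rate cap."""
    return min(uncapped_fps, cap)


# Random numbers from the post: 50 fps vs 35 fps uncapped.
print(displayed_fps(50))  # faster box, capped
print(displayed_fps(35))  # slower box, capped

# Only when a box falls under the cap does the gap become visible.
print(displayed_fps(24))
```

Both boxes show 30 as long as they stay above the cap, so the difference only shows up in the scenes where one of them dips below it.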

But this is just the GPU. Tons of other things, from simple optimization issues to lack of time, could create problems. It's not always just hardware.

The Matrix demo is made to show off Epic's engine and to show it can be used on current-gen consoles in a tech demo. It does nothing other than that. Performance comparisons between the two boxes aren't very useful in a tech demo like this. It's interesting to look at for science, but that's about it. This is also why DF didn't burn their hands on comparisons.

It's so hard that practically all RDNA2 and RDNA3 cards, besides a select few, have more than 36 CUs. Guess AMD, who made the PS5 GPU architecture, has no clue what they are doing.