ZywyPL

Banned

Ssex

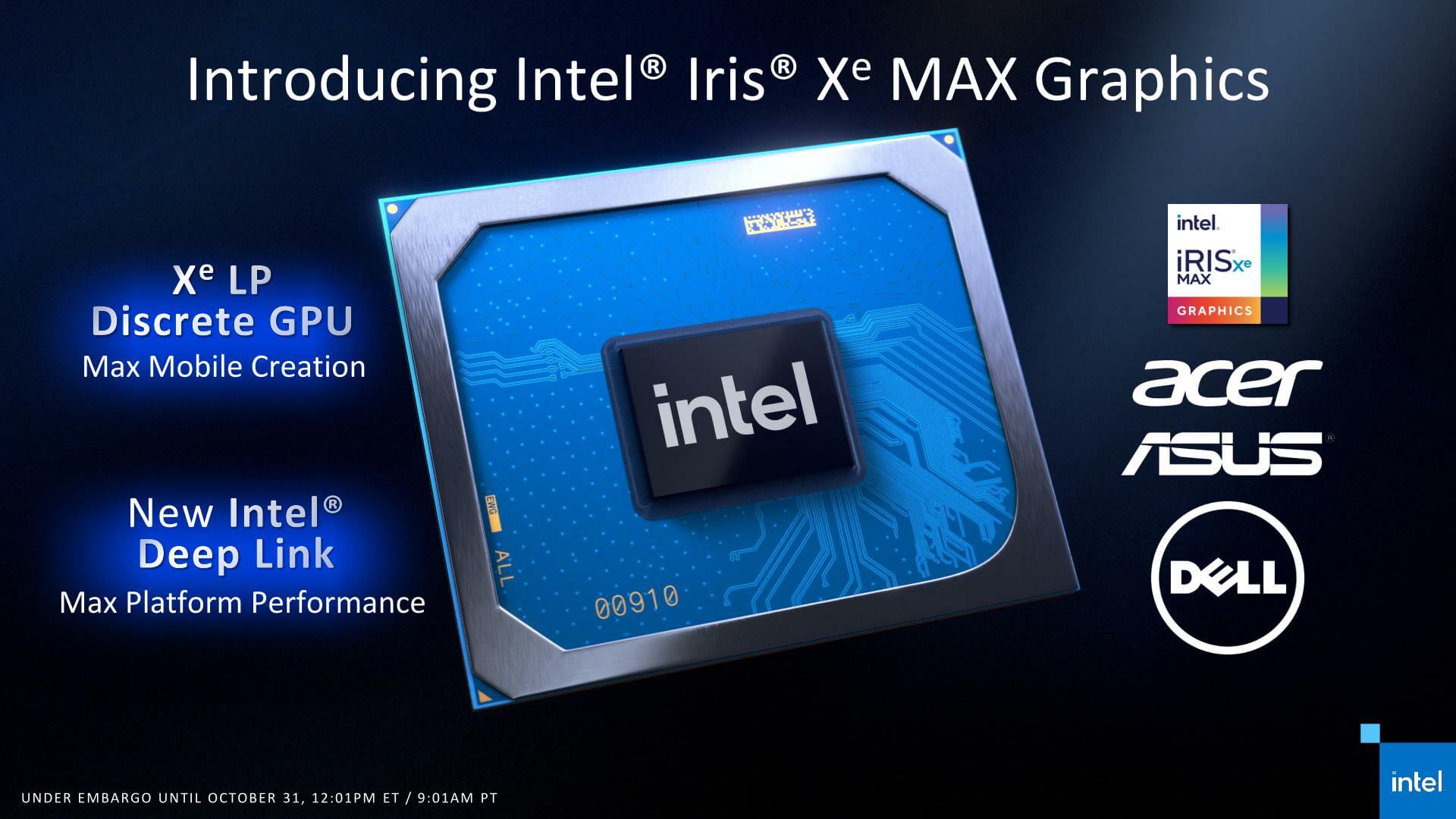

Intel unveils ARC graphics roadmap, Xe-Cores and XeSS super resolution technology - VideoCardz.com

Intel discloses details on its first discrete gaming series. At Intel 2021 Architecture Day, Intel confirmed some details on its new Arc GPUs, including their building blocks, architecture, and technology powering the GPUs. Xe-HPG architecture: Intel DG2 GPUs will be built using Xe-Cores, those... videocardz.com

Great, now nobody will know whether "XSS" means the Series S or Intel's upscaling tech... Anyway, I wonder if it'll have to be implemented individually in each title, like DLSS and FSR, or be available for general use at the driver level. IMO whoever gets that done first will dominate the market.
