tommib


Adorable. Thinking of getting one of those, but my new leather couch!

Series S beast level:


Ah, power consumption. I remember trolls being very concerned, saying the PS5 wouldn't be able to keep up its clocks or power and would have to downclock, even though Cerny indicated that it rarely ever happened and only by a fraction. Good concern-trolling times.

I hope this and the games have finally shut that up.

Interesting, the XSX version seems unoptimised; it should not be getting such drastic dips.

We can see here that Gears 5 is a lot more stable and uses more power: in gameplay it doesn't go below 190 W, and during battles it's around 200-210 W.

You do know that The Coalition, who made Gears 5, stepped in to help with this demo... This is just a demo in any case.

MS helped Epic optimize Unreal Engine 5, and those optimizations helped both PS5 and Xbox. We can't really compare Gears 5, as that was running on Unreal Engine 4.

And? Is that meant to render my point null or something?

Never heard of a hairy Series S, nor of any of them having cutting-edge technologies; your leather couch should be fine.

Adorable. Thinking of getting one of those, but my new leather couch!

Depends on who you ask:

Is 220 W considered high, low or medium for a gaming console? I'm not sure, but I think the PS4 was around 80 W, so the PS5 is obviously above that. How is it perceived in general?

The PS4 Slim might be 80 W, but the main SKU was 150 W. The PS4 Pro was also 150 W, the X1X topped out at 170 W, and the PS3 was around 200 W. Both consoles are pushing the limits of a console TDP, and that's a good thing.

Is 220 W considered high, low or medium for a gaming console? I'm not sure, but I think the PS4 was around 80 W, so the PS5 is obviously above that. How is it perceived in general?

EDIT: Also, it seems that he is using the PS5 launch model (not the revised model).

To say it's unoptimized is kind of nonsense when an MS-owned team helped work on it... You seriously think they stepped in to help optimize and then didn't really optimize? Lol, come on man, work with me here.

And? Is that meant to render my point null or something?

PS3 was 200 W?? WTF, that's above the PS4 and way above what I thought. Interesting, thanks for the info.

The PS4 Slim might be 80 W, but the main SKU was 150 W. The PS4 Pro was also 150 W, the X1X topped out at 170 W, and the PS3 was around 200 W. Both consoles are pushing the limits of a console TDP, and that's a good thing.

PS5 launch models and all other models until the slim launches should consume the same amount of power. All they changed was the cooling solution, but the games and the GPU should continue to consume the same amount of power.

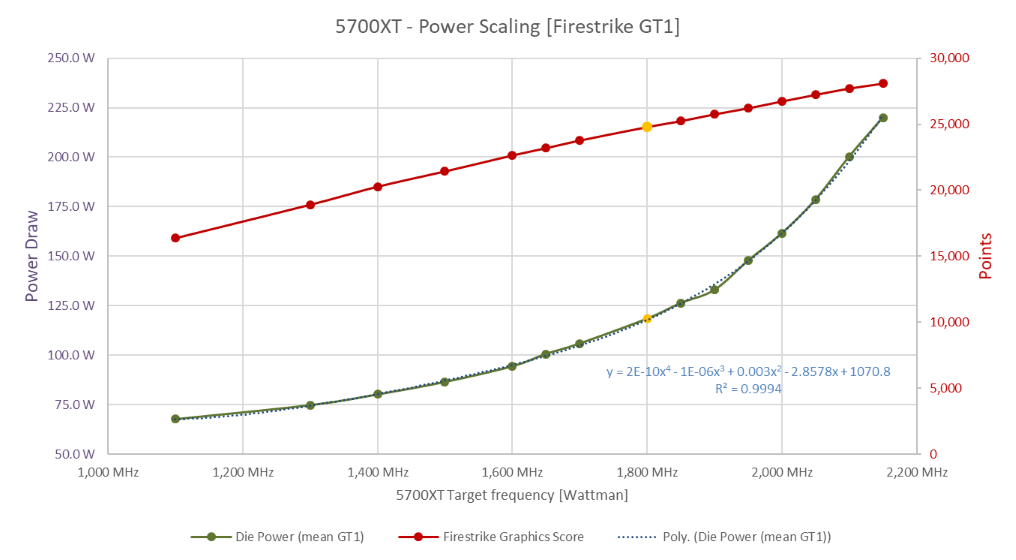

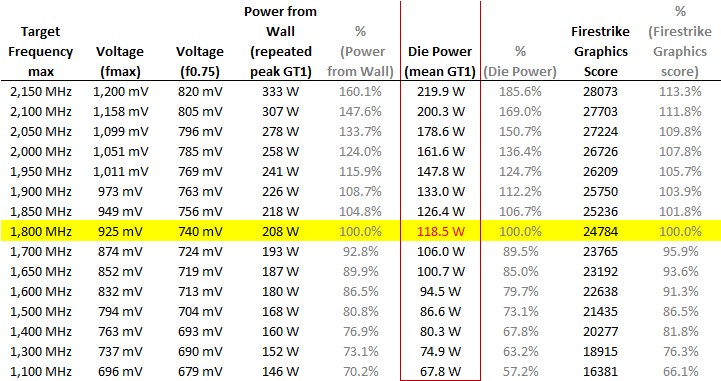

Some PS5 games go up to 230 watts! What's probably happening here is that the demo is capped at 30 fps, so the XSX and PS5 GPUs are not being maxed out. It would be interesting to see what the power consumption is like when flying through the environment, which is when the frame rate consistently drops.

1. I would have thought there would be at least a 20 W to 30 W difference between the PS5 and XSX, but it's closer to 10 W to 15 W.
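Here is a rough sketch of why a 30 fps cap can keep the GPUs below their maximum draw. At 30 fps every frame has a fixed time budget, and a GPU that finishes early simply waits for the next frame. The render times below are hypothetical and were not measured on either console. Treating GPU busy time as a proxy for load is also a simplification.

```python
# Hypothetical illustration: how a 30 fps cap can leave GPU headroom.
# The render times used below are invented, not measured on XSX or PS5.

def frame_budget_ms(fps_cap: float) -> float:
    """Time available per frame, in milliseconds, at a given frame-rate cap."""
    return 1000.0 / fps_cap


def gpu_busy_fraction(render_ms: float, fps_cap: float) -> float:
    """Fraction of each capped frame interval that the GPU spends working.

    If the GPU finishes a frame early, it idles until the next frame is due.
    If it overruns the budget, the frame rate drops and the GPU counts as 100% busy.
    """
    return min(render_ms / frame_budget_ms(fps_cap), 1.0)


if __name__ == "__main__":
    for render_ms in (20.0, 25.0, 40.0):  # hypothetical GPU frame times
        busy = gpu_busy_fraction(render_ms, fps_cap=30)
        print(f"{render_ms:4.1f} ms of GPU work per frame -> busy {busy:.0%} of the 33.3 ms budget")
```

When the scene gets heavy enough that render time exceeds the budget, the GPU is busy all the time. That is the case where the frame rate drops, and it is also where the post expects power draw to climb.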

I also remember people saying that SmartShift would enable using less power for the same results as the XSX. It seems that's not true either.

Ah, power consumption. I remember trolls being very concerned, saying the PS5 wouldn't be able to keep up its clocks or power and would have to downclock, even though Cerny indicated that it rarely ever happened and only by a fraction. Good concern-trolling times.

I hope this and the games have finally shut that up.

Yep. The demo runs silently on my Series X. On my PS5 the fan is immediately noticeable, which is fine I guess, but then the coil whine starts ticking like crazy, which tells me I should never get a UE5 game on the PS5.

Crazy how well designed the Xbox is on all fronts: size, power, heat, power draw, noise levels.

PS5 is well designed too, but deffo pushes the hardware more.

I don't think anyone has that concern.

However, this should put to rest any concerns over the PS5 GPU or CPU not having access to enough power.

More detail here. The PS4 was a cheaper console, designed for an uncertain post-recession period for gaming. Gaming is in a much better state today.

PS3 was 200 W?? WTF, that's above the PS4 and way above what I thought. Interesting, thanks for the info.

| Model | PS3 | PS3 Slim |
|---|---|---|
| Standby | 1.22 W | 0.36 W |
| Idle operation | 171.35 W | 75.19 W |
| Blu-ray | 172.79 W | 80.90 W |
| YouTube | 181.28 W | 85.08 W |
| Play (idle) | 200.84 W | 95.35 W |
| Play (full load) | 205.90 W | 100.04 W |
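This short script turns the table into percentage savings for the Slim. It uses only the figures from the table; the dictionary and helper names are illustrative.

```python
# Power figures (watts) copied from the PS3 vs PS3 Slim table above.
PS3_POWER_W = {
    "Standby": (1.22, 0.36),
    "Idle operation": (171.35, 75.19),
    "Blu-ray": (172.79, 80.90),
    "YouTube": (181.28, 85.08),
    "Play (idle)": (200.84, 95.35),
    "Play (full load)": (205.90, 100.04),
}


def reduction_pct(original_w: float, slim_w: float) -> float:
    """Percentage drop in power draw going from the original PS3 to the Slim."""
    return (original_w - slim_w) / original_w * 100.0


if __name__ == "__main__":
    for mode, (original_w, slim_w) in PS3_POWER_W.items():
        pct = reduction_pct(original_w, slim_w)
        print(f"{mode:<17} {original_w:7.2f} W -> {slim_w:6.2f} W ({pct:.1f}% less)")
```

Under full load, for example, the Slim draws roughly 51% less than the launch PS3. This is the kind of die-shrink gain the thread finds interesting.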

www.thehomehacksdiy.com


Why wouldn't people care about power consumption? It's a scarce resource, and it may point to one console being less power-efficient.

People caring about console power draw is the weirdest thing to me.

Why? For techies, stuff like this is fascinating. We have been talking about this for over two years now on this very board.

People caring about console power draw is the weirdest thing to me.

This! It's fucking stupid for people to be making list wars out of power consumption.

I'm a "techie"; hell, I do it for a living. I still don't care about one console with different components drawing 180 W vs another one with different components drawing 200 W. What is interesting to me is when die shrinks or other optimizations of the same console occur. SlimySnake's comparison of the PS3 to the PS3 Slim is cool, but the power draw of Series S vs X vs PS5 has zero influence on my decision to get any of those consoles.

It's quite interesting data, especially on the performance side.

This! It's fucking stupid for people to be making list wars out of power consumption.

That Matrix demo is already using hardware RT and mesh/primitive shaders. Microsoft can add to the Unreal Engine code base, like The Coalition already did with this demo and like Nvidia did with RT and DLSS. Epic are pretty good when it comes to letting companies add to it.

Engines need rewrites for more compute. The best thing MS could do is write their own multi-platform engine called Unrealer Engine (also for PC). It would include mesh shading, VRS, SFS, DirectStorage and basically all the RDNA 2 features (or 4). They could release it in beta with the GDK and the next console launch, so that devs have the engine 1.5 years before release. They can easily take on Epic if they want.

At least then you know all the features are used. Let The Coalition build the engine.

Microsoft should also have gone with variable clocks depending on power consumption and stayed in that 200-220 W range more consistently. They could have boosted the clocks without changing the thermal design.

Are people still unaware nearly a year later? Microsoft went with a larger chip at lower clocks; Sony went with a smaller chip with a higher-clocked GPU. The natural result is that the PS5 uses a bit more power to get where it is: 52 CUs vs 36, 1.825 GHz vs up to 2.23 GHz. Higher clocks increase power use more than a wider design does.

The PS5 also uses a variable clock system based on the instruction mix rather than on temperature. The power controller looks at the instruction mix and adjusts the clocks based on the expected power use. It can do this for just milliseconds within a frame, so the GPU can generally run at peak. The design avoids having to build the system for the worst-case instruction mix. Instead of sizing the cooling for that case, they can just drop the clocks for a few milliseconds when those rare worst-case power situations come up.

"Using it better" and "Stressing it more" are both bad takes; this is exactly what we would have expected, since we knew the specs.
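This is a toy sketch of the activity-based (rather than temperature-based) variable clock idea described above. Everything in it is hypothetical: the power budget, the clock ladder, the linear power model, and the single `activity` number standing in for the instruction mix. None of it is Sony's actual algorithm. What it shows is that the clock depends only on the estimated workload, so the same workload always gets the same clock, whatever the room temperature.

```python
# Toy, hypothetical activity-based clock governor. The budget, clock ladder and
# power model are invented for illustration; this is not Sony's algorithm.

POWER_BUDGET_W = 200.0                      # hypothetical SoC power budget
CLOCK_STEPS_GHZ = [2.23, 2.18, 2.13, 2.08]  # hypothetical clock ladder, highest first
WATTS_PER_GHZ_AT_FULL_ACTIVITY = 95.0       # hypothetical calibration constant


def estimated_power_w(activity: float, clock_ghz: float) -> float:
    """Estimate power from workload activity (0..1) and clock.

    Deliberately simplistic: linear in clock, no voltage scaling, and one
    'activity' number standing in for the whole instruction mix.
    """
    return activity * WATTS_PER_GHZ_AT_FULL_ACTIVITY * clock_ghz


def pick_clock(activity: float) -> float:
    """Return the highest clock step whose estimated power fits the budget.

    Temperature is never consulted, so a given activity level always maps to
    the same clock. In this toy model the lowest step is returned even if it
    still exceeds the budget.
    """
    for clock in CLOCK_STEPS_GHZ:
        if estimated_power_w(activity, clock) <= POWER_BUDGET_W:
            return clock
    return CLOCK_STEPS_GHZ[-1]


if __name__ == "__main__":
    # Hypothetical per-millisecond activity samples within one frame.
    trace = [0.60, 0.75, 0.80, 0.95, 1.00, 0.70]
    for ms, activity in enumerate(trace):
        print(f"t={ms} ms  activity={activity:.2f} -> {pick_clock(activity):.2f} GHz")
```

In this made-up trace the clock stays at the top step for typical activity and only dips briefly on the heaviest samples. That is the "drop the clocks for milliseconds" behaviour the post describes.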

Slightly different designs, but as far as I can see the results are trading blows and are largely very similar.
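The quoted specs (52 CUs at 1.825 GHz vs 36 CUs at up to 2.23 GHz) give a rough peak FP32 throughput of CUs × 64 lanes × 2 FLOPs per clock (one fused multiply-add) × clock. The 64-lanes-per-CU and 2-FLOPs-per-FMA figures are the usual RDNA 2 assumptions. This sketch says nothing about power draw or real-game performance.

```python
def fp32_tflops(compute_units: int, clock_ghz: float,
                lanes_per_cu: int = 64, flops_per_lane_per_clock: int = 2) -> float:
    """Peak FP32 throughput in TFLOPS.

    CUs x FP32 lanes per CU x FLOPs per lane per clock x clock (GHz) gives
    GFLOPS; divide by 1000 for TFLOPS. An FMA counts as 2 FLOPs.
    """
    gflops = compute_units * lanes_per_cu * flops_per_lane_per_clock * clock_ghz
    return gflops / 1000.0


if __name__ == "__main__":
    xsx = fp32_tflops(52, 1.825)  # Series X: wider GPU, fixed lower clock
    ps5 = fp32_tflops(36, 2.23)   # PS5: narrower GPU, higher peak clock
    print(f"Series X: {xsx:.2f} TFLOPS")
    print(f"PS5 (at peak clock): {ps5:.2f} TFLOPS")
```

The higher clock closes part of the gap left by the lower CU count, on paper at least. This is the wide-and-slow vs narrow-and-fast trade-off discussed in the thread.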

Yeah, Metro seems to be the only game I've seen so far draw closer to 230 W. There was a brief spike in the Matrix demo that hit 220 W. I don't foresee any of the consoles drawing more than ~230 W during normal gameplay, 60 fps or not. One thing Mark Cerny was pushing was for developers to optimize for power draw, which is rather interesting.

Some PS5 games go up to 230 watts! What's probably happening here is that the demo is capped at 30 fps, so the XSX and PS5 GPUs are not being maxed out. It would be interesting to see what the power consumption is like when flying through the environment, which is when the frame rate consistently drops.

FTFY

You are in a Matrix thread; you should know the answer, coppertop.

I didn't even think of that. Fascinating.

With PSVR 2 we will see the system power draw increase because of the HMD. That 350 W PSU makes sense for the PS5.


The troll take is that there are 15 watts of performance left on the table on the X, which lazy and dim developers fail to take advantage of. In all seriousness, I think both consoles seem fine hardware-wise, but supposedly better yields probably help the PS5 push more units.

Crazy that these consoles are 220 W, while here in PC land the GPU alone is 330 W and it's normal for the whole system to take 600 W.


Yup, a single PC GPU can draw more power than the entire console system.

Interesting. It's also crazy to see consoles deliver this stuff at this performance, considering they consume way less power than a gaming PC. They are super well optimized, and it makes me wonder what is going on with PC manufacturers.

I mean, if the GPU, CPU and memory are pretty similar in technology and performance between these consoles and PCs, why do similar PCs consume way more?

Yep, but why? I mean, let's say the PC one is a similar Zen 2 with a similar clock. Why does it draw way more power than the console one? Maybe embedding it into an APU reduces the power needed? I have no idea.

Yup, a single PC GPU can draw more power than the entire console system.

The architecture is optimized differently. Think of consoles like laptops: they are tuned differently because of power and thermal constraints. In the case of consoles, you have a CPU and GPU combined in one package, so they have to make sure the power draw is low.

Yep, but why? I mean, let's say the PC one is a similar Zen 2 with a similar clock. Why does it draw way more power than the console one? Maybe embedding it into an APU reduces the power needed? I have no idea.
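The textbook dynamic-power approximation for CMOS logic, P ≈ C·V²·f, is one common way to see why a similar chip can draw much more power in a desktop. Pushing clocks higher usually also needs a higher voltage, and voltage enters squared. The operating points below are hypothetical and are not the specs of any real console or desktop CPU. Leakage power is ignored.

```python
def relative_dynamic_power(voltage_v: float, clock_ghz: float,
                           ref_voltage_v: float, ref_clock_ghz: float) -> float:
    """Ratio of dynamic power between two operating points of the same chip.

    Uses P ~ C * V^2 * f. Switched capacitance C is the same for both points
    (same silicon), so it cancels out. Leakage power is ignored.
    """
    return (voltage_v ** 2 * clock_ghz) / (ref_voltage_v ** 2 * ref_clock_ghz)


if __name__ == "__main__":
    # Hypothetical (volts, GHz) operating points for the same 8-core design.
    console_like = (1.00, 3.5)  # tuned for a tight, shared APU power budget
    desktop_like = (1.30, 4.4)  # pushed for peak clocks
    ratio = relative_dynamic_power(*desktop_like, *console_like)
    extra_clock = desktop_like[1] / console_like[1] - 1
    print(f"~{extra_clock:.0%} more clock -> ~{ratio:.1f}x the dynamic power")
```

With these made-up numbers, about 26% more clock costs roughly twice the dynamic power. This is why a console APU held to lower clocks and voltages can draw far less than a desktop part built on similar cores.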

I mean, in terms of cooling, PCs have monster, noisy coolers or liquid cooling that I assume does a better job than the console one. Or isn't that the case?

It's almost as if MS had time to improve the efficiency of an existing GPU, while Sony had to design a new one from the beginning.

I also agree that I would have liked to see the Series X GPU hit 2 GHz or 2.1 GHz, but there must be a reason MS went with 1.8 GHz that we are unaware of.

Thanks for this. The PS3 SS I have is super quiet and does not give off much heat either. It's almost like it's not even on when playing digital games.

More detail here. The PS4 was a cheaper console, designed for an uncertain post-recession period for gaming. Gaming is in a much better state today.

Although the PlayStation 3 has a 380 W power supply, it only consumes 170-200 W in game mode with the 90 nm Cell CPU. The newer 40GB PlayStation 3 model, which comes with a 65 nm Cell CPU/90 nm RSX, consumes 120-140 W during normal use. The PlayStation 3 Slim, with a 45 nm Cell CPU/40 nm RSX, consumes 65-84 W during normal use.

In this table you can see exactly how much power each PS3 model consumes in different modes:

| Model | PS3 | PS3 Slim |
|---|---|---|
| Standby | 1.22 W | 0.36 W |
| Idle operation | 171.35 W | 75.19 W |
| Blu-ray | 172.79 W | 80.90 W |
| YouTube | 181.28 W | 85.08 W |
| Play (idle) | 200.84 W | 95.35 W |
| Play (full load) | 205.90 W | 100.04 W |

I wrote a similar article on PS4 power consumption, so if you are interested, you can read it: How Much Electricity Does a PS4 Use. If we compare PS3 power consumption with other popular consoles, we can see that the Xbox 360 uses 187 watts in gaming mode, while the Nintendo Wii uses only 40 watts. Let's go a little deeper into the topic.

How Much Electricity Does a PS3 Use? - The Home Hacks DIY

How Much Electricity Does a PS3 Use? If you're playing a PS3 with a normal LED TV, you will use approximately 230 watts (170 watts for the PS3 + 60 watts for the…

www.thehomehacksdiy.com
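The article's figures (170 W for the PS3, about 230 W with the TV) convert to energy with kWh = W × hours / 1000. The play time and electricity price below are hypothetical placeholders, so substitute your own.

```python
def energy_kwh(power_w: float, hours: float) -> float:
    """Energy in kilowatt-hours for a constant power draw over `hours`."""
    return power_w * hours / 1000.0


def energy_cost(power_w: float, hours: float, price_per_kwh: float) -> float:
    """Cost of that energy at a flat tariff (currency units per kWh)."""
    return energy_kwh(power_w, hours) * price_per_kwh


if __name__ == "__main__":
    hours = 3 * 30          # hypothetical: 3 hours a day for 30 days
    price_per_kwh = 0.15    # hypothetical flat tariff
    for label, watts in [("PS3 only", 170), ("PS3 + LED TV", 230)]:
        kwh = energy_kwh(watts, hours)
        cost = energy_cost(watts, hours, price_per_kwh)
        print(f"{label}: {kwh:.1f} kWh, about {cost:.2f} at {price_per_kwh}/kWh")
```

This treats power draw as constant. Real consumption varies with the mode, as the table shows.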
