Here's the flip side: at significantly lower cost, the 3080 performs almost as well. AMD has its work cut out to catch up. The VII has 60 CUs; scale that to 80, add the IPC gain... eh. They have to execute very well, like perfectly, to have any hope of catching up, and that's without considering DLSS. Nvidia may market the 3090 as an 8K card because they're going to sell it to whoever's willing to buy it, but I agree that it's more of an upgraded 3080 with more VRAM for workloads that need it, though it falls short of a Titan by being gimped in several important ways. That said, with DLSS on, the 3090 is absolutely the best 8K experience you can get today.
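To put the "scale to 80, add the IPC gain" hand-wave into numbers, here's a minimal Python sketch that assumes performance scales perfectly linearly with CU count, IPC and clock. Real cards never scale that cleanly (memory bandwidth, power limits and front-end bottlenecks all eat into it), so read the result as an optimistic ceiling. The function name and the 10% IPC figure are hypothetical placeholders, not AMD numbers.

```python
def naive_scaled_perf(base_cus: int, target_cus: int,
                      ipc_gain: float, clock_ratio: float = 1.0) -> float:
    """Relative performance of a hypothetical target card vs. a base card.

    Assumes linear scaling with CU count, IPC and clock (an optimistic
    simplification). ipc_gain is a fraction, e.g. 0.10 for +10%.
    """
    if base_cus <= 0 or target_cus <= 0:
        raise ValueError("CU counts must be positive")
    cu_ratio = target_cus / base_cus
    return cu_ratio * (1.0 + ipc_gain) * clock_ratio


if __name__ == "__main__":
    # Radeon VII (60 CUs) scaled to 80 CUs with a made-up 10% IPC gain, same clock.
    print(f"{naive_scaled_perf(60, 80, ipc_gain=0.10):.2f}x")  # prints 1.47x
```

Even under these generous assumptions, the result depends heavily on the IPC number you plug in, which is exactly the part nobody knows yet.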

The Radeon VII was built on the GCN architecture, essentially part of the Vega line of cards such as the Vega 64.

The 5700 XT was the follow-up on the new RDNA architecture. With 40 CUs it trades blows with a 2070S, as it should given its price range; it was a mid to mid-high range card.

The 6000 series cards that will be unveiled in October are RDNA2, which brings improvements in IPC, performance per watt, clock speeds and thermals. They also include ray tracing.

The XSX uses a 52 CU RDNA2 APU with some customisations (we don't know exactly how much it will differ from the final PC RDNA2 cards). Despite being a small APU that also includes the CPU, with low power draw, the XSX is said to be roughly on par with a 2080 (possibly better?).

We have seen one leaked benchmark of an unknown Radeon card that matches a 2080 Ti (presumably a 6700? That would compete well with a 3070).

Earlier in the year, a few months back, we saw an OpenVR benchmark of an unknown Radeon card running roughly 30% faster than a stock 2080 Ti, which would put it roughly on par with a 3080. We have no idea what this card was or what the configuration, drivers, etc. were, but normally things like this improve over time rather than get worse.

With all that in mind, I think it is very likely that this time RDNA2 will trade blows with the 3000 series and compete well across (most of) the Nvidia stack. Given what we know, an 80 CU RDNA2 card (with the IPC and perf-per-watt improvements RDNA2 brings over RDNA1, plus whatever other architectural improvements come with it) on a larger die (500+ mm²), with higher power draw (270 to 300 W) and roughly PS5 clock speeds (2.2 GHz boost clock?), could give them something quite good on their hands.
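For a rough sense of what that hypothetical 80 CU / 2.2 GHz configuration means on paper, here's a minimal Python sketch of the usual peak FP32 throughput formula for GCN/RDNA: 64 stream processors per CU, with each fused multiply-add counted as 2 FLOPs per clock. The 80 CU / 2.2 GHz inputs are the speculative figures from above, not a confirmed spec; the XSX line uses Microsoft's announced 52 CUs at 1.825 GHz as a sanity check against its advertised ~12 TFLOPS.

```python
SHADERS_PER_CU = 64          # stream processors per CU in GCN and RDNA
FLOPS_PER_SHADER_CLOCK = 2   # one fused multiply-add counts as 2 FLOPs


def peak_fp32_tflops(cus: int, clock_ghz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS (not real-world game performance)."""
    gflops = cus * SHADERS_PER_CU * FLOPS_PER_SHADER_CLOCK * clock_ghz
    return gflops / 1000.0


if __name__ == "__main__":
    print(f"XSX (52 CUs @ 1.825 GHz): {peak_fp32_tflops(52, 1.825):.1f} TFLOPS")
    print(f"Hypothetical 80 CUs @ 2.2 GHz: {peak_fp32_tflops(80, 2.2):.1f} TFLOPS")
```

Peak TFLOPS doesn't translate directly into frame rates, especially across architectures (Ampere's FP32 figures in particular don't map to gaming performance one-to-one), so this only shows the raw shader budget such a card would have.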

Nobody is sure yet how their ray tracing performs or how it compares to Ampere, but given that Ampere didn't live up to the hype in terms of RT gains over Turing, it is very possible AMD could be quite competitive here. Maybe Ampere will still have better RT performance overall, but it could be closer than a lot of people thought a few weeks ago. We won't know for sure until the reveal and benchmarks, but I think they could perform reasonably well here.

DLSS? At the moment it seems like cool tech, but it's only supported in around 6 games so far, so I wouldn't base a purchase solely on it, although it can be a nice bonus. Do AMD have anything to compete with it? Possibly; they have been very tight-lipped so far and haven't mentioned anything, so we can only wait and see.

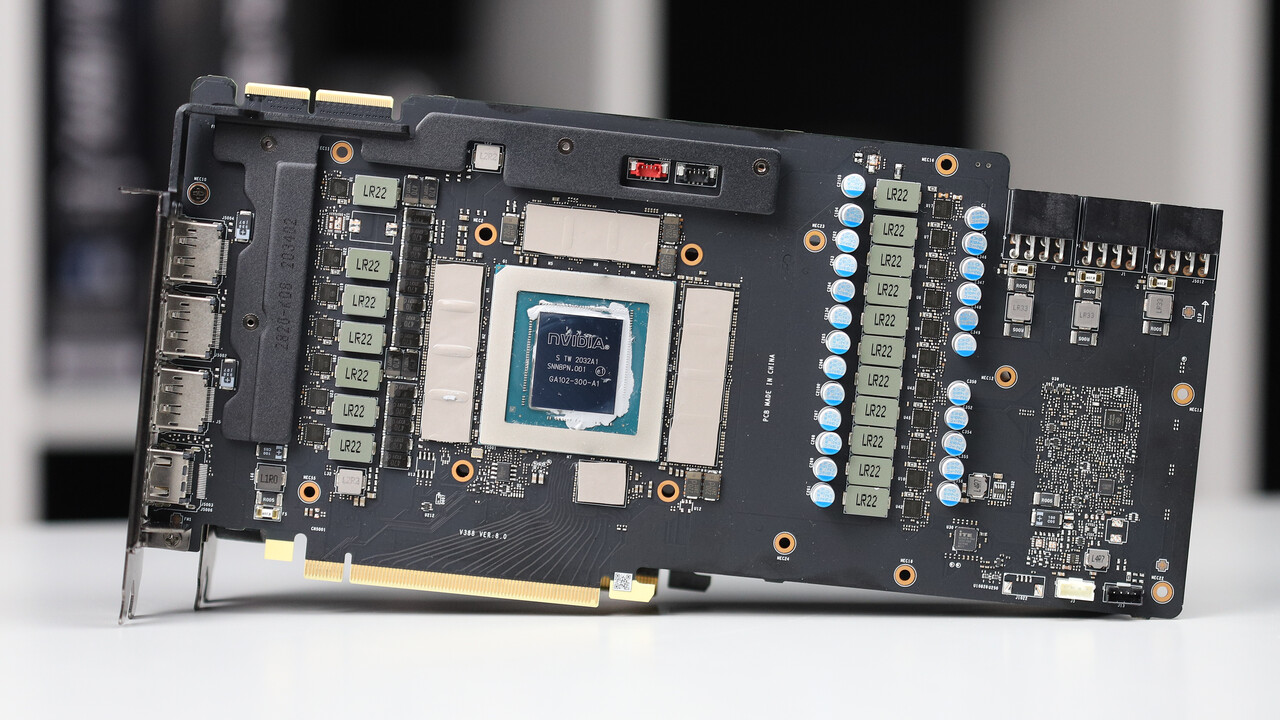

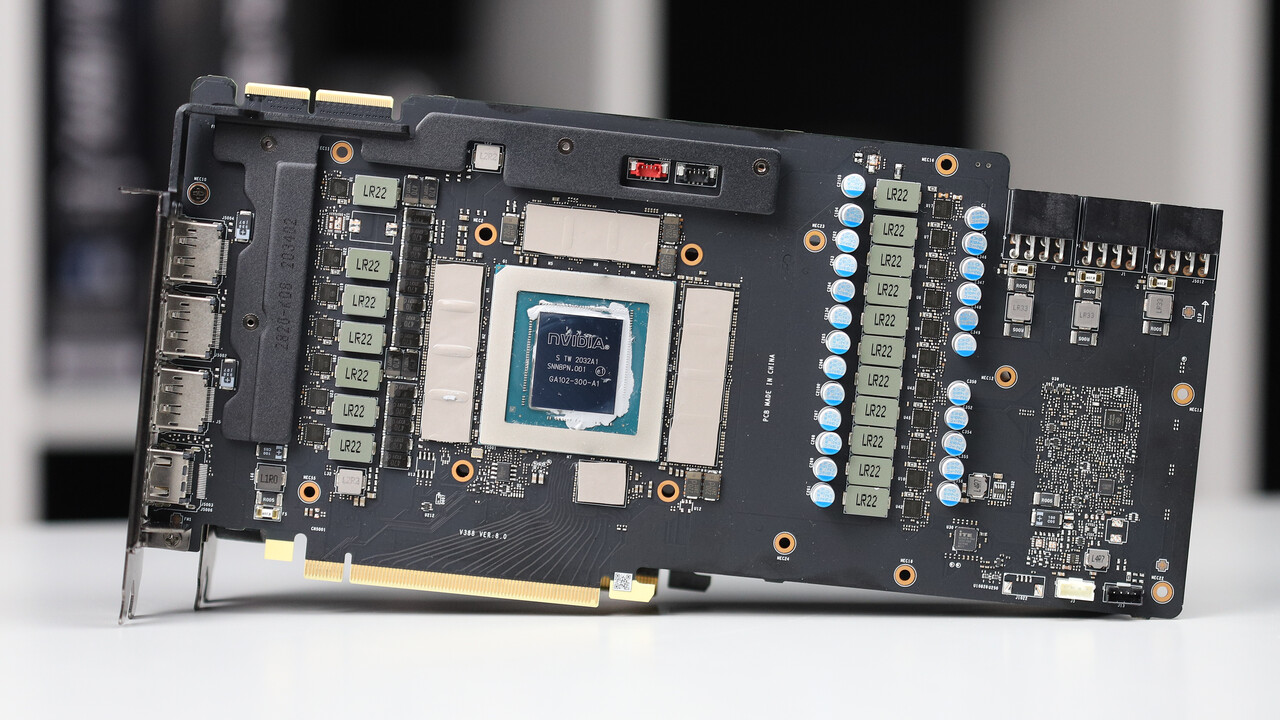

Plus, Nvidia is on an inferior Samsung node, hence the huge power draw and extravagant cooling solutions. I think AMD might surprise many and compete quite well, but who knows, maybe they will fuck it up somehow? Hard to say for certain, but all the evidence we have so far points to them having something good cooking.

www.computerbase.de