If that's something you feel you have to do then I won't be able to suggest otherwise, though it is a shame if your takeaway from my comments was that negative. However, even in this response there are things I can point out you're doing which are maybe clouding your sense of judgement.

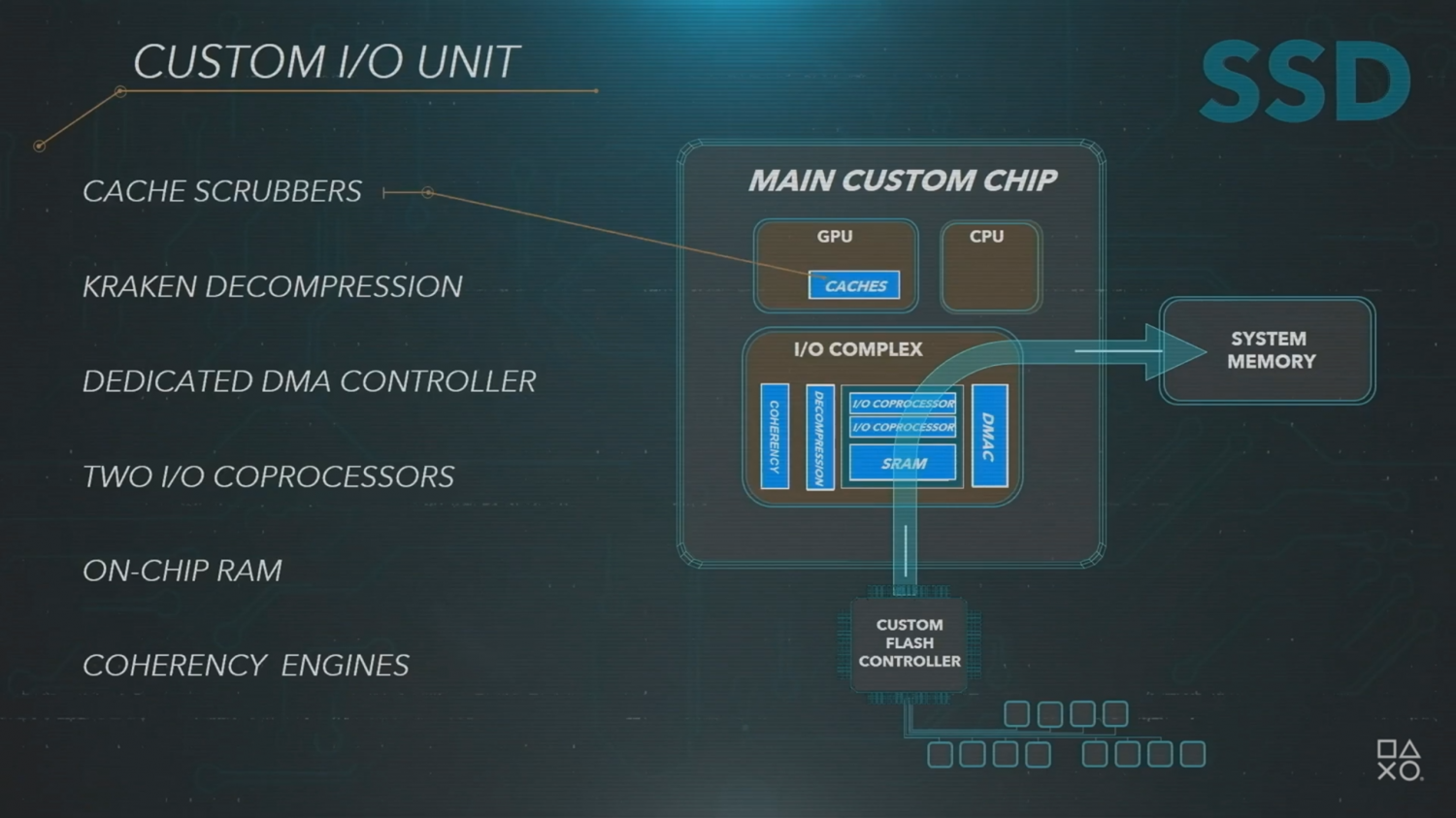

See, you're still making the critical mistake of comparing these approaches as if they are apples-to-apples. They are not. They all see the same problems in terms of current I/O, but have taken different, VALID approaches to solving them that suit the range of hardware implementations expected to support them. Sony's is more hardware-dependent, MS's and NV's are more hardware-agnostic.

The problem is that you and several other posters then take this and try objectively comparing one to the other to then make an absolute statement that one (usually Sony's) is objectively better, but then a few take it to such lengths that they essentially treat other approaches as being invalid. That is contrary to how the tech world has operated, wherein there have always been multiple valid approaches and more than a few that are within a hair's breadth of each other in terms of overall effectiveness for the implementations they go in.

I feel, personally, that this level of judgement on solutions that we've yet to see in real-time gameplay (yes, that includes the UE5 demo too; as impressive as it was, there were few to no mechanics at work typical of a real game, simplified physics models, no NPC A.I. or other game logic really occurring, etc.) is ultimately unnecessary.

About the Road to PS5 stuff: I am not saying it was 100% marketing talk. Never have said that. However, if you don't think Mark Cerny was embellishing small aspects of some of the features he spoke on, or subtly downplaying certain design features that they're aware aren't necessarily playing to the PS5's strengths, you have to be fooling yourself. The GDC presentation may've been a call to developers to get their briefing, but it also served at least in some capacity as a pseudo-advert for the PS5. After all, it was the system's first time being discussed in public in any serious official capacity at great length, and the fact they messaged the presentation to followers on Twitter further supports this.

There was some element of PR involved in that presentation, but Sony aren't the only company that does this. Microsoft's done it, Nvidia did a bit of it, AMD did a bit of it, etc. So I don't see anything to be affronted by in me stating the obvious. The encoding and latency improvements from PCIe 3.0 to 4.0 are nothing to sneeze at; you are merely (softly) disregarding them for whatever reason, though I don't see why to do so in the first place. It's a net improvement overall, and believe it or not there are methods available to companies like NV to mitigate PCIe lane latency issues should they prove to be an issue here or there. It may not be "tightly coupled" like it is in the consoles but that doesn't mean NV haven't put a lot of R&D into this area and designed a setup which is as well integrated as they can make it.

Regarding the "band-aid" stuff, AFAIK there is almost never an instance in the tech world where someone refers to a product as a "band-aid" with positive connotations. Every reference I have seen has generally carried negative connotations, e.g. the SEGA 32X. I also feel referring to the work Nvidia are doing here with I/O as a band-aid is, again, dismissive of the effort they've put into this, and once again it turns looking at these various solutions from a sum of great options into a needless "my preferred solution is better than these other ones!", even if those aren't the direct words used. In truth, ALL of these solutions will have their benefits and drawbacks, so it's generally best to wait until real games utilizing the solutions come out for all these various platforms to see how they truly stack up against one another.

And even in that case, over the majority of titles I'm expecting relatively equal performance metrics. Some might have slight or more noticeable edges in very specific areas, but no one solution is going to "reign king among them all", as it were. None of these companies are asleep at the wheel on pushing forward I/O solutions. Absolutely not a one of them.

jimbojim

It's okay man; Papacheeks basically said what I was trying to communicate.