Yes, this happened all the time. However much everybody hates it, at the end of the day it's better than the 1.5 GB the card would otherwise have ended up with.

Anyway, this is what I get from the spec sheet on the Microsoft website.

| Memory Bandwidth | 10 GB @ 560 GB/s, 6GB @ 336 GB/s |

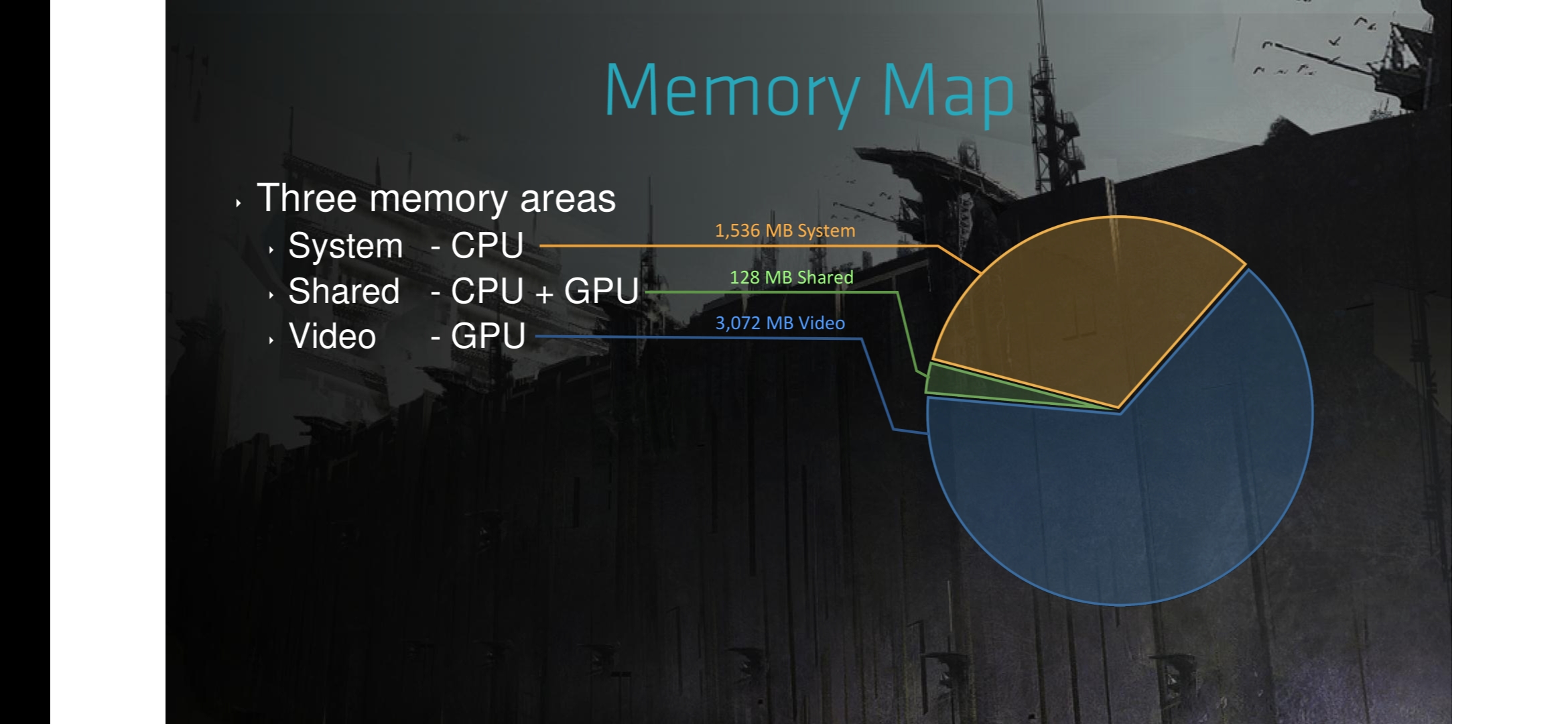

Why is it not 16 GB @ 336 GB/s and 10 GB @ 560 GB/s? That's what you would normally state if it was a shared memory pool.

What Microsoft makes it sound like here is that they somehow have a split memory pool.

I would like to see some more information from Microsoft on this matter, because if that memory is designed like the 660 Ti's, then yeah, that's not looking good.

If it's split, then honestly it will outperform Sony royally. No way in hell they need more than 10 GB of VRAM for the next generation.

From Eurogamer:

https://www.eurogamer.net/articles/digitalfoundry-2020-inside-xbox-series-x-full-specs

Going by this, it seems like it's a split pool, which makes sense then. So yeah, no issue there at all.
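For what it's worth, the two bandwidth numbers in the spec line can be reproduced with simple bus-width arithmetic. This is only a rough sketch: the 14 Gbps per pin, the 32-bit interface per GDDR6 chip, and the layout of ten chips (all ten backing the 10 GB region, six of them also backing the 6 GB region) are my assumptions from the reported hardware details, not something the spec sheet row itself states. The function and constant names are made up for illustration.

```python
def bandwidth_gb_per_s(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak bandwidth in GB/s: data pins * Gbit/s per pin / 8 bits per byte."""
    return bus_width_bits * gbps_per_pin / 8


# Assumed figures (not in the spec sheet row quoted above).
BITS_PER_CHIP = 32      # interface width of one GDDR6 chip
GBPS_PER_PIN = 14.0     # data rate per pin
CHIPS_FAST_REGION = 10  # chips assumed to back the 10 GB region
CHIPS_SLOW_REGION = 6   # chips assumed to back the 6 GB region

fast = bandwidth_gb_per_s(CHIPS_FAST_REGION * BITS_PER_CHIP, GBPS_PER_PIN)
slow = bandwidth_gb_per_s(CHIPS_SLOW_REGION * BITS_PER_CHIP, GBPS_PER_PIN)

print(f"10 GB region: {fast:.0f} GB/s")
print(f" 6 GB region: {slow:.0f} GB/s")
```

Under those assumptions the 10 GB region works out to a 320-bit path and the 6 GB region to a 192-bit path, which would line up with the 560 and 336 GB/s figures Microsoft lists.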