Lisa Su, why do you do this to us??

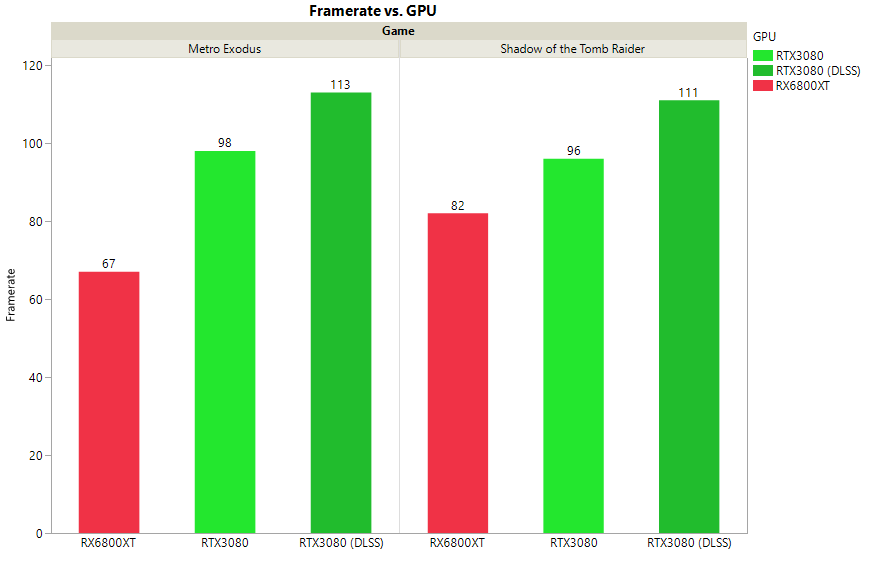

I don't get who the 6800XT is for. It's on par with or maybe slightly better than the 3080 in rasterization, but way behind in ray tracing, and yet it costs the same. They don't even offer any proprietary tech that could somehow entice you to go with their GPU over the competition. And their software stack is miserable compared to Nvidia's. So really, who is this for? People who only play old rasterized games? Makes no sense to me at this price point.