...now, if only there were an AMD card with 36 CUs so that we could test this.

Oh snap! The plain RX 5700 has 36 CUs and 448 GB/s of memory bandwidth, just like the PS5.

What do we know about the 5700? Game clock 1.625 GHz, boost clock 1.725 GHz.

But let's say these limits were only there to leave room for the 5700 XT.

With some BIOS tweaking, you can unlock the restrictions and overclock as much as you like. No matter the cooling solution, though, it can't get anywhere close to 2.2 GHz for the life of it.

Let's move on to its bigger brother, the 5700 XT: game clock 1.755 GHz, boost clock 1.905 GHz.
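To put numbers on the gap, here is a quick sketch that computes how far the ~2.2 GHz figure sits above each card's rated clocks. It only uses the clocks quoted in this post; `uplift_needed` and `TARGET_GHZ` are names made up for the illustration.

```python
# Rated clocks quoted in this post, in GHz
cards = {
    "RX 5700": {"game": 1.625, "boost": 1.725},
    "RX 5700 XT": {"game": 1.755, "boost": 1.905},
}
TARGET_GHZ = 2.2  # the ~2.2 GHz figure discussed above (hypothetical constant name)


def uplift_needed(rated_ghz, target_ghz=TARGET_GHZ):
    """Percentage clock increase needed to go from rated_ghz to target_ghz."""
    return (target_ghz / rated_ghz - 1) * 100


for name, clocks in cards.items():
    print(f"{name}: +{uplift_needed(clocks['game']):.1f}% over game clock, "
          f"+{uplift_needed(clocks['boost']):.1f}% over boost clock")
```

Even the XT would need roughly 15.5% on top of its rated boost clock (about 25% over its game clock) to sustain 2.2 GHz, and the plain 5700 roughly 27.5% over boost.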

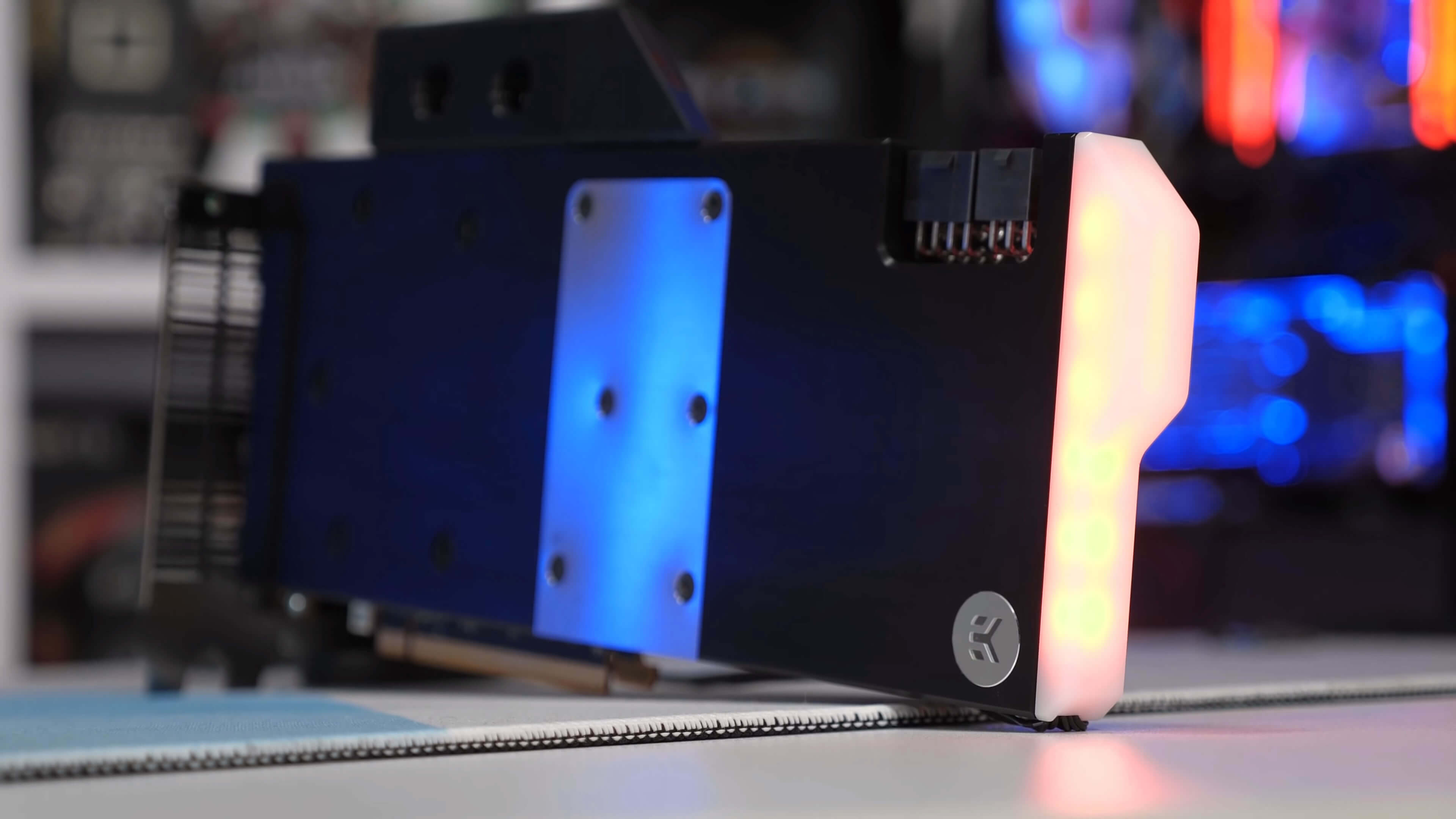

Here is a "no limits" overclocking test of the 5700 XT, in which the BIOS and registry were modified, a large liquid-cooling block was attached, and the card was overclocked to its absolute limit.

Notice that even though all barriers were lifted and the card could be set to 2.300 GHz, the silicon wasn't good for anything above 2.100 GHz. So it was set there, along with a lock that keeps it from dropping below 2.050 GHz.

> After testing the AMD's new Radeon 5700 GPUs and Nvidia's RTX Super answer, we are particularly happy about the value offered by the latest Radeons. The $400...

(www.techspot.com)

As anyone who reads the test can see, overclocking the 5700 XT up to its hardware limits, with temperatures taken completely out of the equation, bought an 18% increase in clock speed at the cost of a 40% increase in power consumption, and delivered only a ~7% average performance increase.
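The arithmetic behind "disappointing" is easy to sketch. This uses only the three percentages from the test as quoted above (stock = 1.0); the variable names are just for illustration.

```python
# Relative changes from the overclocking test quoted above (stock = 1.0)
clock_gain = 1.18  # +18% clock speed
power_gain = 1.40  # +40% power consumption
perf_gain = 1.07   # ~+7% average performance

# Performance per watt relative to stock
perf_per_watt = perf_gain / power_gain
# Performance per unit of clock relative to stock (how well FPS tracked MHz)
perf_per_clock = perf_gain / clock_gain

print(f"performance per watt: {perf_per_watt:.2f}x stock "
      f"({(1 - perf_per_watt) * 100:.0f}% worse)")
print(f"performance per clock: {perf_per_clock:.2f}x stock")
```

In other words, the overclocked card delivers roughly a quarter less performance per watt than stock, and performance doesn't even keep pace with the clock increase.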

The article calls the results "disappointing" but enlightening as to why AMD put these limits in place, and suggests it makes much more sense to buy a stronger GPU than to spend money on fancy cooling to raise the 5700 XT's clocks.

Now, what efficiency improvement do we expect from AMD's latest? 5%? 8%? Even at 10%, Cerny's magic words don't compute.
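One rough way to see why: fit a simple power-vs-clock curve to the single data point from the test (+18% clock for +40% power) and ask how much extra clock a given efficiency gain would buy at the same power. This is a hypothetical back-of-the-envelope model, not anything AMD or the article published. It assumes power scales as clock**k near the top of the curve, and it treats "efficiency improvement" as the same clock at proportionally lower power. `clock_headroom` is an invented helper name.

```python
import math

# Figures from the overclocking test quoted above (stock = 1.0)
CLOCK_GAIN = 1.18
POWER_GAIN = 1.40

# Assumption: power ~ clock ** POWER_EXPONENT, fitted from that one data point
POWER_EXPONENT = math.log(POWER_GAIN) / math.log(CLOCK_GAIN)


def clock_headroom(efficiency_gain, exponent=POWER_EXPONENT):
    """Hypothetical extra clock available at equal power.

    If the same clock now needs (1 - efficiency_gain) of the old power,
    then under power ~ clock ** exponent the clock at equal power scales by
    (1 / (1 - efficiency_gain)) ** (1 / exponent).
    """
    return (1 / (1 - efficiency_gain)) ** (1 / exponent) - 1


for gain in (0.05, 0.08, 0.10):
    print(f"{gain:.0%} efficiency gain -> ~{clock_headroom(gain):.1%} more clock")
```

Under this crude model, even a 10% efficiency gain only frees up about 5% more clock at the same power, well short of the jump needed to hold ~2.2 GHz.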

I guess we'll find out this Christmas who the troll is. It could turn out to be me, since I'm the one going through these numbers and drawing conclusions, but I'd suggest you not be so hasty to rule out that Cerny was the troll all along.