
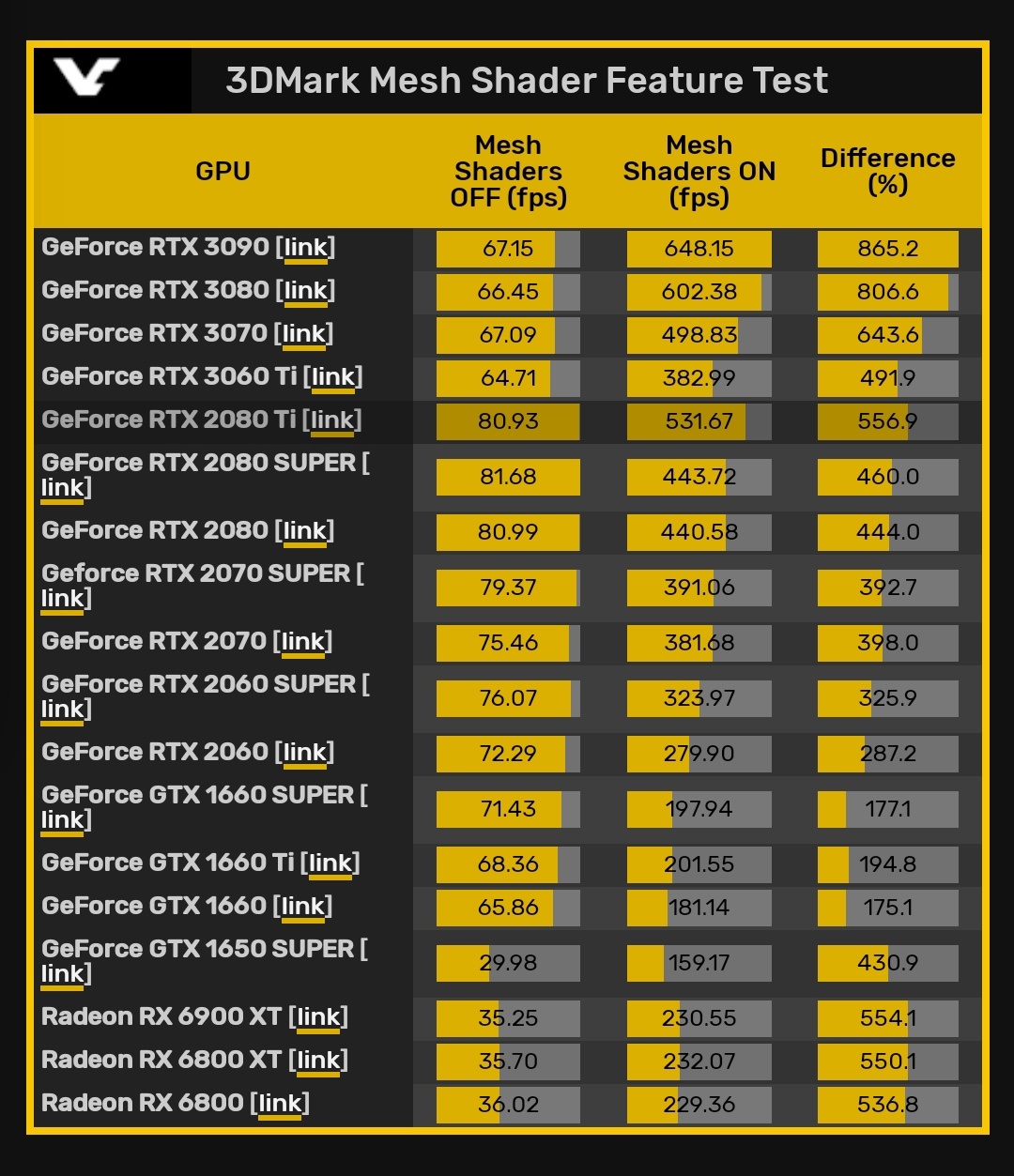

UL releases 3DMark Mesh Shaders Feature test, first results of NVIDIA Ampere and AMD RDNA2 GPUs

- Thread starter SmokSmog


Mister Wolf

Member

Ant vs an Elephant. Buying an AMD GPU is hustling backwards.

Andodalf

Banned

Ant vs an Elephant. Buying an AMD GPU is hustling backwards.

Gniltsuh?

SmokSmog

Member

The overall results are in line with nVidia.

RDNA2 is in the middle of Ampere and Turing.

- NVIDIA Ampere: 702%

- AMD RDNA2: 547%

- NVIDIA Turing (RTX): 409%

- NVIDIA Turing: 244%

RTX 3090 has almost 3x more FPS compared to 6900XT; those percentages show the gains with mesh shaders turned ON vs OFF.
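Taking the four percentages above at face value, a small sketch can check the "RDNA2 is in the middle" claim and express each architecture's gain as a multiple of RDNA2's. The dictionary and helper names are made up for illustration; only the four numbers come from the post.

```python
# Mesh-shader gains quoted in the thread (percent, mesh shaders ON vs OFF).
# Only these four numbers come from the post; the names below are illustrative.
GAINS = {
    "NVIDIA Ampere": 702,
    "AMD RDNA2": 547,
    "NVIDIA Turing (RTX)": 409,
    "NVIDIA Turing": 244,
}


def ranked(gains):
    """Return (architecture, gain) pairs sorted from largest to smallest gain."""
    return sorted(gains.items(), key=lambda kv: kv[1], reverse=True)


def relative_to(gains, baseline):
    """Express every gain as a multiple of the baseline architecture's gain."""
    base = gains[baseline]
    return {name: g / base for name, g in gains.items()}


if __name__ == "__main__":
    rel = relative_to(GAINS, "AMD RDNA2")
    for name, gain in ranked(GAINS):
        print(f"{name:20s} {gain:4d}%  ({rel[name]:.2f}x RDNA2's gain)")
```

Run as-is, it lists the architectures in descending order of gain; Ampere's uplift comes out at roughly 1.28x RDNA2's, and Turing RTX's at roughly 0.75x.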

ethomaz

Banned

RTX 3090 has almost 3x more FPS compared to 6900XT; those percentages show the gains with mesh shaders turned ON vs OFF.

Because it does the scene at higher fps (60 vs 30).

If you want to compare only the Mesh Shader feature, as the benchmark tries to do, you need to look at the improvement with the feature on vs. off.

BTW, why is AMD so bad at rendering that scene even with Mesh off?
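The point about comparing the on/off improvement rather than raw fps can be shown with a minimal sketch. It assumes the benchmark's percentage is the relative increase (on - off) / off * 100, which the thread does not spell out, and the frame rates are hypothetical, not 3DMark results.

```python
def mesh_shader_gain(fps_off: float, fps_on: float) -> float:
    """Percentage frame-rate increase when mesh shaders are enabled.

    Assumption: the benchmark reports (on - off) / off * 100.
    """
    if fps_off <= 0:
        raise ValueError("fps_off must be positive")
    return (fps_on - fps_off) / fps_off * 100.0


# Hypothetical frame rates (mesh shaders OFF, ON), NOT real 3DMark results.
# Card A is faster overall; card B is slower but benefits more from the feature.
CARDS = {
    "card A": (60.0, 300.0),
    "card B": (20.0, 120.0),
}

if __name__ == "__main__":
    for name, (off, on) in CARDS.items():
        print(f"{name}: {off:.0f} -> {on:.0f} fps, gain {mesh_shader_gain(off, on):.0f}%")
```

With these made-up numbers the slower card B shows the larger gain (500% vs 400%), which is why raw fps and mesh-shader uplift can rank cards differently.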


Elog

Member

But mesh shaders in SX, wow at that improvement.....it may be a bigger win than the TFLOPS difference.....

What? The relevant test would be AMD mesh shader implementation vs Nvidia mesh shader implementation vs Sony 'mesh shader' implementation. That test says nothing about that.

skit_data

Member

where is our resident amd boy?

RTX > Rdna2.

But mesh shaders in SX, wow at that improvement.....it may be a bigger win than the TFLOPS difference.....

What exactly are you implying?

SlimySnake

Flashless at the Golden Globes

that is absolutely awful. WTF is AMD doing?

Amazing performance gains on Nvidia cards. I think a 2060 will absolutely fucking annihilate the ps5 and xsx once devs start using mesh shaders. What a disaster.


Elog

Member

that is absolutely awful. WTF is AMD doing?

Amazing performance gains on Nvidia cards. I think a 2060 will absolutely fucking annihilate the ps5 and xsx once devs start using mesh shaders. What a disaster.

Sony has their own implementation - they did not go for the AMD variant.

Soulblighter31

Banned

Nvidia was looking years into the future when designing Turing and Ampere, while AMD was making cards that can play 2013 games very well. They practically looked backwards in their design.

Kataploom

Gold Member

What? The relevant test would be AMD mesh shader implementation vs Nvidia mesh shader implementation vs Sony 'mesh shader' implementation. That test says nothing about that.

The poster meant with regard to consoles alone, because consoles will never do better than PC due to the almost unlimited resources PC has (starting with budget).

I'm one of those saying that this gen would show fewer graphical differences than previous ones, but with these features that probably won't be the case... Unlike the 8th gen, which was basically 7th gen++ even graphically (for PC players at least).

Kazekage1981

Member

Sony has their own implementation - they did not go for the AMD variant.

I think it's the geometry engine? I know that none of the Vulkan, DX12 Ultimate stuff applies to PS5. On top of that, PS5 is highly customized and fine-tuned to Sony's own needs.

alabtrosMyster

Banned

DonJuanSchlong

Banned

Wonder if Lisa Su's sons will come in and somehow come to the conclusion that AMD won.

longdi

Banned

What exactly are you implying?

Mesh shaders and VRS are modern GPU features that don't show up in the TFLOPS metric. Now that we have numbers on their impact, I'm glad MS waited for full RDNA2.

SlimySnake

Flashless at the Golden Globes

I guess there is a silver lining here. It seems the AMD cards have awful performance even with Mesh Shaders off. And the percentage difference is actually somewhat on par with the 20 series GPUs.

skit_data

Member

Mesh shaders and VRS are modern GPU features that don't show up in the TFLOPS metric. Now that we have numbers on their impact, I'm glad MS waited for full RDNA2.

So you are suggesting it's dangerous to rely on TFLOPs as an absolute indicator of performance?

Hmm... could've sworn I heard that somewhere before. Well.

Elog

Member

I think it's the geometry engine? I know that none of the Vulkan, DX12 Ultimate stuff applies to PS5. On top of that, PS5 is highly customized and fine-tuned to Sony's own needs.

Yes - all the functionality of the mesh shaders (AMD and Nvidia) was implemented as part of the new geometry engine in the PS5.

SenjutsuSage

Banned

Hmm does not bode well for Consoles.

You do realize Series X fully supports this, right? There's already a video demonstration from Microsoft where Series X's mesh shading is running a demo at 4K that the 2080 Ti was only running at 1440p or something like that. Don't have time to look it up.

SenjutsuSage

Banned

Yes - all the functionality of the mesh shaders (AMD and Nvidia) was implemented as part of the new geometry engine in the PS5.

Mesh Shaders aren't in the PS5's geometry engine. PS5 doesn't support Mesh Shaders. It supports Primitive Shaders, which is more limited, still involves the input assembler, and doesn't go as far as mesh shaders does. I notice people keep giving features to the PS5 that even their lead architect hasn't said it supports. Only Microsoft and AMD have confirmed these features to be inside Xbox Series S/X.

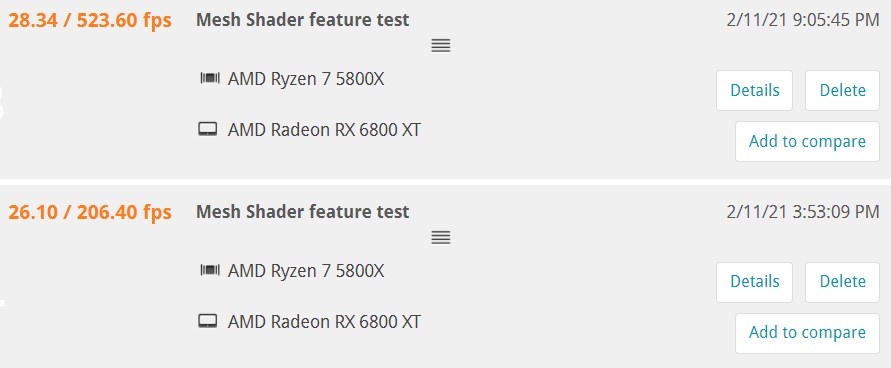

Also, this benchmark doesn't even appear to be properly coded for AMD hardware; instead it seems geared towards the Turing architecture, which is why Ampere isn't doing even better. So these AMD results could be even better if that's the case.


ethomaz

Banned

Mesh Shaders aren't in the PS5's geometry engine. PS5 doesn't support Mesh Shaders. It supports Primitive Shaders, which is more limited, still involves the input assembler, and doesn't go as far as mesh shaders does. I notice people keep giving features to the PS5 that even their lead architect hasn't said it supports. Only Microsoft and AMD have confirmed these features to be inside Xbox Series S/X.

Also, this benchmark doesn't even appear to be properly coded for AMD hardware; instead it seems geared towards the Turing architecture, which is why Ampere isn't doing even better. So these AMD results could be even better if that's the case.

So explain to me how Vulkan and OpenGL support Mesh Shaders too?

Mesh Shaders is a change in the shading pipeline... nVidia introduced it way before AMD or MS started talking about it.

PS5 supports that too... how Sony implemented it in their APU is what you need to ask.


Elog

Member

Mesh Shaders aren't in the PS5's geometry engine. PS5 doesn't support Mesh Shaders. It supports Primitive Shaders, which is more limited, still involves the input assembler, and doesn't go as far as mesh shaders does. I notice people keep giving features to the PS5 that even their lead architect hasn't said it supports. Only Microsoft and AMD have confirmed these features to be inside Xbox Series S/X.

First of all - Mesh shaders are a hardware implementation of primitive shaders.

Secondly - you are right that the above implementation of varying degrees of shader work and culling of geometry is something that the PS5 does not have.

However - culling of geometry, and which parts of that geometry get shaders applied (and to what extent), is instead handled at the geometry engine level.

I.e. PS5 has the full functionality (and according to claims - a bit more than that) of what the 'mesh shaders' are doing on Nvidia and AMD cards but the 'how' is different since it is all driven by the geometry engine instead of a hardware piece downstream of the GE.

Most people reading that PS5 does not have mesh shaders interpret that as if the PS5 does not have that function and that is incorrect. It has the full functionality but it is achieved differently.

Which hardware implementation of this functionality is better? Who knows but Cerny is good at what he is doing.

Dural

Member

Mesh Shaders aren't in the PS5's geometry engine. PS5 doesn't support Mesh Shaders. It supports Primitive Shaders, which is more limited, still involves the input assembler, and doesn't go as far as mesh shaders does. I notice people keep giving features to the PS5 that even their lead architect hasn't said it supports. Only Microsoft and AMD have confirmed these features to be inside Xbox Series S/X.

Also, this benchmark doesn't even appear to be properly coded for AMD hardware; instead it seems geared towards the Turing architecture, which is why Ampere isn't doing even better. So these AMD results could be even better if that's the case.

Nah, God Cerny saw how shitty AMD's implementation was and pulled his own perfect mesh shader out of his ass and told AMD to put it in their APU.

edit:

Which hardware implementation of this functionality is better? Who knows but Cerny is good at what he is doing.

See, Cerny knows better than AMD's engineers.


SenjutsuSage

Banned

First of all - Mesh shaders are a hardware implementation of primitive shaders.

Secondly - you are right that the above implementation of varying degrees of shader work and culling of geometry is something that the PS5 does not have.

However - culling of geometry, and which parts of that geometry get shaders applied (and to what extent), is instead handled at the geometry engine level.

I.e. PS5 has the full functionality (and according to claims - a bit more than that) of what the 'mesh shaders' are doing on Nvidia and AMD cards but the 'how' is different since it is all driven by the geometry engine instead of a hardware piece downstream of the GE.

Most people reading that PS5 does not have mesh shaders interpret that as if the PS5 does not have that function and that is incorrect. It has the full functionality but it is achieved differently.

Which hardware implementation of this functionality is better? Who knows but Cerny is good at what he is doing.

The PS5 method is inferior because it's not a fully reinvented/programmable geometry pipeline on the level of what Nvidia did with Turing/Ampere and what AMD did with RDNA2 on PC and Xbox Series X/S. Primitive Shaders is an in-between step that AMD has moved on from because it isn't better than Mesh Shaders. Primitive Shaders was first introduced in Vega, but due to a hardware problem it was never exposed for use. It was fixed for RDNA 1st gen, and then immediately abandoned/taken to a whole other level for RDNA2 with Mesh Shaders.

Microsoft waited longer to get the full feature, not the mere point revision.
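For readers wondering what "culling geometry" in a programmable geometry pipeline looks like in practice, here is a CPU-side toy in Python, not GPU shader code. It assumes the mesh has been pre-split into small clusters (often called meshlets), each with a bounding sphere, and rejects whole clusters that fall entirely outside the view volume before any of their triangles are emitted; in the mesh shader model this kind of per-cluster test is commonly done in the task/amplification stage. The `Meshlet`/`Plane` types, the box-shaped view volume and the triangle counts are all hypothetical, and nothing here describes how the PS5's geometry engine or any specific GPU actually implements culling.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Meshlet:
    center: Vec3         # bounding-sphere centre
    radius: float        # bounding-sphere radius
    triangle_count: int  # triangles this cluster would emit if kept


@dataclass
class Plane:
    normal: Vec3  # unit normal pointing into the visible region
    d: float      # plane equation: dot(normal, p) + d = 0


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def sphere_visible(m: Meshlet, planes: List[Plane]) -> bool:
    """Conservative test: reject only if the sphere lies fully outside some plane."""
    for p in planes:
        if dot(p.normal, m.center) + p.d < -m.radius:
            return False
    return True


def cull(meshlets: List[Meshlet], planes: List[Plane]) -> List[Meshlet]:
    """Keep only the clusters whose bounding sphere may intersect the view volume."""
    return [m for m in meshlets if sphere_visible(m, planes)]


if __name__ == "__main__":
    # Hypothetical axis-aligned view volume: -10 <= x, y, z <= 10.
    box = [
        Plane((1.0, 0.0, 0.0), 10.0), Plane((-1.0, 0.0, 0.0), 10.0),
        Plane((0.0, 1.0, 0.0), 10.0), Plane((0.0, -1.0, 0.0), 10.0),
        Plane((0.0, 0.0, 1.0), 10.0), Plane((0.0, 0.0, -1.0), 10.0),
    ]
    meshlets = [
        Meshlet((0.0, 0.0, 0.0), 1.0, 120),   # fully inside: kept
        Meshlet((10.5, 0.0, 0.0), 1.0, 120),  # straddles x = 10: kept
        Meshlet((50.0, 0.0, 0.0), 1.0, 120),  # far outside: culled
    ]
    visible = cull(meshlets, box)
    total = sum(m.triangle_count for m in meshlets)
    kept = sum(m.triangle_count for m in visible)
    print(f"{len(visible)}/{len(meshlets)} meshlets kept, {kept}/{total} triangles sent on")
```

The test is deliberately conservative: a cluster that only touches the edge of the view volume is kept, so culling never drops visible geometry, it only skips work that is guaranteed to be invisible.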

AgentP

Thinks mods influence posters politics. Promoted to QAnon Editor.

You do realize Series X fully supports this, right? There's already a video demonstration from Microsoft where Series X's mesh shading is running a demo at 4K that the 2080 Ti was only running at 1440p or something like that. Don't have time to look it up.

Must have been photomode, where the X excels.

SenjutsuSage

Banned

So explain to me how Vulkan and OpenGL supports Mesh Shaders too?

Mesh Shaders is a change in the shading pipeline... nVidia introduced it way before AMD or MS started talking about it.

PS5 supports that too... how Sony implemented it in their APU is what you need to ask.

It is supported on Nvidia Turing and Ampere hardware, and now RDNA2. It isn't supported on RDNA 1st gen, which only supports the same primitive shaders that are inside the PS5 via the same Geometry Engine. Series X, meanwhile, has a mesh shading geometry engine. The PlayStation 5 does not support Mesh Shaders. It has something that works in a similar way but simply doesn't go nearly as far: primitive shaders. (And no, I'm not saying PS5 is RDNA1; it's clearly a hybrid/custom design, but it's using the RDNA 1st gen geometry engine.)
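To pin down what "mesh shading" replaces at the API level: in Direct3D 12 the mesh shader pipeline swaps the input assembler and the vertex, hull, tessellator, domain and geometry stages for an optional amplification shader plus a mesh shader (Vulkan calls the amplification stage a task shader), while rasterization and pixel shading stay the same. The sketch below just encodes both front ends and diffs them; stream output and the output merger are omitted, and primitive shaders are not modeled because their stage layout is vendor-specific and not described in the thread.

```python
# Direct3D 12 graphics pipeline stages, simplified (stream output and
# output merger omitted for brevity).
LEGACY = [
    "Input Assembler",
    "Vertex Shader",
    "Hull Shader",
    "Tessellator",
    "Domain Shader",
    "Geometry Shader",
    "Rasterizer",
    "Pixel Shader",
]

MESH = [
    "Amplification Shader",  # optional in D3D12; "task shader" in Vulkan
    "Mesh Shader",
    "Rasterizer",
    "Pixel Shader",
]


def replaced_stages(old, new):
    """Stages present in the old pipeline but absent from the new one, in order."""
    new_set = set(new)
    return [s for s in old if s not in new_set]


def added_stages(old, new):
    """Stages present in the new pipeline but absent from the old one, in order."""
    old_set = set(old)
    return [s for s in new if s not in old_set]


if __name__ == "__main__":
    print("Dropped:", ", ".join(replaced_stages(LEGACY, MESH)))
    print("Added:  ", ", ".join(added_stages(LEGACY, MESH)))
```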


SenjutsuSage

Banned

Must have been photomode, where the X excels.

You'll be sorry when the Series X has the highest performing photo modes in the business.

Superayate

Member

Because it does the scene at higher fps (60 vs 30).

If you want to compare only the Mesh Shader feature, as the benchmark tries to do, you need to look at the improvement with the feature on vs. off.

BTW, why is AMD so bad at rendering that scene even with Mesh off?

It seems many people do not understand what the really interesting value in this benchmark is! As Ethomaz said, it is not the fps in the table that is interesting to read, but the increase in performance from using mesh shaders that needs to be noticed. And in this case, the results for AMD are clearly not bad. The point of this benchmark is to evaluate how much mesh shaders can increase performance...

Edited to add his message.


M1chl

Currently Gif and Meme Champion

You do realize Series X fully supports this, right? There's already a video demonstration from Microsoft where Series X's mesh shading is running a demo at 4K that the 2080 Ti was only running at 1440p or something like that. Don't have time to look it up.

Oh you are right, that one with the dragon in the room or whatever it was....

nikolino840

Member

where is our resident amd boy?

RTX > Rdna2.

But mesh shaders in SX, wow at that improvement.....it may be a bigger win than the TFLOPS difference.....

Yeah, but at high prices.

ethomaz

Banned

It seems many people do not understand what the really interesting value in this benchmark is! As Ethomaz said, it is not the fps in the table that is interesting to read, but the increase in performance from using mesh shaders that needs to be noticed. And in this case, the results for AMD are clearly not bad. The point of this benchmark is to evaluate how much mesh shaders can increase performance...

Edited to add his message.

Exactly.

People need to understand the context of the benchmark.

AMD is having a really bad render time on that scene that I can't explain... maybe it's like they said... it will get better with drivers.

But the AMD card's performance in the scene is not important for this benchmark.

What really matters is how much the cards gain with Mesh Shaders enabled.

RDNA 2 is showing better results than Turing... of course it is still behind Ampere, but the difference is not as bad as people tried to make it out to be because of the lower fps... actually the 6800 only showed lower results than the RTX 3070, 3080 and 3090.


SenjutsuSage

Banned

Exactly.

People need to understand the context of the benchmark.

AMD is having a really bad render time on that scene that I can't explain... maybe it's like they said... it will get better with drivers.

But the AMD card's performance in the scene is not important for this benchmark.

What really matters is how much the cards gain with Mesh Shaders enabled.

RDNA 2 is showing better results than Turing... of course it is still behind Ampere, but the difference is not as bad as people tried to make it out to be because of the lower fps... actually the 6800 only showed lower results than the RTX 3070, 3080 and 3090.

And the results may be skewed against Ampere and RDNA2 because this benchmark is much more geared towards the Turing architecture, so it could be even better for all we know.

Oh you are right, that one with the dragon in the room or whatever it was....

Yes