The PlayStation 5 will feature a weaker GPU than the Xbox Series X, but developers continue to praise the console. The raw specs do not paint the whole picture of the new consoles, and a recent in-depth analysis suggests that the Sony next-gen console's GPU will have a better system supporting it, resulting in better overall performance.

On his blog, James Prendergast has been providing a very interesting ongoing analysis of the next-generation consoles, based on what has been revealed so far. In his latest post, he took a look at how RAM, I/O and SSD speed and function will make a difference, considering the CPU difference between the two consoles is minimal.

Taking a look at the RAM configuration of both consoles, the analysis highlights how the Xbox Series X configuration is sub-optimal, as the asymmetric configuration used for the console can lead to reduced bandwidth once the symmetrical portion is full. The PlayStation 5 configuration, on the other hand, allows a static bandwidth for the entire 16 GB of GDDR6.

Taking a look at the I/O and SSD access, the analysis highlights how the Xbox Series X simply has a slower interface than the PlayStation 5's. The solution used by the Sony console allows for better data management within the RAM as well, allowing for less frequent reloading of data into the RAM, improving system efficiency.

Putting everything together, including how the PlayStation 5 audio hardware will take less CPU power compared to the Xbox Series X, the analysis reveals that the PlayStation 5 has the "bandwidth and I/O silicon in place to optimise the data transfer between all the various computing and storage elements". The Xbox Series X, on the other hand, has some sub-optimal implementations that are going to perform below the specs of the PlayStation 5, despite the inclusion of smart prediction engines.

On a related note, a video released recently by Coreteks, who claims to have insider knowledge on both consoles, reaches the same conclusions. It is a very long video, but it's a very interesting watch nonetheless.

According to a recent in-depth analysis, the PlayStation 5 may have the weaker GPU of the next-gen consoles, but it will receive better support from the rest of the system

wccftech.com

-------------------------------------------------------------------------

We've been hearing for months that there's not much between the two devices from Microsoft and SONY, with "sources" on both sides of the argument claiming that each console has an advantage in specific scenarios. Incontrovertibly, Microsoft has the more performant graphics capabilities with 1.4x the physical makeup of the PlayStation 5's GPU core. That's only part of the story though, with the PS5's GPU clocked a minimum of 1.2x faster than the Series X's across the majority of workload scenarios. That narrows the advantage of the SX (in terms of pure, averaged, GPU compute power) to around 1.18x-1.2x that of the PS5.
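The ratios in that paragraph can be roughly reproduced from the publicly announced GPU figures. Those figures (compute unit counts and clock speeds) are not quoted in this post, so treat them as assumptions brought in for illustration; the PS5 clock in particular is an "up to" value. A minimal sketch:

```python
# Publicly announced GPU figures (assumptions; not quoted in the post itself).
SX_COMPUTE_UNITS, SX_CLOCK_GHZ = 52, 1.825
PS5_COMPUTE_UNITS, PS5_CLOCK_GHZ = 36, 2.23  # variable clock, "up to" figure

# How much "wider" the SX GPU is, and how much faster the PS5 GPU is clocked.
width_ratio = SX_COMPUTE_UNITS / PS5_COMPUTE_UNITS
clock_ratio = PS5_CLOCK_GHZ / SX_CLOCK_GHZ

# Net compute advantage of the SX once the clock difference is accounted for.
net_sx_advantage = width_ratio / clock_ratio

print(f"SX width advantage:  {width_ratio:.2f}x")
print(f"PS5 clock advantage: {clock_ratio:.2f}x")
print(f"Net SX advantage:    {net_sx_advantage:.2f}x")
```

If the PS5 runs below its peak clock, the net SX advantage grows, which is presumably why the range is given as 1.18x-1.2x.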

But what about the CPU? Performing the same, simple ratio calculation, you can work out that the SX is 1.02x - 1.10x more powerful than the PS5's, depending on the scenario. Not that big a difference, really... and the CPU/GPU should sport pretty much the same feature set on both consoles.

However, everyone and their dog are talking about the GPUs and have been for a long time: It's not all that interesting to me at this point in time until more of their underlying architectures are revealed. Those three to four* things that are more interesting to me are:

- RAM

- I/O

- SSD speed and function

- Audio hardware

Unfortunately, we don't have the full information on the SX's audio hardware implementation, meaning we can't yet do a proper comparison between the two consoles for that. So let's begin with the RAM configuration.

RAM

Let me put this bluntly - the memory configuration on the Series X is sub-optimal.

I understand there are rumours that the SX had 24 GB or 20 GB at some point early in its design process but the credible leaks have always pointed to 16 GB which means that, if this was the case, it was very early on in the development of the console. So what are we (and developers) stuck with? 16 GB of GDDR6 @ 14 GHz connected to a 320-bit bus (that's 5 x 64-bit memory controllers).

Microsoft is touting the 10 GB @ 560 GB/s and 6 GB @ 336 GB/s asymmetric configuration as a bonus but it's sort-of not. We've had this specific situation at least once before in the form of the NVidia GTX 650 Ti and a similar situation in the form of the 660 Ti. Both of those cards suffered from an asymmetrical configuration, affecting memory once the "symmetrical" portion of the interface was "full".

Interleaved memory configurations for the SX's asymmetric memory configuration, an averaged value and the PS5's symmetric memory configuration... You can see that, overall, the PS5 has the edge in pure, consistent throughput...

Now, you may be asking what I mean by "full". Well, it comes down to two things: first is that, unlike some commentators might believe, the maximum bandwidth of the interface is limited to the 320-bit controllers and the matching 10 chips x 32 bit/pin x 14 GHz/Gbps interface of the GDDR6 memory.

That means that the maximum theoretical bandwidth is 560 GB/s, not 896 GB/s (560 + 336). Secondly, memory has to be interleaved in order to function on a given clock timing to improve the parallelism of the configuration. Interleaving is why you don't get a single 16 GB RAM chip; instead we get multiple 1 GB or 2 GB chips because it's vastly more efficient. HBM is a different story because the dies are parallel with multiple channels per pin and multiple frequencies are possible to be run across each chip in a stack, unlike DDR/GDDR which has to have all chips run at the same frequency.
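The headline figures follow from bus width multiplied by the per-pin data rate. A minimal sketch of that arithmetic, assuming 32 data pins per chip and 14 Gbps per pin as stated above (the function name is made up for illustration):

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float = 14.0) -> float:
    """Theoretical peak bandwidth in GB/s: bits per transfer x Gbps per pin / 8."""
    return bus_width_bits * data_rate_gbps / 8

BITS_PER_CHIP = 32

full_bus = peak_bandwidth_gb_s(10 * BITS_PER_CHIP)   # all 10 chips, 320-bit
six_chips = peak_bandwidth_gb_s(6 * BITS_PER_CHIP)   # the six 2 GB chips, 192-bit

print(full_bus)   # 560.0
print(six_chips)  # 336.0
```

Both figures describe the same physical pins, which is why they cannot be added together: the 336 GB/s path is a subset of the 560 GB/s one.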

However, what this means is that you need to have address space symmetry in order to have interleaving of the RAM, i.e. you need to have all your chips presenting the same "capacity" of memory in order for it to work. Looking at the diagram above, you can see that in the SX's configuration the first 1 GB of each RAM chip is interleaved across the entire 320-bit memory interface, giving rise to 10 GB operating with a bandwidth of 560 GB/s. But what about the other 6 GB of RAM?

Those two banks of three chips either side of the processor house 2 GB per chip. How does that extra 1 GB get accessed? It can't be accessed at the same time as the first 1 GB because the memory interface is saturated. What happens, instead, is that the memory controller must "switch" to the interleaved addressable space covered by those 6x 1 GB portions. This means that, for the 6 GB "slower" memory (in reality, it's not slower but less wide) the memory interface must address that on a separate clock cycle if it wants to be accessed at the full width of the available bus.

The fallout of this can be quite complicated depending on how Microsoft have worked out their memory bus architecture. It could be a complete "switch" whereby on one clock cycle the memory interface uses the interleaved 10 GB portion and on the following clock cycle it accesses the 6 GB portion. This implementation would have the effect of averaging the effective bandwidth for all the memory. If you average this access, you get 392 GB/s for the 10 GB portion and 168 GB/s for the 6 GB portion for a given time frame but individual cycles would be counted at their full bandwidth.

However, there is another scenario with memory being assigned to each portion based on availability. In this configuration, the memory bandwidth (and access) is dependent on how much RAM is in use. Below 10 GB, the RAM will always operate at 560 GB/s. Above 10 GB utilisation, the memory interface must start switching or splitting the access to the memory portions. I don't know if it's technically possible to actually access two different interleaved portions of memory simultaneously by using the two 16-bit channels of the GDDR6 chip but if it were (and the standard appears to allow for it), you'd end up with the same memory bandwidths as the "averaged" scenario mentioned above.

If Microsoft were able to simultaneously access and decouple individual chips from the interleaved portions of memory through their memory controller then you could theoretically push the access to an asymmetric balance, being able to switch between a pure 560 GB/s for 10 GB RAM and a mixed 224 GB/s from 4 GB of that same portion and the full 336 GB/s of the 6 GB portion (also pictured above). This seems unlikely to my understanding of how things work and undesirable from a technical standpoint in terms of game memory access and also architecture design.
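One way to arrive at the split figures quoted in these scenarios is to count how many bits of the bus serve each portion of memory. This is only one reading of the text, not a description of Microsoft's actual memory controller; the bit allocations below are assumptions chosen to reproduce the quoted numbers:

```python
PIN_RATE_GBPS = 14.0
CHIP_BITS = 32       # full interface width of one GDDR6 chip
CHANNEL_BITS = 16    # one of the two channels on a chip

def bandwidth_gb_s(bits: int) -> float:
    """Peak bandwidth for a given number of bus bits at 14 Gbps per pin."""
    return bits * PIN_RATE_GBPS / 8

# Channel-split reading: each of the six 2 GB chips gives one 16-bit channel
# to the 6 GB portion and keeps the other for the 10 GB portion.
split_six_gb = bandwidth_gb_s(6 * CHANNEL_BITS)                   # 96 bits
split_ten_gb = bandwidth_gb_s(4 * CHIP_BITS + 6 * CHANNEL_BITS)   # 224 bits

# Decoupled-chip reading: the four 1 GB chips serve the 10 GB portion
# while the six 2 GB chips serve the 6 GB portion at full width.
decoupled_ten_gb = bandwidth_gb_s(4 * CHIP_BITS)   # 128 bits
decoupled_six_gb = bandwidth_gb_s(6 * CHIP_BITS)   # 192 bits

print(split_ten_gb, split_six_gb)          # 392.0 168.0
print(decoupled_ten_gb, decoupled_six_gb)  # 224.0 336.0
```

In both readings the two portions together never exceed the 560 GB/s of the full 320-bit bus.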

In comparison, the PS5 has a static 448 GB/s bandwidth for the entire 16 GB of GDDR6 (also operating at 14 GHz, across a 256-bit interface). Yes, the SX has 2.5 GB reserved for system functions and we don't know how much the PS5 reserves for that similar functionality but it doesn't matter - the Xbox SX either has only 7.5 GB of interleaved memory operating at 560 GB/s for game utilisation before it has to start "lowering" the effective bandwidth of the memory below that of the PS5... or the SX has an averaged mixed memory bandwidth that is always below that of the baseline PS5. Either option puts the SX at a disadvantage to the PS5 for more memory intensive games and the latter puts it at a disadvantage all of the time.

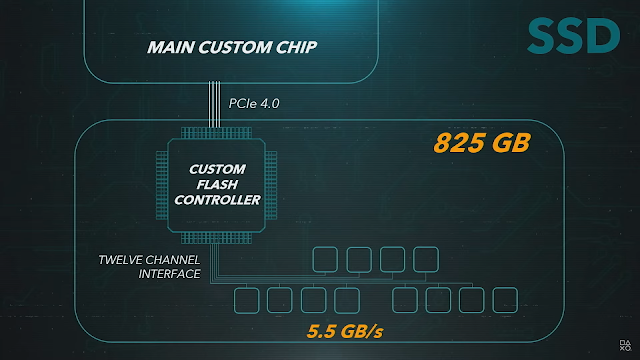

| The Xbox's custom SSD hasn't been entirely clarified yet but the majority of devices on the market for PCIe 4.0 operate on an 8 channel interface... |

I/O and Storage

Moving onto the I/O and SSD access, we're faced with a similar scenario - though Microsoft have done nothing sub-optimal here, they just have a slower interface.

14 GHz GDDR6 RAM operates at around 1.75 GB/s per pin, per chip (14 Gbps [32 data pins per chip x 10 chips gives total potential bandwidth of 560 GB/s - matching the 320-bit interface]). Originally, I was concerned that would be too close to the total bandwidth of the SSD but Microsoft have upgraded to a 2.4/4.8 GB/s read interface with their SSD which is, in theory, enough to utilise the equivalent of 1.7% of 5 GDDR6 chips uploading the decompressed data in parallel each second, leaving a lot of overhead for further operations on those chips and the remaining 6 chips free for completely separate operations. (4.8 GB/5 (1 GB) chips /1.75x32 GB/s)

In comparison, SONY can utilise the equivalent of 3.2% of the bandwidth of 6 GDDR6 chips, in parallel, per second (9 GB/5 (2 GB) chips /(1.75x32 GB/s)) due to the combination of a unified interleaved address space and unified larger RAM capacity (i.e. all the chips are 2 GB in capacity so, unlike the SX, the interface does not need to use more chips [or portion of their total bandwidth] to store the same amount of data).

Turning this around to the unified pool of memory, the SX can utilise 0.86% of the total pin bandwidth whereas the PS5 can use 2.01% of the total pin bandwidth. All of this puts the SX at just under half the theoretical performance (ratio of 0.42) of the PS5 for moving things from the system storage.
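Those percentages are simply the decompressed SSD throughput divided by the peak memory bandwidth of each console. A minimal sketch using the figures quoted in this post (the function name is invented for illustration):

```python
def share_of_memory_bandwidth(ssd_gb_s: float, memory_gb_s: float) -> float:
    """Fraction of peak memory bandwidth consumed by incoming SSD data."""
    return ssd_gb_s / memory_gb_s

sx_share = share_of_memory_bandwidth(4.8, 560.0)   # SX: 4.8 GB/s into 560 GB/s
ps5_share = share_of_memory_bandwidth(9.0, 448.0)  # PS5: 9 GB/s into 448 GB/s

print(f"SX:  {sx_share:.2%}")
print(f"PS5: {ps5_share:.2%}")
print(f"SX relative to PS5: {sx_share / ps5_share:.3f}")
```

The last figure comes out at roughly 0.427, i.e. the 0.42 quoted above when truncated.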

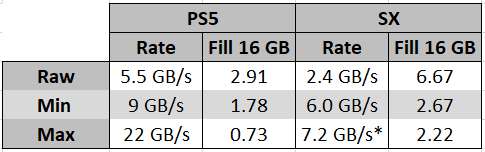

Unfortunately, we don't know the random read IOPS for either console, a number which would more accurately reflect the real world performance of the drives. Going on the above figures, though, the SX can fill the RAM with raw data (2.4 GB/s) in 6.67 seconds whereas the PS5 can fill the RAM (5.5 GB/s) in 2.9 seconds, again, 2.3x the rate of the SX (this is just literally the inverse ratio of the above comparison with the decompressed data).
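The fill times are just capacity divided by raw sequential throughput. A quick sketch, assuming the whole 16 GB is filled at the quoted raw rates with no overhead:

```python
RAM_CAPACITY_GB = 16.0

def fill_time_s(raw_throughput_gb_s: float, capacity_gb: float = RAM_CAPACITY_GB) -> float:
    """Seconds needed to fill the RAM at a constant raw read rate."""
    return capacity_gb / raw_throughput_gb_s

sx_fill = fill_time_s(2.4)
ps5_fill = fill_time_s(5.5)

print(f"SX:  {sx_fill:.2f} s")
print(f"PS5: {ps5_fill:.2f} s")
print(f"PS5 is {sx_fill / ps5_fill:.1f}x faster")
```

Real drives will not sustain their peak rate on random reads, so these are best-case numbers.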

However, that's not the entire story. We also have to look at the custom I/O solutions and other technology that both console makers have placed on-die in order to overcome many potential bottlenecks and limitations:

The decompression capabilities and I/O management of both consoles are very impressive, but again, SONY edges out Microsoft with the equivalent of 10-11 Zen 2 CPU cores to 5 cores in pure decompression power. This optimisation on SONY's part really lifts all of the pressure off of the CPU, allowing it to be almost entirely focussed on the game programme and OS functions. That means that the PS5 can move up to 5.5 GB/s compressed data from the SSD and the decompression chip can decompress up to 22 GB/s from that 5.5 GB compressed data, depending on the compressibility of that underlying raw data (with 9 GB as a lower bound figure).

Data fill rates for the entire memory configuration of each console; the PS5 unsurprisingly outperforms the SX... *I used the "bonus" 20% figure for the SX's BCPack compression algorithm.

Meanwhile, the SX can move up to 4.8 GB/s of compressed data from the SSD and the decompression chip can decompress up to 6 GB/s of compressed data. However, Microsoft also have a specific decompression algorithm for texture data* called BCPack (an evolution of BCn formats) which can potentially add another 20% compression on top of that achieved by the PS5's Kraken algorithm (which this engineer estimates at a 20-30% compression factor). However, that's not an apples-to-apples comparison because this is on uncompressed data, whereas the PS5 should be using a form of RDO which the same specialist reckons will bridge the gap in compression of texture data when combined with Kraken. So, in the name of fairness and lack of information, I'm going to leave only the confirmed stats from the hardware manufacturers and not speculate about further potential compression advantages.

While the SFS won't help with data loading from the SSD, it will help with data management within the RAM, potentially allowing for less frequent re-loading of data into RAM - which will improve the efficiency of the system, overall - something which is impossible to even measure at this point in time - especially because the PS5 will also have systems in place to manage data more intelligently.

This capability, combined with the consistent access to the entirety of the system memory, enables the PS5 to have more detailed level design in the form of geometry, models and meshes.

It's been said by Alexander Battaglia that this increased speed won't lead to more detailed open worlds because most open worlds are based on variation achieved through procedural methods. However, in my opinion, this isn't entirely true or accurate.

The majority of open world games utilise procedural content on top of static geometry and meshes. Think of Assassin's Creed Odyssey/Origins, Batman Arkham City/Origins/Knight, Red Dead Redemption 2, GTA 5 or Subnautica. All of them open worlds, all of their "variations" are small aspects drawn from a standard pre-made piece of art - whether that's just a palette swap or model compositing. The only open world game that is heavily procedurally generated that I can think of is No Man's Sky. Even games such as Factorio or Satisfactory do not go the route of No Man's Sky...

In the majority of games, procedural generation is still a small minority of the content generation. Texture and geometry draws are the vast majority of data required from the disk. Even in games such as No Man's Sky, there are meshes that are composited or even just entirely drawn from disk.

The Series X's SSD actually looks like it can be replaced... although you'd have to disassemble the entire console to be able to do so...

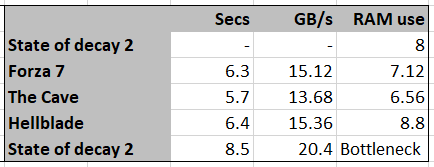

Looking at the performance of the two consoles on last-gen games, you'll see that it takes 830 milliseconds on PS5 compared to 8,100 milliseconds on PS4 Pro for Spiderman to load whereas it takes State of Decay 2 an average of 9775 milliseconds to load on the SX compared to 45,250 milliseconds on One X. (Videos here) That's an improvement of 9.76x on the PS5 and 4.62x on the SX... and that's for last gen games which don't even fill up as much RAM as I would expect for next generation titles.
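The improvement factors are the old load time divided by the new one. A small sketch using the millisecond figures quoted above (the dictionary layout is just for illustration):

```python
# (previous-generation load time, next-generation load time) in milliseconds
load_times_ms = {
    "Spiderman (PS4 Pro -> PS5)": (8_100, 830),
    "State of Decay 2 (One X -> SX)": (45_250, 9_775),
}

speedups = {
    title: old_ms / new_ms
    for title, (old_ms, new_ms) in load_times_ms.items()
}

for title, factor in speedups.items():
    print(f"{title}: {factor:.2f}x faster")
```

The State of Decay 2 figure works out at about 4.63x when rounded (4.62x if truncated).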

Here I attempted to estimate the RAM usage of each game based on the time it took to swap out RAM contents and thus game session. We can see that State of Decay 2 has some overhead issues - perhaps it's not entirely optimised for this scenario... this is a simple model and not accurate to actual system RAM contents since I'm just dividing by 2 but it gives us a look at potential bottlenecks in the I/O system of the SX.

Now, this really isn't a fair test and isn't necessarily a "true" indication of either console's performance but these are the examples that both companies are putting out there for us to consume and understand. Why is it perhaps not a true indication of their performance? Well, combining the numbers above for the SSD performance you would get either (2.4 GB/s) x 9.78 secs = 23.4 GB of raw data or (4.8 GB/s) x 9.78 secs = 46.9 GB of compressed data... which are both impossible. State of Decay 2 does not (and cannot) ship that much data into memory for the game to load. Not to mention that swapping games on the SX takes approximately the same amount of time... Therefore, it's only logical to assume there are some inherent load buffers in the game that delay or prolong the loading times which do not port over well to the next generation.

In comparison, the Spiderman demo is either (5.5 GB/s) x 0.83 secs = 4.6 GB or (9 GB/s) x 0.83 secs = 7.47 GB, both of which are plausible. However, since I don't know the real memory footprint of Spiderman I don't know which number is accurate.
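The plausibility check in the last two paragraphs multiplies throughput by load time and compares the result with the 16 GB of RAM in each console. A minimal sketch of that check, assuming the drive runs at its quoted rate for the entire load:

```python
RAM_GB = 16.0

def implied_data_gb(throughput_gb_s: float, load_time_s: float) -> float:
    """Data volume that would be moved if the drive ran flat out for the whole load."""
    return throughput_gb_s * load_time_s

cases = {
    "State of Decay 2, SX raw (2.4 GB/s)": implied_data_gb(2.4, 9.78),
    "State of Decay 2, SX compressed (4.8 GB/s)": implied_data_gb(4.8, 9.78),
    "Spiderman, PS5 raw (5.5 GB/s)": implied_data_gb(5.5, 0.83),
    "Spiderman, PS5 decompressed (9 GB/s)": implied_data_gb(9.0, 0.83),
}

for label, gigabytes in cases.items():
    verdict = "exceeds" if gigabytes > RAM_GB else "fits within"
    print(f"{label}: {gigabytes:.2f} GB, {verdict} {RAM_GB:.0f} GB of RAM")
```

Both State of Decay 2 figures exceed the total RAM, which is what points to load-time overheads in the game rather than to the drive being the bottleneck.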

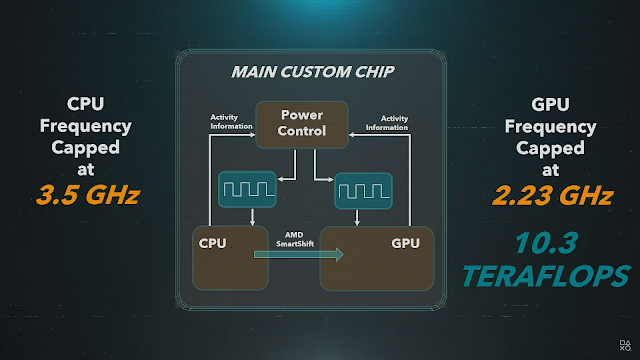

| This is a really interesting implementation of using a power envelope to determine the activity across the die... |

Audio Hardware

In my opinion, the "pixel" is well and truly dead. The majority of PC players in the world play at the 1080p resolution. The majority of TVs in peoples' houses are 720-1080p. 4K is a vast minority - yes, of course it's gaining ground as people replace their screens but the point is that most people are happy with their current setup and don't see the added bonus of upgrading the resolution or size of the screen setup unnecessarily.

Unfortunately, Microsoft have pushed their audio features much less than SONY have - I presume because it was not a huge focus of the console; instead they decided to focus on raytracing, graphics throughput, variable refresh rate, auto low latency mode and HDR. If you're not going to use the added rasterisation power through targeting a higher resolution, instead opting for optimisations that allow you to render at lower resolutions and scale up, why bother modelling the console around that processing power in the first place?

In comparison, SONY hasn't even name-checked HDR output like Microsoft have with 3D audio.

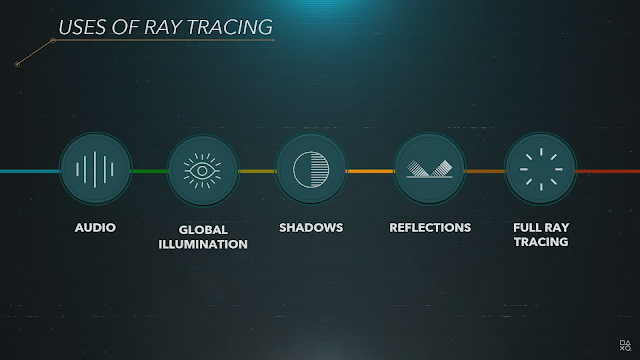

What we do know about the SX's audio solution is that it is a custom audio hardware block which will output compatible signals in Dolby Atmos, DTS:X and Windows Sonic codecs. This hardware will handle wave propagation and diffraction but Microsoft have not officially (as far as I can find) linked this with the ray tracing engine on the GPU.

SONY, on the other hand, have gone all-in on their audio implementation. I had speculated previously that the audio solution might be based on AMD's TrueAudioNext and their GPU CU cores. Thinking that, I had presumed that the console designers would provide a subset of their total CU count on the GPU for this function. Instead, SONY have actually modified the CU units from AMD's design to make them more like the SPUs in the PS3's Cell architecture (no SRAM cache, direct data access from the DMA controller through the CPU/GPU and back out again to the system memory). We don't know how many altered CUs are present in this Tempest engine but SONY have said that the SIMD computational power is equivalent to the entire 8 core Jaguar CPU that was in the PS4.

Essentially, SONY decided to reduce the amount of fully fledged CUs available to the GPU in order to provide this audio solution. This also means that the PS5's sound processing will take less CPU power from the system compared to the SX - which, again, counts against the SX in terms of resources available to run games.

I guess that I'll have more on this as the features are fully revealed.

SONY's implementation of RT is able to be spread across many different systems...

Conclusion

The numbers are clear - the PS5 has the bandwidth and I/O silicon in place to optimise the data transfer between all the various computing and storage elements whereas the SX has some sub-optimal implementations combined with really smart prediction engines but these, according to what has been announced by Microsoft, perform below the specs of the PS5. Sure, the GPU might be much larger in the SX but the system itself can't supply as much data for that computation to take place.

Yes, the PS5 has a narrower GPU but the system supporting that GPU is much stronger and more in-line with what the GPU is expecting to be handed to it.

Added to this, the audio solution in the PS5 also alleviates processing overhead from the CPU, allowing it to focus on the game executable. I'm sure the SX has ways of offloading audio processing to its own custom hardware but I seriously doubt that it has a) the same latency as this solution, b) equal capabilities or c) the ability to be altered through code updates afterwards.

In contrast, the SX has the bigger and wider GPU but, given all the technical solutions that are being implemented to render games at lower than the final output resolution and have them look as good, does pushing more pixels really matter?

So, the Xbox Series X is mostly unveiled at this point, with only some questions regarding audio implementation and underlying graphics...

hole-in-my-head.blogspot.com
