Fucking hell this was a bunch of work....

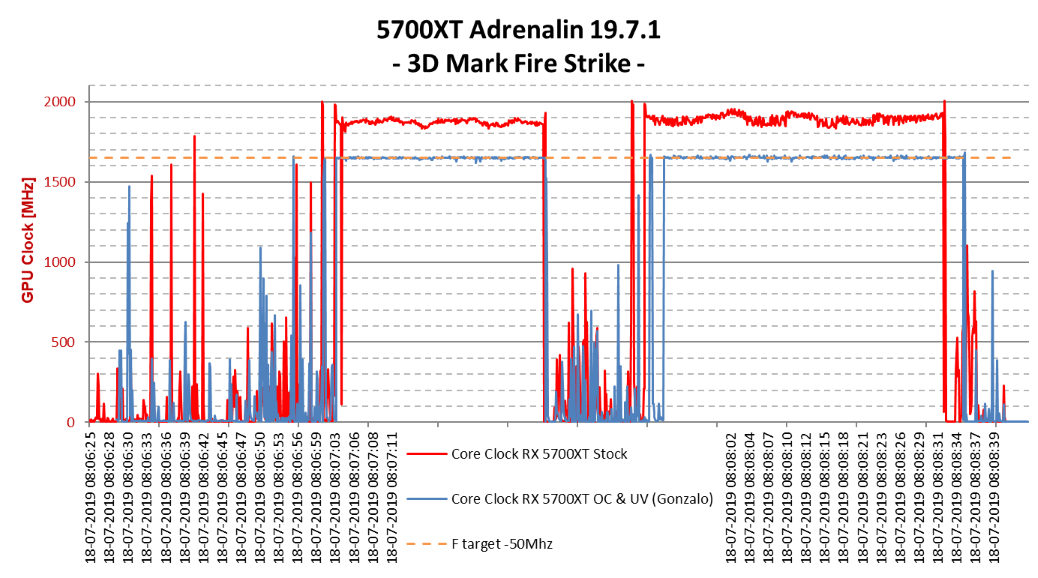

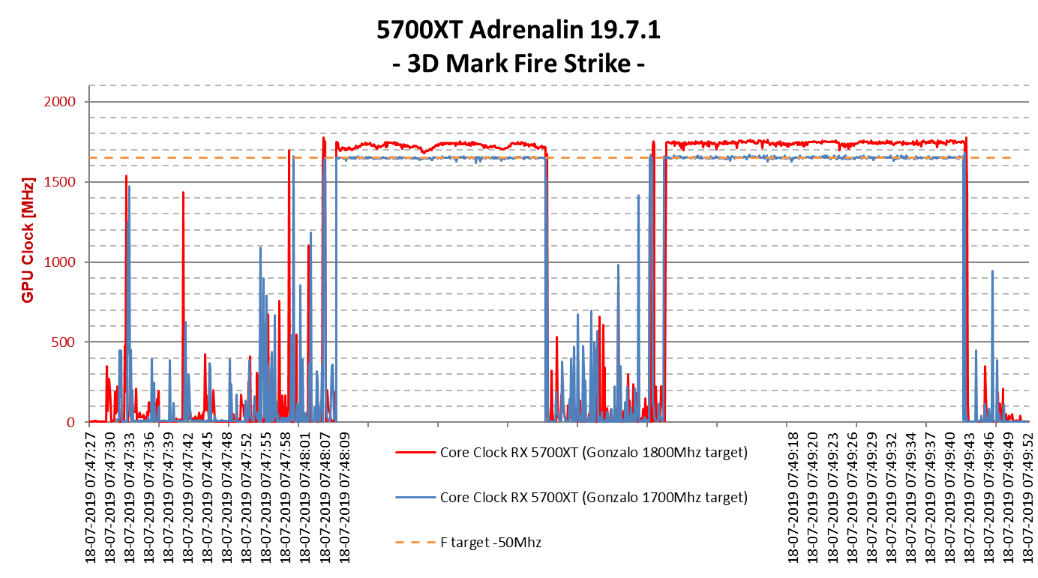

So here are the first results for the power scaling (or rather frequency/voltage scaling) profile of the 5700XT. I tried to undervolt every data point, but I didn't go through the hassle of finding the lowest stable undervolt for each one.

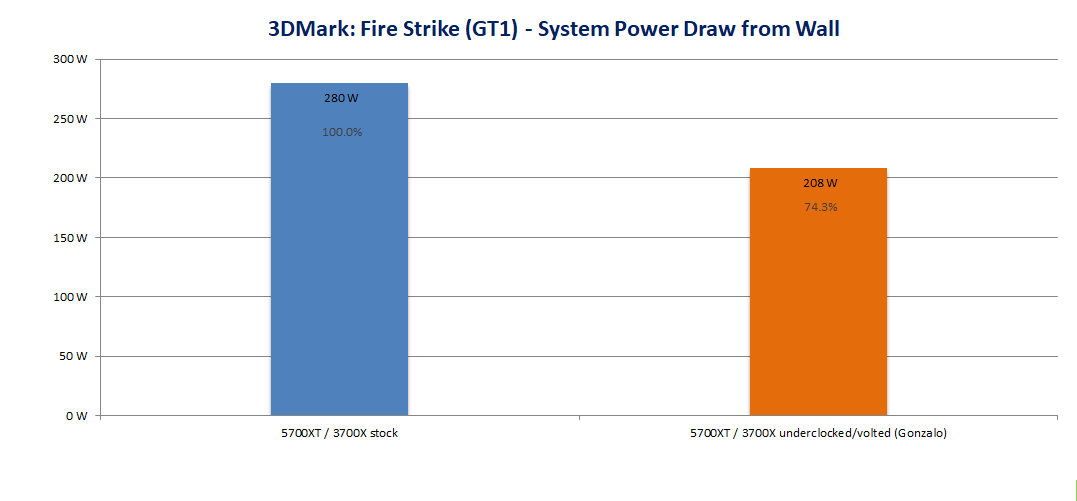

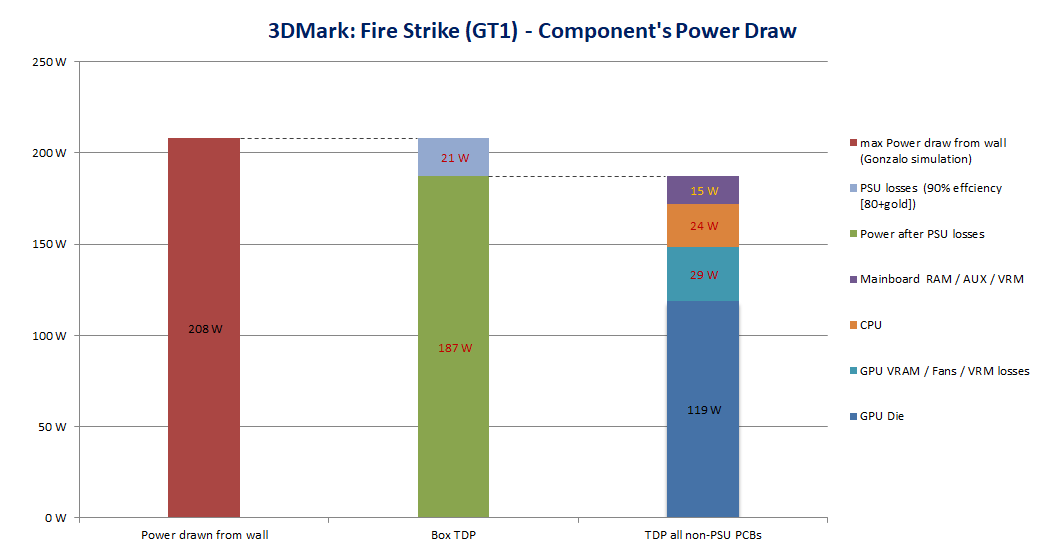

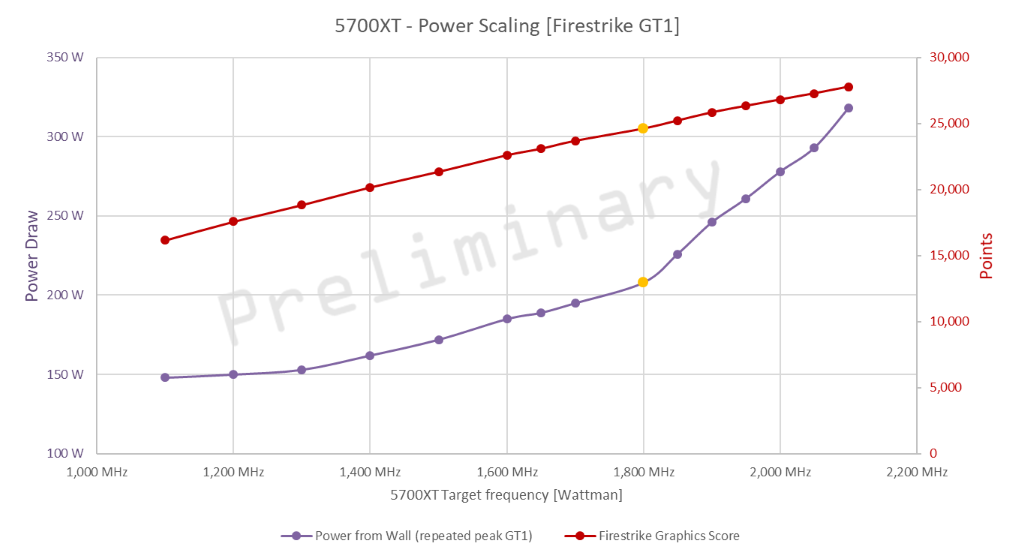

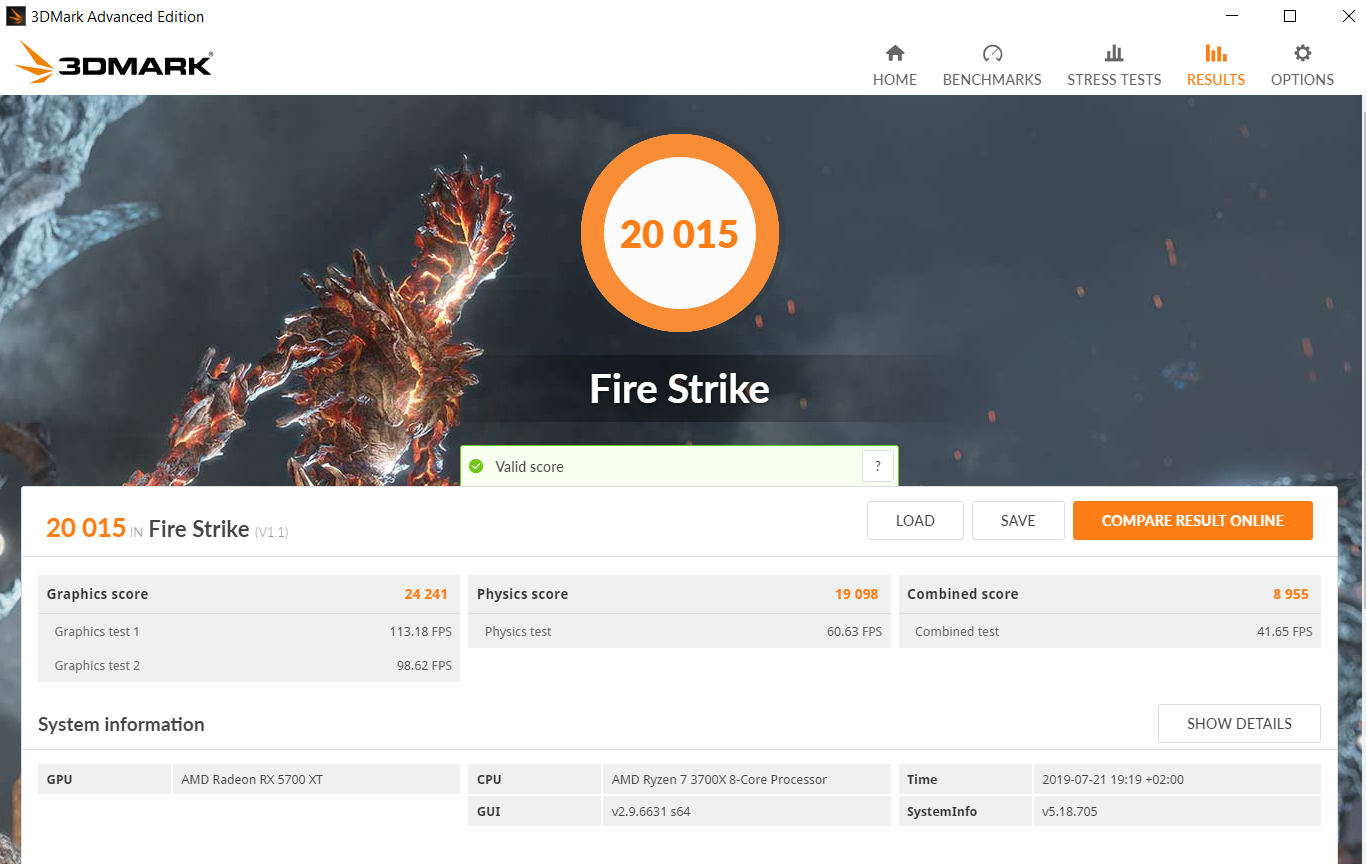

So here's system power drawn from the wall plotted over the target frequency set in Wattman, in relation to graphics score scaling in Fire Strike (everything was done with the 3700X @ 3200 MHz & 1000 mV):

As you can see, power draw looks exponential and really starts firing up above a 1.8 GHz target frequency, all while the Fire Strike score rises not even quite linearly. The yellow dot is the Gonzalo simulation.
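A rough sanity check on why the curve bends upward like that. This is the textbook rule of thumb for CMOS dynamic power, not something measured here, and the symbols ($\alpha$ for activity factor, $C$ for switched capacitance, $V$ for core voltage, $f$ for clock) are just labels chosen for this sketch:

```latex
P_{\text{dyn}} \approx \alpha \, C \, V^{2} f
\qquad\Longrightarrow\qquad
P_{\text{dyn}} \propto f^{3} \quad \text{if } V \text{ has to rise roughly in step with } f
```

So under that assumption the growth is closer to a steep polynomial than a true exponential, though on a plot the two are hard to tell apart. Wall power also includes the rest of the system, leakage and PSU losses, so the measured curve won't follow this exactly.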

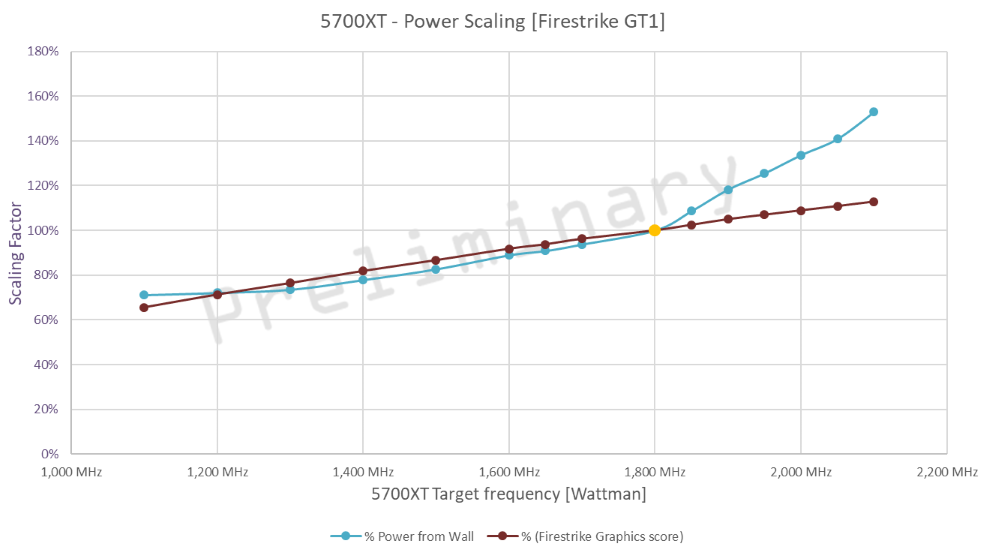

Another way to show this is to compare the scaling factors of Fire Strike and wall power with the Gonzalo simulation as the base (100%):

Like I said, there is still some room left for further undervolting, but the result shouldn't look too different from this.
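For anyone who wants to redo the scaling-factor comparison with their own numbers, here is a minimal sketch of the normalisation, assuming each data point is just a (target MHz, graphics score, wall watts) record. The function name `scaling_factors` and all the numbers below are made-up placeholders, not the measurements from the charts:

```python
def scaling_factors(points, base):
    """Express score and wall power of each point as a percentage of the base point."""
    return [
        {
            "mhz": p["mhz"],
            "score_pct": 100.0 * p["score"] / base["score"],
            "power_pct": 100.0 * p["watts"] / base["watts"],
        }
        for p in points
    ]


# PLACEHOLDER values only, chosen to make the arithmetic easy to follow.
# Replace them with real readings; "base" would be the Gonzalo simulation point.
base = {"mhz": 1800, "score": 20000, "watts": 200}
points = [
    {"mhz": 1500, "score": 18000, "watts": 150},
    {"mhz": 2000, "score": 22000, "watts": 300},
]

for row in scaling_factors(points, base):
    print(f'{row["mhz"]} MHz: score {row["score_pct"]:.1f}%, wall power {row["power_pct"]:.1f}%')
```

Plotting `score_pct` and `power_pct` against the target frequency gives the same kind of chart as above, with the base point sitting at 100% on both curves.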

to

, PC to Sony.