DemonCleaner

Member

This thread is work in progress

First things first:

So what's Gonzalo?

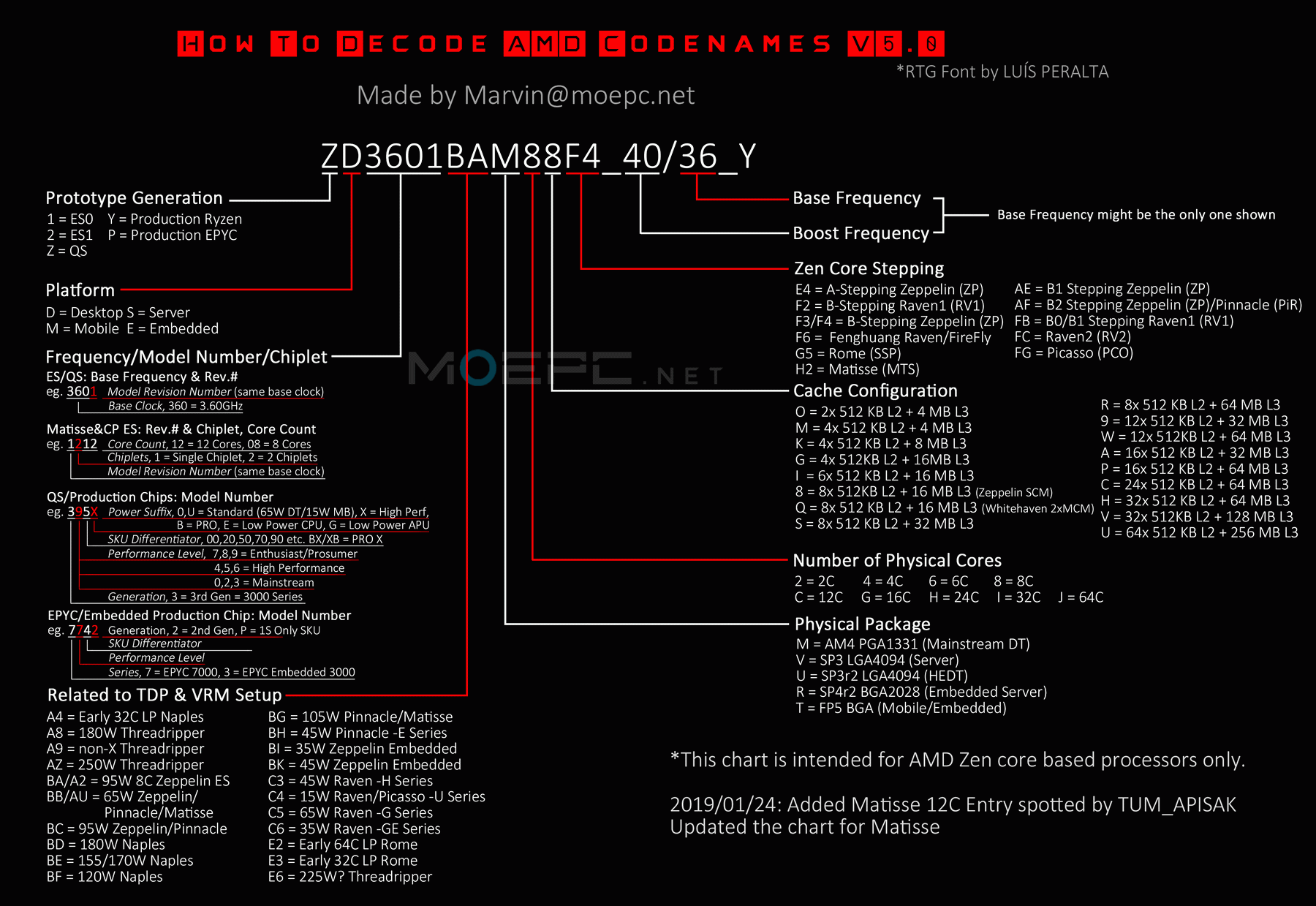

"Gonzalo" was a leak from the 3DMark database earlier this year that hinted at a semi-custom (non-PC) APU. Its internal AMD product code tells us that it uses a CPU boost clock of 3.2GHz and a GPU clock of 1.8GHz.

You can read a summary of all that here:

https://www.eurogamer.net/articles/digitalfoundry-2019-is-amd-gonzalo-the-ps5-processor-in-theory

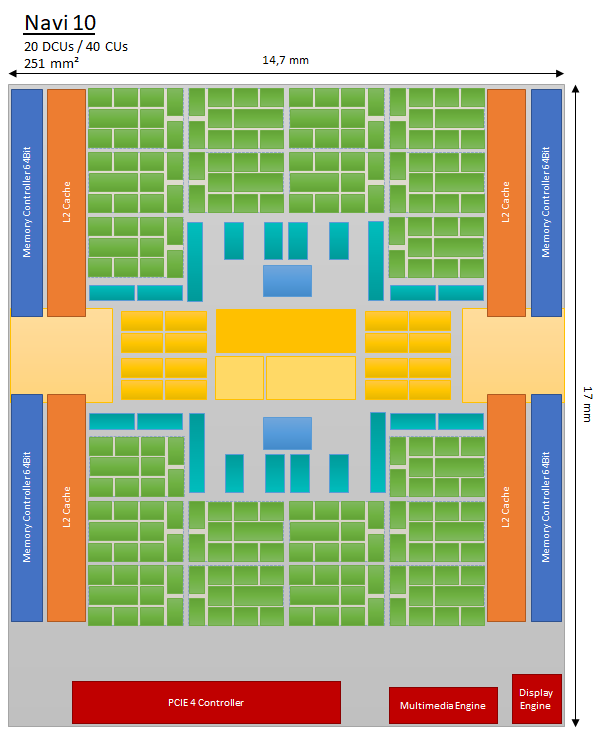

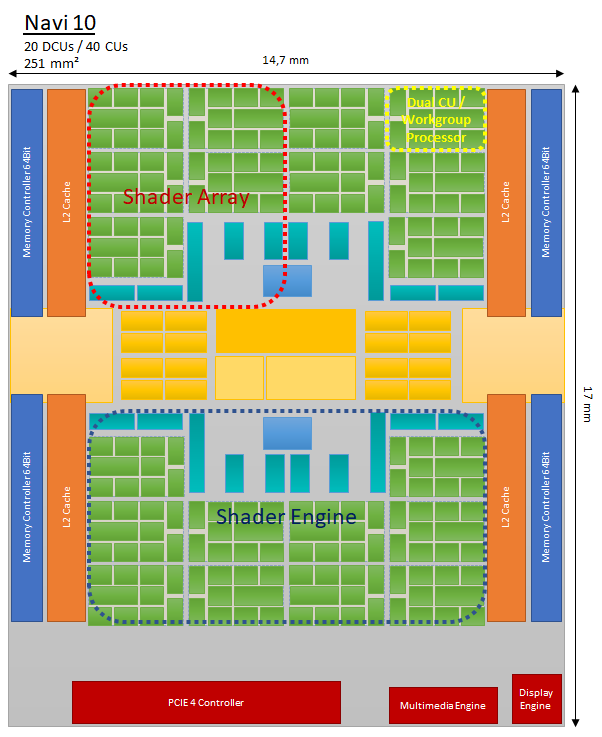

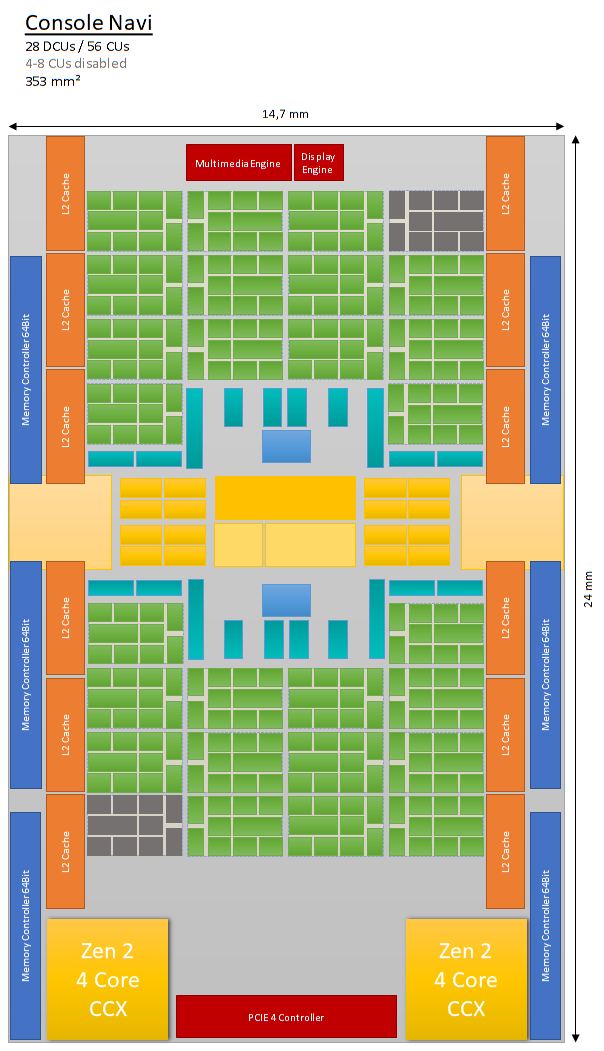

So the string "13E9" at the end of the code refers to a driver entry for Navi 10 Lite. Navi 10 is also the codename of the GPU in AMD's recent RX 5700 series of desktop cards, which use up to 40 compute units.

Confronted with these numbers, it's fair to ask: would the power requirements of such a chip even fit in a console-sized box with its thermal and power constraints?

Simulating Gonzalo:

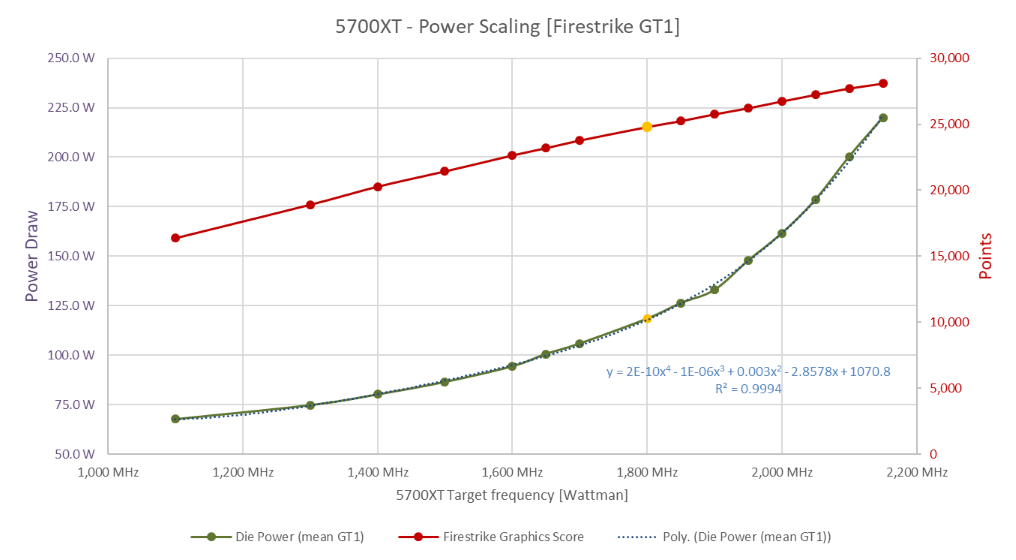

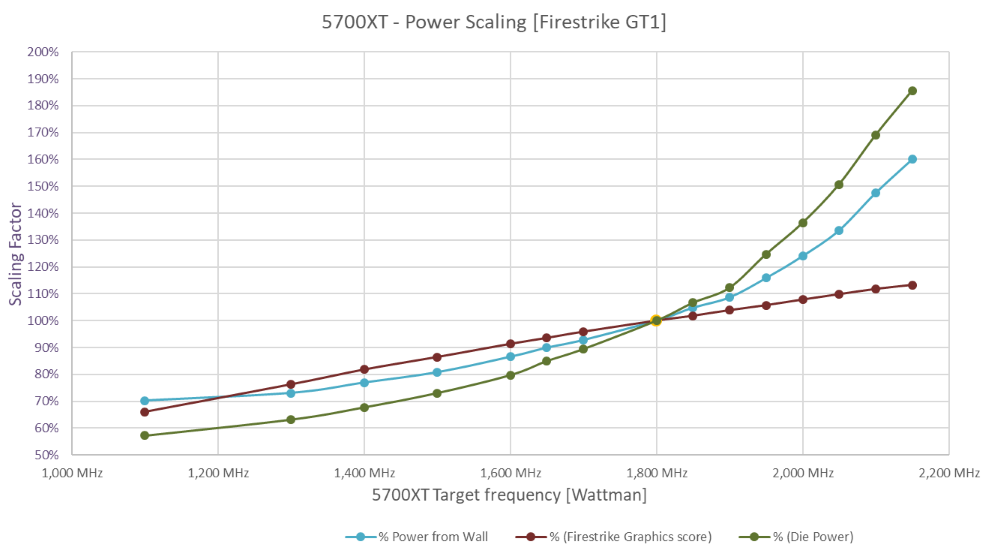

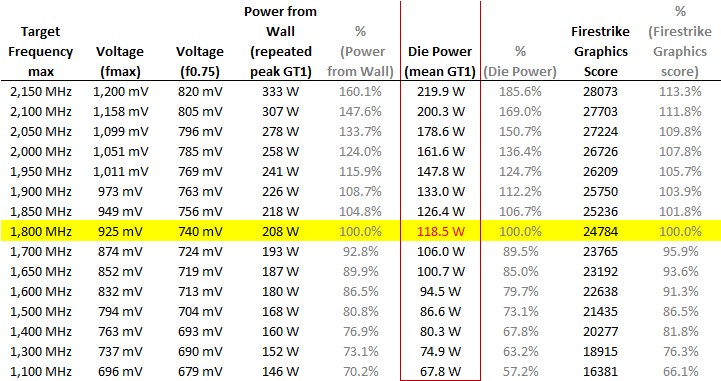

So here we have the spec sheet of the 5700 XT vs. the data we can derive from the leaks:

For the CPU part of the APU we expect an 8-core variant. On 7nm, the nearest AMD processors would be the 3700X or 3800X. I snapped up the former.

As for the testing conditions: I put the 5700 XT and 3700X with 16GB DDR4 on a B350 motherboard in a well-ventilated case. As you can see from the spec sheet, the first problem we face is that the GPU alone is capable of drawing 225W.

So how do we get this combination of gear to a console-comparable setup?

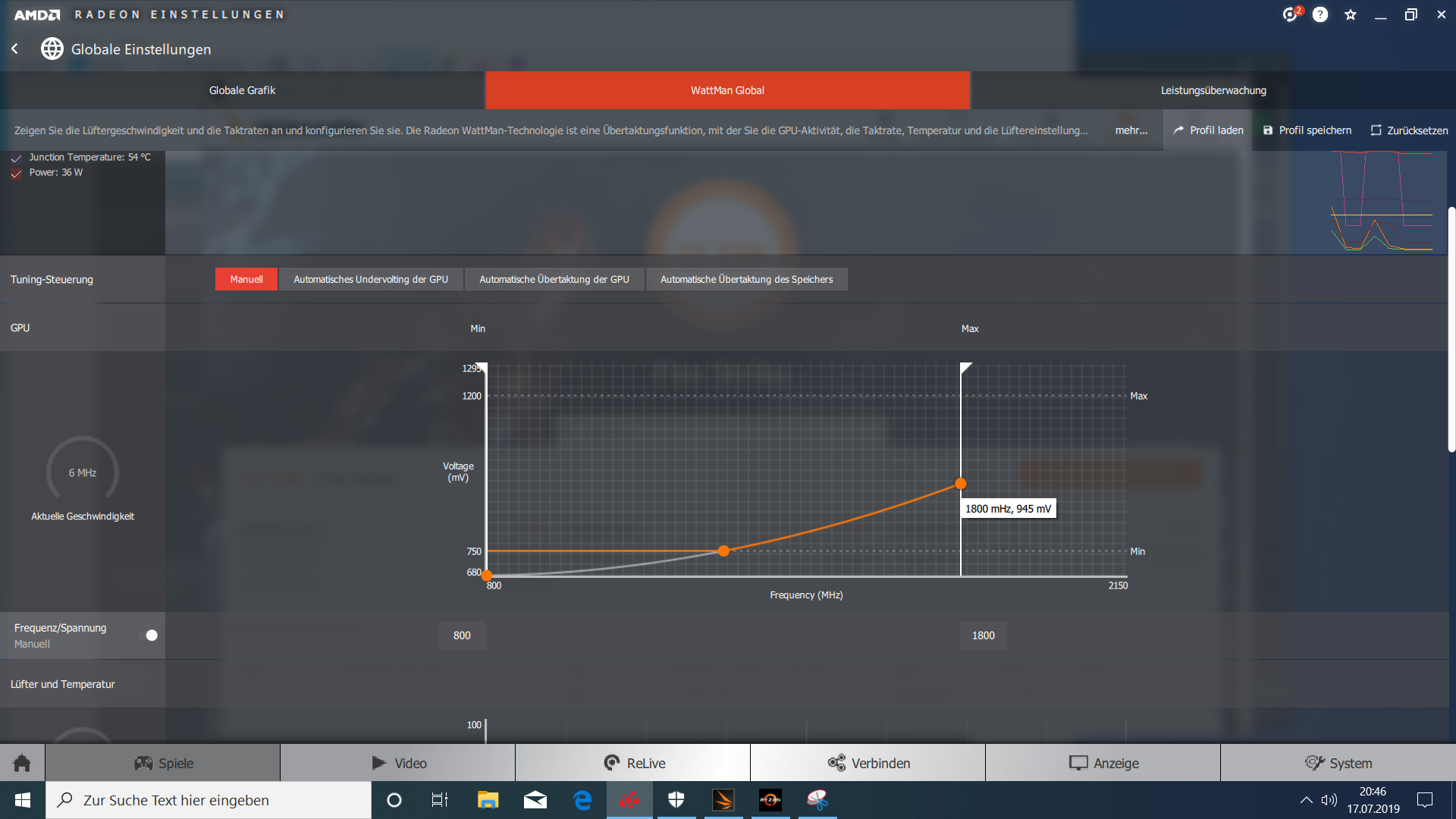

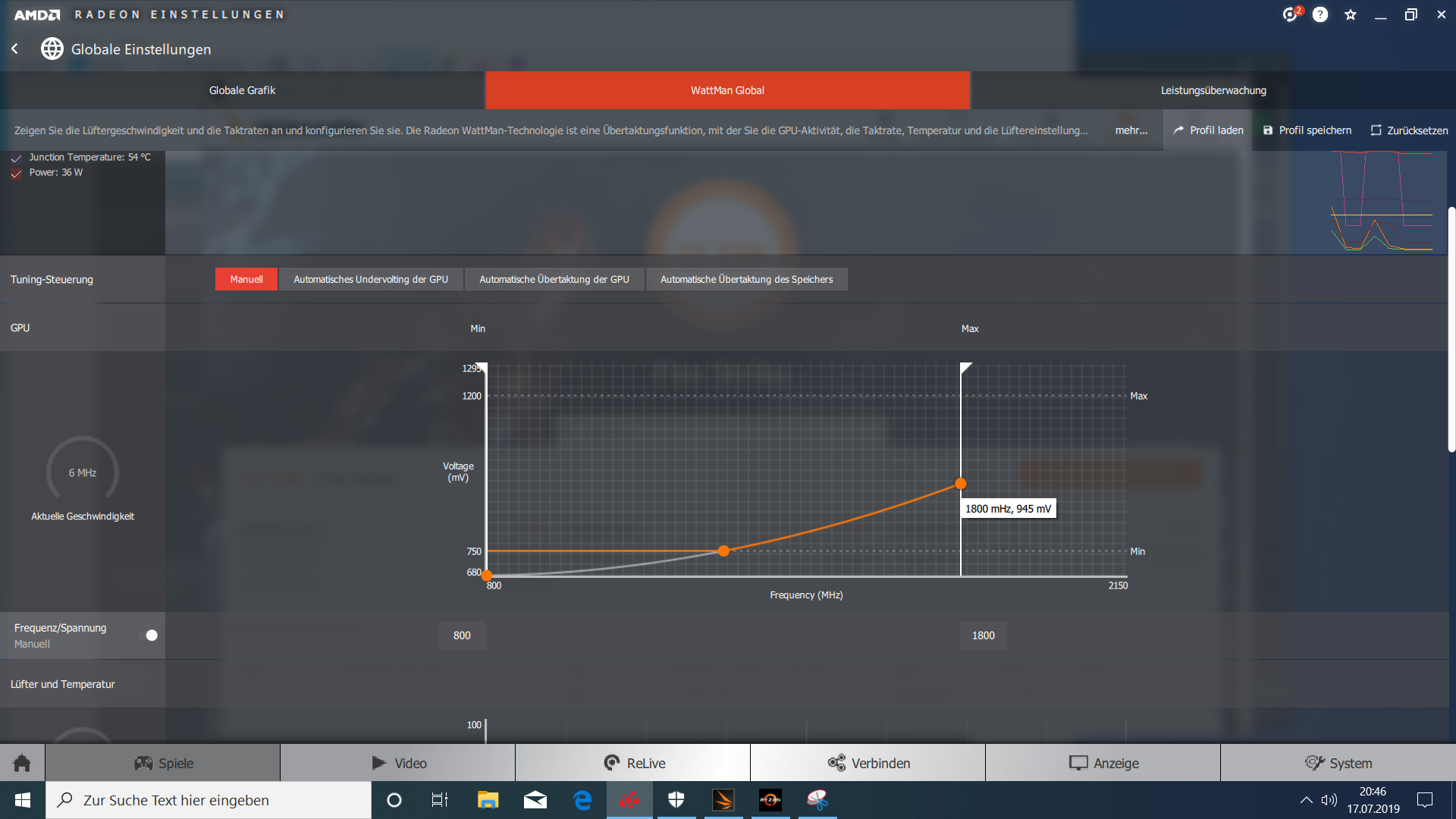

First we underclock and undervolt the GPU to what the Gonzalo leak suggests. You can achieve that by changing the parameters in AMD's Wattman:

We start by setting the power limit to -30%, which gives us a hard limit on the power drawn. From my pretesting that equates to around 125W GPU die power (die only, without VRAM and aux).
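As a quick sanity check on that slider, here is a minimal sketch of the arithmetic. It assumes the slider scales the die power limit linearly, which is my assumption and not something confirmed above. The function names are made up for illustration.

```python
def limit_after_slider(stock_limit_w: float, slider_percent: float) -> float:
    """Power limit after applying the slider, ASSUMING linear scaling."""
    return stock_limit_w * (1 + slider_percent / 100)


def implied_stock_limit(observed_limit_w: float, slider_percent: float) -> float:
    """Work backwards from an observed limit to the stock limit it implies."""
    return observed_limit_w / (1 + slider_percent / 100)


# 125W die power at -30% is the figure from the pretesting above.
implied = implied_stock_limit(125, -30)
print(f"implied stock die power limit: {implied:.1f}W")
```

Under that assumption the stock die power limit would sit somewhere just under 180W, so clearly below the 225W board figure on the spec sheet, which also covers VRAM and aux.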

After that we limit the voltage/frequency curve to just 1800MHz at 975mV (stock is 2032MHz @ 1198mV).
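To get a feel for why this undervolt matters so much, here is a rough sketch using the textbook approximation that dynamic power scales with frequency times voltage squared. That rule of thumb is an assumption on my part: it ignores leakage and everything outside the die, and the curve points are upper bounds rather than sustained values, so treat the result as a ballpark only.

```python
def dynamic_power_ratio(f_new_mhz: float, v_new_mv: float,
                        f_old_mhz: float, v_old_mv: float) -> float:
    """Ratio of dynamic power new/old, ASSUMING P is proportional to f * V^2."""
    return (f_new_mhz / f_old_mhz) * (v_new_mv / v_old_mv) ** 2


# Curve endpoints from the post: 1800MHz @ 975mV vs. stock 2032MHz @ 1198mV.
ratio = dynamic_power_ratio(1800, 975, 2032, 1198)
print(f"estimated dynamic power vs. stock: {ratio:.0%}")
```

So on paper the top of the curve costs a bit under 60% of the stock dynamic power while giving up only about 11% of the clock. Most of the saving comes from the voltage term, since it enters squared.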

Secondly we underclock and undervolt the CPU via AMD's Ryzen Master:

We cap the clock at 3.2GHz and undervolt to 1000mV (stock is 1.4V, iirc).

Benchmarks and Testing

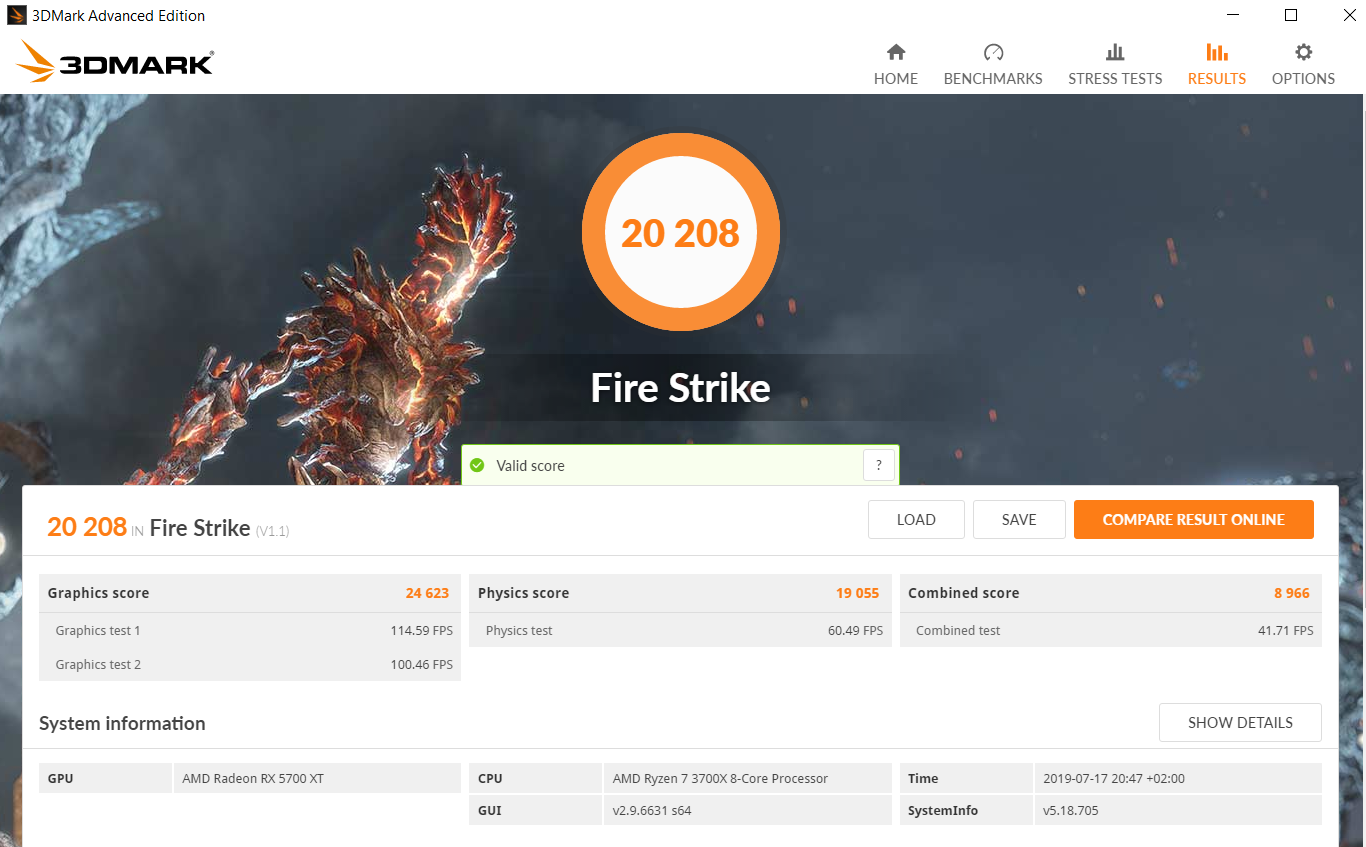

3DMark - Fire Strike:

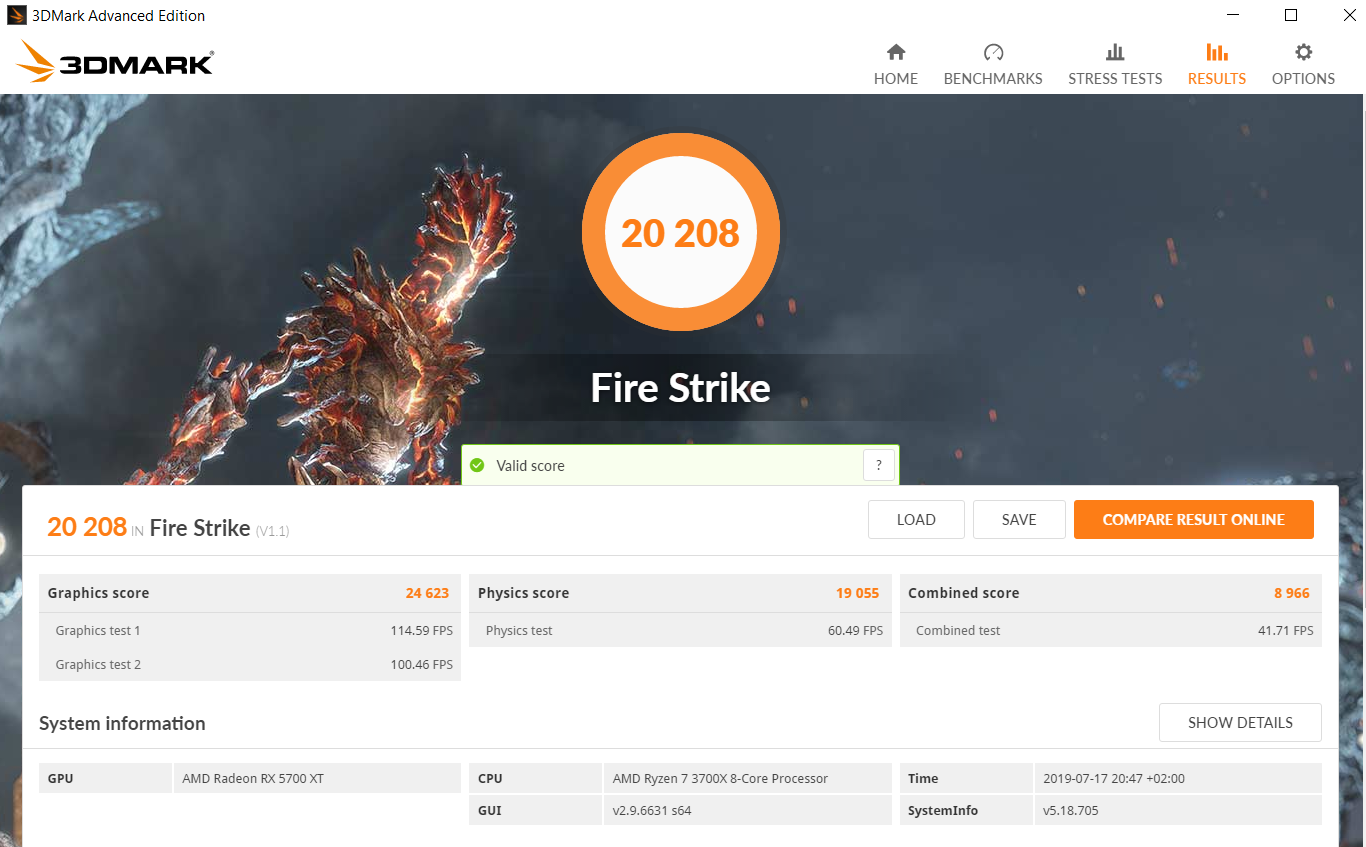

drumroll

.

.

.

Exactly what TumApisak's latest leak was suggesting.

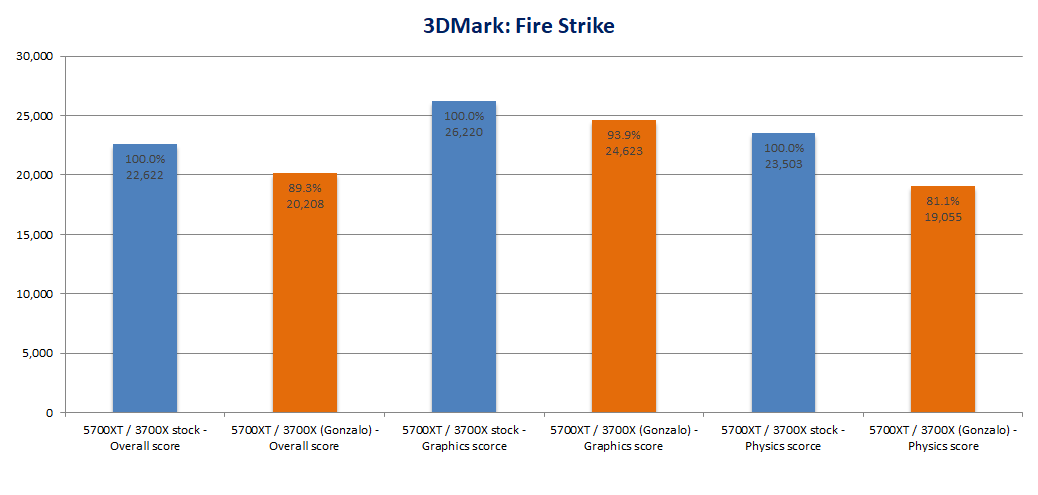

So how does that actually compare?

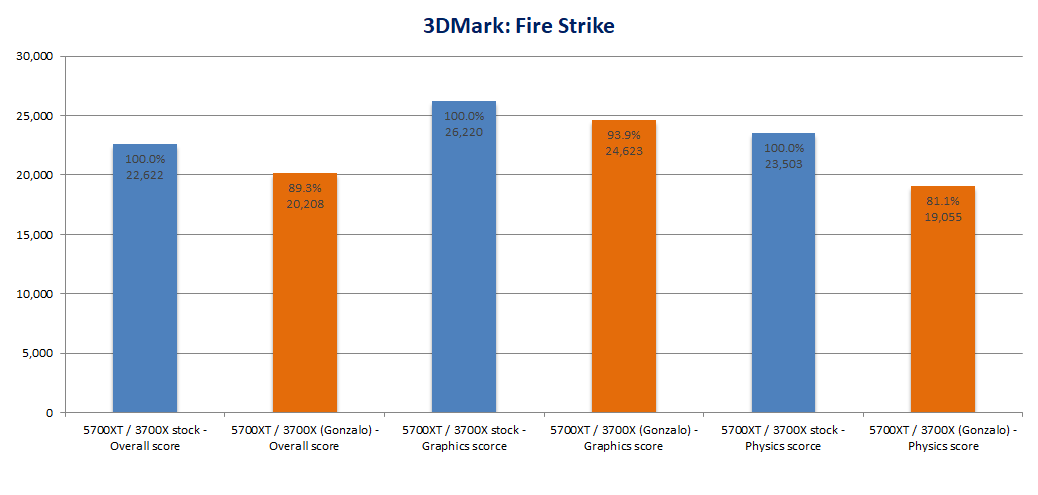

First, wow, the 3700X is a beast. With the 1600 I used before, you couldn't dream of coming close to 20K overall at the 5700 XT's stock settings. The overall score of the Gonzalo configuration is around 10% down from stock. Graphics score is down just 6%, but the CPU-dependent Physics score takes a hit from the limits and is down nearly 20%.

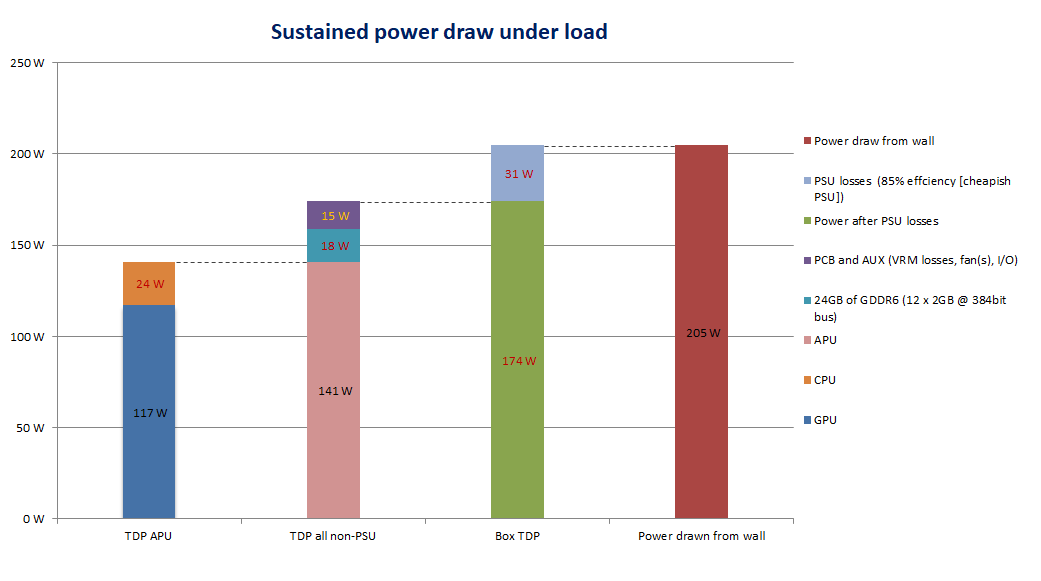

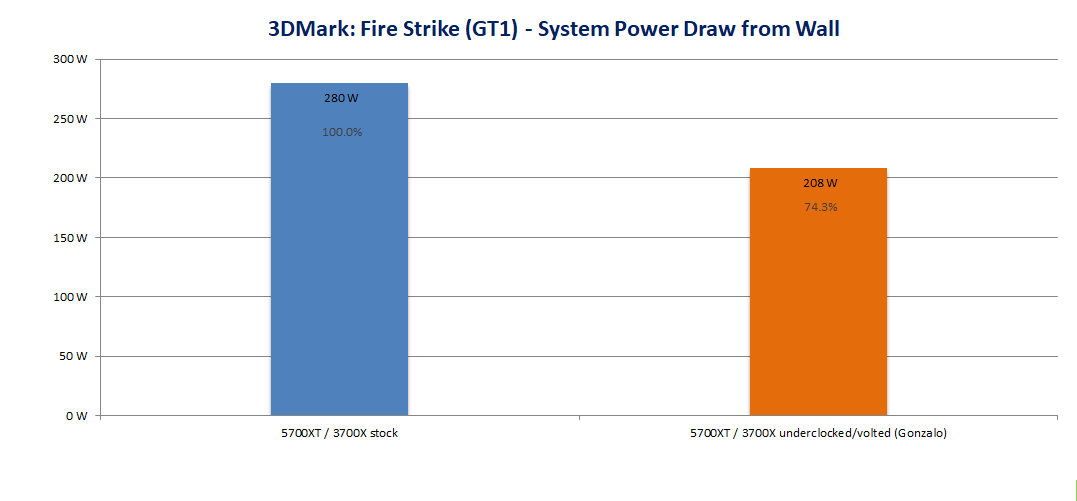

But what does it take to get there?

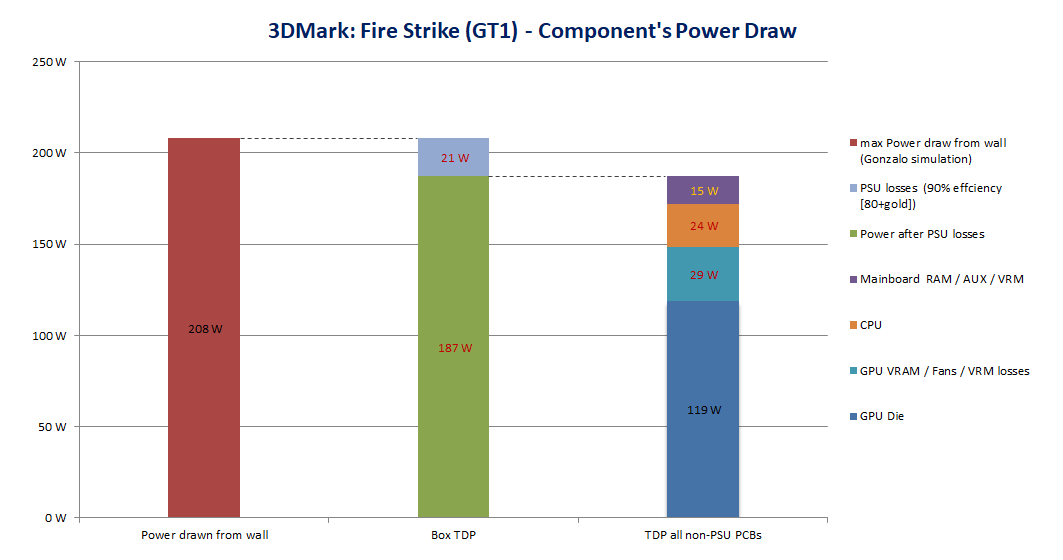

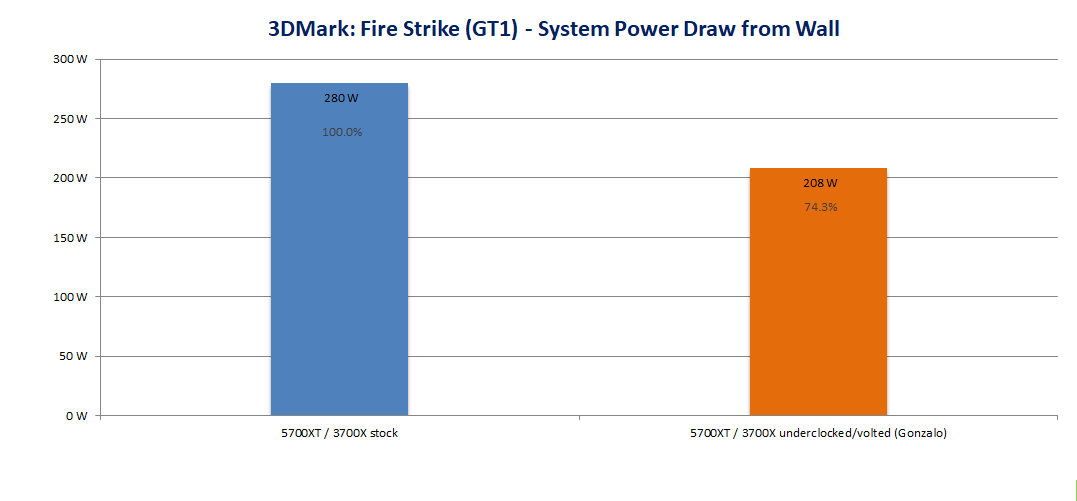

So here is the system power drawn from the wall. That's repeated peak power, not the mean. A wall-side TDP equivalent would probably be around 10W lower:

That's in Fire Strike's graphics test 1, which represents the maximum power load. Graphics test 2, which stresses other aspects of the graphics pipeline more, is around 10 watts lower.
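For anyone logging their own wall readings, here is a small sketch of the difference between a repeated peak and the mean. Defining "repeated peak" as the highest reading that shows up at least twice is my own assumption for illustration, and the trace below is made-up placeholder data, not my measurements.

```python
from collections import Counter
from statistics import mean


def repeated_peak(samples_w, min_repeats=2):
    """Highest reading seen at least `min_repeats` times (ASSUMED definition).

    Falls back to the plain maximum if no reading repeats that often.
    """
    counts = Counter(samples_w)
    repeated = [watts for watts, n in counts.items() if n >= min_repeats]
    return max(repeated) if repeated else max(samples_w)


# Made-up placeholder trace in watts, with one isolated spike (118).
trace = [100, 104, 110, 108, 118, 102, 110, 106, 109, 105]

print("mean:", mean(trace))
print("repeated peak:", repeated_peak(trace))
print("single max:", max(trace))
```

The point is just that a one-off spike gets ignored, while the repeated peak still sits above the mean, which is why a TDP-style figure would come out lower than the numbers quoted here.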

Here are the GPU clock rates, stock and Gonzalo:

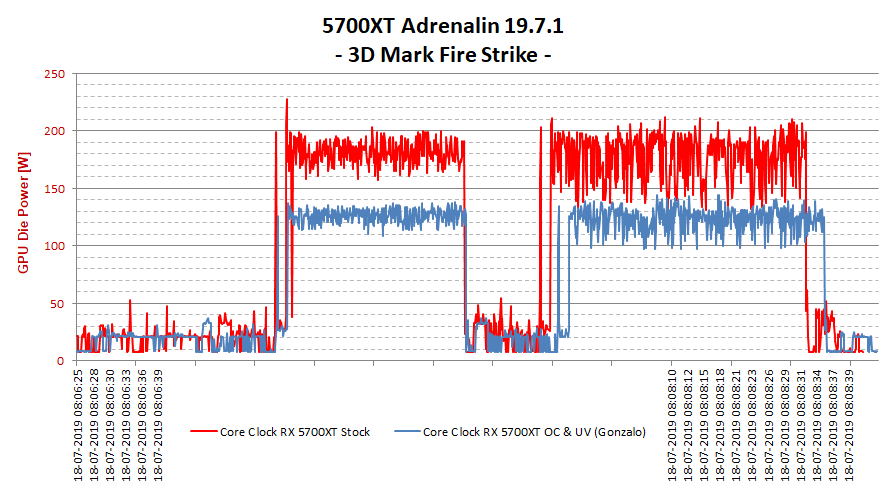

As we can see, we don't hit 1800MHz in this load because we run into the power limit. In GT2, where the power draw is lower, we reach steadier and higher clocks.

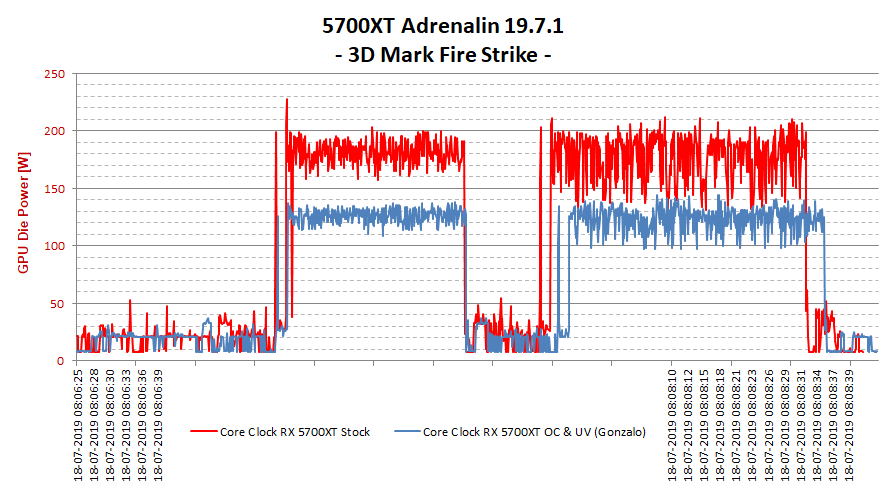

Here's the chart of GPU die power over time:

Disclaimer: I did this in quite a hurry. The settings used seem to run stable. Because of said time constraints I haven't done due diligence on everything (e.g. whether the CPU undervolt registered correctly). So take all results with a huge grain of salt.

Got to go to work now. More to follow...

Two caveats on all of the above:

- I'm not saying Gonzalo is the PS5, though it might be!

- I'm not suggesting this simulated Gonzalo is equivalent to the PS5's power in games. This thread is about how much computational power you can put in a console-sized box, not how efficiently you can use that power.
