My gamble was that the next Radeon card would push 2x 5700 XT performance, as the 5700 XT didn't really push the limits for AMD. That would put it about 50-60% above a 2080 Ti, roughly 3080 performance. This is also why I think Nvidia put their top core in the 3080. I also think the GPU was planned to launch at 700 bucks, much like AMD's Radeon VII, and to compete against Nvidia's top-end GPU as a result.

There is absolutely no reason Nvidia would push these heaters, massive cards with their top core, for 700 bucks. It's because AMD is going to drop an actual next-gen card.

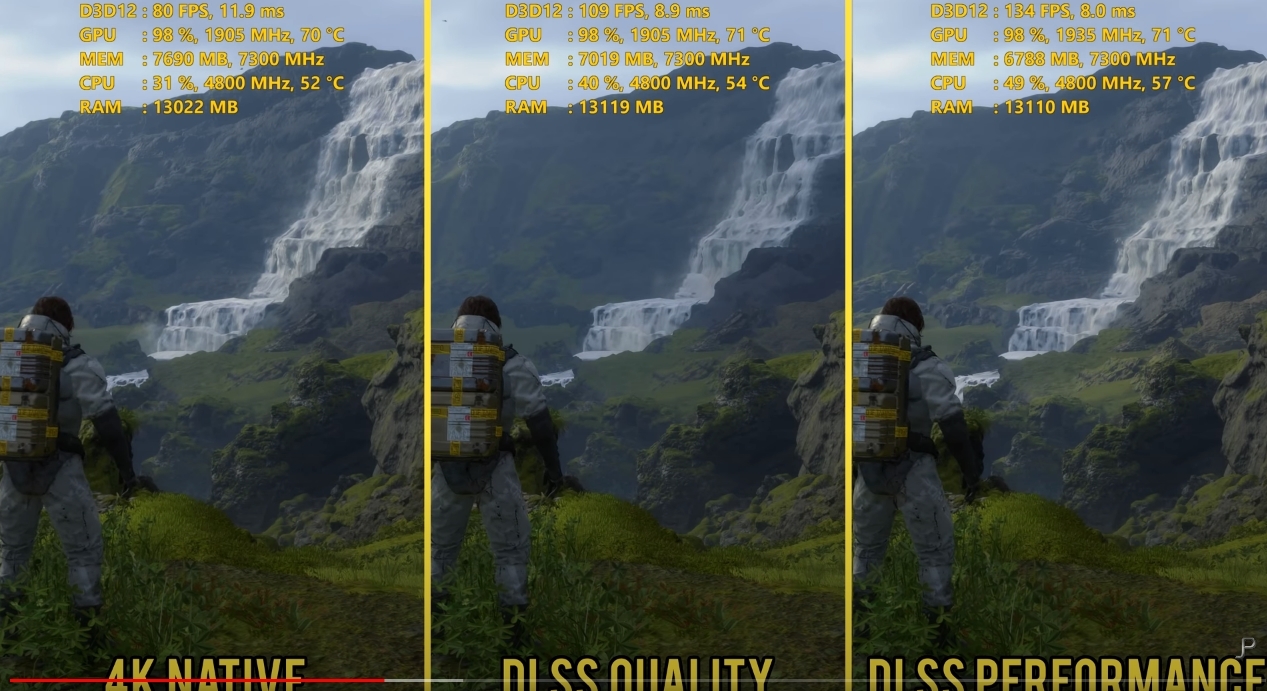

Now, obviously this could all have changed the moment Nvidia released DLSS 2.0. Frankly, look at this stuff: a 67% performance increase is no joke.

This means AMD could be in a real pickle against Nvidia, as the raw performance of the GPU is no longer going to carry it. If they have no DLSS alternative to offer, they will never beat the 3080 or 3090, and will probably even struggle against a 3070 with DLSS 2.0 active. That straight up pushes them into a budget bracket and a 400 price point. However, they could sidestep this by simply offering more VRAM and only benchmarking games that do not support DLSS in their demonstrations, so they look favorable.

However, it could explain AMD's memory setup. It's very possible they are aiming for a cheap price point instead of chasing the top again.

About AMD CPUs.

AMD CPU prices were already up with the Ryzen 3000 series. Intel CPU prices went up 60% over the last 2 generations, and AMD prices went up probably 40-50%. I am not a big fan of this outcome. Frankly, as AMD follows Intel's lead here, prices are not coming down; both are going up. AMD will do the exact same shit with their GPU department.