Seems like quite a few shills are forgetting that 4-5 years ago mid-range GPUs were $300-400. A $40-50 price increase every few years is fair, but paying $600 for an RTX 4070 isn't inflation. It's a ripoff.

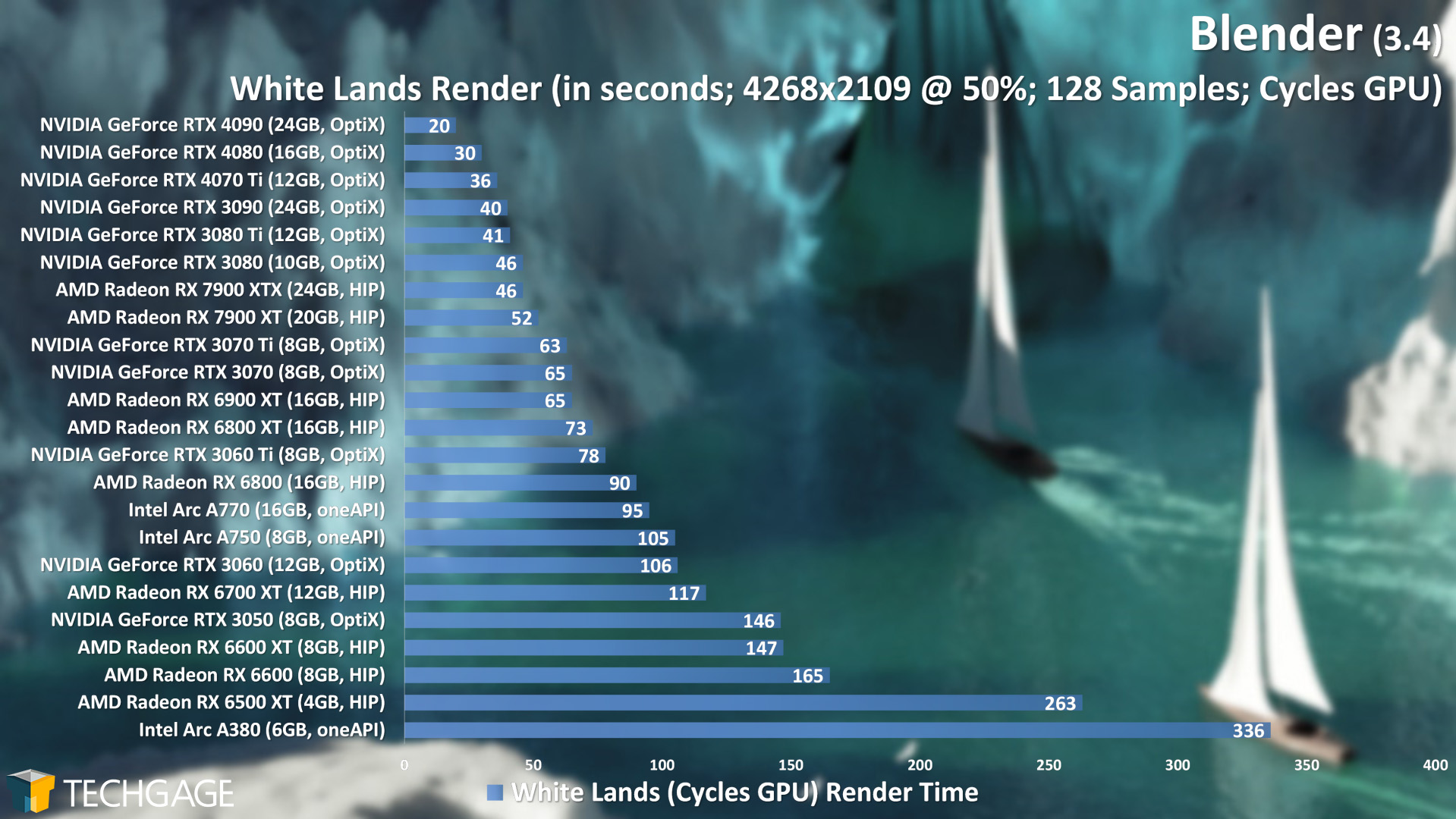

It all depends on its actual performance. If it's on average only as good as a 3070 Ti, then it's a big ripoff. If it's similar to or even slightly better than the 10 GB 3080, it's worth its price and will sell quickly, especially considering it will have 12 GB of VRAM, so 50% more than the 3070 Ti and 20% more than the 3080.

https://www.techpowerup.com/gpu-specs/geforce-rtx-3070-ti.c3675 Here's the 3070 Ti: $600 MSRP, 8 GB VRAM, launched at the end of May 2021, so during the crypto boom, aka fake MSRP. The street price was around 2x higher.

But let's just compare value vs value. If 2 years later we're effectively getting an actual 3080 with 12 GB for the same MSRP, and it won't be a fake MSRP but the actual street price, I count it as progress: 20% faster with 50% more VRAM for the same price.
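If anyone wants to sanity check those percentages, here's a quick sketch. The VRAM sizes and prices are the ones quoted in this post; the 20% performance figure is only my ballpark guess until benchmarks are out, and the function names are made up for illustration.

```python
def pct_more(new, old):
    """Percent by which `new` exceeds `old`."""
    return (new / old - 1) * 100


def value_gain(perf_ratio, new_price, old_price):
    """Percent change in performance per dollar, new card vs old card."""
    return (perf_ratio * old_price / new_price - 1) * 100


# VRAM: 12 GB (4070) vs 8 GB (3070 Ti) and 10 GB (3080)
print(round(pct_more(12, 8), 1))   # 50.0
print(round(pct_more(12, 10), 1))  # 20.0

# ASSUMPTION: 4070 is ~20% faster than a 3070 Ti (a guess, not a benchmark)
PERF_RATIO = 1.2

# Same $600 MSRP on both cards
print(round(value_gain(PERF_RATIO, 600, 600), 1))   # 20.0

# Against the ~2x street price the 3070 Ti actually sold for
print(round(value_gain(PERF_RATIO, 600, 1200), 1))  # 140.0
```

Swap in real benchmark ratios once reviews land and the same two functions give you the actual numbers.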

Inflation matters and is really big even in the US, but progress in tech should outpace inflation. We'll know in under 2 weeks how it looks.

Here's an inflation calculator:

https://www.bls.gov/data/inflation_calculator.htm Quick math: $499 (the RTX 3070's MSRP) in May 2021 is $558 in February 2023, so if the 4070 costs $599 we're paying only about 40 bucks over the inflation-adjusted price. That's not some crazy markup.
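Same quick math as a small sketch, in case someone wants to plug in other prices. It only uses the three numbers above ($499, the $558 the BLS calculator gave me, and $599); the function name is hypothetical and it doesn't query BLS data itself.

```python
def markup_vs_inflation(old_price, old_price_adjusted, new_price):
    """Split a price increase into an inflation part and a real part.

    old_price_adjusted is the old price in today's dollars, taken
    from an inflation calculator (here: the BLS result quoted above).
    """
    return {
        "inflation_pct": (old_price_adjusted / old_price - 1) * 100,
        "nominal_increase_pct": (new_price / old_price - 1) * 100,
        "dollars_over_inflation": new_price - old_price_adjusted,
        "real_increase_pct": (new_price / old_price_adjusted - 1) * 100,
    }


result = markup_vs_inflation(old_price=499, old_price_adjusted=558, new_price=599)
for name, value in result.items():
    print(f"{name}: {value:.1f}")
# inflation_pct: 11.8
# nominal_increase_pct: 20.0
# dollars_over_inflation: 41.0
# real_increase_pct: 7.3
```

So of the roughly 20% sticker increase, about 12 points is inflation and about 7% is a real price hike.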

In the end we gotta see independent benchmarks to be sure how good of a value this card is gonna be. Ofc we can tell its ballpark performance (3070 Ti to 3080), but here the specifics actually matter.

Remember, in the end its perceived value is decided by the overall market. The $1600 RTX 4090 was considered too expensive by many, but the thing is, those "many" weren't the market, aka the target audience of the 4090. The actual target audience happily bought those cards without any complaints.

Same is gonna happen here. Someone who is a customer in the $350-400 budget range is gonna be super vocal on forums/Twitter/wherever their bubble is, but the actual target, aka people who are willing to spend $600, will decide on their own, using independent benchmarks and comparing the card's value to other ones in the same bracket. That's why the RTX 4080 sells extremely badly at $1200, and that's why the RX 7900 XT had to lower its MSRP from $900 to $800. The actual market decides if a product is good, not one person or a bunch of people who wouldn't buy it anyway.