lukilladog

Member

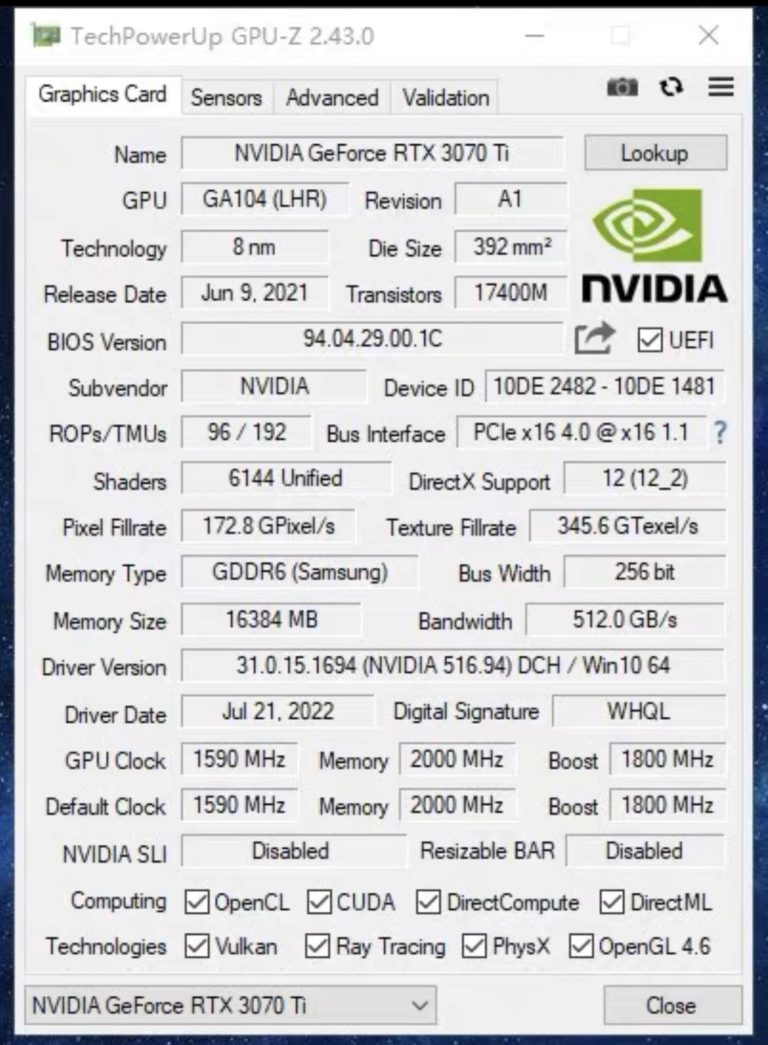

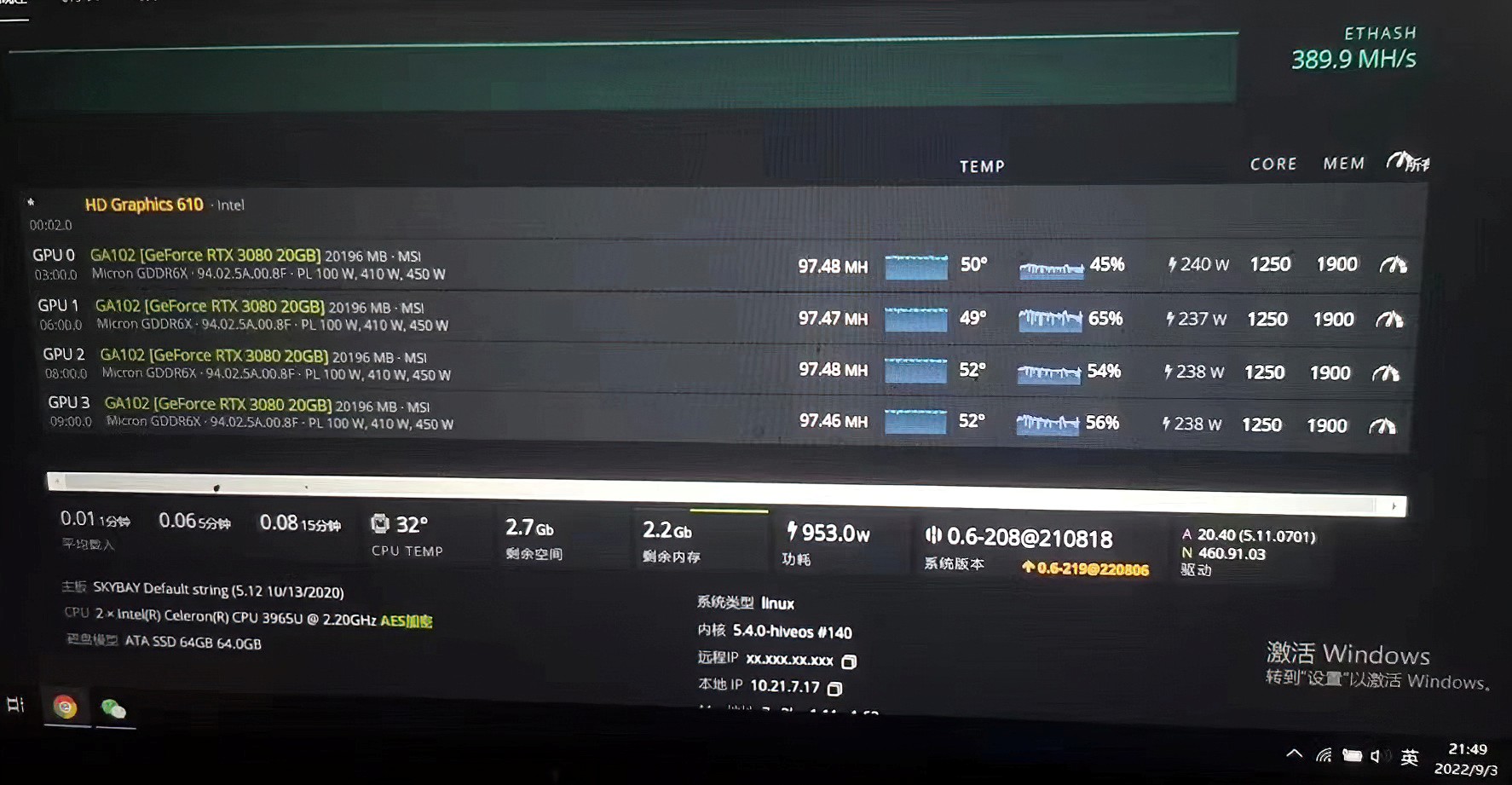

Considering Nvidia had an RTX 3080 20GB in the wings and an RTX 3070 Ti 16GB all but ready, I'm not surprised more people are doing this mod now.

Two years ago we saw it was possible:

And Nvidia didn't allow AIBs to sell these prototypes to the public... so they sold them to miners.

To select miners, at that. Even miners were pissed off by Nvidia's scumbaggery.
