Most likely but it could be a while before it becomes the standard. I guess we just have to be patient.

Yeah but on the bright side devs will have the hardware to push far above the old standards and eventually say F*** them kids

GhostRunner PS5/XSX/XSS DF Performance Analysis

- Thread starter Mr. PlayStation

MasterCornholio

Member

This is ridiculous, they did ban me in the past and now they insult me for talking about it...

BTW: Alex was right, he made a 5-hour show when I was banned, he explained how the lizard people colluded with Bill Gates and the interdimensional aliens to get rid of me.

These people need to understand that stating that Microsoft's console is not destroying the competition is not "console warring".

Neither is making fun of people who think that one day a game that runs well on a 12tf GPU will be impossible on a 10tf one without very minor tweaks.

mod edit: to respond to this user in particular, here is the sequence of events. The user was originally banned for a week in September. This is their input into that thread. This was while still having an active warning for attempting to start a bait thread about gamepass which is located here and was closed.

After this, the user returned from the ban, after the announcement of the sterner direction the moderators would be taking due to the amount of console warring. The user's ban expired on 16th September. The sticky was added to the forum on 25th September. The user again tried to make an antagonistic, bait type of post here - again directed at gamepass. Previous warnings (which stand at five) and behaviour in line with the new policy about ambiguity escalated this to another week ban which expired on 9th October.

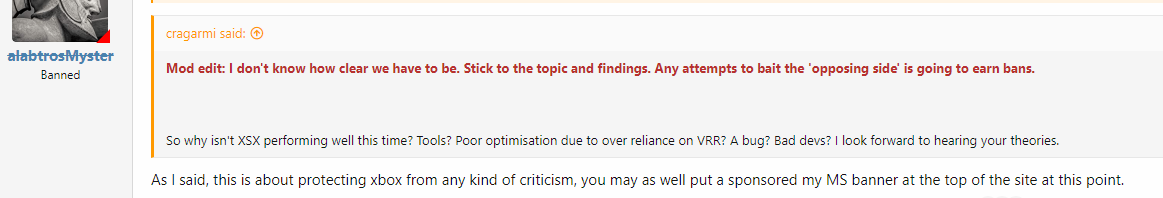

This episode started with the poster attempting to derail this thread with this post - the quoted user was banned for ignoring a mod warning the same day as described on the ban page, and trying to incite console wars. It is pretty clear this post is not interested in opening a discussion and is exactly the type of post the sticky warned would be moderated:

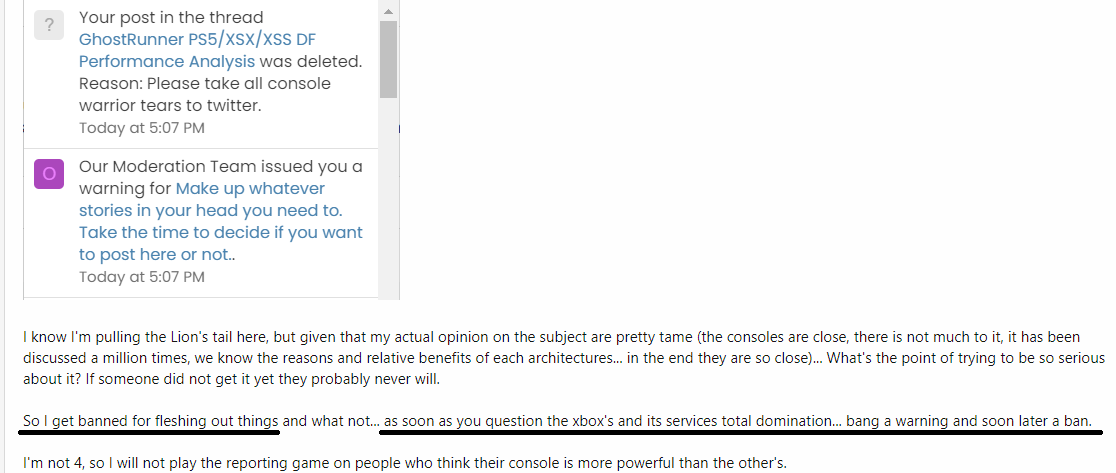

The post was deleted and the user warned about trying to misrepresent why moderation actions were taken:

The user was not banned as they claimed here in the first part. As you can see from above, the post was deleted and they were given a warning and were still posting - there was also no reply ban issued. We will leave you to decide whether the user previously was 'questioning xbox and its services' legitimately from the previously linked posts. The above post was deleted because of this misrepresentation/conspiracy theory and the user notified why (in the spoilered original post). This user makes no reference to the zero tolerance policy surrounding the TF rating or RDNA 1.5 arguments on the board against the PS5 because it does not align with their made up stories.

To clarify (and evidenced above) the user has never been banned for:

You need to relax.

Also love the transparency on this site.

DenchDeckard

Moderated wildly

Hey guys, can we all agree that if at some point in the future GhostRunner gets patched on Series X, and if those patches remove or drastically reduce these issues, then we can all just accept the reality that there will be occasions where something ain't right with one version over the other in these comparisons, and that we should wait before we spend ages going back and forth on what some of us believe is the hardware causing the difference?

I'm sure, to be honest, some indie dev with 30 employees might not even bother to revisit it, so I may be wasting my time saying this lol.

I wonder if they've commented on the performance difference yet.

Gameplaylover

Member

Wow, the mods here really do have patience and passion. I wouldn't have spent so much time and simply banned.

DenchDeckard

Moderated wildly

I just searched Google for their Twitter, and it turns out from reading their tweets that there are issues with the console versions and they didn't even port it. They just keep responding "we have passed your issue onto our partner responsible for the console versions".

Yeah, this ain't no clean decent port job by the studio that made the game.

Die Namek Ability

Member

Yes, it would be logical to change your stance when new evidence is presented. Otherwise it's just being intellectually dishonest.

MasterCornholio

Member

It's not like the XSX is guaranteed to win every performance comparison. Each system has its own advantages and disadvantages that can lead to these differences.

The two are pretty close so any small thing can lead to a win. It's not like we are comparing the XSS to either the PS5 or the XSX.

DenchDeckard

Moderated wildly

This is absolutely my point and I couldn't agree more. What I was trying to convey is that it doesn't make sense for either console to show what I would consider a large framerate delta of like 20 to 30 percent in favour of one over the other.

Turns out, it's a third party that did the console versions and I can't be bothered to play Sherlock Holmes to find out who, or if the same company even did both the PS5 and Xbox versions. This could be the cause of the larger than expected difference here.

MasterCornholio

Member

Sometimes you have extreme cases where the differences are larger than expected (Hitman 3 and The Touryst) and it could just be down to how the engine interacts with the hardware. But not every game is going to use the hardware in that manner, which is why those cases are few.

It's true that the developers could have messed up both versions of the game but it's also true that it isn't odd if the PS5 has an advantage with some titles given the differences between the systems.

Snake29

RSI Employee of the Year

It's what a lot of devs had already stated long before the release. But a large group just didn't want to listen, and were blinded by what Microsoft presented to them.

Vognerful

Member

I think they had to.

I don't mean about banning him, but about the long explanation for the ban. There have been many posts in other threads accusing mods of banning people who favor PlayStation while letting Xbox fanboys off the hook without justification.

Gameplaylover

Member

People should just learn to behave and nobody gets banned. Stupid fighting over plastic toys.

DarkMage619

$MSFT

SMH it's like you refuse to take in information & just keep repeating silly stuff.

Coming from the guy trying to advocate that GhostRunner is just too much for the Xbox to handle shows a significant lack of self awareness. To believe that the PS5 is demonstrating some sort of technical magic vs it just receiving more optimization speaks volumes. There is nothing special about this game that would keep it from running well on all current generation consoles unless the dev didn't optimize it for all consoles. They did so on PS5 hopefully they will on Xbox too.

Iced Arcade

Member

mod truth bombs

DenchDeckard

Moderated wildly

You only have to glance at the studio's 2.5K Twitter account to see that the console versions of the game are outsourced. Each console version could have been done by a different studio, or perhaps the outsourcing studio did the absolute bare minimum on each version to get the cheque. We don't know, but we know that it's outsourced, so even if we reached out to the studio to say "Are you aware of the performance issues on Xbox" they would just respond with "We have passed this onto our partner who looked after the Xbox version" and that could be it.

DarkMage619

$MSFT

This makes complete sense. There is no magic, it's simply a matter of where and who put in the effort. It's totally possible the outsourced studio didn't put in the effort on the Xbox version as a different studio did with the PS5 version. The proof is in the video.

Loxus

Member

When did I say this game is too much for Xbox Series X to handle? The game is 4k 60fps on Xbox Series X

MasterCornholio

Member

I guess it's a repeat of dox gate for him.

dcmk7

Banned

Just reading the last page.. and trying to keep up. So the new narrative seems to be that the console versions have been outsourced to not one but two different studios. One for each platform.. and the Xbox studio couldn't be arsed hence the poor performance.

That's quite an extraordinary set of events! And quite far fetched.

Anyone got any evidence? For it even being outsourced first of all..

dcmk7

Banned

Where he accused half the forum of doxxing some YouTuber and then was caught lying about it?

Don't think this is quite that bad like

Sosokrates

Report me if I continue to console war

Some of you all are reaching too much.

Implementing DualSense features could be as simple as button mapping. With additional vibration API presets.

As far as PS5's performance goes.

It could be just the GPU being more efficient. Especially with the assistance of the I/O Complex.

Teraflops isn't everything either.

"If you just calculate teraflops you get the same number, but actually the performance is noticeably different because teraflops is defined as the computational capability of the vector ALU.

That's just one part of the GPU, there are a lot of other units and those other units all run faster when the GPU frequency is higher. At 33% higher frequency, rasterization goes 33% faster. Processing the command buffer goes that much faster. The L2 and other caches have that much higher bandwidth and so on."
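For anyone who wants to check the arithmetic behind that quote, here is a minimal sketch. It assumes the commonly quoted peak-FP32 formula for AMD GCN/RDNA parts (CUs x 64 lanes x 2 operations per fused multiply-add x clock). The two GPU configurations are hypothetical and picked only so the clocks differ by 33% at equal teraflops; they are not taken from this thread.

```python
def peak_tflops(compute_units: int, clock_ghz: float,
                lanes_per_cu: int = 64, ops_per_lane: int = 2) -> float:
    """Peak FP32 TFLOPS, assuming the usual CUs * lanes * 2 (FMA) * clock formula."""
    return compute_units * lanes_per_cu * ops_per_lane * clock_ghz / 1000.0

# Hypothetical configurations (assumption): fewer CUs at a higher clock
# versus more CUs at a lower clock.
narrow_fast = peak_tflops(36, 1.0)
wide_slow = peak_tflops(48, 0.75)

# Ratio of the two clocks; fixed-function units scale with this, not with TFLOPS.
clock_ratio = 1.0 / 0.75

print(f"narrow/fast: {narrow_fast:.3f} TF, wide/slow: {wide_slow:.3f} TF")
print(f"clock advantage of the narrow GPU: {(clock_ratio - 1) * 100:.0f}%")
```

Both configurations land on the same teraflops figure, which is the quote's point: the number only describes the vector ALUs, so it says nothing about the parts of the GPU that simply run faster at a higher clock.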

Higher clocks also play a role.

I think it's about time we accept some games will perform better on PS5, while others on XBSX and stop this back and forth bickering.

It would have been useful if Cerny provided some demos showing the better performance of the higher clocked GPU.

Because what does he mean by "noticeably different"? 45fps and 43fps is noticeably different but it is also negligible.

If you're going to make a statement like this where it has never been the case on pc GPUs people are going to be skeptical.

MasterCornholio

Member

But yes it's why I don't pay too much attention to what he says. Haven't ignored him yet because I don't think he's that bad.

Anyways sorry for the derail.

Werewolfgrandma

Banned

Hey

chilichote

what's so funny?

chilichote

what's so funny?

Duchess

Member

Actually, it's because the PS5 has AMD SmartShift

Loxus

Member

All you have to do is just look up the things Cerny was talking about.

Rasterisation for example.

Rasterization (or rasterisation) is the task of taking an image described in a vector graphics format (shapes) and converting it into a raster image (a series of pixels, dots or lines, which, when displayed together, create the image which was represented via shapes). The rasterized image may then be displayed on a computer display, video display or printer, or stored in a bitmap file format.

The main advantage of rasterization is its speed, especially compared with ray tracing. The GPU will tell the game to create a 3D image out of small shapes, most often triangles. These triangles are turned into individual pixels and then put through a shader to create the image you see on screen.

Vector ALUs are the base unit that actually performs the mathematical operations called for as part of a shader program.

From my understanding, it sounds like converting the scene into a raster image faster translates into higher frames per second when teraflops are the same but the GPU is clocked higher, while the ALU mathematical operations stay the same.

Like I said before, some games will perform better on PS5 depending on clocks while others on XBSX depending on teraflops.

How do you know this isn't the case in PC GPUs?

AMD’s Flagship Navi 31 GPU Based on Next-Gen RDNA 3 Architecture Has Reportedly Been Taped Out

There have been several rumors stating that the upcoming RDNA 3 GPUs are going to outperform whatever NVIDIA has to offer in terms of rasterization performance.

DarkMage619

$MSFT

It runs, yes. Runs poorly. I'm hoping the Xbox versions get the PS5 treatment.

Sosokrates

Report me if I continue to console war

We know this from PC GPUs because if you get two PC GPUs with the same architecture and TFLOPS but different CU counts and clock speeds, the one with the higher clock speed does not see an advantage.

If you wish for examples you can google and search YouTube, I can't be bothered to look for you.

Your speculation is interesting though, but it is speculation. If Cerny had demos and explained in more detail there would be no need to speculate.

Wait one minute!

Where is your point of reference other than the fact that it runs better on PS5?

The game runs native 4k 60fps on Xbox Series X. The problem comes in when the game is giving you bonus features like ray tracing or 120fps.

What other games run 4k 60fps with ray tracing on Xbox Series X?

None that I can think of at the moment, so why do you feel that it should be 4k locked 60fps with ray tracing other than the fact PS5 did it?

Shmunter

Member

All I have to say is that this game is awesome. Nearing the end now I'm sure.

Love the old school difficulty. It's as much a puzzler as it is a game of reflexes - how you approach each scenario, and perfect your run.

Where it shines is the instant restarts with short checkpoints - that's where roguelikes leave me - going back to the beginning is no fun. Not an issue here.

Go grab it!

Mr. PlayStation

Member

I am waiting on a sale for the game, will be checking it out on PS5 on that 120fps mode

Md Ray

Member

False.

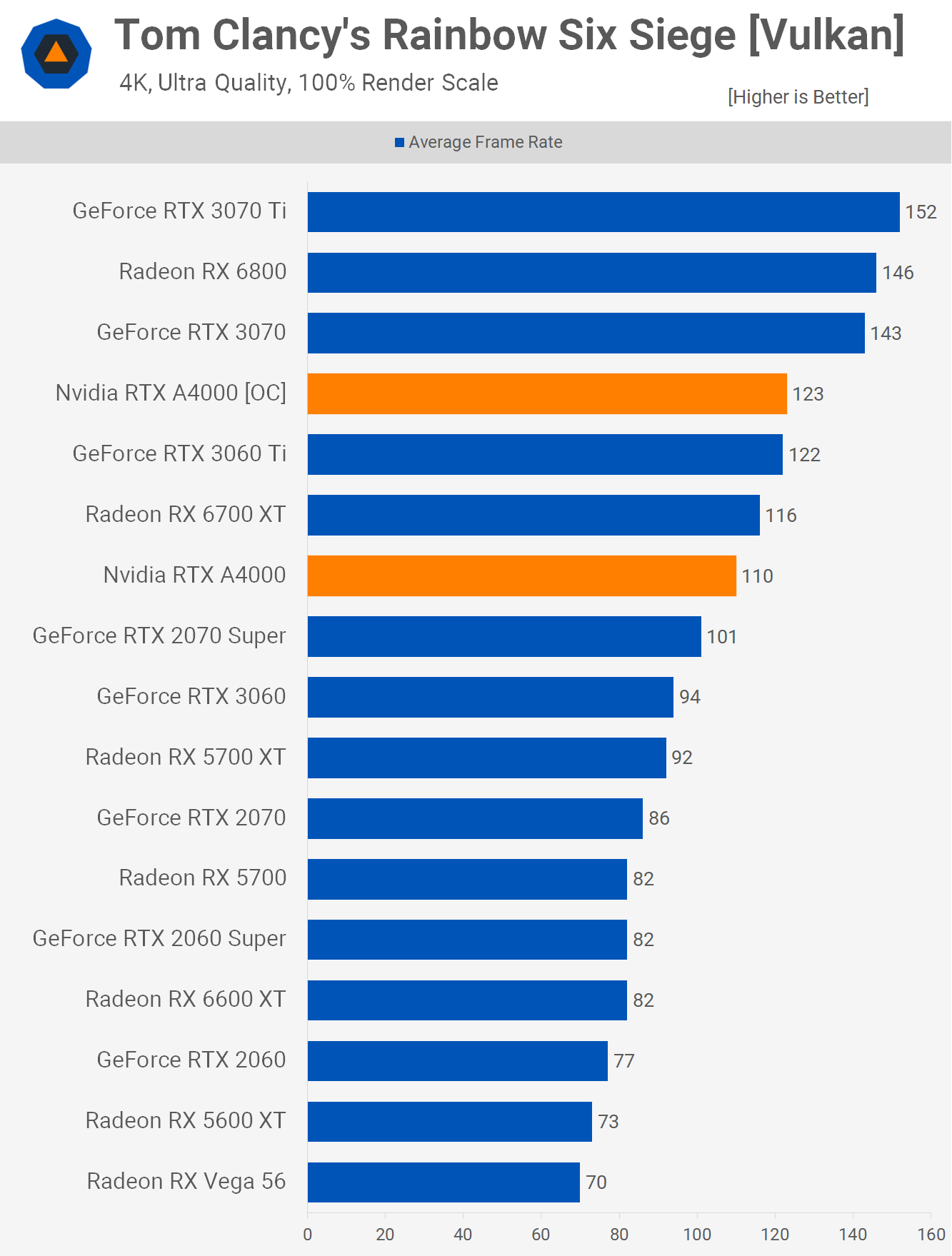

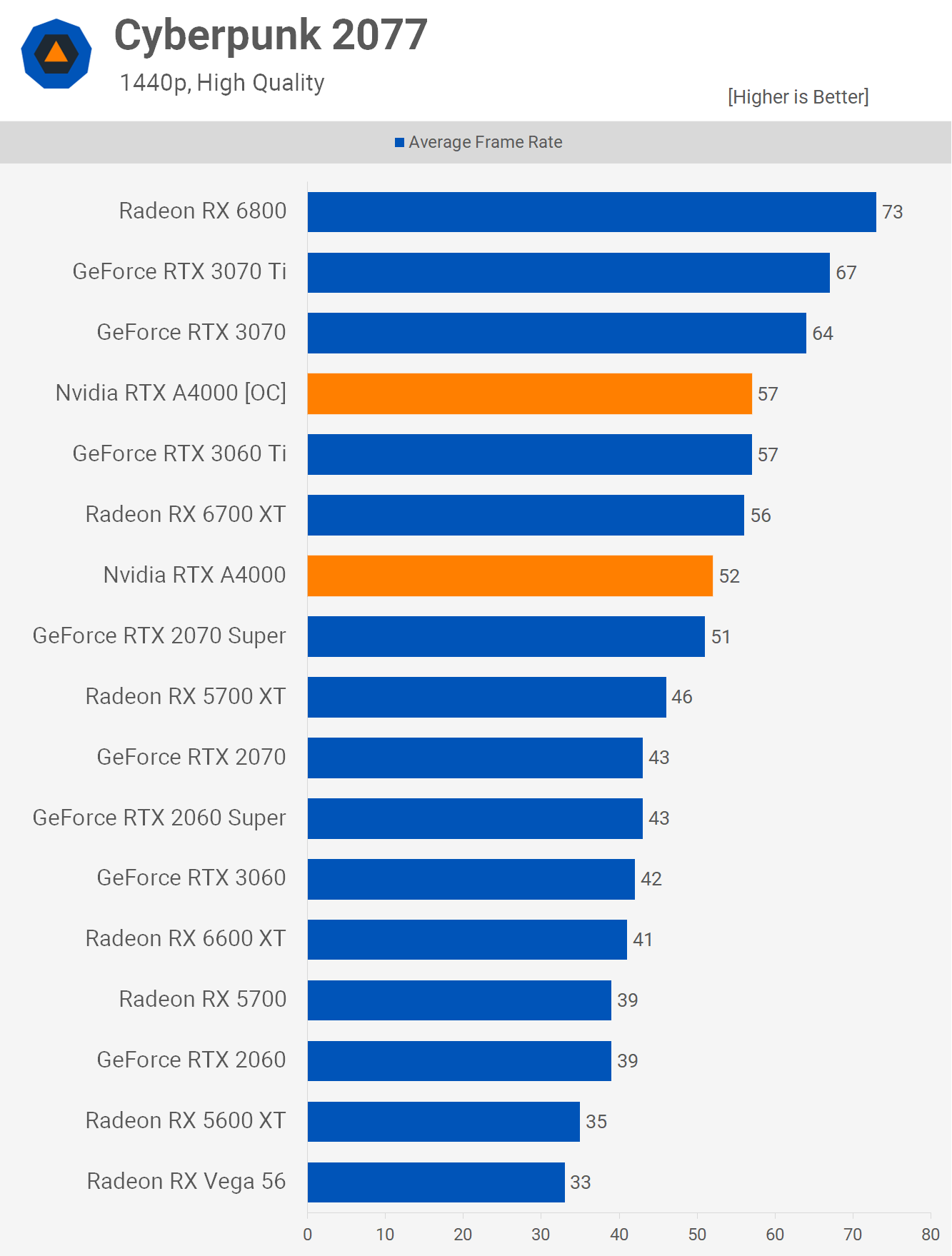

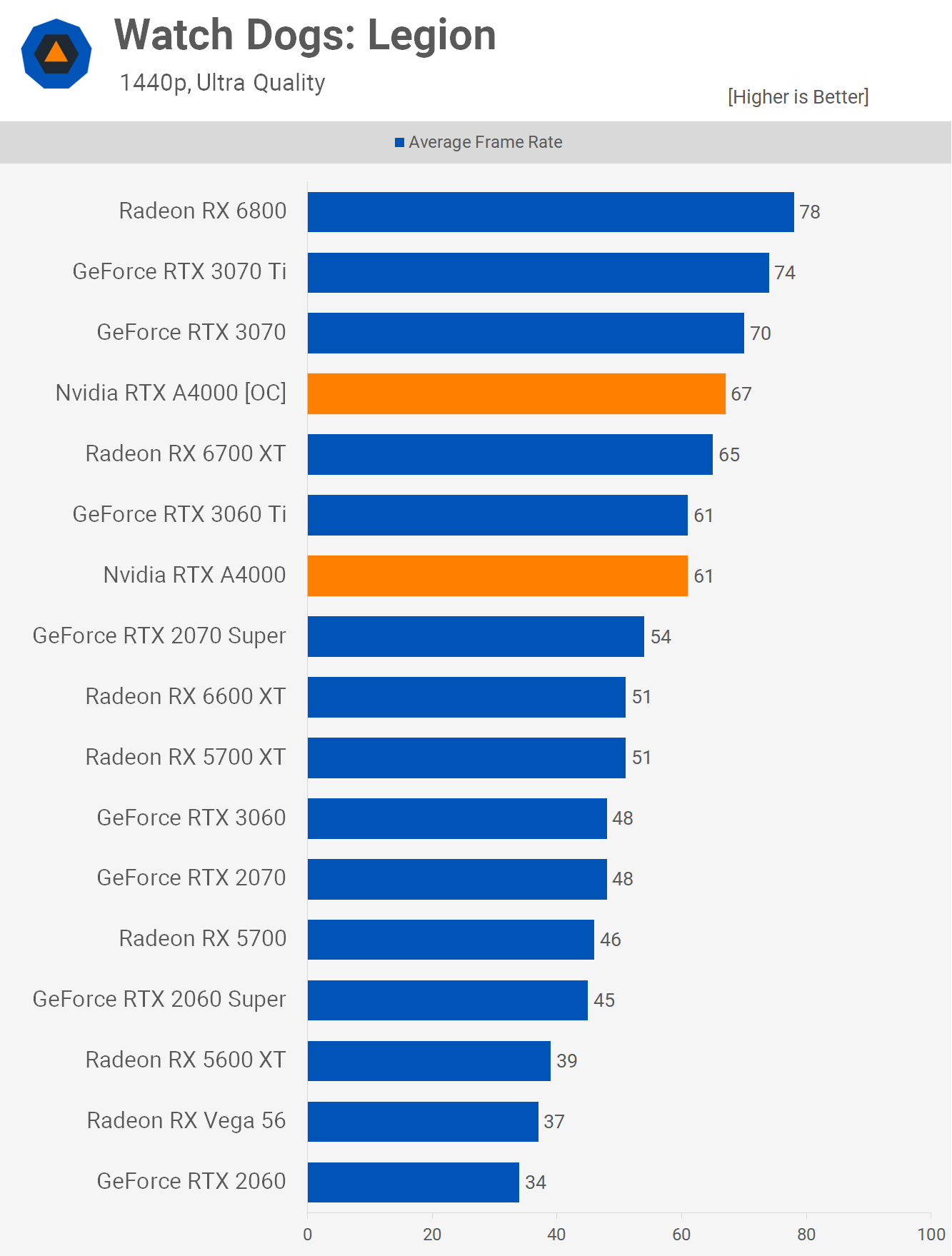

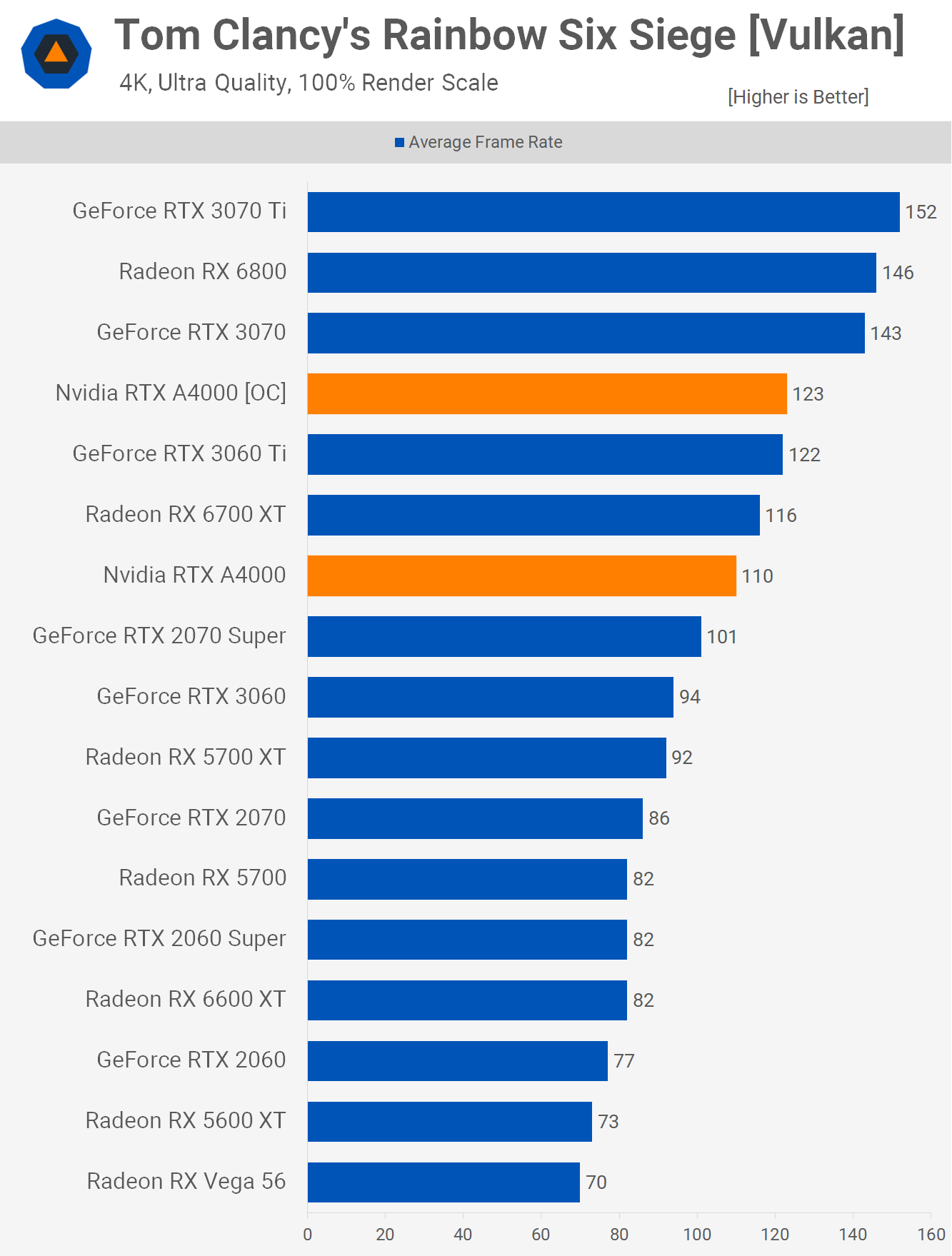

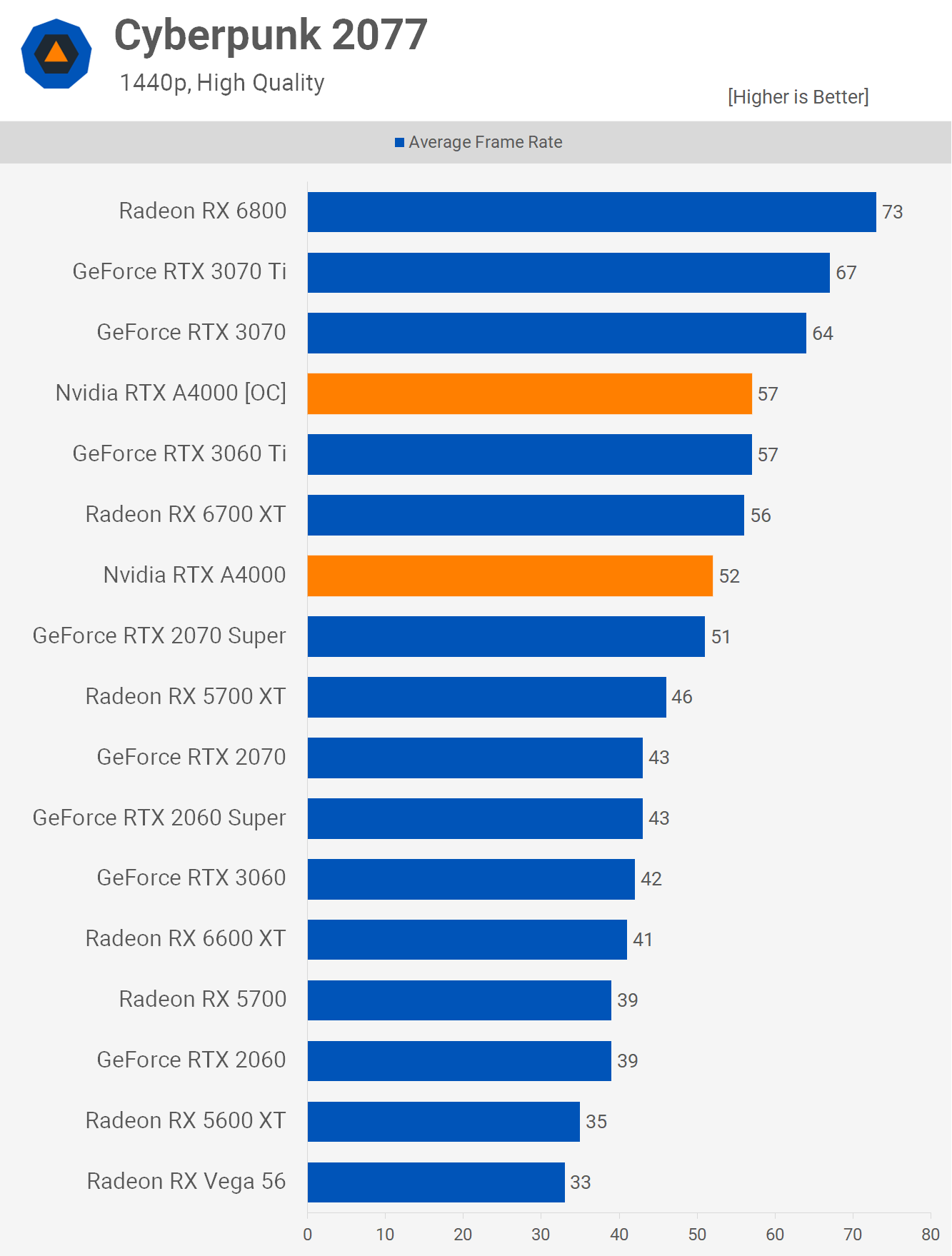

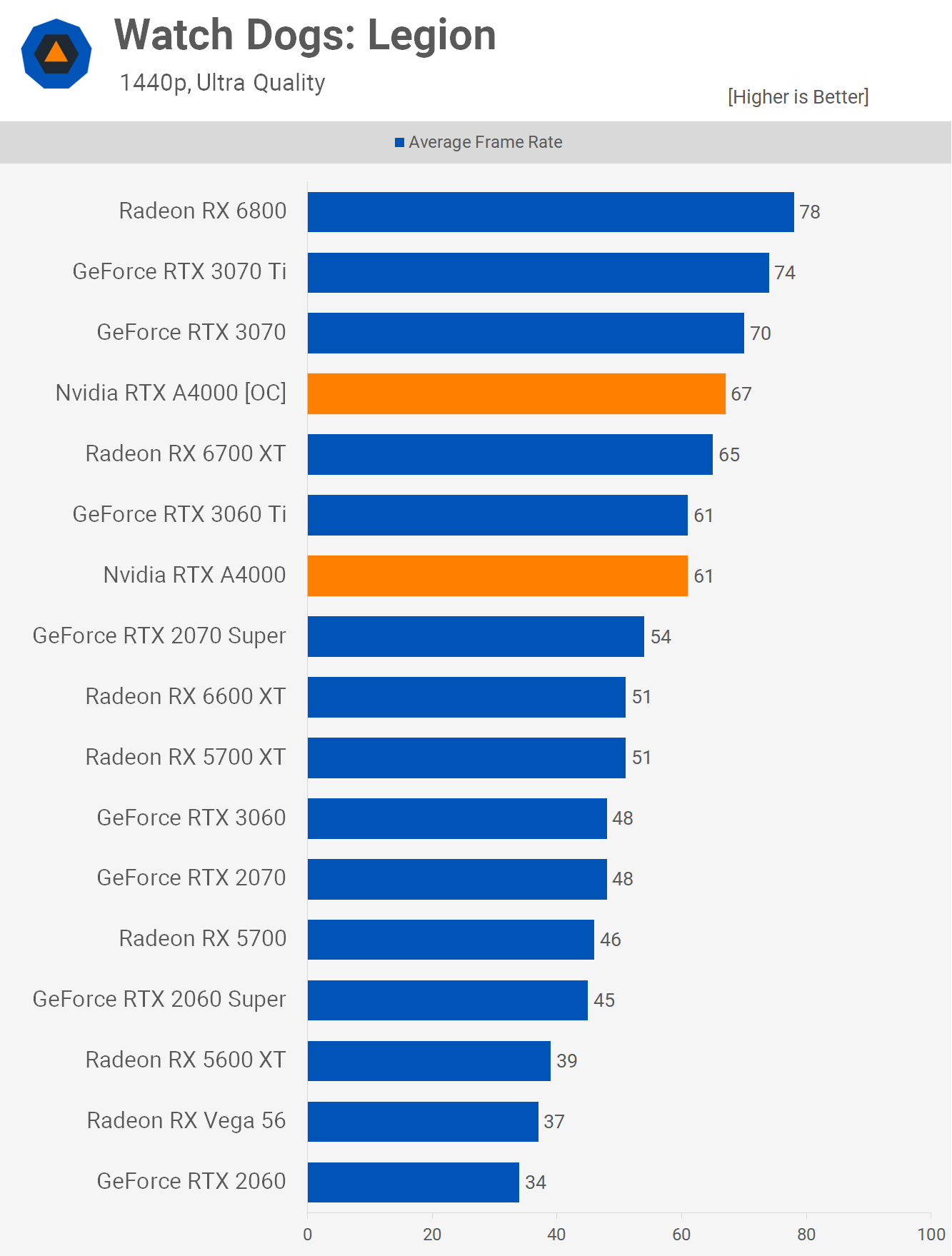

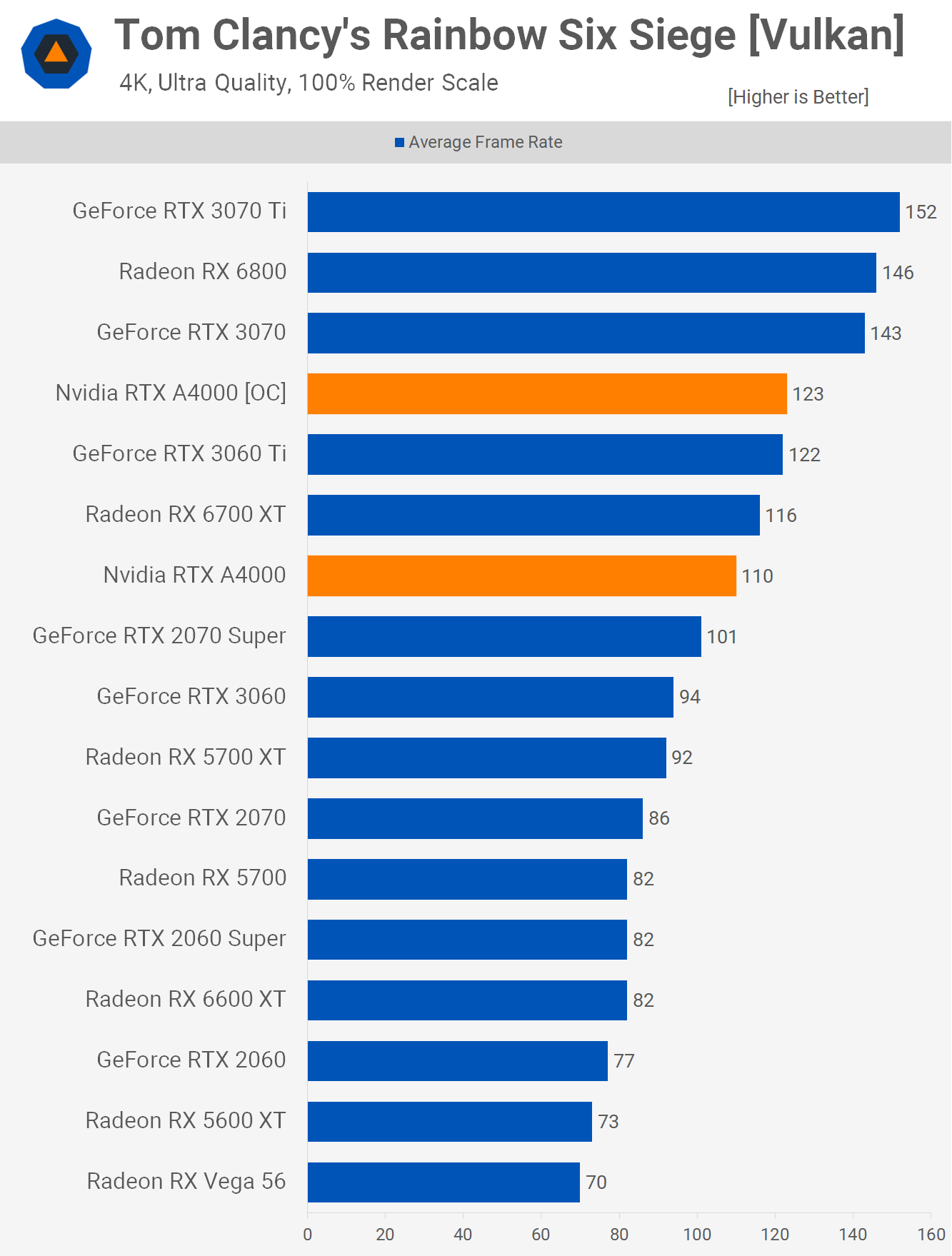

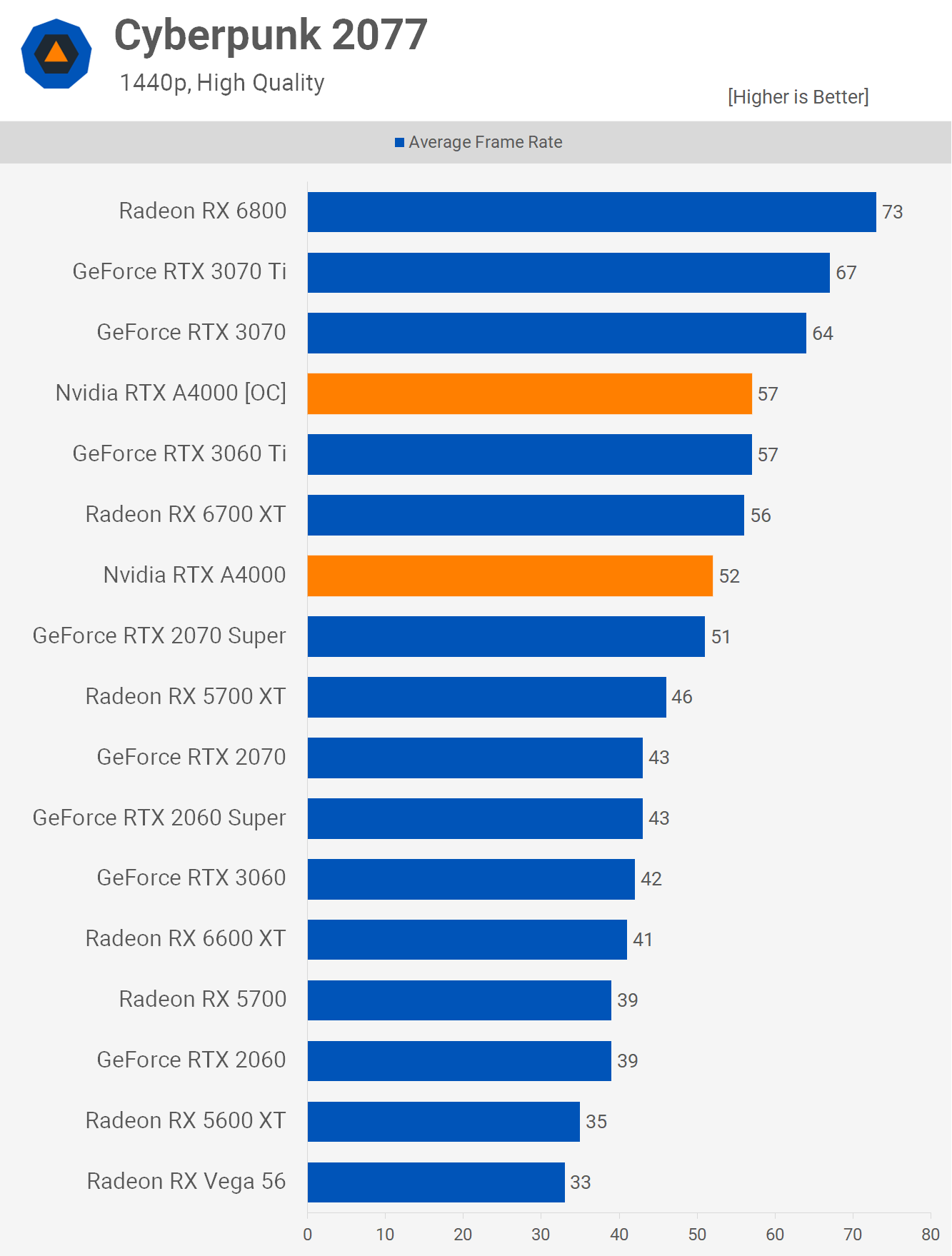

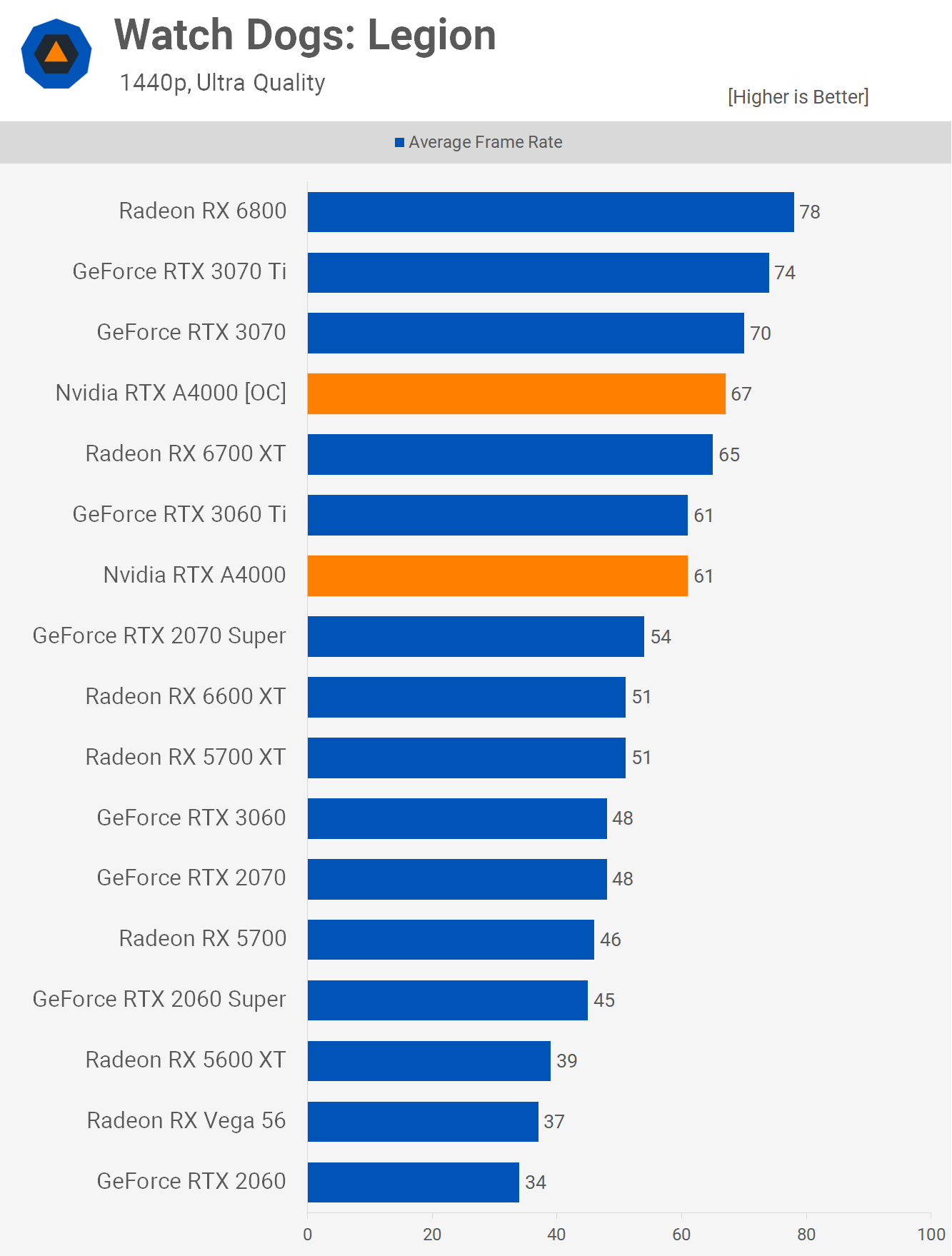

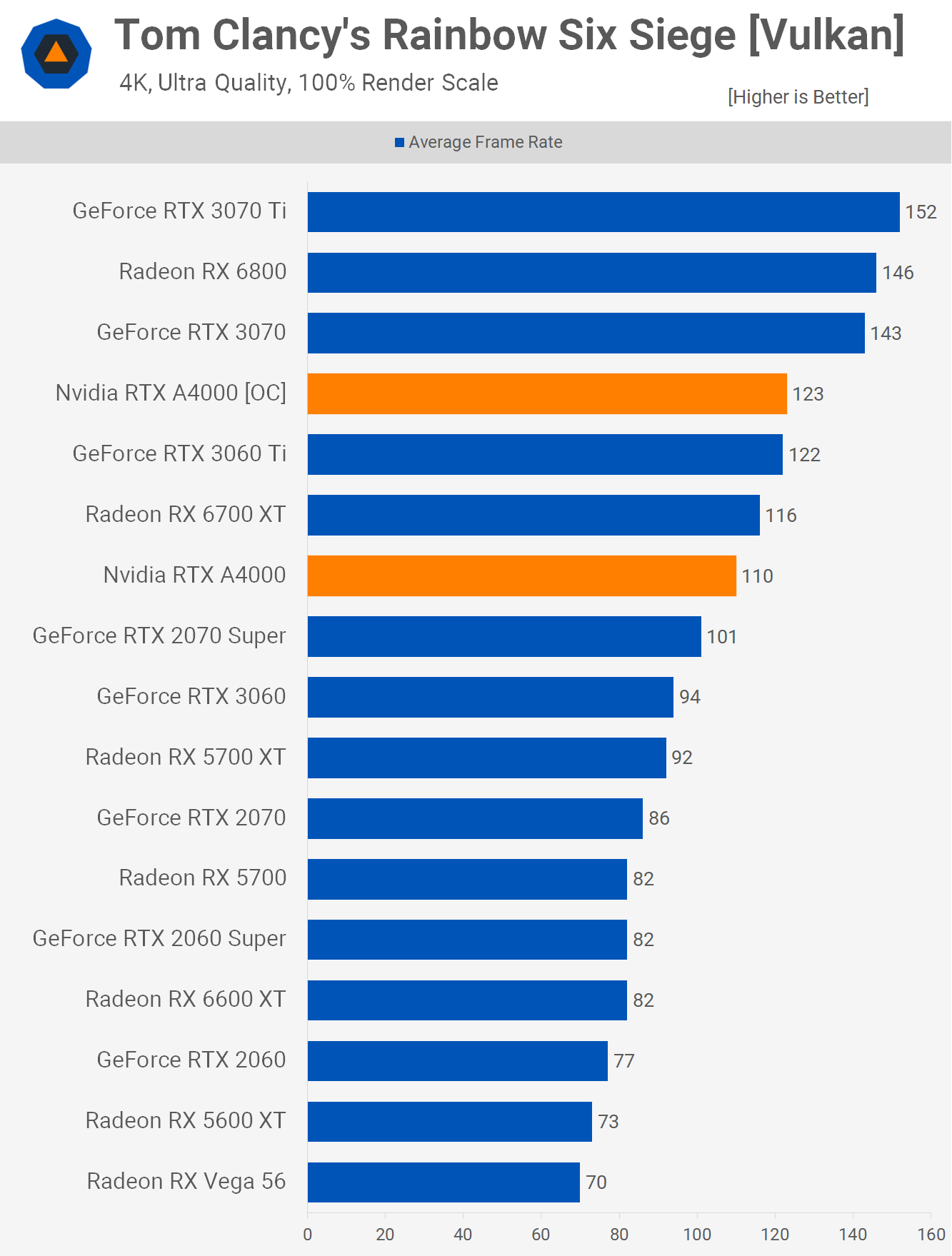

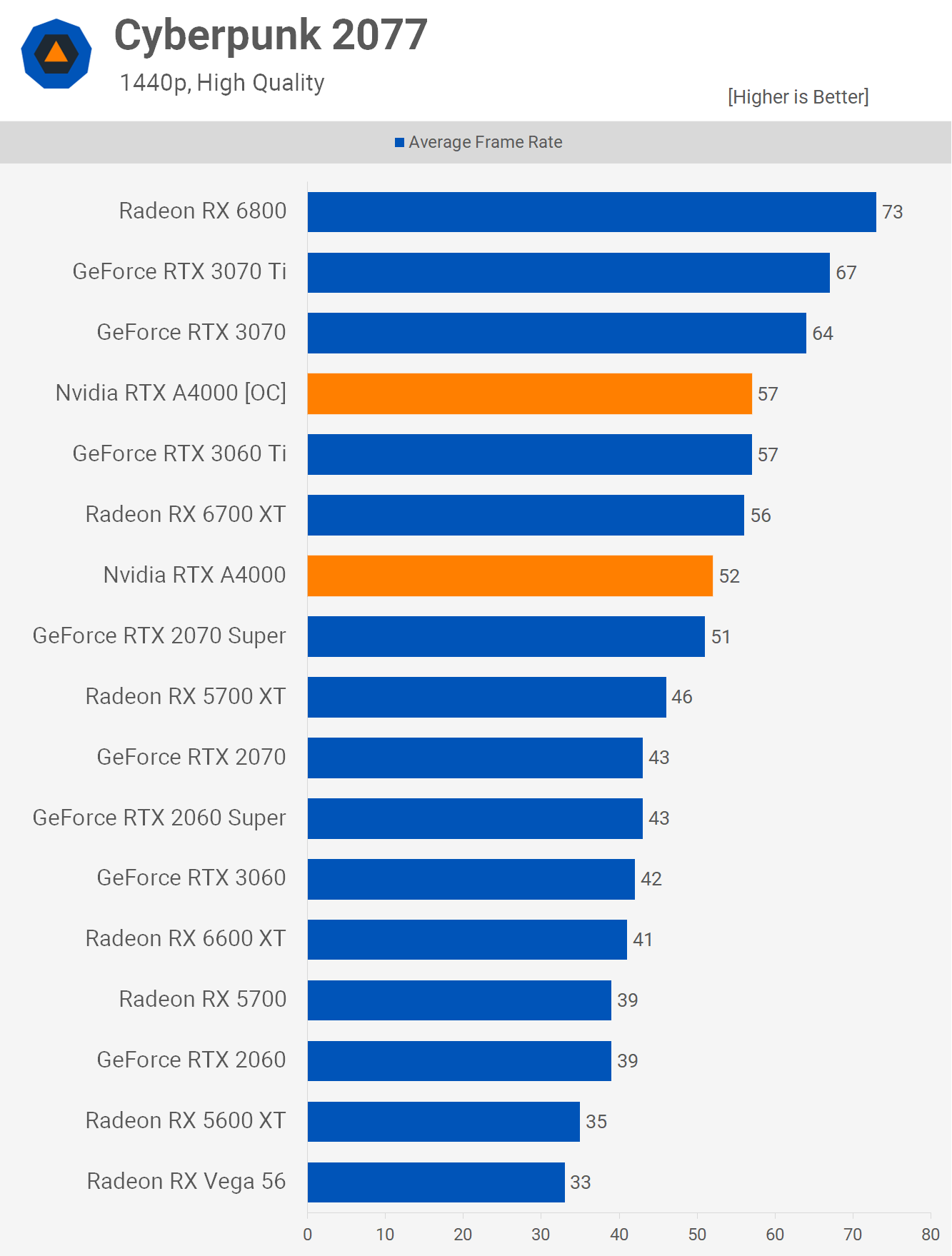

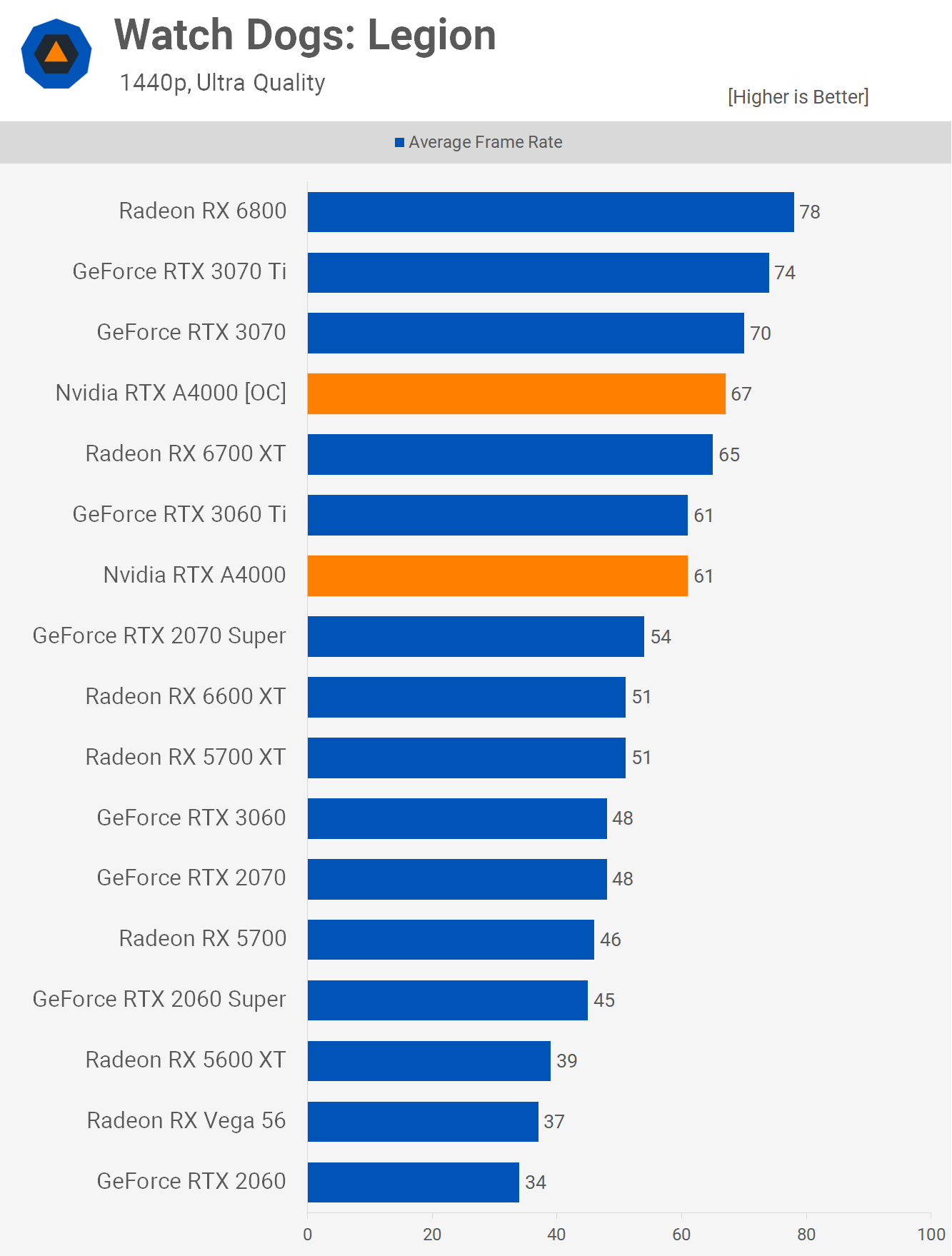

Here's a reference RTX 3060 Ti w/ 38 SM and higher clock speed beating 48 SM lower clocked RTX A4000. Both have similar TFLOPS (~18TF) and exactly the same GDDR6-14000 memory, so 448 GB/s bandwidth.

OC'd A4000 gets an additional +10% on core clock and +14% on memory (512GB/s). A4000 has the same Ampere architecture and in terms of its silicon and size, it's basically a 3070 Ti (48 SM) paired with 16GB VRAM. You're essentially seeing an RTX 3070 Ti silicon getting beat by GPUs (3060 Ti, 3070) with fewer SMs running at higher clk speeds.

52fps vs 57fps. One is much closer to 60fps than the other. So I'd say that's a "noticeably different" performance.
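As a rough sketch of what those numbers mean per frame (plain arithmetic on the figures quoted in this thread, nothing measured here), the helper names below are made up for illustration:

```python
def frame_time_ms(fps: float) -> float:
    """Average time spent on one frame, in milliseconds."""
    return 1000.0 / fps

def percent_faster(fps_a: float, fps_b: float) -> float:
    """How much higher fps_a is than fps_b, in percent."""
    return (fps_a - fps_b) / fps_b * 100.0

# Figures quoted in the thread: 57 vs 52 fps, and the 45 vs 43 fps example.
for fast, slow in [(57, 52), (45, 43)]:
    print(f"{fast} vs {slow} fps: {percent_faster(fast, slow):.1f}% faster, "
          f"{frame_time_ms(slow):.2f} ms vs {frame_time_ms(fast):.2f} ms per frame")

# Frame budget for a 60fps target, for comparison.
print(f"60 fps budget: {frame_time_ms(60):.2f} ms")
```

Whether a gap of that size counts as "noticeable" is still a judgment call; the sketch only puts the two examples from the thread on the same scale.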

Of course, not every game sees the same advantage as it depends from game to game, engine to engine like we've been seeing with XSX and PS5. Here both stock 3060 Ti and A4000 are like-for-like as their TFLOPS would suggest:

There are 3060 Tis with even bigger coolers hitting even higher clock speeds like MSI's 3 slot Gaming X Trio which can match and sometimes even exceed RTX 3070 Founders Edition. IIRC, Werewolfgrandma probably has the Gaming X Trio model, correct me if I'm wrong. His 3060 Ti pretty much matches my 3070 FE at stock in Death Stranding.

So as you can see it's not speculation, you just choose to ignore facts.

Lysandros

Member

And frankly with Sony swinging around cross gen and multiplatform PC development they can no longer be depended on to push the envelope of their own hardware.

This is what I dislike the most about the current situation as a console hardware enthusiast. It will certainly impact the quest to reach the full potential of the machine to 'some' degree, no way around it.

Werewolfgrandma

Banned

Yeah, it's the Gaming X Trio. Big OC, and at around 50% fan it doesn't go over 55C. Don't forget it beat the stock 3070 in Death Stranding and actually RE8 as well.

Yeah, for clocks vs CUs on PC some games go higher, others lower, but the average was around 6% better for higher clocks.

I still think, regardless of any of the vs stuff for this game, they should have put the SX at 1800p or something to lock that FPS.

Md Ray

Member

Both consoles have 3 modes. 2 of which have issues and 1 of them is indeed locked 60fps on SX as they've dropped RT rather than dropping resolution.

Werewolfgrandma

Banned

I know. For the RT mode to really be playable in this game specifically (lots of games don't benefit from locked fps like this one does), they should have prioritized fps.

Md Ray

Member

Is the resolution in RT mode static? I thought it was dynamic with 1296p being the lowest.

Werewolfgrandma

Banned

I thought that was the 120fps mode? Maybe I'm not remembering correctly?

If it is, drop the lower bound then.

I played this for about an hour at 4k over 60 and it was fun. Played about 15 minutes at 1440p 170fps and it was sooooo much better. Super twitch games need solid fps

Lysandros

Member

Furthermore, XSX's CUs have to struggle a bit more for internal bandwidth/cache amount compared to those examples due to its architecture (52 CUs/2 SE). Thus I think the gain from the higher frequency with 36 CUs/2 SE will be greater in PS5's case comparatively.

Loxus

Member

Can you show me two RDNA 2 GPUs with the same teraflops with at least a 250MHz difference in clocks with benchmarks?

Example:

Reason why XBSX is questionable, is because it's clocked vastly lower than PS5. A 408 MHz difference.

That's why we get games performing better on one console and vice versa.

PS5 has faster rasterization performance and a higher pixel fill-rate, which is, my guess, one of the reasons why it runs 8k in The Touryst, a not so computationally heavy game.
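To put rough numbers on the clock vs teraflops trade-off, here is a hedged sketch. The CU counts and clocks are the publicly quoted console specs (PS5's clock is a peak "up to" figure, taken here as 2233 MHz so the gap matches the 408 MHz above). The 64 ROPs per console and the one-pixel-per-ROP-per-clock fill-rate model are assumptions, not something stated in this thread.

```python
# Publicly quoted specs; the ROP counts are an assumption for illustration.
PS5 = {"cus": 36, "clock_mhz": 2233, "rops": 64}
XSX = {"cus": 52, "clock_mhz": 1825, "rops": 64}

def peak_tflops(gpu: dict) -> float:
    """Peak FP32 TFLOPS: CUs * 64 lanes * 2 ops (FMA) * clock."""
    return gpu["cus"] * 64 * 2 * gpu["clock_mhz"] / 1e6

def pixel_fill_gpix(gpu: dict) -> float:
    """Theoretical pixel fill-rate in Gpixels/s, assuming 1 pixel per ROP per clock."""
    return gpu["rops"] * gpu["clock_mhz"] / 1000.0

clock_gap_mhz = PS5["clock_mhz"] - XSX["clock_mhz"]

for name, gpu in (("PS5", PS5), ("XSX", XSX)):
    print(f"{name}: {peak_tflops(gpu):.2f} TF, {pixel_fill_gpix(gpu):.1f} Gpix/s")
print(f"clock gap: {clock_gap_mhz} MHz")
```

Under those assumptions the XSX leads on the ALU figure while the PS5 leads on anything that scales purely with clock. These are theoretical peaks, so they don't say how any particular game will actually run.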

Bernd Lauert

Banned

> False.
>
> Here's a reference RTX 3060 Ti w/ 38 SM and higher clock speed beating 48 SM lower clocked RTX A4000. Both have similar TFLOPS (~18TF) and exactly the same GDDR6-14000 memory, so 448 GB/s bandwidth.
>
> OC'd A4000 gets an additional +10% on core clock and +14% on memory (512GB/s). A4000 has the same Ampere architecture and in terms of its silicon and size, it's basically a 3070 Ti (48 SM) paired with 16GB VRAM. You're essentially seeing an RTX 3070 Ti silicon getting beat by GPUs (3060 Ti, 3070) with fewer SMs running at higher clk speeds.
>
> 52fps vs 57fps. One is much closer to 60fps than the other. So I'd say that's a "noticeably different" performance.
>
> Of course, not every game sees the same advantage as it depends from game to game, engine to engine like we've been seeing with XSX and PS5. Here both stock 3060 Ti and A4000 are like-for-like as their TFLOPS would suggest:
>
> There are 3060 Tis with even bigger coolers hitting even higher clock speeds like MSI's 3 slot Gaming X Trio which can match and sometimes even exceed RTX 3070 Founders Edition. IIRC, Werewolfgrandma probably has the Gaming X Trio model, correct me if I'm wrong. His 3060 Ti pretty much matches my 3070 FE at stock in Death Stranding.
>
> So as you can see it's not speculation, you just choose to ignore facts.

I assume ECC wasn't turned off for the A4000 benchmarks, which means it could have a negative impact on performance. Not exactly a perfect comparison.

Md Ray

Member

> I assume ECC wasn't turned off for the A4000 benchmarks, which means it could have a negative impact on performance. Not exactly a perfect comparison.

Can you show me the perf impact of GDDR6 ECC on vs off in gaming?

Zathalus

Member

> Can you show me two RDNA 2 GPUs with the same teraflops with at least a 250MHz difference in clocks with benchmarks?
>
> Example:
>
> The reason the XSX is questionable is that it's clocked vastly lower than the PS5: a 408 MHz difference.
>
> That's why we get games performing better on one console and vice versa.
>
> PS5 has faster rasterization performance and a higher pixel fill-rate, which is my guess for one of the reasons why it runs The Touryst at 8K, a game that isn't computationally heavy.

The 6600XT vs the 5700XT: similar TFLOP numbers (slight edge to the 6600XT), with the 6600XT having a vastly higher average clock (about 600 MHz or so), and yet they only have a few % performance difference on average despite the 6600XT having a massive rasterisation and pixel fill-rate advantage.

Werewolfgrandma

Banned

> The 6600XT vs the 5700XT, similar TFLOP numbers (slight edge to the 6600XT), with the 6600XT having a vastly higher average clock (about 600Mhz or so) and yet they only have a few % performance difference on average despite the 6600XT having a massive rasterisation and pixel fill-rate advantage.

1080p comparisons would be better, since the 6600XT might be a bit bandwidth-starved at 1440p, but that shouldn't be an issue 99% of the time at 1080p.

Md Ray

Member

> The 6600XT vs the 5700XT, similar TFLOP numbers (slight edge to the 6600XT), with the 6600XT having a vastly higher average clock (about 600Mhz or so) and yet they only have a few % performance difference on average despite the 6600XT having a massive rasterisation and pixel fill-rate advantage.

Their bandwidth isn't like-for-like.

sircaw

Banned

I think some people in this thread just have to admit that TFLOPS are not everything.

There are going to be games that are going to be better on the ps5 and vice versa on the Xbox.

At the end of the day, stop the bickering and just enjoy the games, no matter where they are coming from or where they are going.

Zathalus

Member

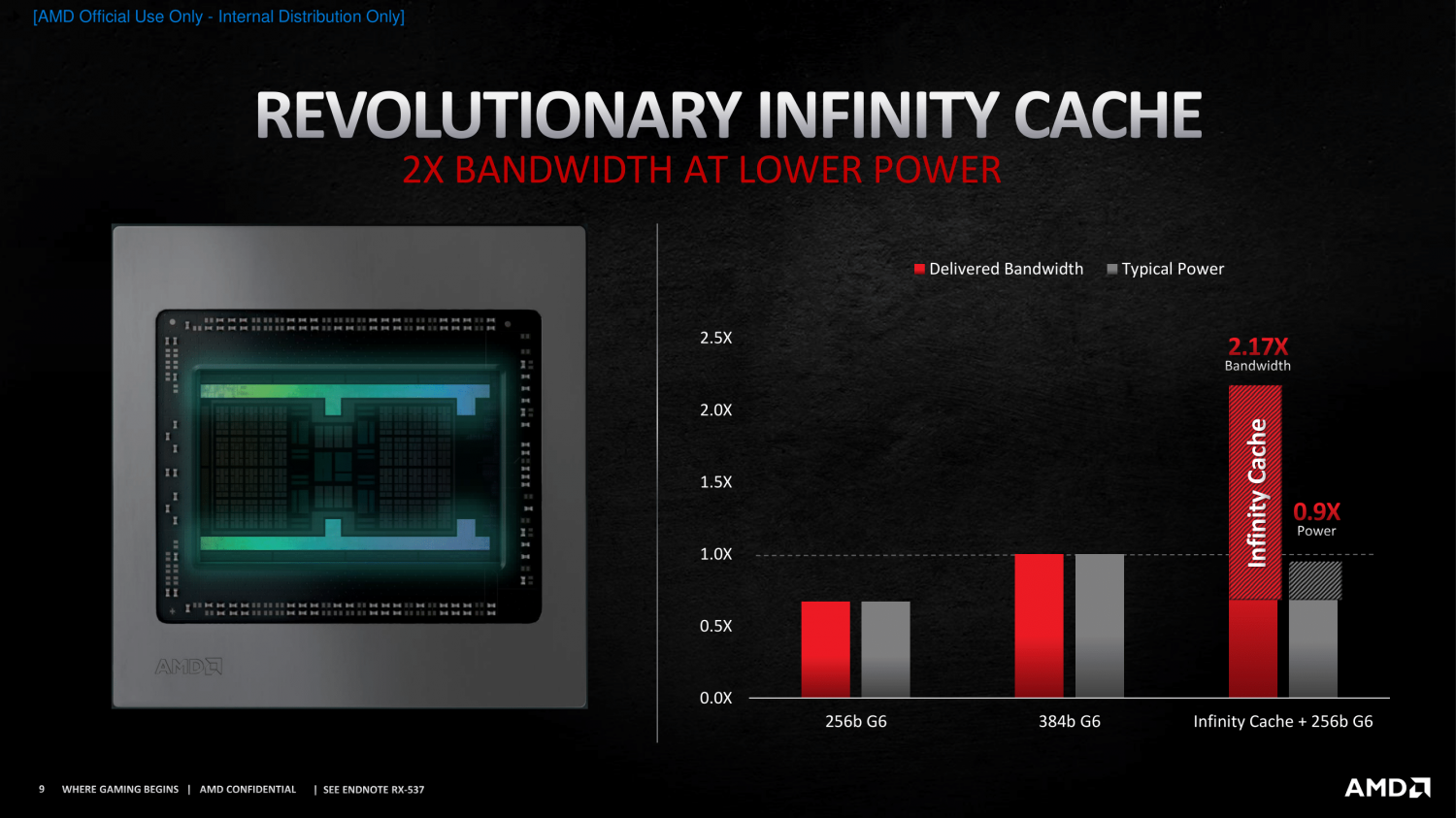

> Their bandwidth isn't like-for-like.

The 6600XT has infinity cache to make up the difference:

But we see the same performance at 1080p, where the bandwidth difference between the two (especially with infinity cache) should have minimal impact:

7% performance difference in favour of the 6600XT. Overall TFLOP difference? 7% in favour of the 6600XT. Clock speed advantage? Over 600 MHz in favour of the 6600XT. Rasterisation and pixel fill-rate? Massive advantage for the 6600XT again.
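For anyone who wants to check the TFLOP and clock numbers, here is a minimal Python sketch. It assumes AMD's published spec-sheet figures (32 CUs at a 2359 MHz game clock for the 6600XT, 40 CUs at a 1755 MHz game clock for the 5700XT) and the usual peak FP32 formula of CUs × 64 lanes × 2 ops per clock. Those inputs are assumptions from spec sheets, not values measured in the benchmarks above, and real sustained clocks will differ.

```python
def peak_tflops(compute_units: int, clock_mhz: float) -> float:
    """Peak FP32 TFLOPS: CUs x 64 shader lanes x 2 ops (FMA) x clock."""
    return compute_units * 64 * 2 * clock_mhz * 1e6 / 1e12


# Assumed spec-sheet values (game clocks), not from this thread.
RX_6600XT = {"compute_units": 32, "clock_mhz": 2359}
RX_5700XT = {"compute_units": 40, "clock_mhz": 1755}

tf_6600xt = peak_tflops(**RX_6600XT)
tf_5700xt = peak_tflops(**RX_5700XT)

tflop_edge_pct = (tf_6600xt / tf_5700xt - 1) * 100
clock_edge_mhz = RX_6600XT["clock_mhz"] - RX_5700XT["clock_mhz"]

print(f"6600XT: {tf_6600xt:.2f} TF, 5700XT: {tf_5700xt:.2f} TF")
print(f"TFLOP edge: {tflop_edge_pct:.1f}%, clock edge: {clock_edge_mhz} MHz")
```

Under these assumptions the TFLOP edge lands between 7% and 8%, in line with the 7% figure above, while the clock edge is just over 600 MHz.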

Bernd Lauert

Banned

> Can you show me the perf impact of GDDR6 ECC on vs off in gaming?

No, that's what a benchmark would've been great for.

Md Ray

Member

> No, that's what a benchmark would've been great for.

You said it wasn't a perfect comparison and that ECC "could have negative imp on perf". I'm asking on what basis exactly it could have a negative perf impact in gaming. Do you have any prior GDDR5/6 ECC vs non-ECC benchmark data?

Or is it just feelings/assumptions?

Last edited:

PS5 has a 22% advantage in geometry, color ROPS fillrate & internal bandwidth, which matches up with the roughly 22% advantage it has in the 4k 60fps RT mode.

50fps + 22% = 61fps.

PS5's depth ROPS advantage is like 2X over the Xbox Series X, which could explain it having up to a 30% advantage at times in 120fps mode in this game, or close to a 2X advantage in The Touryst.

Seems that when pushed to extremes like 8k or 4k 60fps with RT, the Xbox Series X gets bottlenecked by ROPS or internal bandwidth.
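The 22% and 61fps figures can be reproduced with a few lines of Python. This is only a sketch: the 50fps starting point comes from the post above, while the clocks (up to 2233 MHz for PS5, 1825 MHz for Series X) are assumed public specs, and the projection assumes frame rate scales linearly with GPU clock, which holds only if the game is limited by something that runs at clock rate.

```python
# Assumed public GPU clocks (not stated in this post).
PS5_CLOCK_MHZ = 2233
XSX_CLOCK_MHZ = 1825

# Relative clock advantage of PS5 over Series X.
clock_advantage = PS5_CLOCK_MHZ / XSX_CLOCK_MHZ - 1

# Frame rate quoted in the post for Series X in the 4k 60fps RT mode.
xsx_fps = 50

# Hypothetical projection: scale frame rate linearly with clock.
projected_ps5_fps = xsx_fps * (1 + clock_advantage)

print(f"Clock advantage: {clock_advantage * 100:.0f}%")
print(f"Projected PS5 frame rate: {projected_ps5_fps:.0f} fps")
```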
