RoadHazard


No, they will release a specific PS5 version for this game.

Yes, which will be exactly the same thing as the patched PS4 version.


I think the choppiness is because of the motion blur. It's an old implementation and not really useful. It may be subject to change, though.

As for ray tracing, it will make the picture look "right" and easy on the eye, and it won't disturb some people (like me). I personally hate bad shadows, disappearing reflections, pop-in, etc.

What game doesn't drop during chaos? The framerate is actually very stable here 99.99% of the time, and the RT shadows are really nice to have at 60fps. I just think the PS5 game at launch was a very good deal at $10 (and I don't even like the game that much; I've only played a few hours).

At less than 1440p, with no texture enhancements, dropping to the low 40s during chaos, low anisotropic filtering, and using FSR 1.0 with blur trails.


It's a native PS5 game.

Conflicted on where to play. I have it on both PC and PS5. Gonna have to test them both out.

Oh, then yeah, I misunderstood what you were saying lol.

Yes? And you will get that version whether you buy the new PS5 version or upgrade your PS4 version for free.


I don't think you quite know how these PS4/PS5 versions work. Yes, we will get the PS5 version for free, but that doesn't mean the PS4 version will receive a 60fps patch when played on PS5. The PS4 version will be patched with the Netflix stuff, but that might be it. Also, a native PS5 version of any game gets its own set of trophies, which is why I'm hoping the PS4 version plays at 60fps on PS5 as well, so I can continue on it.

You underestimate the number of fans of PowerPoint and input lag.

Everyone will switch the graphics option to quality, play for 5 minutes (at best), say "OK... this looks cool," then swap to performance mode and finish the game in that mode.

RT at 30fps is laughable. Yeah, I'm not gonna play it again.

RT is expensive. Modern GPUs are really expensive and power-hungry. An RTX 4090 is $1,599 and draws up to 450 watts. The PS5/XSX are $400-500 devices that top out at about 200 watts. So even if you inflate the price and wattage for the next generation in 2028, they probably aren't going to go above $600, and I doubt they'd come anywhere near 300 watts. They'll punch above their weight as dedicated devices and will have new tech with better efficiency, but it's unrealistic to think they'll bridge that gap in price/power. The current consoles are still chasing the 4K dream.

Meh. Fuck playing at 30fps.

I also think 40fps modes should come as standard now.

Why bother with a console when you have a PC?

PC doesn't get Sony's day-one releases. I'll always keep a PS system until that day comes, and even then I'll probably still have one.

I know. I was just generalizing about what RT can bring to the picture overall. As for pop-in, it's part of the "graphics will not disturb you" approach with remasters.

This will have neither RT shadows (just RTGI and RTAO) nor RT reflections (they are still screen-space, just higher quality in the 30fps mode) on console, so you'll still have all those issues. And RT has nothing to do with pop-in.


Because just adjusting contrast you can have realistic light casting and bouncing in movement?

In Witcher 3, all you need for full ray tracing is a contrast adjustment!

See below.

I mean to play this particular game on

Yep. Also simplicity and framerate consistency.

Trophies and DualSense support are certainly valid reasons, IMO.

Why is it only Sony developers who are using unlocked frame rates in 4K modes with VRR? Why aren't most other developers doing this by now?

Yeah. Skyrim is the only case I've seen where the PS4 version was updated to play at 60fps on PS5 while a native PS5 version was also released. I'll keep my hopes up.

Yeah, I guess "patched" wasn't the right word. Your original post was asking if the PS4 version would run at 60fps on PS5, and I thought you hadn't realized that if you have the PS4 version, you will get the full PS5 upgrade for free. I missed the part about trophies, and yeah, I guess it's true that the upgraded version will have its own set since it's now a PS5 game.

But then no, the PS4 version will definitely not be updated to run at 60. I don't think even the Pro could handle that (and base PS4 obviously couldn't). I guess they could in theory include an unlocked framerate mode in the PS4 version specifically for your use case, but it seems unlikely to happen.

Same here. When I first got a 4K TV, I thought fidelity or bust. But as time has gone on with the new consoles, I have finally seen the performance light.

As it stands, the performance mode seems like the easy choice for me on console.


A couple of wrong things.

For one, the $1600 price tag is almost irrelevant when it comes to consoles. Console makers pay nowhere near that price for their parts. NVIDIA's profit margins, and even AMD's, are pretty insane. The estimated cost to produce the 4090 is less than half its selling price. There's a reason the PS5 in its entirety costs $500 and Sony still turns a slight profit, whereas an equivalent GPU (the 6650 XT) came out with an MSRP of $399, the same price as the digital PS5. And that's ignoring the fact that the PS5 has an entire APU, an optical drive, a cooling solution, an integrated PSU, an SSD, etc.

Two, the wattage question is a bit more nuanced than this. The 4090 at a 100% power limit does draw about 450W, but that's chiefly because NVIDIA has cranked it up far past its point of maximum efficiency. It's actually, by a wide margin, the most power-efficient GPU currently on the market, and in gaming scenarios at 4K it draws slightly less power than a 3090 Ti despite being over 50% faster (and 70%+ faster in heavy RT workloads).

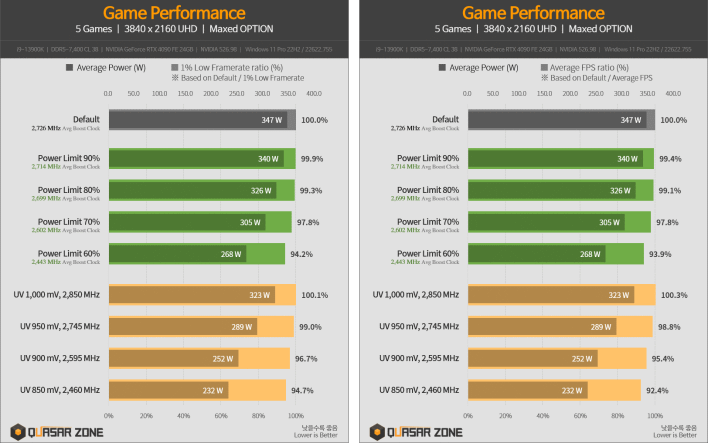

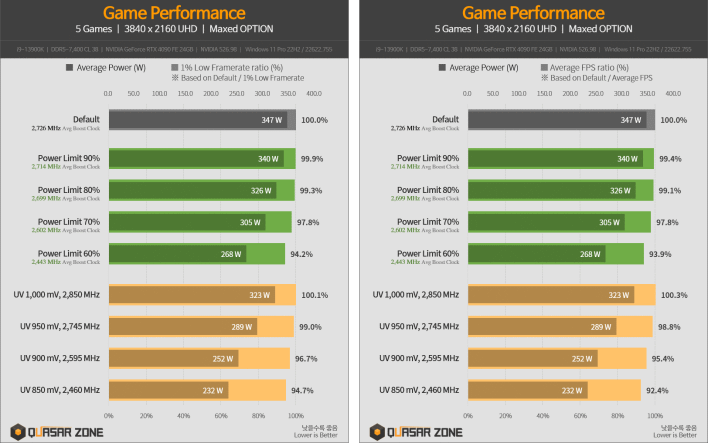

Here's how power efficient it actually is:

With proper undervolting and power management, it performs at 94.7% of its full potential while drawing a meager 232W. This stomps all over everything else, including the 4080, which is quite a bit faster than previous-gen flagships. Despite supposedly being an out-of-control, power-hungry beast, my 4090 consumes less power than my 2080 Ti at 3440x1440/120Hz most of the time.
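For anyone who wants to check the efficiency claim above, here is a quick back-of-the-envelope sketch in Python. It only plugs in the figures quoted in this post (450W at stock, 94.7% of performance at 232W). The function names are made up for illustration, and it assumes performance scales exactly with the quoted percentage while ignoring the rest of the system.

```python
# Back-of-the-envelope perf-per-watt check using the figures quoted in the post.
# These numbers come from the post itself, not from independent measurement.

STOCK_POWER_W = 450.0        # RTX 4090 at a 100% power limit
TUNED_POWER_W = 232.0        # undervolted / power-limited
TUNED_PERF_FRACTION = 0.947  # share of stock performance retained when tuned


def perf_per_watt_ratio(stock_w: float, tuned_w: float, tuned_perf: float) -> float:
    """Return tuned perf/W divided by stock perf/W (stock performance = 1.0)."""
    stock_ppw = 1.0 / stock_w
    tuned_ppw = tuned_perf / tuned_w
    return tuned_ppw / stock_ppw


def power_saved_fraction(stock_w: float, tuned_w: float) -> float:
    """Fraction of stock board power no longer drawn after tuning."""
    return 1.0 - tuned_w / stock_w


ratio = perf_per_watt_ratio(STOCK_POWER_W, TUNED_POWER_W, TUNED_PERF_FRACTION)
saved = power_saved_fraction(STOCK_POWER_W, TUNED_POWER_W)
print(f"perf/W vs stock: {ratio:.2f}x")
print(f"power saved: {saved:.1%}, performance lost: {1 - TUNED_PERF_FRACTION:.1%}")
```

With these numbers, the tuned card works out to roughly 1.8x the performance per watt of the stock configuration, giving up about 5% of performance for roughly 48% less power.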

When the consoles came out in 2020, the flagship of 6 years prior was the 980 Ti (actually the 980, because it came out in September 2014 whereas the Ti came out in June 2015, but they're on the same process node with the same architecture). 6 years from now is 3 generations' worth of graphics cards at the current pace, and there's no way something like an RTX 7060 will be slower than a 4090, just like a 3060 isn't slower than a 980 Ti.

I don't see any noticeable changes in this video other than a contrast shift.

Because just adjusting contrast you can have realistic light casting and bouncing in movement?

Why would the PC version have more glitches and performance issues?

Graphical glitches, performance issues

Of course it is more nuanced. It's a few-sentence description meant as a simple explanation, so many factors are left out, like a PC needing more than just a GPU, which skews the cost/power calculation further even with components not at 100% draw. And even at your 94.7% efficiency number, your GPU alone runs at a higher wattage than either console. So no, nothing was really wrong.

Everything was wrong, though. You said that the 4090 draws 450W and that consoles draw 200W or less. You can get something reasonably close to the 450W 4090 at 232W, so something at 200W in 2028 outclassing the full 450W 4090 certainly isn't impossible. In fact, it would be unprecedented if it didn't easily beat the 4090. That 450W argument ignores some serious context: at 326W, the 4090 performs essentially the same.

Second, precedent dictates that an x60-class card 3 generations or 6 years later easily beats the Titan from that far back. Hell, even the shitty 3050 comes reasonably close to the 980 Ti. It might even beat it, but I can't recall off the top of my head.

Consoles releasing in 2028 drawing 200W should easily be able to beat a 4090 unless there's some major stagnation in the evolution of semiconductor technology in the coming years. The 4090 looks like some unbeatable monster now but 6 years in that industry is a fucking century in the real world.

I said it "draws up to". That's just for the GPU, whereas these consoles draw up to 200W, and that's for the entire system.

But please continue to argue in bad faith.

That it draws up to 450W is completely irrelevant because it's far past peak efficiency. 232W is just to show how power efficient the node is at the moment. Imagine in 6 years. Even the entire system at 200W could beat a 4090 six years from now.

The current consoles are comparable in raster to a 1080 Ti, the Pascal flagship that came out over 4 years before they entered the market. The consoles at 200W trade blows with the 250W flagship from 2 generations back while actually having hardware-accelerated ray tracing and more modern tech, and we're supposed to believe that in fucking 6 years they won't surpass the 4090 because it's $1600 and gains 1% in performance by adding 125W? Sure thing.

Not doing that. You came up with a completely flawed argument that was easily debunked and you get mad at me for correcting you.


Correct. As a recent convert to PC, I find there is an overall lack of the refinement that you naturally get with console.

Graphical glitches, performance issues, micro-stutters due to shader compilation issues, etc.

I think the notion that PC is inherently the best-performing version, simply because it has the most powerful CPUs and GPUs available, has been fading away of late.

PC has its pluses for sure. But let's be real: shader compilation issues are fucking game-breaking. So is constant crashing. Some of this is a symptom of developing for a platform with thousands of hardware combos. But some of it is also just poor development choices.

Plus, framerate and input lag are still far superior on PC (not even counting the mods). The only problem would be real-time shader compilation, but it's a CDPR game, so they shouldn't suck at this.


Like more input lag, lower resolution/blurry IQ, lower framerate, lack of RT effects?

There is an overall lack of refinement that you naturally get with console.


Not necessarily that there will be one, but if there is a new console in 2028 that's on RDNA6 or whatever AMD decides to call it, yes, its GPU will outperform a 4090. If it doesn't, it'd be a huge disappointment.

So you think in 6 years there will be a complete system or console with a power draw of 200W that will have a GPU equal to a 4090 or better?

Then this should make it clear: a 2028 console should have a GPU that outperforms the 4090. 6 years is ancient history.

Who is mad? I'm not sure you debunked anything, because you seem to be laser-focused on details when I'm talking in generalities (a forest-for-the-trees situation), nor do I think our positions are all that different, even if we disagree slightly on the outcomes. I'm happy to be wrong about industry trends, but that's just how I see it.

The average user would not notice the input lag and everything else listed. I swap between PS5 and my PC for Warzone and don't notice any difference on my LG C1 besides the downgrade in quality.

No, they're not. At least not here, apart from some insignificant "hiccups" here and there (I didn't play CP before the patch, but it has been patched). This is a symptom of not having shader pre-compilation like several games do.

For PS5, they confirmed this in their stream. They specifically said "PS5", though, so I wouldn't bet on PC getting it. CP2077 also never got it patched in, and modders had to make (incredible!) adaptive trigger support for the PC version.

Any DualSense support?

Adaptive triggers?

If you even mention anything other than inserting a disc into a console, my brother glitches. He's a 40-year-old man who loves fighters, first-person shooters, and Metal Gear Solid, and doesn't give one damn about framerate. My best friend is the same way. He has a 55-inch TV in the living room but is playing Darksiders for the first time on his PS4 with a cheap 720p 24-inch Insignia TV in his kitchen so his kids can watch Disney. And he's having the time of his life, too. This may be anecdotal, but the average consumer does not care at all.

This! It's unbelievable that developers don't have the foresight to include a "balanced mode" option in every game that gets released. That way people can decide for themselves whether they want to put up with an inconsistent framerate to get the best possible graphics at a higher fps than a capped 30. This also future-proofs every game for the inevitable PS5 Pro and PS6, which will be backwards compatible.