
250W/100° on 10nm. Had it been 14nm I'd have expected it, but at 10nm? What the shit, Intel?

Apparently Intel's next generation products (Lava Lake) will employ an innovative molten basalt based cooling system.


Intel Core i9-12900K (Alder Lake) Versus Ryzen 9 5900X (Zen 3)

Several of the leaked benchmarks we've seen so far indicate that Alder Lake will give even AMD's top Zen 3 CPU (Ryzen 9 5950X) a run for its money, depending on the workload. SANDRA tells a different story. Looking at the same graph above, here is how the Core i9-12900K compares to AMD's second-best Zen 3 chip, the Ryzen 9 5900X...

These are clear victories by Zen 3 over Alder Lake, half of which come by double-digit percentage gains. The biggest win is by nearly 20 percent (Quad Integer).

- Quad Float: 53.07 (Alder Lake) / 49.47 (Zen 3) = Zen 3 by 7.3 percent

- Double Float: 1,097.06 (Alder Lake) / 1,190 (Zen 3) = Zen 3 by 8.5 percent

- Single Float: 1,958.74 (Alder Lake) / 2,000 (Zen 3) = Zen 3 by 2.1 percent

- Quad Integer: 131.13 (Alder Lake) / 157 (Zen 3) = Zen 3 by 19.7 percent

- Long: 691.90 (Alder Lake) / 805 (Zen 3) = Zen 3 by 16.3 percent

- Integer: 1,691.13 (Alder Lake) / 2,000 (Zen 3) = Zen 3 by 18.3 percent
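For anyone who wants to check the percentages in the list above, here is a minimal Python sketch. It assumes higher is better for every SANDRA score and computes the lead as the larger score divided by the smaller one; the `scores` dictionary and the `lead` helper are names made up for this illustration, and the numbers are copied from the list. Going by the figures as listed, Quad Float comes out in Alder Lake's favor, so either the two numbers or the winner on that line appear to be swapped.

```python
# Scores copied from the list above as (Alder Lake, Zen 3).
# Assumption: higher is better for every test.
scores = {
    "Quad Float": (53.07, 49.47),
    "Double Float": (1097.06, 1190.0),
    "Single Float": (1958.74, 2000.0),
    "Quad Integer": (131.13, 157.0),
    "Long": (691.90, 805.0),
    "Integer": (1691.13, 2000.0),
}


def lead(alder_lake, zen3):
    """Return (winner, lead in percent) for one pair of scores."""
    if alder_lake >= zen3:
        return "Alder Lake", (alder_lake / zen3 - 1) * 100
    return "Zen 3", (zen3 / alder_lake - 1) * 100


results = {test: lead(*pair) for test, pair in scores.items()}

for test, (winner, pct) in results.items():
    print(f"{test}: {winner} by {pct:.1f} percent")
```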

By the way, the Ryzen 9 5900X is a 12-core/24-thread CPU with a 3.7GHz base clock, 4.8GHz max boost clock, and 64MB of L3 cache. It is roughly comparable to the Core i9-12900K in core and thread counts, though obviously they are two very different architectures.

This is an interesting comparison for sure. Like every other leak, we'll have to wait for Alder Lake to arrive before knowing if these results stand up to post-launch testing by reviewers and the general public at large. Fun times are ahead.

Biggest change in W11 is "Intel Thread Director", as if the old Windows Schedulers weren't bad enough.

Works wonders for Alder Lake, see:

But it fucks with other CPUs, ESPECIALLY AMD CPUs, ESPECIALLY crippling one of Ryzen's biggest strengths, the L3. One example:

Read from 898GB/s to 136GB/s (-85%)

Write from 565GB/s to 51GB/s (-91%)

Copy from 701GB/s to 66GB/s (-91%)

Latency from 11.2ns to 32.1ns (+187%)
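The percentages above can be reproduced with a few lines of Python. This is only a sketch: `pct_change` is a helper named for this example, and the before/after values are the ones quoted in the post. A bandwidth drop shows up as a negative change, while latency going up shows up as a positive one, so the latency regression works out to roughly +187%.

```python
def pct_change(before, after):
    """Percentage change from before to after (negative means a drop)."""
    return (after - before) / before * 100


# L3 figures quoted in the post, as (before, after).
l3 = {
    "Read (GB/s)": (898, 136),
    "Write (GB/s)": (565, 51),
    "Copy (GB/s)": (701, 66),
    "Latency (ns)": (11.2, 32.1),
}

changes = {name: pct_change(before, after) for name, (before, after) in l3.items()}

for name, pct in changes.items():
    print(f"{name}: {pct:+.0f}%")
```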

This happens even on Ryzens with just one CCD. This is totally Microsoft's fault, with Intel's blessing (it comes just in time for both Alder Lake and Ryzen 3D!)

What can AMD do? Ask Intel for the source code "to fix" it?

The solution here will be Intel releasing an update to force W11 to not use this Thread Disruptor with other CPUs.

The issue has already been fixed and is rolling out.

Is there an article or MS KB for that? Interested to read but couldn't find anything.

I can't wait to know the truth. I think Intel will kick ass with these new CPUs. If they are barely able to beat Ryzen 3 or even be outclassed by them it will be a huuuuge disappointment.

Windows 11 Gives Alder Lake A Big Boost In Leaked Benchmark But Ryzen Is Still Ahead

Alder Lake stretches its legs in Windows 11 in another leaked benchmark, but gets bested overall by Zen 3. (hothardware.com)

Time to pump the brakes on the hype train?

They will be fine.

Edit: someone in the comments said the E cores are not used in this test at all, don't know if true. If true AMD is in trouble.

Just google search AMD Win 11 Fix and you'll find tons of articles with both MS and AMD knowing of the issue and promising a fix in October.

OK... so here's the thing: with that power draw, what are we looking at to cool it? I don't mean from an "oh, it's fine, just stick a block on it and we're done" angle. I'm talking about how fast the fans will have to go to move that heat off the CPU. Because all I can imagine, unless you're using a custom loop, is effectively a jet engine at that draw.

More power = more heat, a lot more in the more recent gens. Even triple-fan 360mm AIOs had to ramp up last gen at the higher frequencies due to that generation's draw, so what are we going to be looking at now?

At this point we'll have to look at LN2 with these power draws and the talk of the next set of GPUs drawing up to 600W.

Appreciated, and you're right, but none of the links I've seen say it is already fixed and that the solution is already rolling out.

Still not upgrading from my 10900k.

Welp...Intel definitely too greedy

I don't think Alder Lake consuming a lot of power is greediness.

They don't have a product that can compete in power efficiency, so they're clocking their big cores well past their ideal power/performance curves so they can compete in performance. AMD did exactly that with Vega and Polaris (RX4xx/5xx) against Nvidia's Pascal.

That's not a huge problem when dealing with tower desktops IMO, but it doesn't look like Alder Lake will be able to compete very well against AMD's Cezanne (Zen3 + Vega), and most likely Rembrandt (Zen3 + RDNA2), in the laptop market.

According to Intel, the E-Core consumes 80% less power than Skylake at the same performance level, comparing 4C4T to 2C4T.

So they may be able to compete in power efficiency, just not power efficiency and absolute performance.

The LITTLE/e-core is an Atom core. It's fine for light tasks (e.g. office & e-mail, light web browsing, watching youtube, etc.) but once the system loads up a game it'll need to use the big / p-core. That's when the power constraints of a laptop come into play, and where Alder Lake's p-cores will need to use a lot lower clocks than the desktop counterparts.

Well, it's an "Atom" core with 8% better single threaded performance than Skylake (!), that can deliver up to 80% better MT comparing 4C4T w/2C4T. And Skylake-level performance is already adequate for gaming, so I would be curious to see how the versions of Alder Lake with fewer P-Cores do.

Zen 3's APUs (with less L3) seem to use around 3W per core when clocked at 3-3.5GHz, and it seems the Golden Cove p-cores can't reach anywhere near that level of power efficiency.

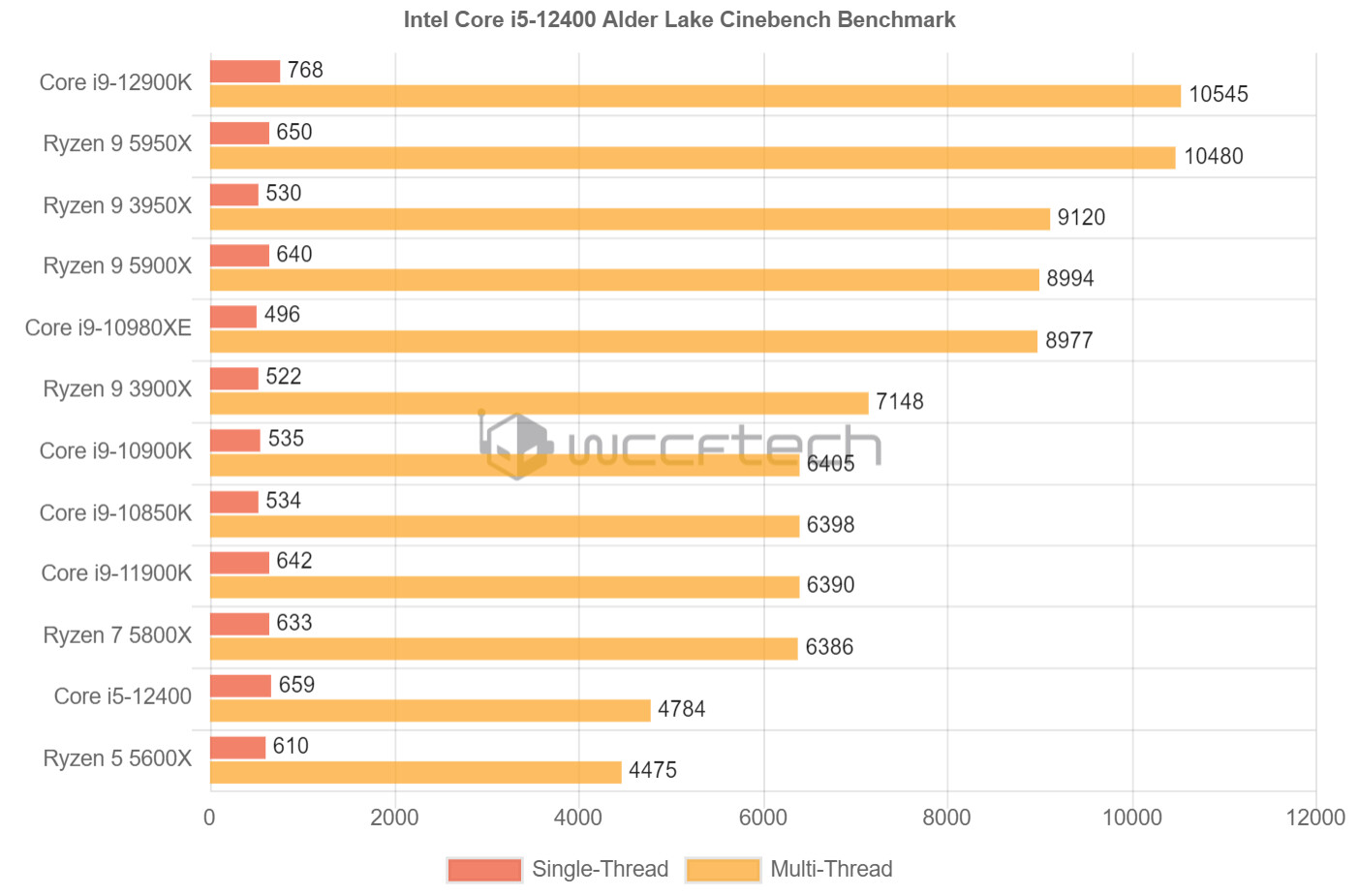

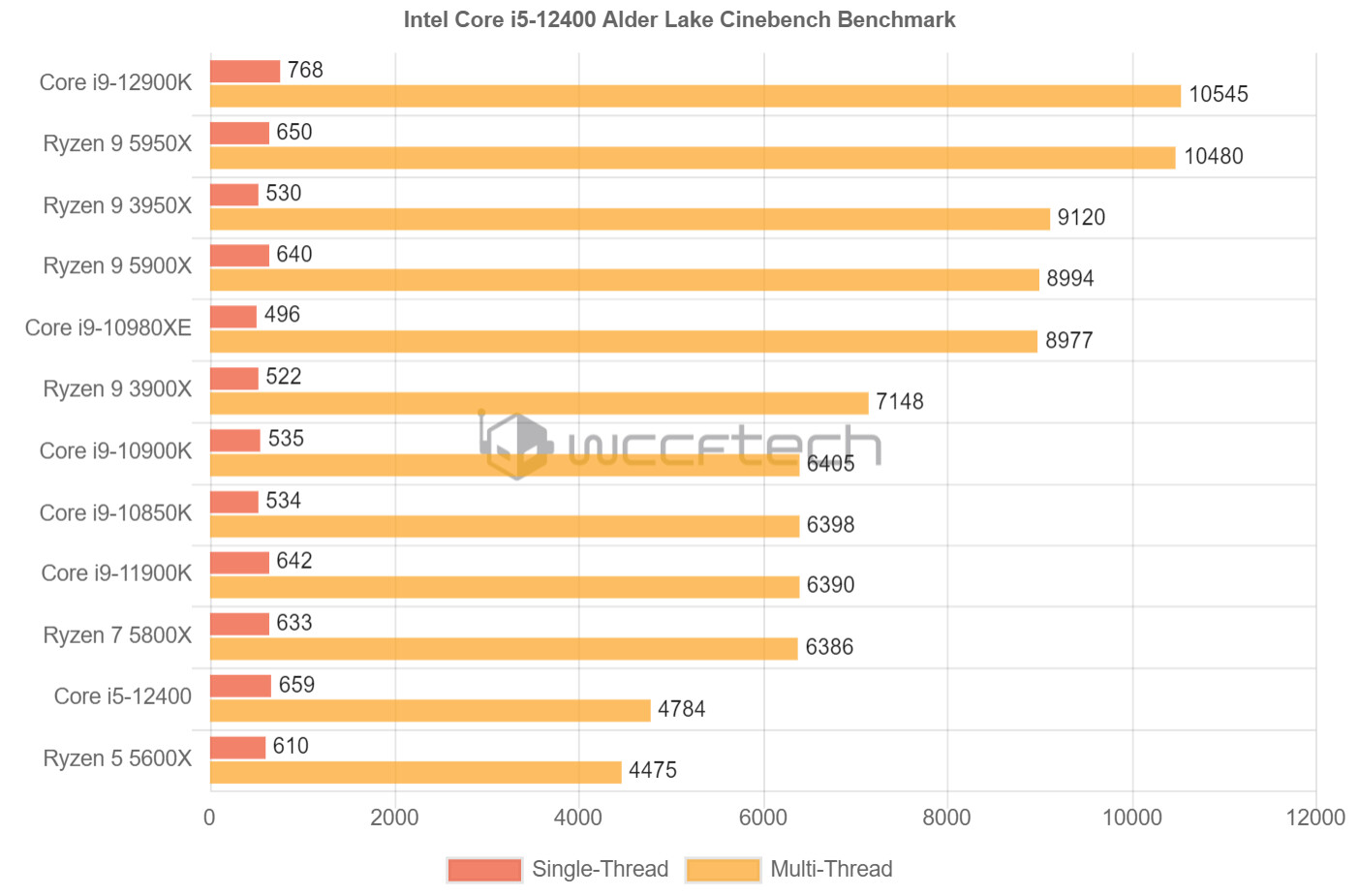

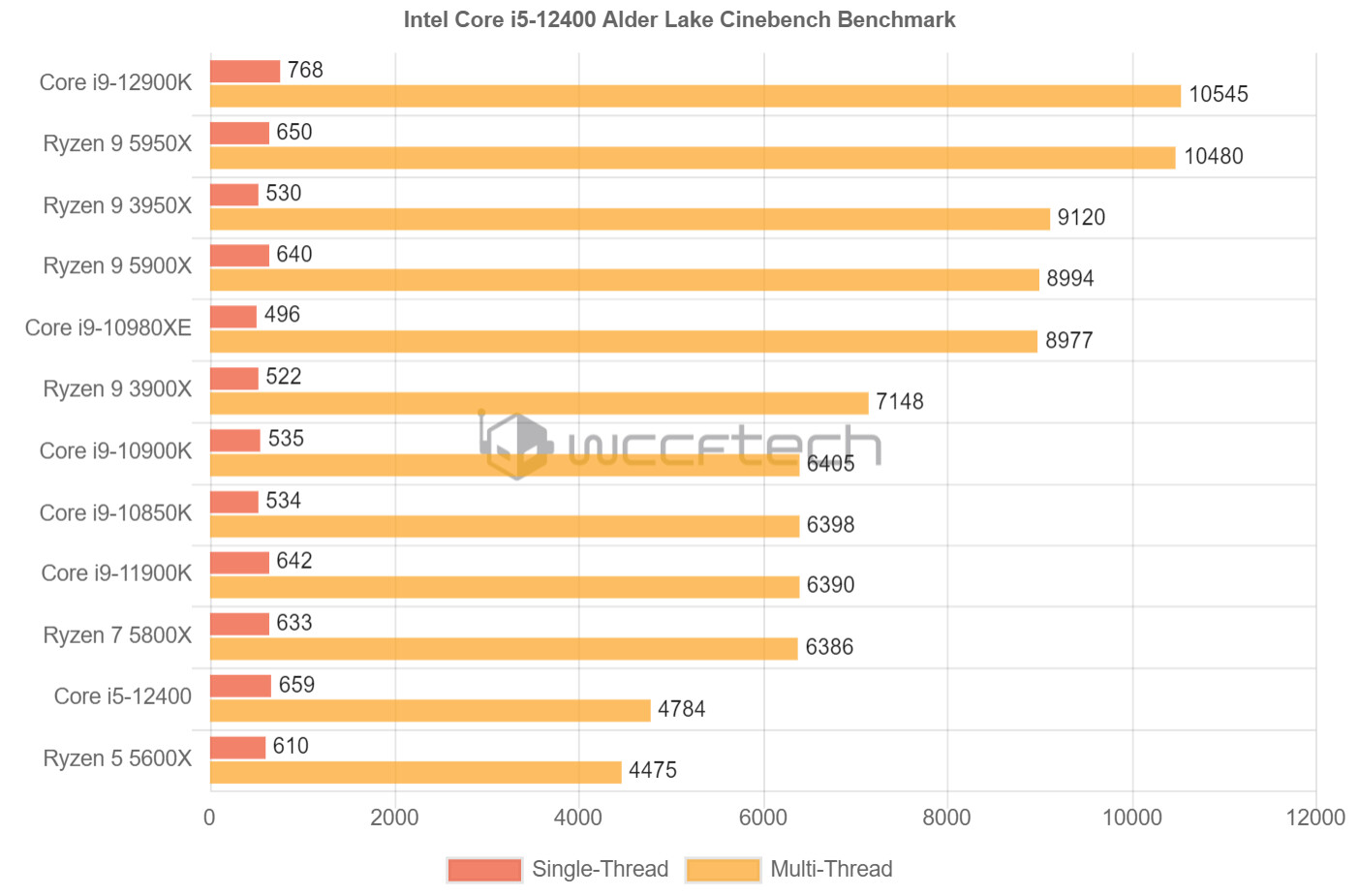

Intel Core i5-12400 Alder Lake Budget Desktop CPU With 6 Cores Faster Than AMD Ryzen 5 5600X In Leaked Benchmarks

The first benchmarks of Intel's Core i5-12400 CPU, which will be positioned in the Alder Lake budget desktop segment, have leaked out. (wccftech.com)

I just hope there's gonna be a K version of the leaked i5 12400 (6+0 configuration), as it'll easily be the best bang for the buck, by a huge margin.

What do K chips bring to the table these days?

Back in the Sandy Bridge days, when you went from 3.3GHz to 5.0GHz, that was worth the price of admission alone.

But today K chips barely boost over the stock chips, and even with the added power/overclock potential the gains are near negligible.

I highly doubt there will be a xx400K.

We haven't had K 400 chips before and haven't needed 400Ks cuz they do nearly everything the 5/600K chips can do at a bargain price.

Intel Core i9-12900K overclocked to 5.2 GHz on all Performance cores reportedly consumes 330W of power - VideoCardz.com

Overclocked Intel Core i9-12900K outperforms Ryzen 9 5950X in CPUZ multi-thread test. Bilibili content creator 热心市民描边怪 published the results of the upcoming Alder Lake Core i9 processor with all ‘big’ cores overclocked to 5.2 GHz. According to the screenshot from the CPU-Z benchmark, the Core... (videocardz.com)

Reportedly 330W with OC, so right in time for winter season.

How much power does this consume in comparison to the 5800X? I'm just about to build a 3070 Ti PC, but the 3070 Ti is already power hungry, so I'm not sure about adding even more power-hungry parts, especially with the cost of electricity going up in the UK.

Yeah, I'm definitely going to go Intel 12th gen, as the chip will come out in the next month, along with a motherboard that supports PCIe 5.0. I can't seem to find any news of these 5.0 motherboards coming out soon, but that's my ideal purchase so my PC is future proof in terms of CPU and motherboard. In 5 years' time hopefully I'd just need a GPU and RAM upgrade.

wait for independent benchmarks before making a decision. current info is based on "leaks", which i suspect intel has done deliberately to hype up their product.

intel has a horrible reputation for manipulating benches to make them look good:

The other day the CEO had said that AMD was already done, that Intel was back.

But yesterday in the financial report he said that Intel expects to achieve "performance per watt parity in 2024 and leadership in 2025".

Maybe he was talking about Apple?



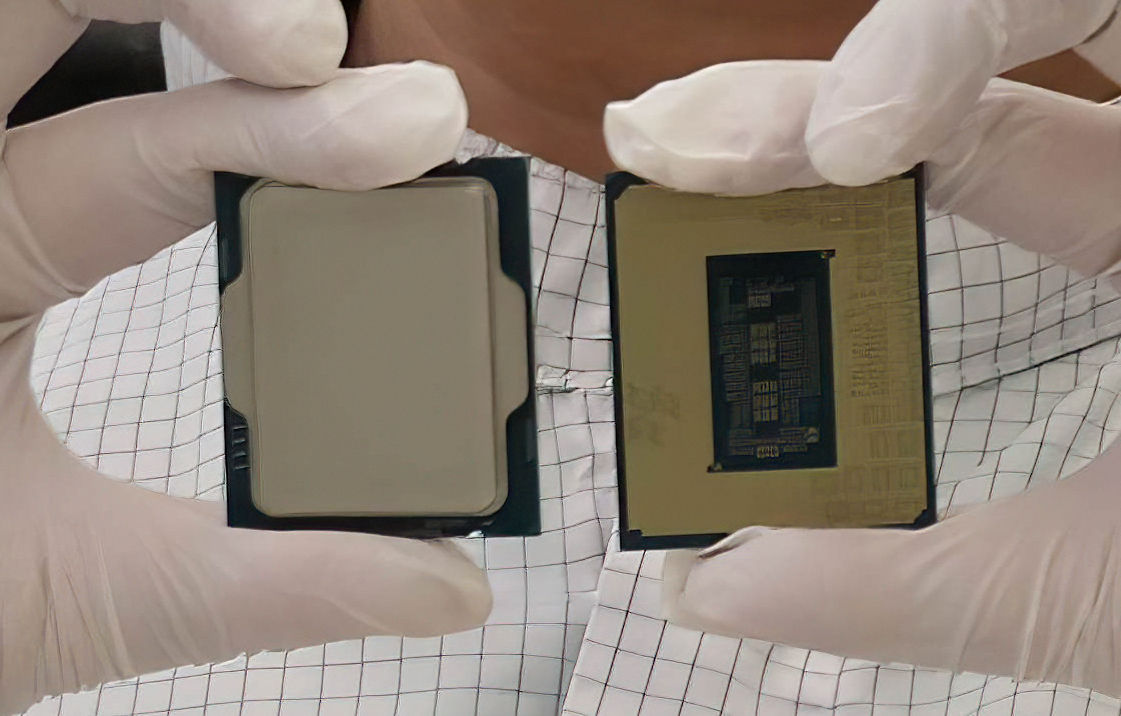

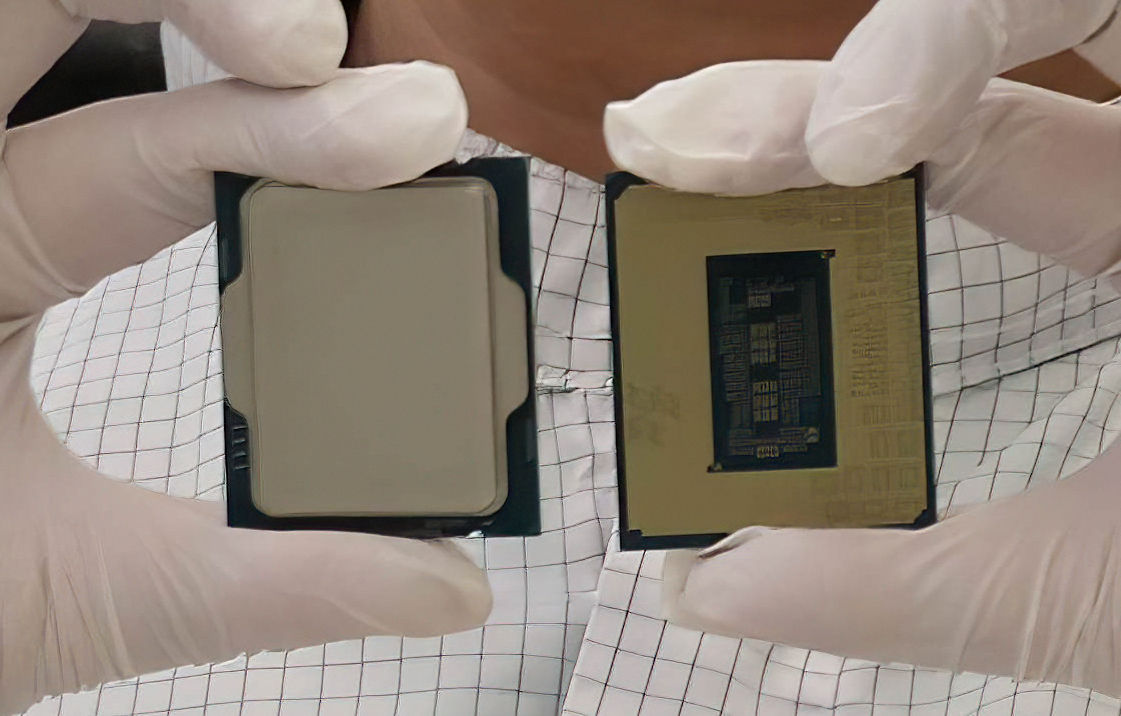

A Reddit poster, spotted by Techspot, managed to purchase two Core i9-12900K processors in retail packaging from an unnamed retailer for $610 per CPU. A similar mix-up happened earlier this year, when retailers started selling Intel’s Rocket Lake CPUs nearly a month before their launch.

On/Off Topic

The 12400 looks like it's gonna be king of the hill again (400 series) for gamers.

Overclocking is dead, memory gains are useless.

Long live budget Intel CPUs:

^The gen on gen gains Intel seems to have made are actually pretty insane.

Here's to hoping the reviews are good.....the leaked prices already have me scratching my head.

Intel, you have this shit in the bag.....just price your chips at Ryzen level or a few dollars above...don't raise the price by an entire tier, else I'll be sticking to the 10th gen till they are legit bottlenecks.....in 2030.

That packaging is sexy as hell. Just told my wife what I want for Christmas.

Intel’s unreleased Core i9-12900K processor goes on sale early

Intel’s next flagship CPU is already on sale. (www.theverge.com)

You want to get a 12900K....it fights with the 5950 and 5900 and the price will show that.

The competish for the 5800X is i7s.

Intel generally eats more power than AMD at full tilt.

But for gaming they will be pretty close to each other.

The CPU is never truly at full tilt while gaming (or at least for now there aren't really games that truly stress a CPU like AIDA, Prime or other "stress" tests which benchmarkers use to get absolute peaks).

My advice: wait for the 12600 or, better yet, the 12400. That still leaves you with an upgrade path if that CPU is somehow a bottleneck for you.



Thanks, this is the kind of comparison I’m really interested in. Most sites just measure the “all cores full load” peak power consumption + idle consumption but that doesn’t give you a realistic idea of the power/heat you will be dealing with while gaming.

I’m especially interested in what this will look like with efficiency cores in the mix. Wondering if we might even see gaming scenarios where Alder Lake average wattage is lower than Zen 3 even as peak wattage is higher.

Looks like Alder Lake is now going to be power efficient
