To avoid waiting for such bright screens, I suggest this BFI method.

Strobing at 10,000 nits for only 10% of the time still only averages 1,000 nits to the human eye.

I guess FALD TVs already use the same method in normal cases; not sure about BFI.
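The numbers in the quoted line follow from the Talbot-Plateau law: above the flicker-fusion rate, perceived brightness roughly equals the time-averaged luminance. Here is a minimal Python sketch of that arithmetic. It assumes an idealized square light pulse, and real strobed backlights and OLED pixels have finite rise and fall times, so treat the result as a first-order estimate. The function names are illustrative and do not come from any display API.

```python
def average_luminance(peak_nits: float, duty_cycle: float) -> float:
    """Time-averaged luminance of a strobed display.

    Idealized model (an assumption): the screen emits peak_nits for
    duty_cycle of every refresh cycle and is fully black the rest of the time.
    """
    if not 0.0 < duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be in (0, 1]")
    return peak_nits * duty_cycle


def required_peak_nits(target_average_nits: float, duty_cycle: float) -> float:
    """Peak brightness needed to reach a target average at a given duty cycle."""
    if not 0.0 < duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be in (0, 1]")
    return target_average_nits / duty_cycle


if __name__ == "__main__":
    # 10,000 nits lit 10% of the time averages out to about 1,000 nits.
    print(average_luminance(10_000, 0.10))
    # Keeping a 1,000-nit average at a 25% duty cycle needs a 4,000-nit peak.
    print(required_peak_nits(1_000, 0.25))
```

Halving the duty cycle halves persistence, but it also halves the average brightness. That is why BFI and strobing usually need extra peak brightness to look equally bright.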


This.

Plasma's motion is the next best thing to CRT. I've still got a calibrated Panasonic as my main display.

SONY GDM-FW900 BEST CRT EVER MADE PVM BVM RETRO GAMING | eBay

Urge to spend rising

This is a hipster thing, it has to be. I grew up around CRT TVs, the resolution is shit, there's color bleeding from the analog signal and they are big boys for a tiny screen.

There's a very good reason why everyone switched to LCD.

The resolution is shit? The current PC standard, so to speak, is 1440p... There are Sony Trinitron monitors that support 2304 x 1440, which is basically the 16:10 version of 1440p, and at 80Hz.
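Here is a quick check of the aspect-ratio claim as a small Python sketch. Note that a CRT has no fixed pixel grid, so "2304 x 1440" is the addressable signal resolution rather than a count of physical pixels. The "FW900-class" label is only there for illustration.

```python
from fractions import Fraction


def aspect_ratio(width: int, height: int) -> Fraction:
    """Reduce width:height to lowest terms, e.g. 1920x1080 -> 16/9."""
    return Fraction(width, height)


modes = {
    "2304 x 1440 (FW900-class CRT)": (2304, 1440),
    "2560 x 1440 (16:9 1440p)": (2560, 1440),
}

for name, (w, h) in modes.items():
    ratio = aspect_ratio(w, h)
    print(f"{name}: {ratio.numerator}:{ratio.denominator}, {w * h:,} addressable pixels")
```

The 2304 x 1440 mode reduces to 8:5, which is the same ratio as 16:10. It addresses 90% as many pixels as 2560 x 1440, because both modes have 1440 lines and 2304 is 90% of 2560.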

I miss my Panasonic 1080i HD CRT. That picture quality was nuts, and it feels like I've never been able to reach it again.

Wish I still had my old HD Sony CRT...

The great majority of them during the golden age of CRTs were standard definition. We are at 4K and dabbling with 8K, I don't understand why you'd want to go back to a deformed, small, and very heavy display.

For some games it is simply superior. Also, I'm playing Apex Legends on PS4 because the game doesn't have cross-progression yet, and there I have to play at about 720p with a shitty framerate and an image completely ruined by a terrible temporal AA implementation.

So there are plenty of worse things, even in modern games, than a slightly warped image at the sides.

If CRTs were still standard, the game wouldn't need temporal AA in a desperate attempt to scale up the image. On a CRT it could simply run at 720p or 800p and still look good, because a CRT doesn't need to fit the image to a fixed pixel grid.
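The fixed-pixel-grid point can be made concrete. A flat panel can only show a render resolution cleanly if each rendered row maps onto a whole number of panel rows. Anything else has to be resampled, which blurs or aliases the image. The rough Python sketch below compares vertical line counts only. The resolutions are examples, and real scalers and upscalers, including TAA-based ones, are far more involved.

```python
from fractions import Fraction


def vertical_scale(render_lines: int, panel_lines: int) -> Fraction:
    """How many panel rows each rendered row must cover."""
    return Fraction(panel_lines, render_lines)


def needs_resampling(render_lines: int, panel_lines: int) -> bool:
    """True unless each rendered row maps onto a whole number of panel rows."""
    return vertical_scale(render_lines, panel_lines).denominator != 1


for render in (720, 800, 1080):
    scale = vertical_scale(render, 1080)
    print(f"{render} lines on a 1080-line panel: x{scale} "
          f"({'resample' if needs_resampling(render, 1080) else 'clean'})")
```

A CRT avoids this because the beam draws however many scanlines it is fed, within its horizontal scan-rate limits. Its phosphor pitch still limits how much detail is actually resolved.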

Also, good PC CRTs were usually in the 800p area, not SD.

You're saying Sony and Panasonic actually have a BFI implementation that really does reduce motion blur? I had a 2015 60-inch Vizio with black frame insertion to reduce motion blur, but honestly all it did was introduce flicker. I couldn't really tell whether it reduced motion blur; in fact, I don't think it did at all.

BFI implementation differs with each company / display. On monitors, yes, it does suck. On Sony and Panasonic televisions, it's a godsend. You also don't need extra brightness unless it's an HDR source.

You have a 240Hz LCD, and you're able to surf the web at 240fps? And when you scroll you can still read the text, huh? That's honestly why I said 240fps@240Hz might be good enough.

Response time. That makes 50/60Hz CRTs look perfectly clear.

240Hz LCDs are still not as clean, but they are a hell of a lot cleaner than 60Hz LCDs. I can read the text in this forum while smooth scrolling. The text retains its clarity and doesn't look like a blurry mess like on a 60Hz LCD, where I have to keep the screen still to read it. Even 120/144Hz is a bit too blurry for that.
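The scrolling observation lines up with the rule of thumb Blur Busters calls Blur Busters Law: on a sample-and-hold display, 1 ms of persistence produces about 1 pixel of motion blur per 1,000 pixels/second of eye-tracked motion. Below is a minimal sketch. It assumes framerate equals refresh rate, negligible GtG, and a hypothetical 1,000 px/s scroll speed.

```python
def sample_and_hold_persistence_ms(refresh_hz: float) -> float:
    """Each frame stays lit for a whole refresh cycle (framerate == Hz assumed)."""
    return 1000.0 / refresh_hz


def motion_blur_px(speed_px_per_s: float, persistence_ms: float) -> float:
    """Blur trail width: 1 ms of persistence ~ 1 px per 1000 px/s of motion."""
    return speed_px_per_s * persistence_ms / 1000.0


SCROLL_SPEED_PX_PER_S = 1000  # hypothetical smooth-scroll speed

for hz in (60, 120, 144, 240):
    blur = motion_blur_px(SCROLL_SPEED_PX_PER_S, sample_and_hold_persistence_ms(hz))
    print(f"{hz:>3} Hz sample-and-hold: ~{blur:.1f} px of blur")
```

At 240Hz the trail is about a quarter of the 60Hz one, which fits the report that text stays readable while scrolling. Slow GtG adds to this, so real LCDs do somewhat worse than the idealized numbers.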

It says 16ms flash per frame at any Hz, but how can you have a 16ms flash if the content you're viewing is 120fps or higher? At 120fps each frame is only shown for 8ms before the next frame appears. I understand that the reason we see motion blur on LCDs is that the backlight is always on: it doesn't strobe, it's 100% on all the time, and one way to reduce motion blur is to strobe the backlight once per frame. But you need to ELI5 (explain like I'm 5): how can a flash last 16ms when each frame is only shown for 8ms at 120fps? This is kind of heady stuff for a non-engineer like myself.

Chief Blur Buster Here, inventor of TestUFO!

While some of this is true, it needs further explanation.

Display Science

For those who haven't been studying the redesigned Area 51 Display Research Section of Blur Busters lately, I need to correct some myths.

OLEDs also have motion blur unless they are impulse-driven. Even 0ms GtG leaves lots of motion blur, because MPRT is still large.

VR headsets such as the Oculus Rift can strobe (impulse-drive like a CRT), as can the LG CX OLED's BFI setting. However, the original Oculus Rift has 2ms MPRT, and the LG CX OLED has about 4ms MPRT. Be careful not to confuse GtG and MPRT -- see Pixel Response FAQ: Two Pixel Response Benchmarks: GtG Versus MPRT. To kill motion blur, both GtG *and* MPRT must be low. OLED has fast GtG but high MPRT, unless strobed.

Faster GtG Can Reduce Blur -- But Only To a Point

However, GtG speed does shift the flicker fusion threshold of the stutter-to-blur continuum. At low framerates, objects "appear" to vibrate (like a slow guitar/harp string), and at high framerates they vibrate so fast they blur (like a fast guitar/harp string). Regular stutter (aka 30fps) and persistence motion blur (aka 120fps) are the same thing: the stutter-to-blur continuum is easily watched at www.testufo.com/vrr (a frame-rate-ramping animation during variable refresh rate).

Slow GtG fuzzies up the fade between refresh cycles, as seen in High Speed Videos of LCD Refresh Cycles (high-speed videos of LCD and OLED), which can lower the threshold of the stutter-to-blur continuum.

Some great animations to watch to help you understand the stutter-to-blur continuum:

Stutter & Persistence Motion Blur Are the Same Thing

It's simply a function of how slowly or quickly the regular stutter vibrates. Super-fast stutter vibrates so fast it blends into motion blur.

- TestUFO: Demo of Stutter-to-Blur Continuum - Most Important Educational Demo

- TestUFO: 5-UFO Framerates Animation: Pixel visibility time = amount of motion blur

- TestUFO: 60Hz version of TestUFO Variable Black Frames Animation: Pixel visibility time = amount of motion blur

- TestUFO: 144Hz version of TestUFO Variable Black Frames Animation: Pixel visibility time = amount of motion blur

- TestUFO: 240Hz version of TestUFO Variable Black Frames Animation: Pixel visibility time = amount of motion blur

- TestUFO: This Animation Will Have Motion Blur On OLEDs, Guaranteed (Unless BFI/impulse Enabled)

- TestUFO: Another Motion Blur Optical Illusion

- TestUFO: Emulation of variable refresh rate

Educational Takeaways of Above Animations

View all of the above on any fast-GtG display (LCD or OLED, such as a TN LCD, a modern "1ms-GtG" IPS LCD, or an OLED). Once GtG is an insignificant percentage of the refresh cycle, the following become easy to observe (assuming framerate = Hz):

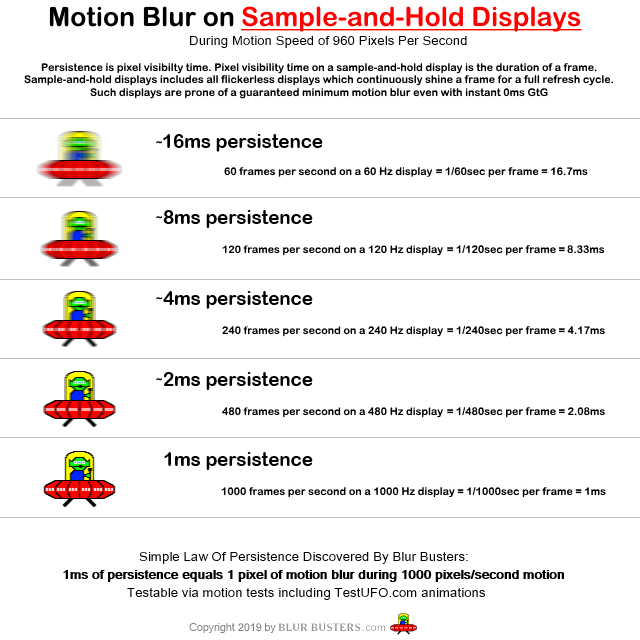

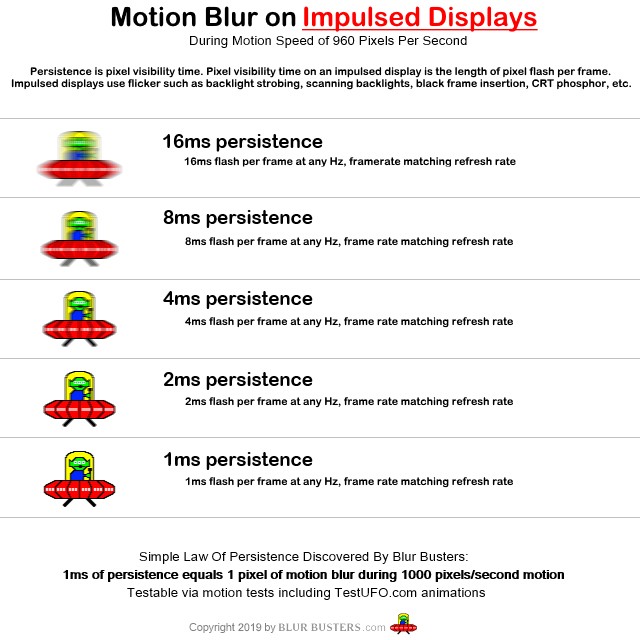

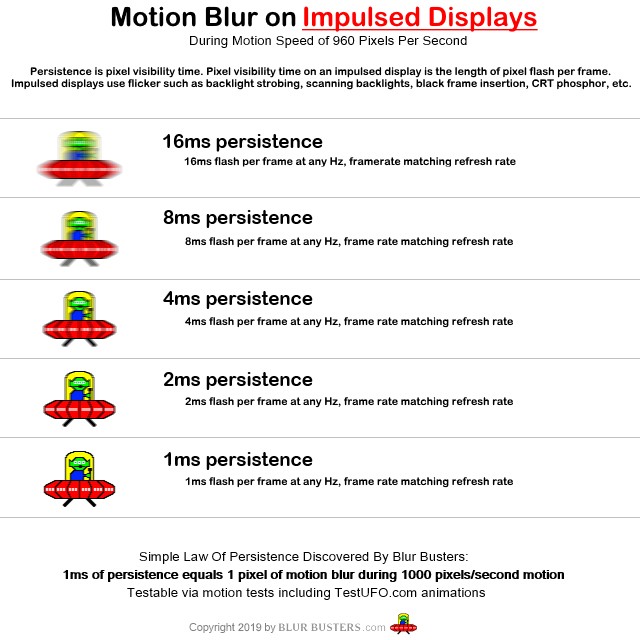

1. Motion blur is pixel visibility time

2. Pixel visibility time of a strobed display is the flash time

3. Pixel visibility time of a non-strobed display is the refresh cycle time

4. Stutter and persistence blur is the same thing; it's a function of the flicker fusion threshold where stutter blends to blur

5. OLED has motion blur just like LCDs

6. You need to flash briefer to reduce motion blur on an impulse-driven display.
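Takeaways 1 to 3 and 6 can be written as one small function. This Python sketch is a simplified model that assumes framerate = Hz and negligible GtG, and its names are hypothetical. It also shows why a flash can never be longer than the refresh cycle it belongs to. At 120Hz a cycle is only about 8.3ms, so a "16ms flash" would overlap the next frame's flash, and the display would effectively be sample-and-hold again.

```python
from typing import Optional


def pixel_visibility_ms(refresh_hz: float, flash_ms: Optional[float] = None) -> float:
    """Approximate persistence (MPRT) in milliseconds.

    flash_ms=None models a non-strobed, sample-and-hold display: each frame is
    visible for the whole refresh cycle. Otherwise the display is strobed and
    each frame is visible only for the flash, which must fit inside one cycle.
    """
    cycle_ms = 1000.0 / refresh_hz
    if flash_ms is None:
        return cycle_ms
    if not 0.0 < flash_ms <= cycle_ms:
        raise ValueError(
            f"a {flash_ms} ms flash does not fit in a {cycle_ms:.2f} ms cycle at {refresh_hz} Hz"
        )
    return flash_ms


print(pixel_visibility_ms(60))        # non-strobed 60Hz: ~16.7 ms
print(pixel_visibility_ms(120))       # non-strobed 120Hz: ~8.3 ms
print(pixel_visibility_ms(120, 1.0))  # strobed 120Hz with a 1 ms flash: 1.0 ms
```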

Watching all of these educational animations greatly improves one's understanding of displays.

Now, LCDs do not need to be 1000Hz to reduce motion blur -- you can simply use strobing. But strobing (CRT, LCD, OLED) is just a humankind band-aid, because real life doesn't flicker or strobe. We need 1000Hz displays, whether OLED or LCD.

Only Two Ways To Reduce Persistence (MPRT) Display Motion Blur

There are only two ways to shorten frame visibility time (aka motion blur):

1. Flash the frame briefer (1ms MPRT requires flashing each frame only for 1ms) -- like a CRT

2. Add more frames in more refresh cycles per second. (1ms MPRT requires 1000fps @ 1000Hz)
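Both routes to a given MPRT reduce to simple division. The Python sketch below uses the same idealized assumptions as before: square flashes, negligible GtG, and framerate = Hz. The helper names are hypothetical.

```python
def sample_and_hold_hz_for(target_mprt_ms: float) -> float:
    """Route 2: frames per second (= Hz) needed so each frame lasts target_mprt_ms."""
    return 1000.0 / target_mprt_ms


def strobe_duty_cycle_for(target_mprt_ms: float, refresh_hz: float) -> float:
    """Route 1: fraction of each refresh cycle the flash may occupy."""
    cycle_ms = 1000.0 / refresh_hz
    if target_mprt_ms > cycle_ms:
        raise ValueError("target MPRT is longer than one refresh cycle")
    return target_mprt_ms / cycle_ms


print(sample_and_hold_hz_for(1.0))      # 1000 fps @ 1000 Hz
print(strobe_duty_cycle_for(1.0, 120))  # about 0.12: flash 12% of each 120 Hz cycle
```

The second number is also the brightness cost of route 1. At a 12% duty cycle, the average light output is roughly 12% of the peak, so strobing needs a much brighter light source. Route 2 keeps full brightness but needs the GPU to deliver 1000fps.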

And the OP forgot to post both images, which are equally important for context.

So everyone who might prefer a CRT monitor for gaming, for its lack of input lag or its better motion clarity, is a hipster?

Their resolution is also insultingly low. It's a hipster thing: just because the tech has one or two aspects that are still very good by today's standards doesn't mean it beats what is available on the market now in the most important factors of a TV. I would never want to code on a curved CRT TV ever again; give me high resolution, bright colors and ample screen space.

Yes. Everything I do on my desktop runs at 240fps, except playing most games, obviously. I use smooth scrolling in the browser so I don't have to stop scrolling while I'm reading the text. This is how I've used the internet since the late '90s. Standard 60Hz LCDs were a pain for me to use because I had to rewire my brain and stop the screen from moving in order to read. Mouse movement is also much more responsive and doesn't feel like I'm dragging the pointer.

svbarnard said: You have 240hz LCD, and you're able to surf the web in 240fps?


When you say "lines of motion resolution", you're referring to frames per second, right? Remember, a lot of people here are casual.

Addendum on my part, you were right:

-> http://www.panasonic.com/business/plasma/pdf/TH152UX1SpecSheet.pdf

4K, 152", 3700 W

That's surprising for me to read.

Any idea of how many lines of motion resolution that translates to? Above 600?

Why did plasma die out? They had great picture.

Mainly it comes down to:

+ Plasma TVs were more expensive to manufacture than LCD TVs (and heavier)

+ Plasma was marketed poorly and couldn't keep up with competition from LCD

Two words: "motion blur".

I think the key thing that started killing it was the PR that they were heavy (which they were). But even as they were fizzling out, they still had the best picture quality. Dark levels and motion speed were in no way better on LED/LCD.

But plasmas still tanked.

I never understood the heavy thing. It's not like anyone moves a TV every day. Once you plunk it somewhere, it stays. And lots of people even hung plasmas on the wall, so it's not like they were so heavy they wouldn't hang. Anyone mounting a big LCD/LED needs two people anyway.

I think another issue was that plasma technology was tapering out while LCDs and the rest kept improving, OLED, etc. You never really saw plasma makers say giant innovations were coming.

Personally, I'm mostly interested in high-res PC monitors, not standard-def TVs.

Yup.

But even then, there is a large community of retro gamers who prefer playing retro games on such TVs. Every game from the N64 era or older was made with these TVs in mind, and they look much better (and as intended) on them.

I didn't know you were on here. That's great! Your work is legendary, and it's been very educational. Thanks!

Does anyone knowledgeable on the subject know if it is technically possible to make a CRT that doesn't require so much depth?

I wonder, given unlimited funds for R & D, and zero care for what consumers bought, what display technology could produce the best display? Could they make a flat 4K CRT? Would HDR be possible with CRT or plasma?

Importantly, I think, they were more expensive for the consumer.

Thank you for the compliment!

It's an ancient benchmark from the NTSC TV / plasma TV days that doesn't convert well to different refresh rates and different-resolution screens.

I am sorry to be the bearer of bad news, but SED and FED prototypes had more artifacts than plasma. Some were caused by certain similarities to plasma, and others by the need to refresh multiple parts of the screen concurrently to keep the image bright, etc. Some prototypes had a segmented multiscanning artifact problem (e.g. zigzag artifacts, a complex cousin of interlacing artifacts).

SED and FED

Sadly, it died before it could get to market. It would have been interesting to see how much more advanced it would be had it survived to today.


Listen, Mr. Rejhon, you know what you really need to do? Make an accompanying companion video to your 2017 article "The Amazing Journey to Future 1,000Hz Displays." Make an in-depth video where you go over every point and explain it like you're talking to five-year-olds, so people have the option of either reading the article or watching the video (with a link to the video at the very beginning of the article). Seriously, please do this!

You're welcome! Your contributions to the space were badly needed. Question: any new Blur Busters Approved monitors coming up on the horizon?


Four in 2021 (assuming none of them cancels/delays them to 2022).

This is not true.

The 1000 fps part seems silly to suggest when what you want is the response time of 1000 Hz.

If you had that, it probably wouldn't matter if frames are being doubled/interpolated or not.

I'm glad to see more manufacturers recognize the value of the Blur Busters Approved validation process. Does "strobe any custom Hz" mean that we can finally have strobing with VRR?

Two manufacturers have committed (signed agreements) to 4 models coming out in 2021, and a third manufacturer has already unofficially committed.

Be warned: monitor engineering cycles typically take about 12 months.

The first one is a 24 inch 240Hz IPS monitor that supports 60Hz single strobe, strobe-any-custom-Hz (60Hz thru 240Hz in one analog continuum), and supports optional Strobe Utility operation (no, it's not a BenQ ZOWIE monitor).

Good move.

I use a 1080p plasma for PC gaming as much as I can; there's still less motion blur than on either a 200Hz or a 240Hz monitor.


Which is more important for motion clarity: Hz or response time?

Well, it's the most important thing to me, as bad motion clarity makes some games unplayable. It is as simple as that.

that it's only an aspect of image quality

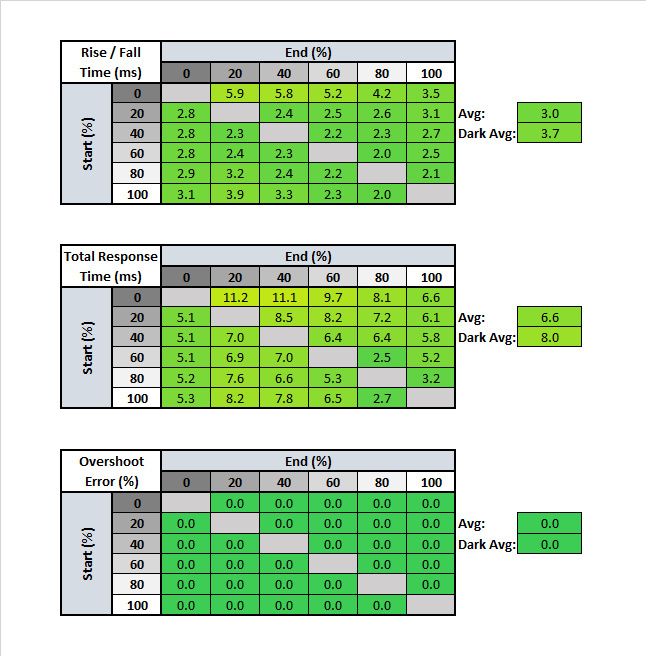

Yes, it is always ideal for an LCD to have GtG faster than a refresh cycle.

Here are the response times of the Dell Alienware AW2521H (taken from https://www.rtings.com/monitor/reviews/dell/alienware-aw2521h).

A 120Hz CRT has a constant 8.3ms response time (with a ~2ms decay).

The AW2521H is faster in most cases, but its transitions are uneven.

For a 360Hz monitor, every transition should finish in under roughly 2.7ms (one 360Hz refresh cycle is 1000/360 ≈ 2.78ms) to avoid ghosting and blur.
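The rule of thumb in the post, that every transition should finish within one refresh cycle, is easy to check mechanically. Here is a Python sketch. The GtG values below are made-up placeholders for illustration, not the rtings.com measurements for the AW2521H.

```python
def refresh_period_ms(refresh_hz: float) -> float:
    return 1000.0 / refresh_hz


def slow_transitions(gtg_ms: dict, refresh_hz: float) -> dict:
    """Return the transitions whose GtG time exceeds one refresh cycle."""
    budget = refresh_period_ms(refresh_hz)
    return {pair: t for pair, t in gtg_ms.items() if t > budget}


# Hypothetical GtG times in ms, keyed by (from_level, to_level).
example_gtg = {
    (0, 128): 2.1,
    (128, 255): 3.4,
    (255, 128): 2.6,
    (128, 0): 4.9,
}

for hz in (120, 240, 360):
    print(f"{hz} Hz ({refresh_period_ms(hz):.2f} ms): too slow -> {slow_transitions(example_gtg, hz)}")
```

With these placeholder numbers, every transition fits a 120Hz cycle, one misses at 240Hz, and two miss at 360Hz. This illustrates why raising the refresh rate without lowering response times runs out of headroom.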

What monitor manufacturers should target is not to raise the refresh rate but lower the response times.


I had that TV up until a year ago and it looked great, but it was HUGE. Must have weighed well over 100 pounds.

I don't think they ever sold one.

But here's a news article about it:

-> https://newatlas.com/panasonic-hd-3d/13842/

What they sold were 105-inch 1080p PDP panels, I believe.

Well... the thing is, there was nothing stopping CRTs from doing what LCD was doing with regard to resolution. People already pointed out the Sony FW900 PC monitor, but there were also HD CRT TVs.

Here's a thread from the time about a Philips HD CRT and how crazy people thought it was:

-> https://adrenaline.com.br/forum/threads/xbox360-na-crt-philips-32-wide-hd.133146/

This was a really thin CRT for what it was.

That's actually a good thing. Eventually, at maybe 960Hz, the mouse trail would become a complete blur to us, and voilà! Actual, real motion blur has been achieved. That kind of motion blur is good; motion blur from extended image persistence on sample-and-hold displays (LCD, OLED, etc.) is baaaad. CRTs get around that, of course, because they're impulse-driven displays.

What's even worse is that the mouse trail effect gets fainter as the refresh rate goes up, but gets a lot more distracting since you can still see it, and more of it.

Correct.

Actually the mouse cursor is less distracting + easier to see at higher Hz.
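The mouse-trail discussion is about the stroboscopic (phantom array) effect. A cursor moving at constant speed is drawn once per refresh, so the gap between successive cursor images is the speed divided by the refresh rate. The Python sketch below uses a hypothetical 4,000 px/s flick and a hypothetical 12 px cursor width. As a rough geometric criterion, once the gap drops below the cursor width, successive images overlap into a continuous smear instead of separate copies.

```python
CURSOR_WIDTH_PX = 12       # hypothetical cursor width
CURSOR_SPEED_PX_S = 4000   # hypothetical fast mouse flick


def cursor_gap_px(speed_px_per_s: float, refresh_hz: float) -> float:
    """Distance between consecutive cursor images, one per refresh cycle."""
    return speed_px_per_s / refresh_hz


for hz in (60, 240, 960):
    gap = cursor_gap_px(CURSOR_SPEED_PX_S, hz)
    looks = "overlapping smear" if gap < CURSOR_WIDTH_PX else "separate copies"
    print(f"{hz:>3} Hz: {gap:5.1f} px gap -> {looks}")
```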

First, you'd need to rebuild every component from the ground up, and the cost would be very high. But that is not the main problem.

Are CRTs impossible to make anymore? Seems like there would be a market for a nice premium one for gamers.

And they could also burn in, as many Destiny 1 players found out.