SlimySnake

Flashless at the Golden Globes

because it has far more CUs. If it was full RDNA 2 efficiency, why is it not using its party trick? Why is it not tuned higher like the leaked full RDNA2 cards for PC?

think of it like this. PS5 only has 22% faster clocks at 36 CUs, but xsx has about 44% more CUs. a bigger chip will always be clocked lower in consoles where you have to watch the TDP. desktop gpus dont give a fuck about power draw. the 3080 has 135 CUs worth of shader processors and comes in around 320w on its own. nvidia can afford to not give two fucks about the tdp because PC gamers dont.
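quick sanity check on those percentages, as a rough sketch. the 36 and 52 CU counts and the 1.825 ghz clock are the figures used in this thread; the 2.23 ghz PS5 peak clock is the publicly announced spec and is an assumption here since it isn't quoted above. the helper name `percent_more` is made up for illustration.

```python
# Rough check of the clock and CU gaps between the two consoles.
# 36 CUs, 52 CUs and 1.825 GHz come from the thread; 2.23 GHz is the
# announced PS5 peak clock (assumed here, not stated in the post).
PS5_CUS, PS5_CLOCK_GHZ = 36, 2.23
XSX_CUS, XSX_CLOCK_GHZ = 52, 1.825


def percent_more(a: float, b: float) -> float:
    """Return how much larger a is than b, in percent."""
    return (a / b - 1.0) * 100.0


clock_gap = percent_more(PS5_CLOCK_GHZ, XSX_CLOCK_GHZ)
cu_gap = percent_more(XSX_CUS, PS5_CUS)

print(f"PS5 clock advantage: {clock_gap:.1f}%")
print(f"XSX CU advantage:    {cu_gap:.1f}%")
```

so the clock gap comes out around 22% and the CU gap around 44%, i.e. the wider chip gives up less in clocks than it gains in width.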

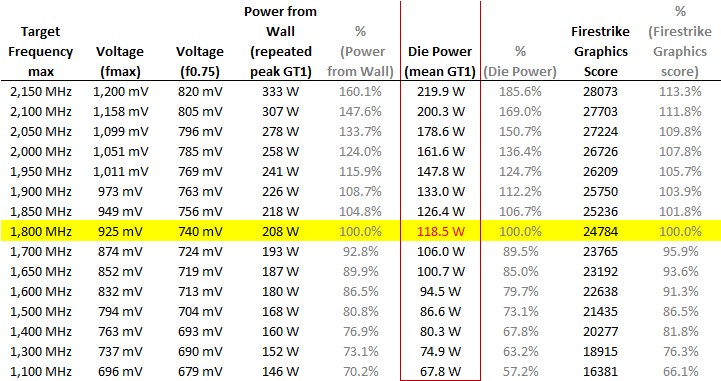

i can promise you, you are not getting 1.825 ghz on 52 CUs in a console with RDNA 1.0 efficiency. it's flat out impossible. the 5700xt runs at 1.825 ghz ingame and pulls 118w on its own. a gpu with 30% more CUs at the same clocks would draw roughly 30% more power. thats over 150w for the gpu alone. xsx is pulling 165w for the entire console. you are not getting those thermals unless the xsx is using rdna 2.0's 50% perf/watt efficiency gains.
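here's the back-of-envelope version as a small sketch, taking the post's own inputs at face value. assumptions, loudly: power scales linearly with CU count at fixed clocks (a simplification), the 5700xt has 40 CUs (public spec, which is where the 30% comes from), and the 50% perf/watt gain is read as "same work for 1/1.5 of the power". the function name `scale_power_linear` is made up for illustration.

```python
# Back-of-envelope GPU power estimate using the figures from the post.
BASE_POWER_W = 118.0       # 5700 XT GPU-only draw quoted in the post
BASE_CUS = 40              # 5700 XT CU count (public spec, assumed)
TARGET_CUS = 52            # XSX CU count
CONSOLE_TOTAL_W = 165.0    # whole-console draw quoted in the post
PERF_PER_WATT_GAIN = 0.50  # claimed RDNA 2 perf/watt improvement


def scale_power_linear(base_w: float, base_cus: int, target_cus: int) -> float:
    """Scale power with CU count, assuming equal clocks and linear scaling."""
    return base_w * target_cus / base_cus


# Hypothetical 52 CU part at RDNA 1 efficiency.
rdna1_estimate_w = scale_power_linear(BASE_POWER_W, BASE_CUS, TARGET_CUS)

# What would be left for CPU, memory, SSD, fans and PSU losses.
headroom_w = CONSOLE_TOTAL_W - rdna1_estimate_w

# Same work with the claimed perf/watt gain applied (assumed interpretation).
rdna2_estimate_w = rdna1_estimate_w / (1.0 + PERF_PER_WATT_GAIN)

print(f"52 CUs at RDNA 1 efficiency: {rdna1_estimate_w:.1f} W")
print(f"left for the rest of the console: {headroom_w:.1f} W")
print(f"52 CUs with 50% better perf/watt: {rdna2_estimate_w:.1f} W")
```

with these inputs the RDNA 1 style estimate lands in the low 150s, leaving barely anything for the rest of the console out of 165w, while the perf/watt-adjusted figure drops to roughly 100w. the result is only as good as the 118w baseline, so treat it as a ballpark.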
